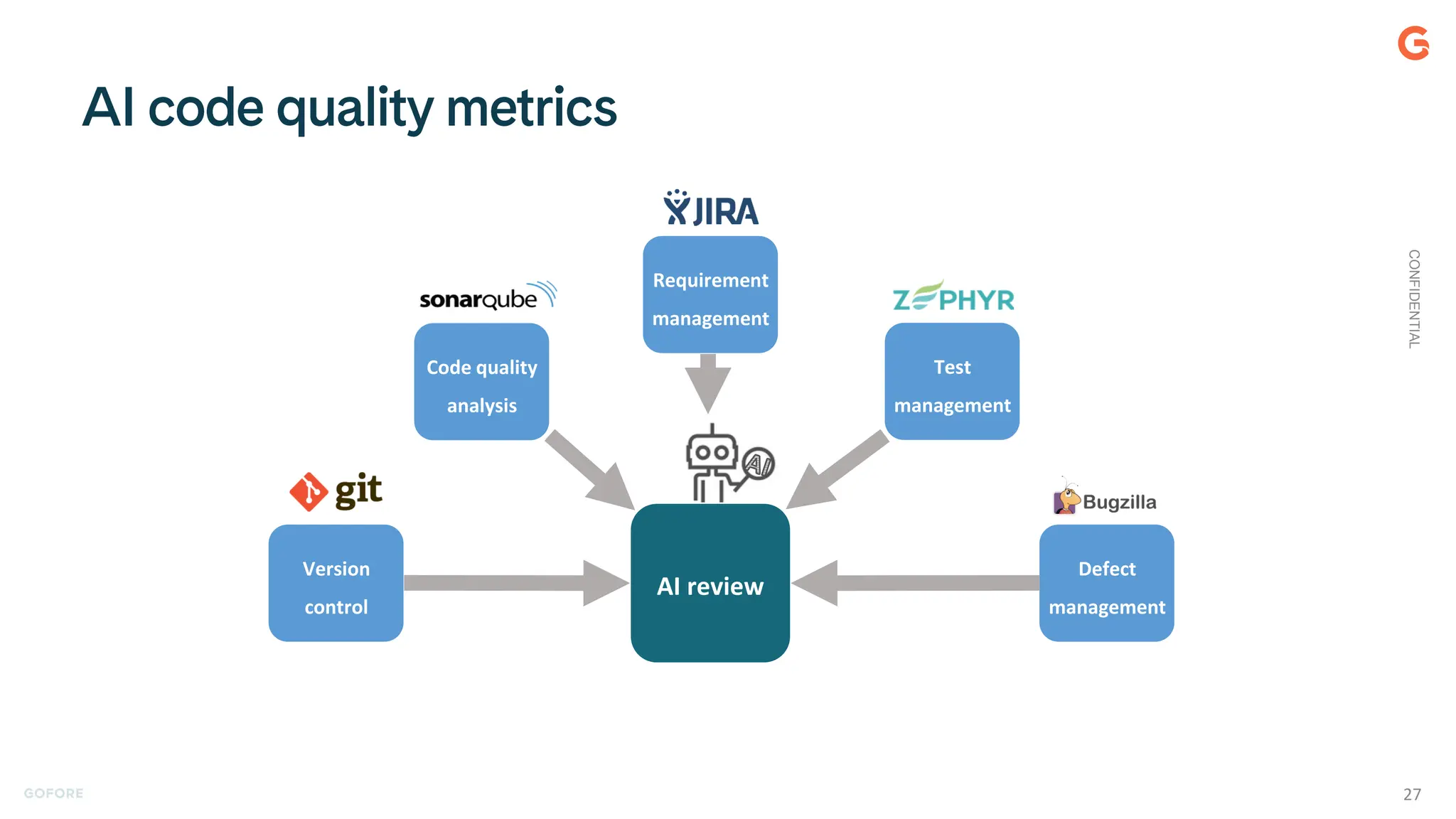

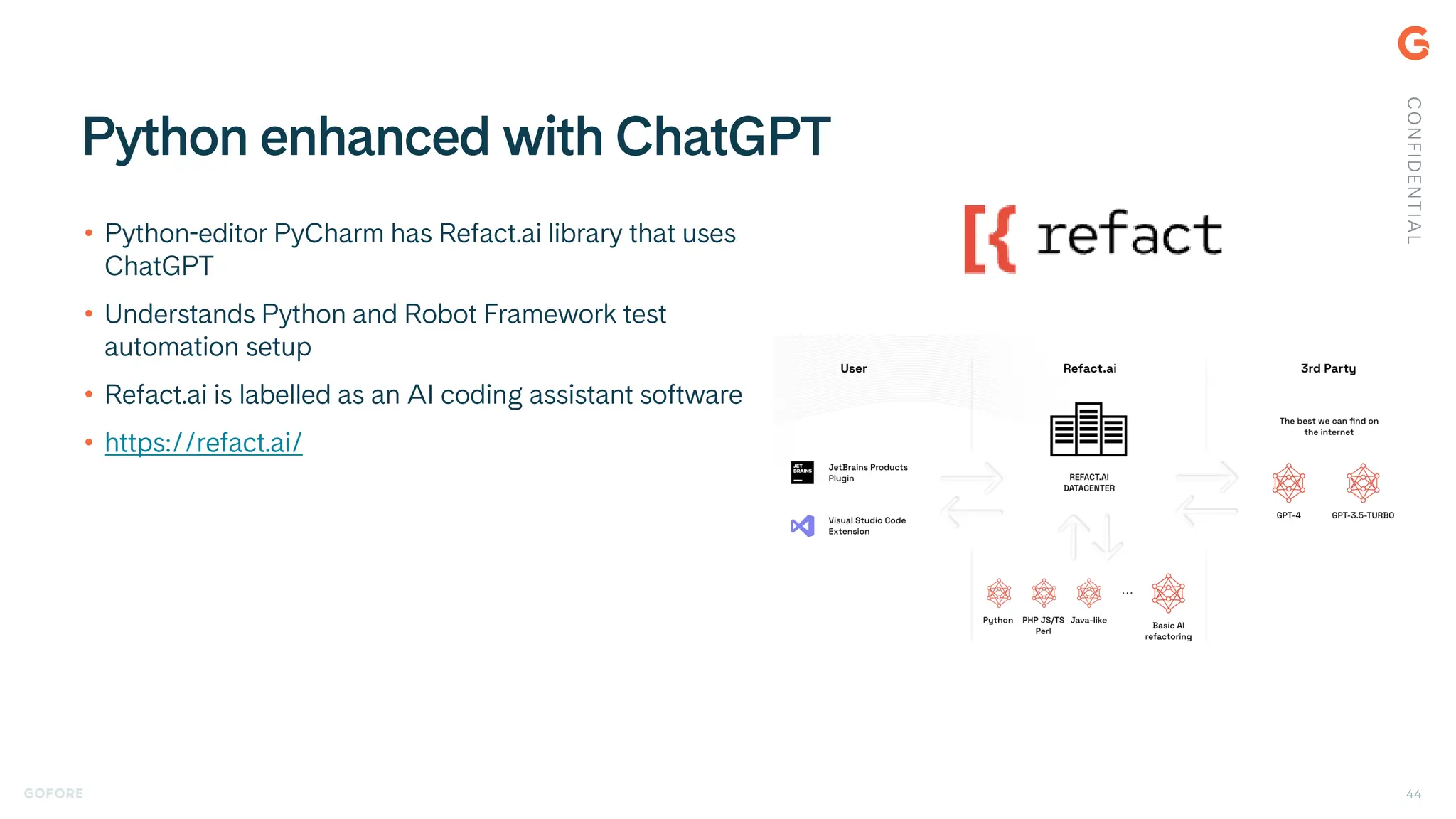

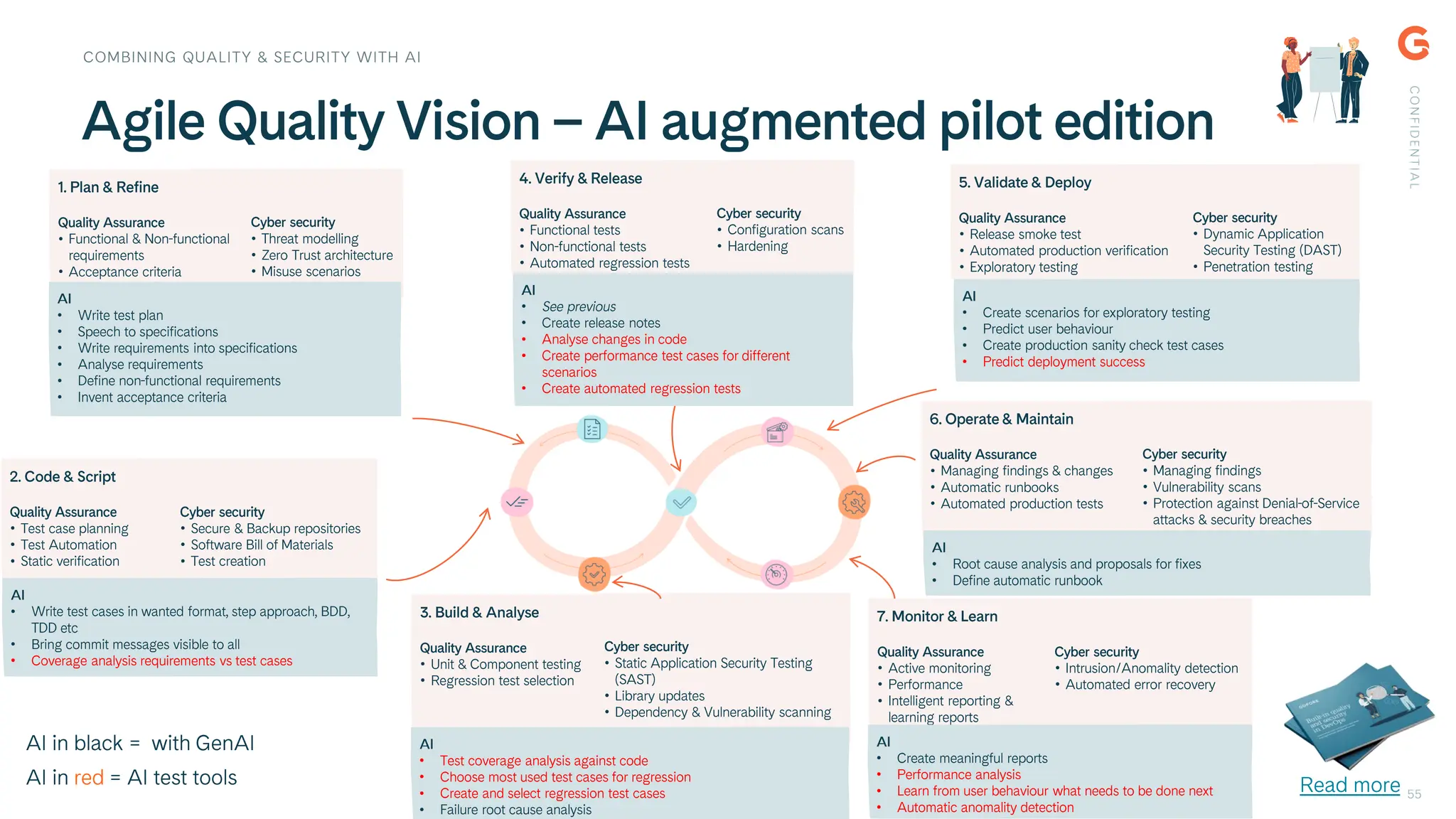

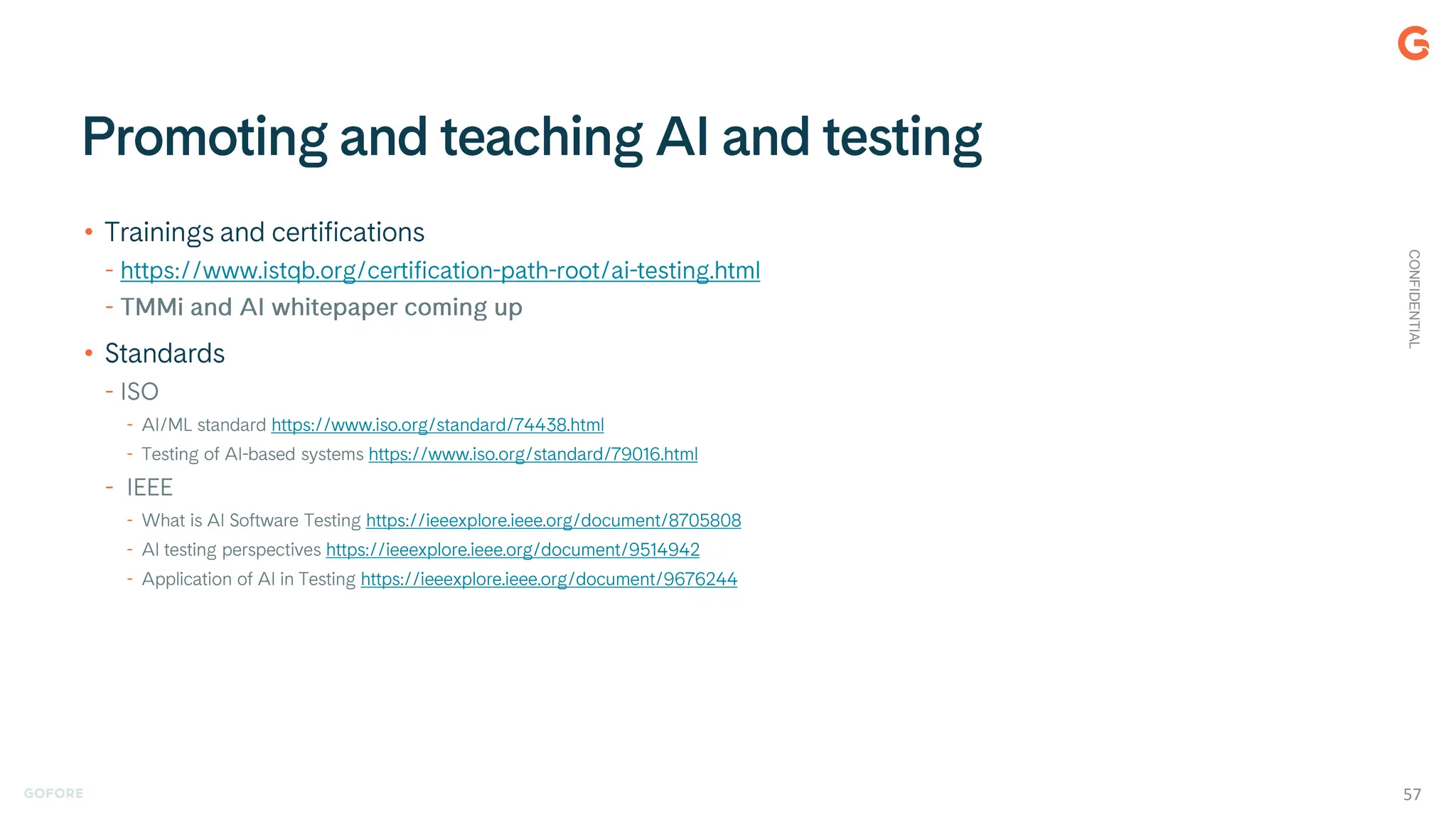

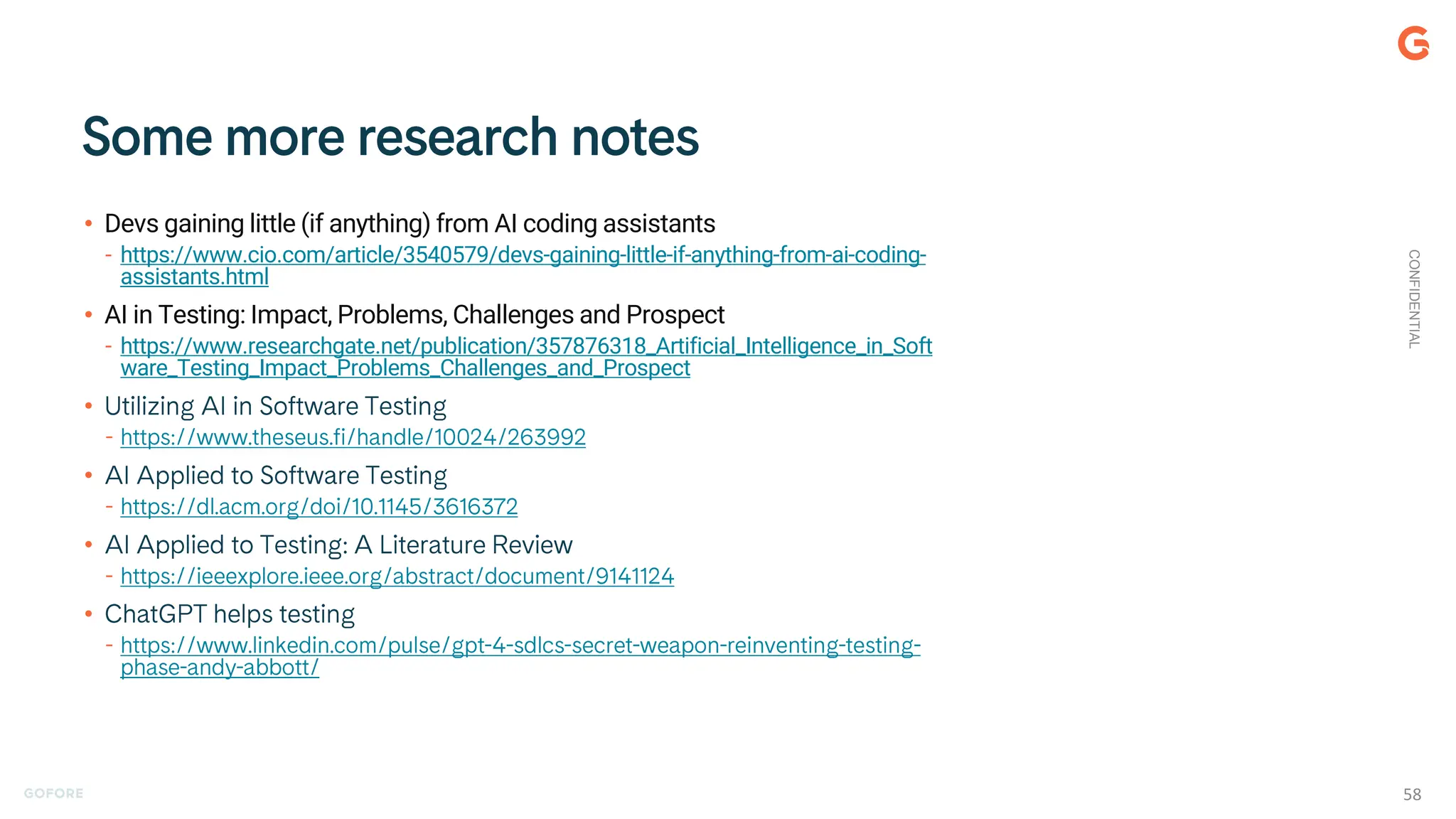

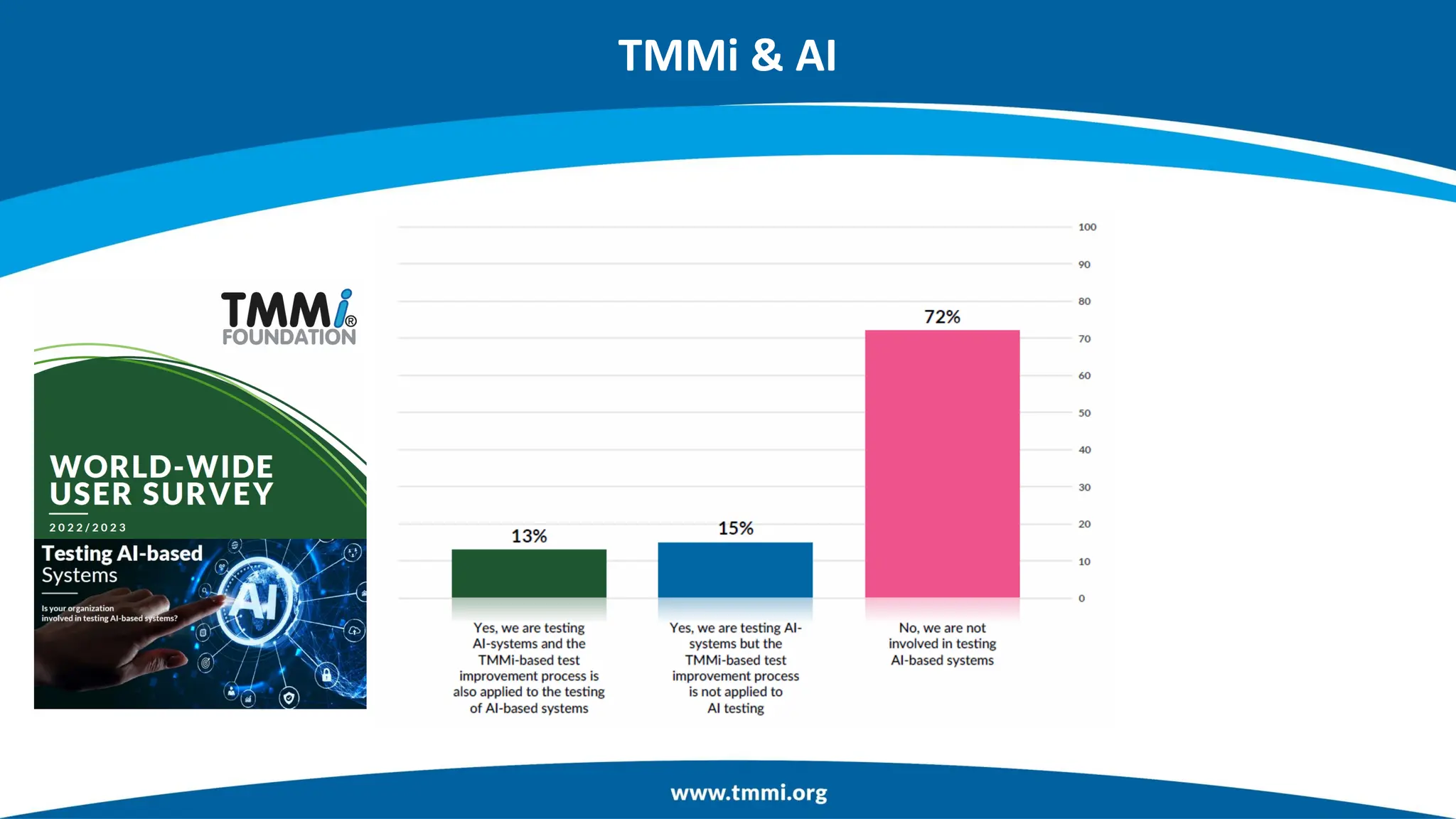

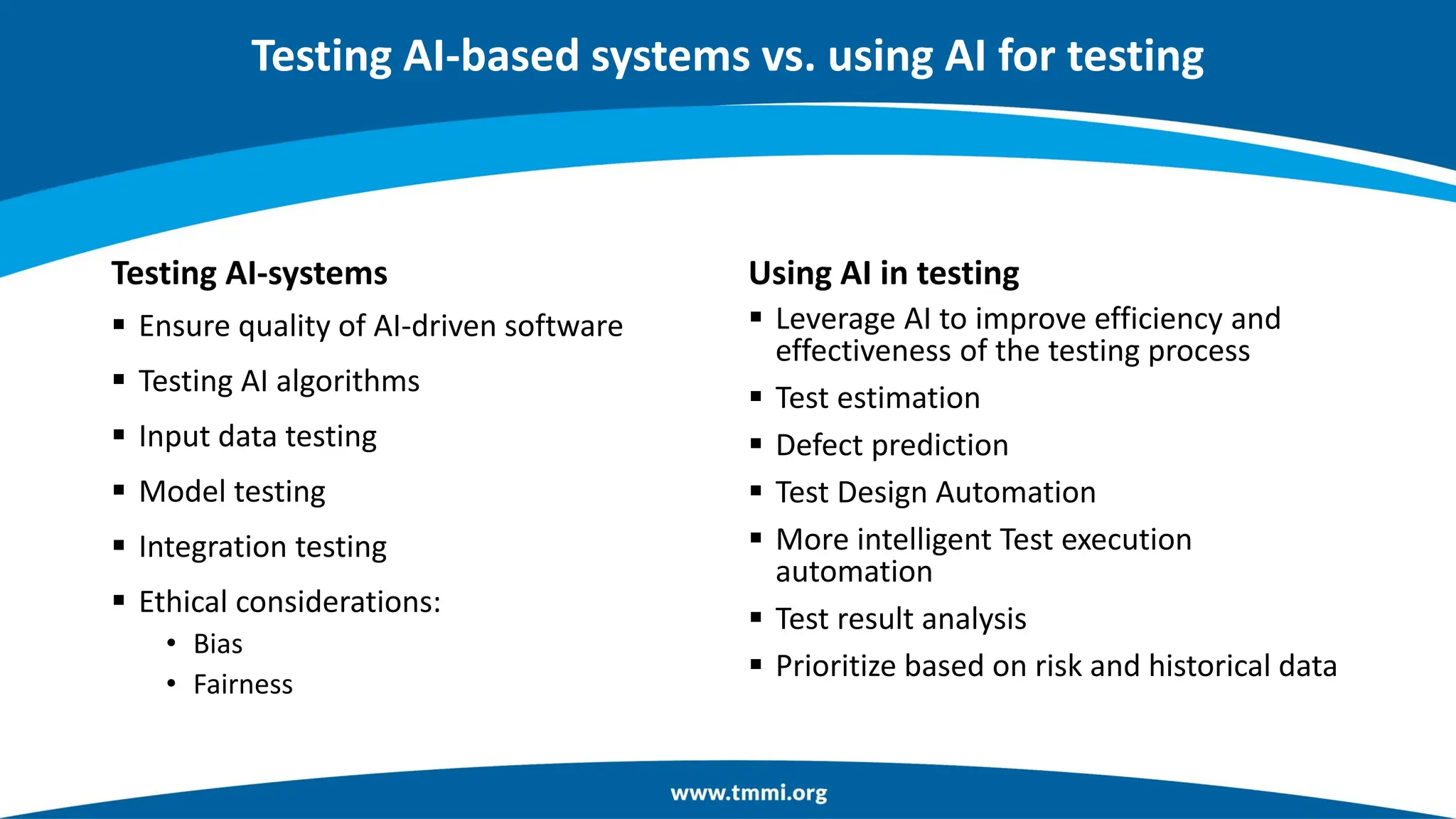

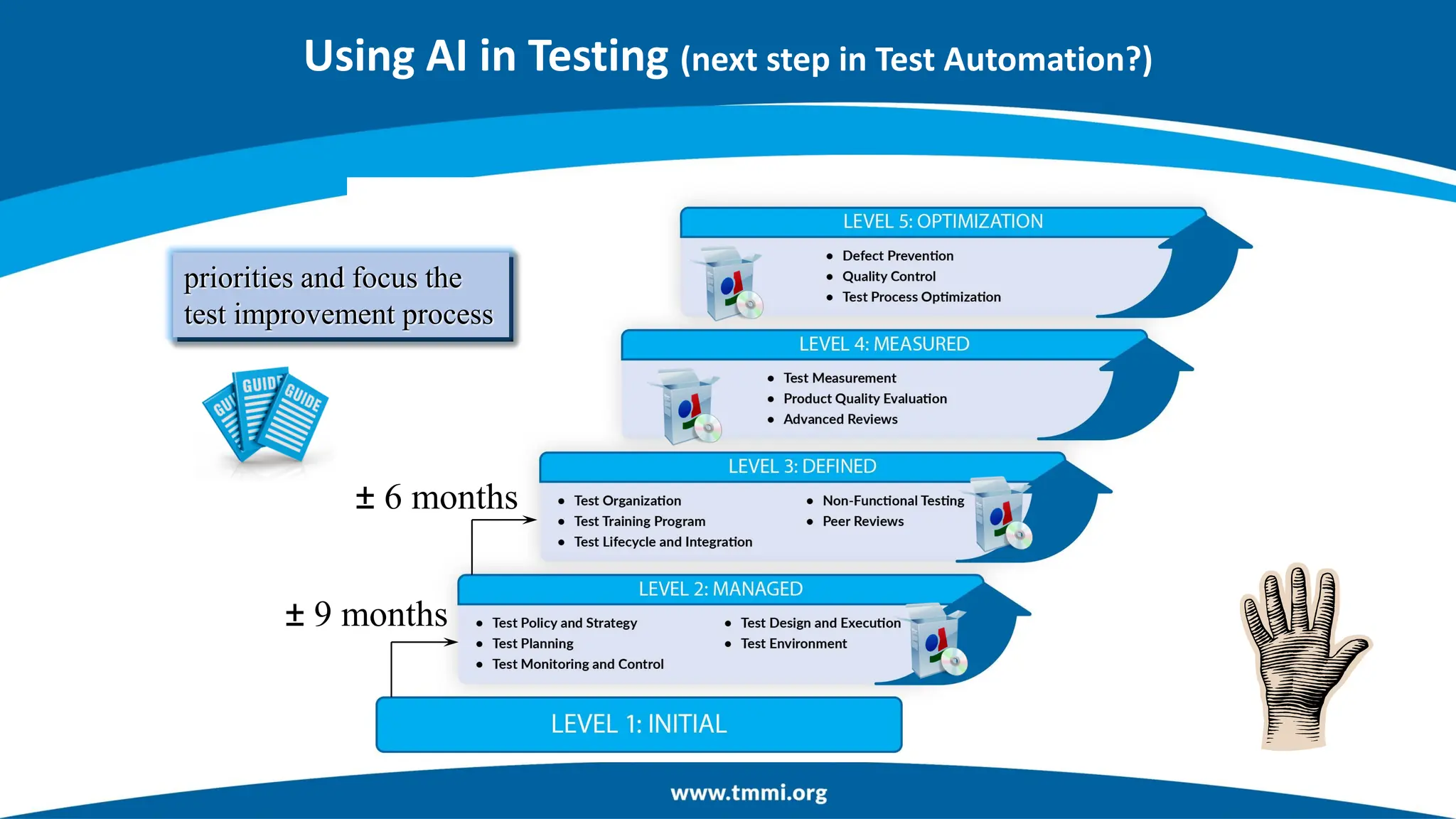

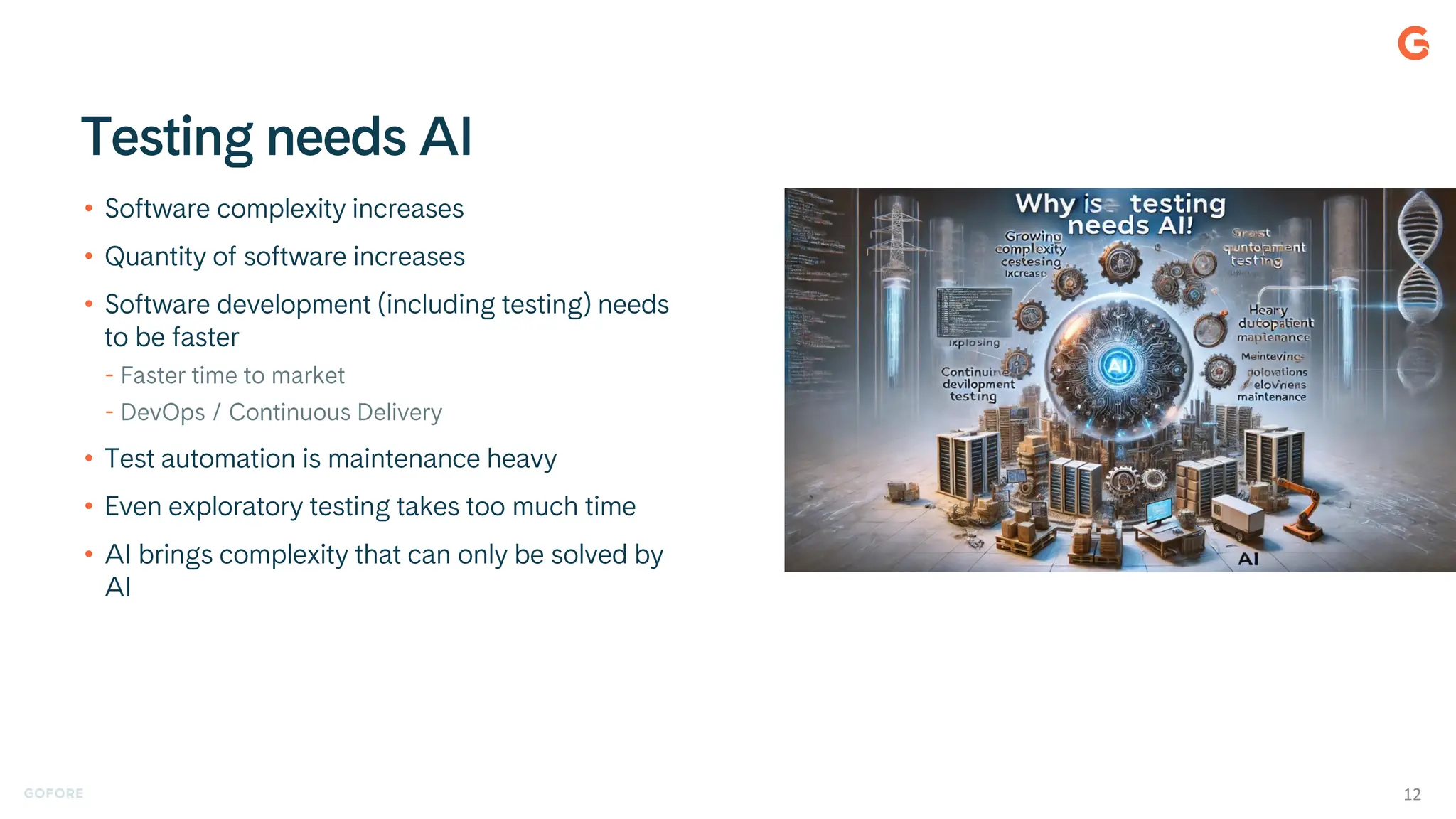

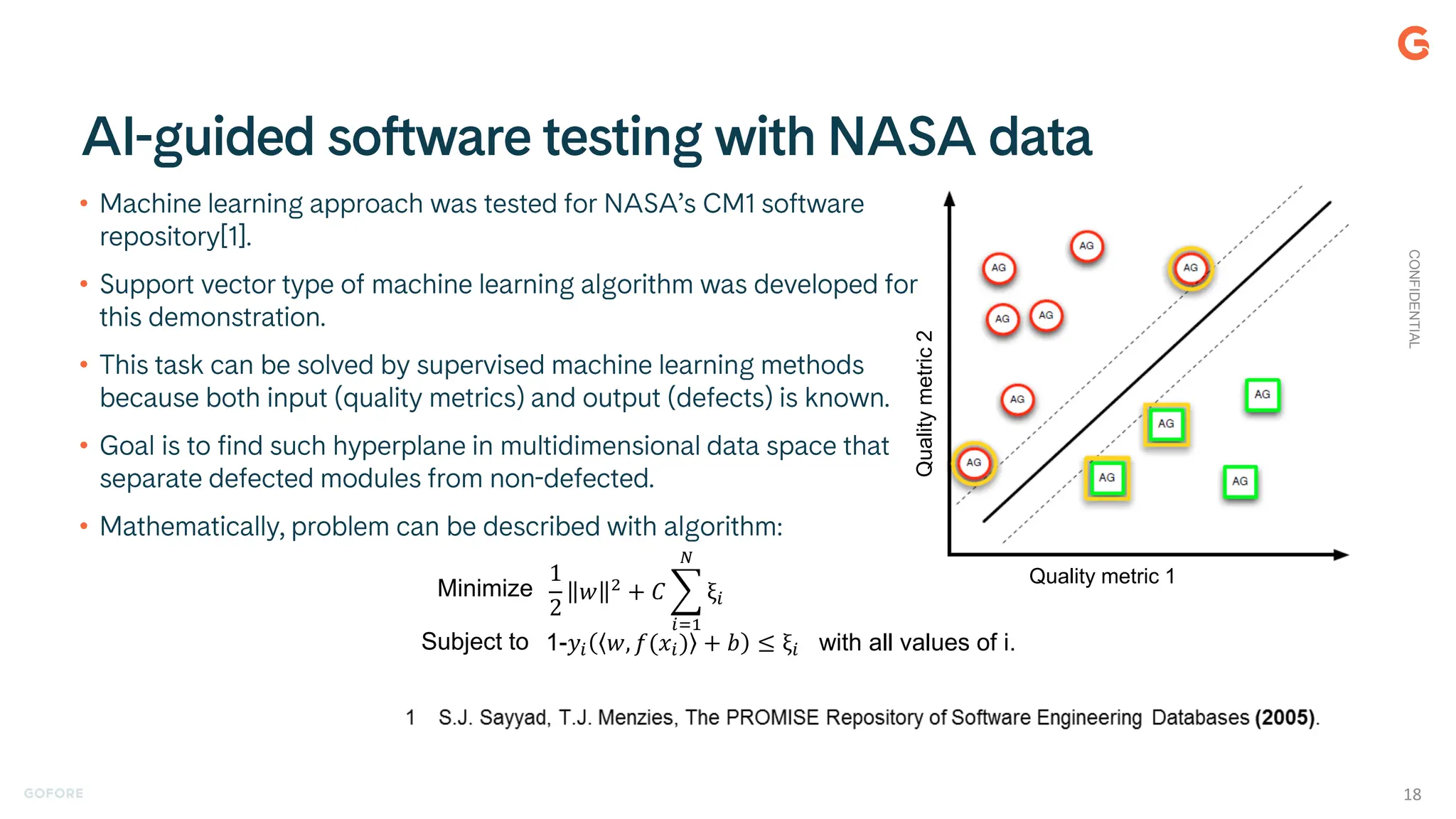

Online keynote by Kari Kakkonen at the Test Formation 2025 conference by TMMi America, on the topic "How AI supports software testing". I start with the case that sparked my initial enthusiasm for AI, the NASA data AI case. I then go through different scenarios in which a tester would be interested in using AI, with a few commercial and open-source examples for each scenario. Finally, I cover some research notes and TMMi and AI highlights, including how to apply TMMi when testing an AI system.

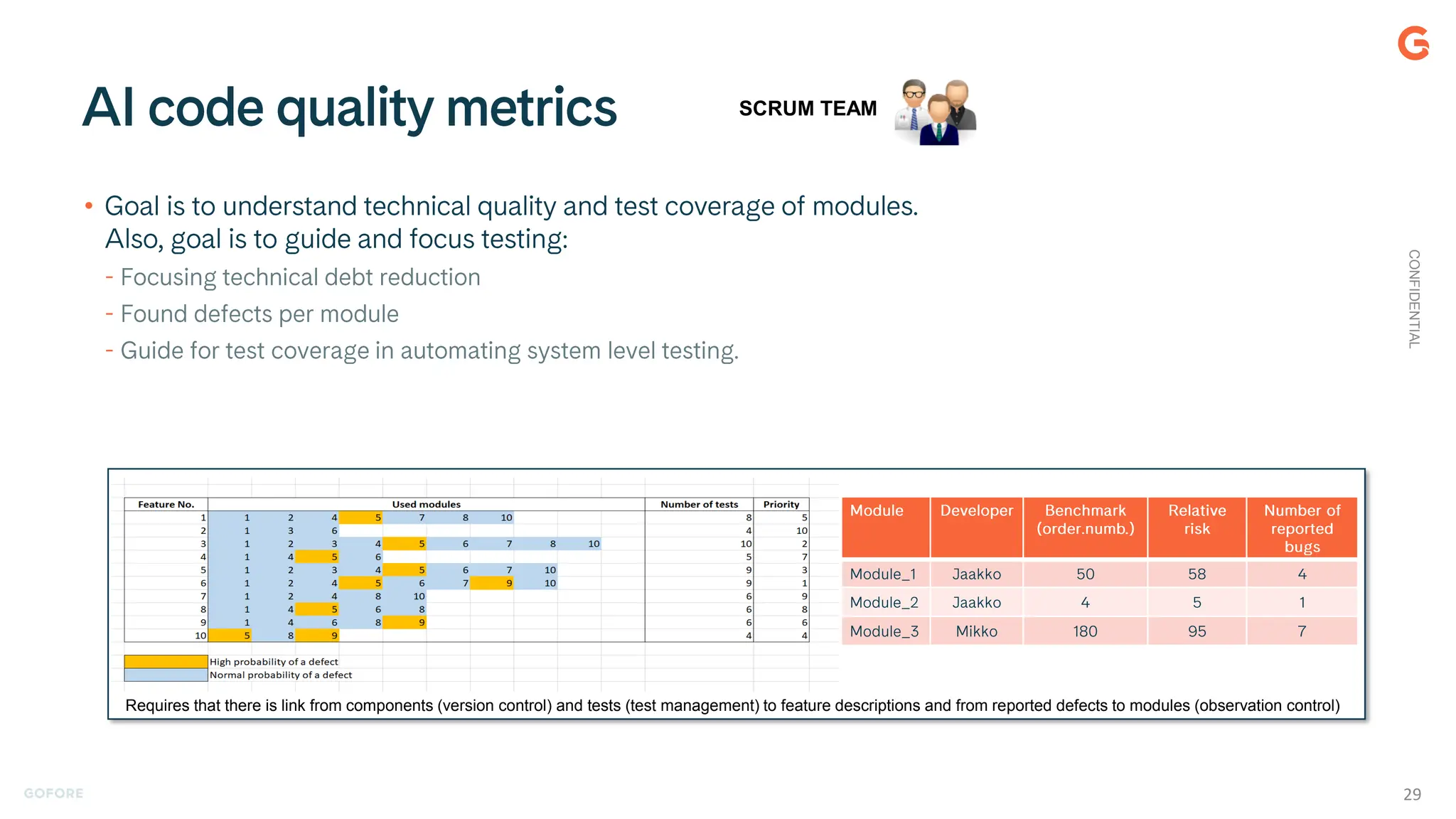

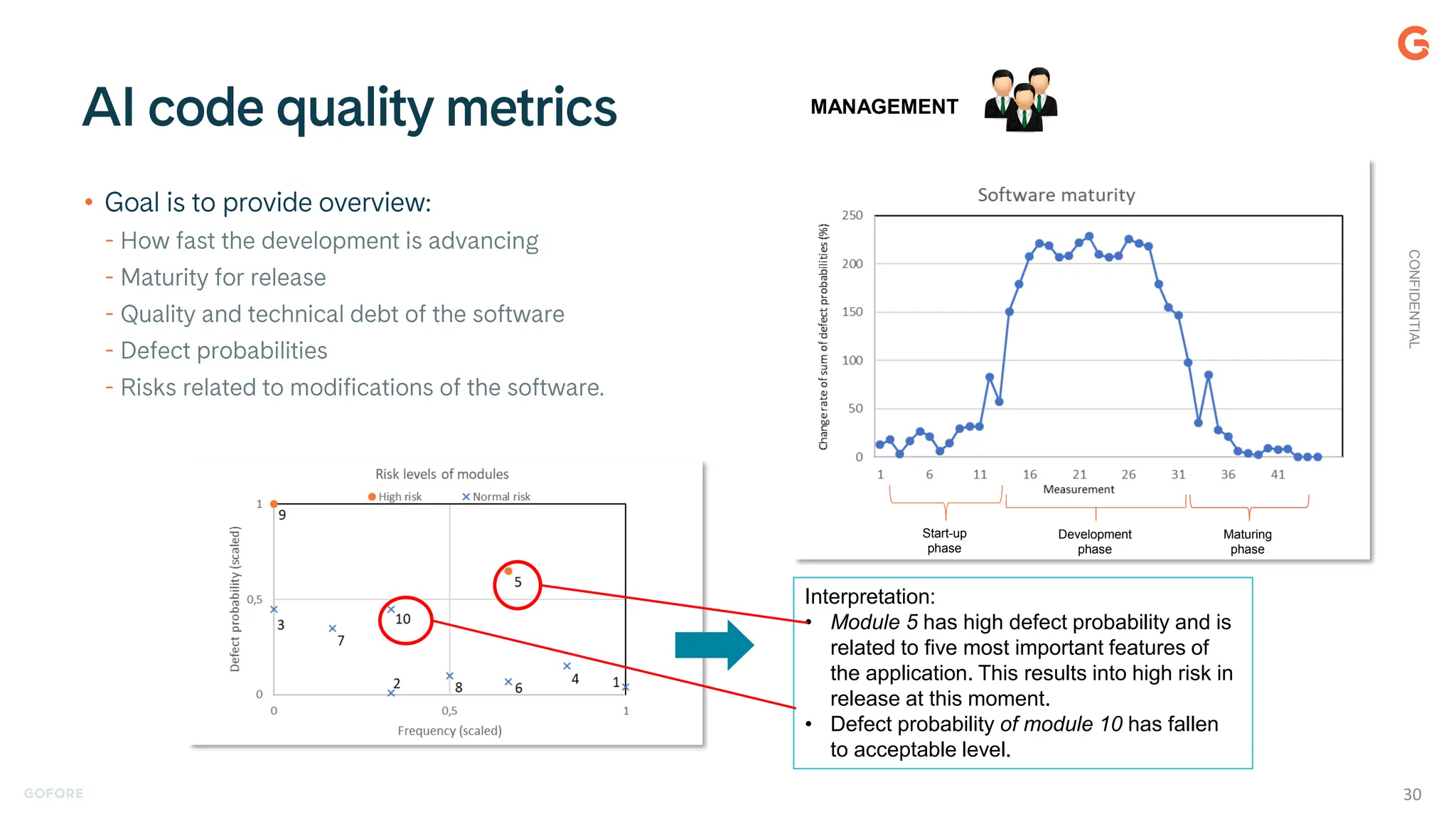

![Slide 17: code quality metrics and the scattering of defects in the NASA CM1 project](https://image.slidesharecdn.com/howaisupportstestingkarikakkonentestformation2025v3-250410115915-81fa1f15/75/How-AI-supports-testing-Kari-Kakkonen-TestFormation-2025-17-2048.jpg)

- Several code quality metrics are currently in use. The most popular ones were introduced in the 1970s.[1,2]
- The goal is to provide feedback:
  1. for developers about code quality,
  2. for testers about needed test coverage,
  3. for management about the development project.
- Although traditional metrics are used extensively, the benefit of using them has been questioned.[3]

Figure: Scattering of defects in NASA CM1 project. Horizontal axis: cyclomatic complexity (0 to 120). Vertical axis: number of lines (0 to 450). Series: OK, Defected. Source: NASA Metrics Data Program, CM1 Project.[4]

In real applications, defective modules do not separate from non-defective ones by standard metrics.

1. T.J. McCabe, A Complexity Measure, IEEE Transactions on Software Engineering (1976) SE-2, 4, 308.
2. M.H. Halstead, Elements of Software Science, Elsevier (1977).
3. N.E. Fenton, S.L. Pfleeger, Software Metrics: A Rigorous & Practical Approach, International Thomson Press (1997).
4. S.J. Sayyad, T.J. Menzies, The PROMISE Repository of Software Engineering Databases (2005).

![Slide 19: performance (F) tested on real data against the NASA CM1 project](https://image.slidesharecdn.com/howaisupportstestingkarikakkonentestformation2025v3-250410115915-81fa1f15/75/How-AI-supports-testing-Kari-Kakkonen-TestFormation-2025-19-2048.jpg)

Tested on real data against NASA CM1 project[1]

Figure: Performance (F), vertical axis from 0.0 to 0.8.

- Training set: 1595
- Test set: 400

$$F = \frac{2 \cdot \text{accuracy} \cdot \text{recall}}{\text{accuracy} + \text{recall}}$$
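The F score on slide 19 can be sketched in a few lines of Python. This is only an illustration of the formula as the slide writes it, with accuracy and recall as inputs. Note that the conventional F1 score uses precision where the slide uses accuracy. The function name `f_measure` and the input values in the usage lines are hypothetical and are not results from the talk.

```python
def f_measure(accuracy: float, recall: float) -> float:
    """Harmonic mean as written on the slide: 2 * accuracy * recall / (accuracy + recall).

    Assumption: both inputs are fractions in the range 0.0 to 1.0.
    """
    denominator = accuracy + recall
    if denominator == 0:
        # Both inputs are zero: define F as 0.0 instead of dividing by zero.
        return 0.0
    return 2 * accuracy * recall / denominator


# Hypothetical inputs, chosen only to show the arithmetic.
balanced = f_measure(0.5, 0.5)    # equal inputs give the same value back
unbalanced = f_measure(0.8, 0.4)  # the lower input pulls the score down
print(balanced, unbalanced)
```

Because F is a harmonic mean, a model cannot reach a high score by doing well on only one of the two inputs.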