This textbook introduces fundamental concepts of database systems and is intended for use as a textbook in database courses. The sixth edition has been reorganized and updated with new content. Key changes include moving SQL earlier in the book, consolidating object-relational and object-oriented database content, expanding XML coverage, adding a new chapter on information retrieval, and reorganizing the normalization theory chapters. The book is suitable for both introductory and advanced database courses and provides flexibility for instructors to customize course content.

![62 Chapter 3 The Relational Data Model and Relational Database Constraints

A relation schema2 R, denoted by R(A1, A2, ..., An), is made up of a relation name R

and a list of attributes, A1, A2, ..., An. Each attribute Ai is the name of a role played

by some domain D in the relation schema R. D is called the domain of Ai and is

denoted by dom(Ai). A relation schema is used to describe a relation; R is called the

name of this relation. The degree (or arity) of a relation is the number of attributes

n of its relation schema.

A relation of degree seven, which stores information about university students,

would contain seven attributes describing each student. as follows:

STUDENT(Name, Ssn, Home_phone, Address, Office_phone, Age, Gpa)

Using the data type of each attribute, the definition is sometimes written as:

STUDENT(Name: string, Ssn: string, Home_phone: string, Address: string,

Office_phone: string, Age: integer, Gpa: real)

For this relation schema, STUDENT is the name of the relation, which has seven

attributes. In the preceding definition, we showed assignment of generic types such

as string or integer to the attributes. More precisely, we can specify the following

previously defined domains for some of the attributes of the STUDENT relation:

dom(Name) = Names; dom(Ssn) = Social_security_numbers; dom(HomePhone) =

USA_phone_numbers3, dom(Office_phone) = USA_phone_numbers, and dom(Gpa) =

Grade_point_averages. It is also possible to refer to attributes of a relation schema by

their position within the relation; thus, the second attribute of the STUDENT rela-

tion is Ssn, whereas the fourth attribute is Address.

A relation (or relation state)4 r of the relation schema R(A1, A2, ..., An), also

denoted by r(R), is a set of n-tuples r = {t1, t2, ..., tm}. Each n-tuple t is an ordered list

of n values t =v1, v2, ..., vn, where each value vi, 1 ≤ i ≤ n, is an element of dom

(Ai) or is a special NULL value. (NULL values are discussed further below and in

Section 3.1.2.) The ith value in tuple t, which corresponds to the attribute Ai, is

referred to as t[Ai] or t.Ai (or t[i] if we use the positional notation). The terms

relation intension for the schema R and relation extension for a relation state r(R)

are also commonly used.

Figure 3.1 shows an example of a STUDENT relation, which corresponds to the

STUDENT schema just specified. Each tuple in the relation represents a particular

student entity (or object). We display the relation as a table, where each tuple is

shown as a row and each attribute corresponds to a column header indicating a role

or interpretation of the values in that column. NULL values represent attributes

whose values are unknown or do not exist for some individual STUDENT tuple.

2A relation schema is sometimes called a relation scheme.

3With the large increase in phone numbers caused by the proliferation of mobile phones, most metropol-

itan areas in the U.S. now have multiple area codes, so seven-digit local dialing has been discontinued in

most areas. We changed this domain to Usa_phone_numbers instead of Local_phone_numbers which

would be a more general choice. This illustrates how database requirements can change over time.

4This has also been called a relation instance. We will not use this term because instance is also used

to refer to a single tuple or row.](https://image.slidesharecdn.com/fundamentalsofdatabasesystems6thedition-220615064515-a72cc74c/85/Fundamentals_of_Database_Systems-_6th_Edition-pdf-91-320.jpg)

![64 Chapter 3 The Relational Data Model and Relational Database Constraints

Dick Davidson

Barbara Benson

Rohan Panchal

Chung-cha Kim

422-11-2320

533-69-1238

489-22-1100

381-62-1245

NULL

(817)839-8461

(817)376-9821

(817)375-4409

3452 Elgin Road

7384 Fontana Lane

265 Lark Lane

125 Kirby Road

(817)749-1253

NULL

(817)749-6492

NULL

25

19

28

18

3.53

3.25

3.93

2.89

Benjamin Bayer 305-61-2435 (817)373-1616 2918 Bluebonnet Lane NULL 19 3.21

STUDENT

Name Ssn Home_phone Address Office_phone Age Gpa

Figure 3.2

The relation STUDENT from Figure 3.1 with a different order of tuples.

Ordering of Tuples in a Relation. A relation is defined as a set of tuples.

Mathematically, elements of a set have no order among them; hence, tuples in a rela-

tion do not have any particular order. In other words, a relation is not sensitive to

the ordering of tuples. However, in a file, records are physically stored on disk (or in

memory), so there always is an order among the records. This ordering indicates

first, second, ith, and last records in the file. Similarly, when we display a relation as

a table, the rows are displayed in a certain order.

Tuple ordering is not part of a relation definition because a relation attempts to rep-

resent facts at a logical or abstract level. Many tuple orders can be specified on the

same relation. For example, tuples in the STUDENT relation in Figure 3.1 could be

ordered by values of Name, Ssn, Age, or some other attribute. The definition of a rela-

tion does not specify any order: There is no preference for one ordering over another.

Hence, the relation displayed in Figure 3.2 is considered identical to the one shown in

Figure 3.1. When a relation is implemented as a file or displayed as a table, a particu-

lar ordering may be specified on the records of the file or the rows of the table.

Ordering of Values within a Tuple and an Alternative Definition of a

Relation. According to the preceding definition of a relation, an n-tuple is an

ordered list of n values, so the ordering of values in a tuple—and hence of attributes

in a relation schema—is important. However, at a more abstract level, the order of

attributes and their values is not that important as long as the correspondence

between attributes and values is maintained.

An alternative definition of a relation can be given, making the ordering of values

in a tuple unnecessary. In this definition, a relation schema R = {A1, A2, ..., An} is a

set of attributes (instead of a list), and a relation state r(R) is a finite set of mappings

r = {t1, t2, ..., tm}, where each tuple ti is a mapping from R to D, and D is the union

(denoted by ∪) of the attribute domains; that is, D = dom(A1) ∪ dom(A2) ∪ ... ∪

dom(An). In this definition, t[Ai] must be in dom(Ai) for 1 ≤ i ≤ n for each mapping

t in r. Each mapping ti is called a tuple.

According to this definition of tuple as a mapping, a tuple can be considered as a set

of (attribute, value) pairs, where each pair gives the value of the mapping

from an attribute Ai to a value vi from dom(Ai). The ordering of attributes is not](https://image.slidesharecdn.com/fundamentalsofdatabasesystems6thedition-220615064515-a72cc74c/85/Fundamentals_of_Database_Systems-_6th_Edition-pdf-93-320.jpg)

![3.2 Relational Model Constraints and Relational Database Schemas 67

■ The uppercase letters Q, R, S denote relation names.

■ The lowercase letters q, r, s denote relation states.

■ The letters t, u, v denote tuples.

■ In general, the name of a relation schema such as STUDENT also indicates the

current set of tuples in that relation—the current relation state—whereas

STUDENT(Name, Ssn, ...) refers only to the relation schema.

■ An attribute A can be qualified with the relation name R to which it belongs

by using the dot notation R.A—for example, STUDENT.Name or

STUDENT.Age. This is because the same name may be used for two attributes

in different relations. However, all attribute names in a particular relation

must be distinct.

■ An n-tuple t in a relation r(R) is denoted by t = v1, v2, ..., vn, where vi is the

value corresponding to attribute Ai. The following notation refers to

component values of tuples:

■ Both t[Ai] and t.Ai (and sometimes t[i]) refer to the value vi in t for attribute

Ai.

■ Both t[Au, Aw, ..., Az] and t.(Au, Aw, ..., Az), where Au, Aw, ..., Az is a list of

attributes from R, refer to the subtuple of values vu, vw, ..., vz from t cor-

responding to the attributes specified in the list.

As an example, consider the tuple t = ‘Barbara Benson’, ‘533-69-1238’, ‘(817)839-

8461’, ‘7384 Fontana Lane’, NULL, 19, 3.25 from the STUDENT relation in Figure

3.1; we have t[Name] = ‘Barbara Benson’, and t[Ssn, Gpa, Age] = ‘533-69-1238’,

3.25, 19.

3.2 Relational Model Constraints

and Relational Database Schemas

So far, we have discussed the characteristics of single relations. In a relational data-

base, there will typically be many relations, and the tuples in those relations are usu-

ally related in various ways. The state of the whole database will correspond to the

states of all its relations at a particular point in time. There are generally many

restrictions or constraints on the actual values in a database state. These constraints

are derived from the rules in the miniworld that the database represents, as we dis-

cussed in Section 1.6.8.

In this section, we discuss the various restrictions on data that can be specified on a

relational database in the form of constraints. Constraints on databases can gener-

ally be divided into three main categories:

1. Constraints that are inherent in the data model. We call these inherent

model-based constraints or implicit constraints.

2. Constraints that can be directly expressed in schemas of the data model, typ-

ically by specifying them in the DDL (data definition language, see Section

2.3.1). We call these schema-based constraints or explicit constraints.](https://image.slidesharecdn.com/fundamentalsofdatabasesystems6thedition-220615064515-a72cc74c/85/Fundamentals_of_Database_Systems-_6th_Edition-pdf-96-320.jpg)

![68 Chapter 3 The Relational Data Model and Relational Database Constraints

3. Constraints that cannot be directly expressed in the schemas of the data

model, and hence must be expressed and enforced by the application pro-

grams. We call these application-based or semantic constraints or business

rules.

The characteristics of relations that we discussed in Section 3.1.2 are the inherent

constraints of the relational model and belong to the first category. For example, the

constraint that a relation cannot have duplicate tuples is an inherent constraint. The

constraints we discuss in this section are of the second category, namely, constraints

that can be expressed in the schema of the relational model via the DDL.

Constraints in the third category are more general, relate to the meaning as well as

behavior of attributes, and are difficult to express and enforce within the data

model, so they are usually checked within the application programs that perform

database updates.

Another important category of constraints is data dependencies, which include

functional dependencies and multivalued dependencies. They are used mainly for

testing the “goodness” of the design of a relational database and are utilized in a

process called normalization, which is discussed in Chapters 15 and 16.

The schema-based constraints include domain constraints, key constraints, con-

straints on NULLs, entity integrity constraints, and referential integrity constraints.

3.2.1 Domain Constraints

Domain constraints specify that within each tuple, the value of each attribute A

must be an atomic value from the domain dom(A). We have already discussed the

ways in which domains can be specified in Section 3.1.1. The data types associated

with domains typically include standard numeric data types for integers (such as

short integer, integer, and long integer) and real numbers (float and double-

precision float). Characters, Booleans, fixed-length strings, and variable-length

strings are also available, as are date, time, timestamp, and money, or other special

data types. Other possible domains may be described by a subrange of values from a

data type or as an enumerated data type in which all possible values are explicitly

listed. Rather than describe these in detail here, we discuss the data types offered by

the SQL relational standard in Section 4.1.

3.2.2 Key Constraints and Constraints on NULL Values

In the formal relational model, a relation is defined as a set of tuples. By definition,

all elements of a set are distinct; hence, all tuples in a relation must also be distinct.

This means that no two tuples can have the same combination of values for all their

attributes. Usually, there are other subsets of attributes of a relation schema R with

the property that no two tuples in any relation state r of R should have the same

combination of values for these attributes. Suppose that we denote one such subset

of attributes by SK; then for any two distinct tuples t1 and t2 in a relation state r of R,

we have the constraint that:

t1[SK]≠ t2[SK]](https://image.slidesharecdn.com/fundamentalsofdatabasesystems6thedition-220615064515-a72cc74c/85/Fundamentals_of_Database_Systems-_6th_Edition-pdf-97-320.jpg)

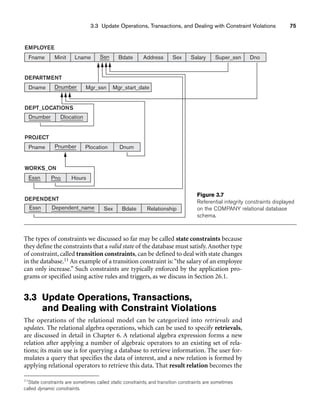

![Integrity constraints are specified on a database schema and are expected to hold on

every valid database state of that schema. In addition to domain, key, and NOT NULL

constraints, two other types of constraints are considered part of the relational

model: entity integrity and referential integrity.

3.2.4 Integrity, Referential Integrity,

and Foreign Keys

The entity integrity constraint states that no primary key value can be NULL. This

is because the primary key value is used to identify individual tuples in a relation.

Having NULL values for the primary key implies that we cannot identify some

tuples. For example, if two or more tuples had NULL for their primary keys, we may

not be able to distinguish them if we try to reference them from other relations.

Key constraints and entity integrity constraints are specified on individual relations.

The referential integrity constraint is specified between two relations and is used

to maintain the consistency among tuples in the two relations. Informally, the refer-

ential integrity constraint states that a tuple in one relation that refers to another

relation must refer to an existing tuple in that relation. For example, in Figure 3.6,

the attribute Dno of EMPLOYEE gives the department number for which each

employee works; hence, its value in every EMPLOYEE tuple must match the Dnumber

value of some tuple in the DEPARTMENT relation.

To define referential integrity more formally, first we define the concept of a foreign

key. The conditions for a foreign key, given below, specify a referential integrity con-

straint between the two relation schemas R1 and R2. A set of attributes FK in rela-

tion schema R1 is a foreign key of R1 that references relation R2 if it satisfies the

following rules:

1. The attributes in FK have the same domain(s) as the primary key attributes

PK of R2; the attributes FK are said to reference or refer to the relation R2.

2. A value of FK in a tuple t1 of the current state r1(R1) either occurs as a value

of PK for some tuple t2 in the current state r2(R2) or is NULL. In the former

case, we have t1[FK] = t2[PK], and we say that the tuple t1 references or

refers to the tuple t2.

In this definition, R1 is called the referencing relation and R2 is the referenced rela-

tion. If these two conditions hold, a referential integrity constraint from R1 to R2 is

said to hold. In a database of many relations, there are usually many referential

integrity constraints.

To specify these constraints, first we must have a clear understanding of the mean-

ing or role that each attribute or set of attributes plays in the various relation

schemas of the database. Referential integrity constraints typically arise from the

relationships among the entities represented by the relation schemas. For example,

consider the database shown in Figure 3.6. In the EMPLOYEE relation, the attribute

Dno refers to the department for which an employee works; hence, we designate Dno

to be a foreign key of EMPLOYEE referencing the DEPARTMENT relation. This means

that a value of Dno in any tuple t1 of the EMPLOYEE relation must match a value of

3.2 Relational Model Constraints and Relational Database Schemas 73](https://image.slidesharecdn.com/fundamentalsofdatabasesystems6thedition-220615064515-a72cc74c/85/Fundamentals_of_Database_Systems-_6th_Edition-pdf-102-320.jpg)

![4.4 INSERT, DELETE, and UPDATE Statements in SQL 107

The default order is in ascending order of values. We can specify the keyword DESC

if we want to see the result in a descending order of values. The keyword ASC can be

used to specify ascending order explicitly. For example, if we want descending

alphabetical order on Dname and ascending order on Lname, Fname, the ORDER BY

clause of Q15 can be written as

ORDER BY D.Dname DESC, E.Lname ASC, E.Fname ASC

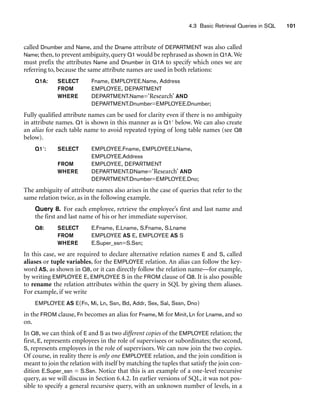

4.3.7 Discussion and Summary

of Basic SQL Retrieval Queries

A simple retrieval query in SQL can consist of up to four clauses, but only the first

two—SELECT and FROM—are mandatory. The clauses are specified in the follow-

ing order, with the clauses between square brackets [ ... ] being optional:

SELECT attribute list

FROM table list

[ WHERE condition ]

[ ORDER BY attribute list ];

The SELECT clause lists the attributes to be retrieved, and the FROM clause specifies

all relations (tables) needed in the simple query. The WHERE clause identifies the

conditions for selecting the tuples from these relations, including join conditions if

needed. ORDER BY specifies an order for displaying the results of a query. Two addi-

tional clauses GROUP BY and HAVING will be described in Section 5.1.8.

In Chapter 5, we will present more complex features of SQL retrieval queries. These

include the following: nested queries that allow one query to be included as part of

another query; aggregate functions that are used to provide summaries of the infor-

mation in the tables; two additional clauses (GROUP BY and HAVING) that can be

used to provide additional power to aggregate functions; and various types of joins

that can combine records from various tables in different ways.

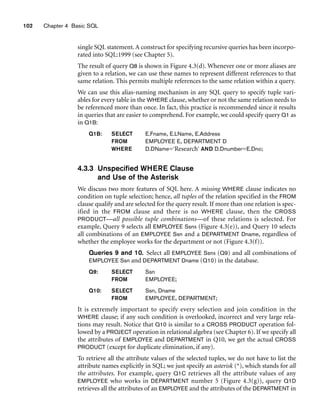

4.4 INSERT, DELETE, and UPDATE

Statements in SQL

In SQL, three commands can be used to modify the database: INSERT, DELETE, and

UPDATE. We discuss each of these in turn.

4.4.1 The INSERT Command

In its simplest form, INSERT is used to add a single tuple to a relation. We must spec-

ify the relation name and a list of values for the tuple. The values should be listed in

the same order in which the corresponding attributes were specified in the CREATE

TABLE command. For example, to add a new tuple to the EMPLOYEE relation shown](https://image.slidesharecdn.com/fundamentalsofdatabasesystems6thedition-220615064515-a72cc74c/85/Fundamentals_of_Database_Systems-_6th_Edition-pdf-136-320.jpg)

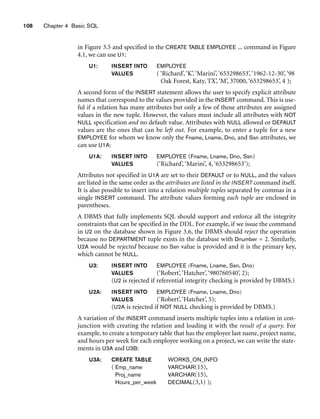

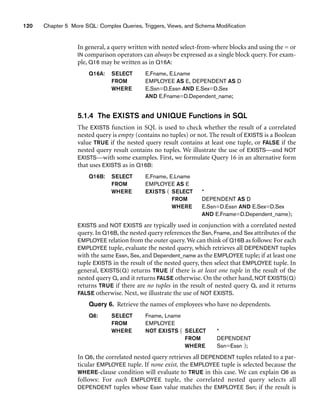

![5.1 More Complex SQL Retrieval Queries 129

to count the total number of employees whose salaries exceed $40,000 in each

department, but only for departments where more than five employees work. Here,

the condition (SALARY 40000) applies only to the COUNT function in the SELECT

clause. Suppose that we write the following incorrect query:

SELECT Dname, COUNT (*)

FROM DEPARTMENT, EMPLOYEE

WHERE Dnumber=Dno AND Salary40000

GROUP BY Dname

HAVING COUNT (*) 5;

This is incorrect because it will select only departments that have more than five

employees who each earn more than $40,000. The rule is that the WHERE clause is

executed first, to select individual tuples or joined tuples; the HAVING clause is

applied later, to select individual groups of tuples. Hence, the tuples are already

restricted to employees who earn more than $40,000 before the function in the

HAVING clause is applied. One way to write this query correctly is to use a nested

query, as shown in Query 28.

Query 28. For each department that has more than five employees, retrieve

the department number and the number of its employees who are making

more than $40,000.

Q28: SELECT Dnumber, COUNT (*)

FROM DEPARTMENT, EMPLOYEE

WHERE Dnumber=Dno AND Salary40000 AND

( SELECT Dno

FROM EMPLOYEE

GROUP BY Dno

HAVING COUNT (*) 5)

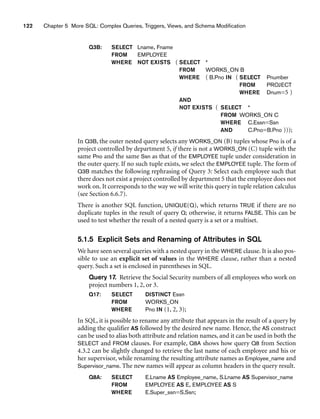

5.1.9 Discussion and Summary of SQL Queries

A retrieval query in SQL can consist of up to six clauses, but only the first two—

SELECT and FROM—are mandatory. The query can span several lines, and is ended

by a semicolon. Query terms are separated by spaces, and parentheses can be used to

group relevant parts of a query in the standard way. The clauses are specified in the

following order, with the clauses between square brackets [ ... ] being optional:

SELECT attribute and function list

FROM table list

[ WHERE condition ]

[ GROUP BY grouping attribute(s) ]

[ HAVING group condition ]

[ ORDER BY attribute list ];

The SELECT clause lists the attributes or functions to be retrieved. The FROM clause

specifies all relations (tables) needed in the query, including joined relations, but

not those in nested queries. The WHERE clause specifies the conditions for selecting

the tuples from these relations, including join conditions if needed. GROUP BY](https://image.slidesharecdn.com/fundamentalsofdatabasesystems6thedition-220615064515-a72cc74c/85/Fundamentals_of_Database_Systems-_6th_Edition-pdf-158-320.jpg)

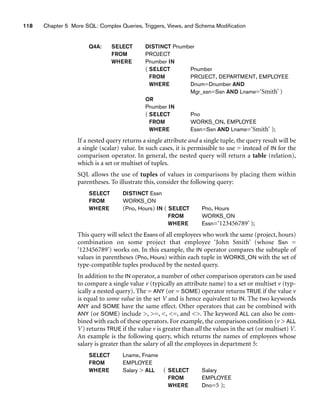

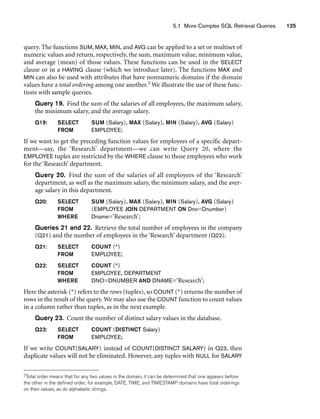

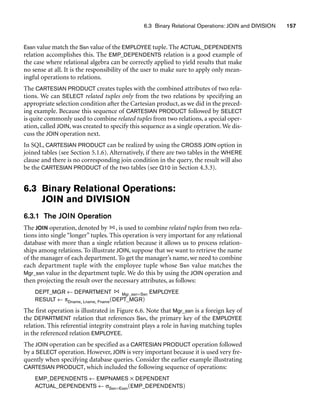

![140 Chapter 5 More SQL: Complex Queries, Triggers, Views, and Schema Modification

Table 5.2 Summary of SQL Syntax

CREATE TABLE table name ( column name column type [ attribute constraint ]

{ , column name column type [ attribute constraint ] }

[ table constraint { , table constraint } ] )

DROP TABLE table name

ALTER TABLE table name ADD column name column type

SELECT [ DISTINCT ] attribute list

FROM ( table name { alias } | joined table ) { , ( table name { alias } | joined table ) }

[ WHERE condition ]

[ GROUP BY grouping attributes [ HAVING group selection condition ] ]

[ ORDER BY column name [ order ] { , column name [ order ] } ]

attribute list ::= ( * | ( column name | function ( ( [ DISTINCT ] column name | * ) ) )

{ , ( column name | function ( ( [ DISTINCT] column name | * ) ) } ) )

grouping attributes ::= column name { , column name }

order ::= ( ASC | DESC )

INSERT INTO table name [ ( column name { , column name } ) ]

( VALUES ( constant value , { constant value } ) { , ( constant value { , constant value } ) }

| select statement )

DELETE FROM table name

[ WHERE selection condition ]

UPDATE table name

SET column name = value expression { , column name = value expression }

[ WHERE selection condition ]

CREATE [ UNIQUE] INDEX index name

ON table name ( column name [ order ] { , column name [ order ] } )

[ CLUSTER ]

DROP INDEX index name

CREATE VIEW view name [ ( column name { , column name } ) ]

AS select statement

DROP VIEW view name

NOTE: The commands for creating and dropping indexes are not part of standard SQL.

virtual or derived tables because they present the user with what appear to be tables;

however, the information in those tables is derived from previously defined tables.

Section 5.4 introduced the SQL ALTER TABLE statement, which is used for modify-

ing the database tables and constraints.

Table 5.2 summarizes the syntax (or structure) of various SQL statements. This sum-

mary is not meant to be comprehensive or to describe every possible SQL construct;

rather, it is meant to serve as a quick reference to the major types of constructs avail-

able in SQL. We use BNF notation, where nonterminal symbols are shown in angled

brackets ..., optional parts are shown in square brackets [...], repetitions are shown

in braces {...}, and alternatives are shown in parentheses (... | ... | ...).7

7The full syntax of SQL is described in many voluminous documents of hundreds of pages.](https://image.slidesharecdn.com/fundamentalsofdatabasesystems6thedition-220615064515-a72cc74c/85/Fundamentals_of_Database_Systems-_6th_Edition-pdf-169-320.jpg)

![148 Chapter 6 The Relational Algebra and Relational Calculus

where attribute name is the name of an attribute of R, comparison op is nor-

mally one of the operators {=, , ≤, , ≥, ≠}, and constant value is a constant value

from the attribute domain. Clauses can be connected by the standard Boolean oper-

ators and, or, and not to form a general selection condition. For example, to select

the tuples for all employees who either work in department 4 and make over

$25,000 per year, or work in department 5 and make over $30,000, we can specify

the following SELECT operation:

σ(Dno=4 AND Salary25000) OR (Dno=5 AND Salary30000)(EMPLOYEE)

The result is shown in Figure 6.1(a).

Notice that all the comparison operators in the set {=, , ≤, , ≥, ≠} can apply to

attributes whose domains are ordered values, such as numeric or date domains.

Domains of strings of characters are also considered to be ordered based on the col-

lating sequence of the characters. If the domain of an attribute is a set of unordered

values, then only the comparison operators in the set {=, ≠} can be used. An exam-

ple of an unordered domain is the domain Color = { ‘red’, ‘blue’, ‘green’, ‘white’, ‘yel-

low’, ...}, where no order is specified among the various colors. Some domains allow

additional types of comparison operators; for example, a domain of character

strings may allow the comparison operator SUBSTRING_OF.

In general, the result of a SELECT operation can be determined as follows. The

selection condition is applied independently to each individual tuple t in R. This

is done by substituting each occurrence of an attribute Ai in the selection condition

with its value in the tuple t[Ai]. If the condition evaluates to TRUE, then tuple t is

Fname Minit Lname Ssn Bdate Address Sex Salary Super_ssn Dno

Franklin

Jennifer

Ramesh

T Wong

Wallace

Narayan

333445555

987654321

666884444

1955-12-08

1941-06-20

1962-09-15

638 Voss, Houston, TX

291 Berry, Bellaire, TX

975 Fire Oak, Humble, TX

M

F

M

40000

43000

38000

888665555

888665555

333445555

5

4

5

Lname Fname Salary

Smith

Wong

Zelaya

Wallace

Narayan

English

Jabbar

Borg

John

Franklin

Alicia

Jennifer

Ramesh

Joyce

Ahmad

James

30000

40000

25000

43000

38000

25000

25000

30000

40000

25000

43000

38000

25000

55000

55000

Sex Salary

M

M

F

F

M

M

M

(c)

(b)

(a)

S

K

Figure 6.1

Results of SELECT and PROJECT operations. (a) σ(Dno=4 AND Salary25000) OR (Dno=5 AND Salary30000) (EMPLOYEE).

(b) πLname, Fname, Salary(EMPLOYEE). (c) πSex, Salary(EMPLOYEE).](https://image.slidesharecdn.com/fundamentalsofdatabasesystems6thedition-220615064515-a72cc74c/85/Fundamentals_of_Database_Systems-_6th_Edition-pdf-177-320.jpg)

![6.3 Binary Relational Operations: JOIN and DIVISION 163

In general, the DIVISION operation is applied to two relations R(Z) ÷ S(X), where

the attributes of R are a subset of the attributes of S; that is, X ⊆ Z. Let Y be the set

of attributes of R that are not attributes of S; that is, Y = Z – X (and hence Z = X ∪

Y). The result of DIVISION is a relation T(Y) that includes a tuple t if tuples tR appear

in R with tR [Y] = t, and with tR [X] = tS for every tuple tS in S. This means that, for

a tuple t to appear in the result T of the DIVISION, the values in t must appear in R in

combination with every tuple in S. Note that in the formulation of the DIVISION

operation, the tuples in the denominator relation S restrict the numerator relation R

by selecting those tuples in the result that match all values present in the denomina-

tor. It is not necessary to know what those values are as they can be computed by

another operation, as illustrated in the SMITH_PNOS relation in the above example.

Figure 6.8(b) illustrates a DIVISION operation where X = {A}, Y = {B}, and Z = {A,

B}. Notice that the tuples (values) b1 and b4 appear in R in combination with all

three tuples in S; that is why they appear in the resulting relation T. All other values

of B in R do not appear with all the tuples in S and are not selected: b2 does not

appear with a2, and b3 does not appear with a1.

The DIVISION operation can be expressed as a sequence of π, ×, and – operations as

follows:

T1 ← πY(R)

T2 ← πY((S × T1) – R)

T ← T1 – T2

The DIVISION operation is defined for convenience for dealing with queries that

involve universal quantification (see Section 6.6.7) or the all condition. Most

RDBMS implementations with SQL as the primary query language do not directly

implement division. SQL has a roundabout way of dealing with the type of query

illustrated above (see Section 5.1.4, queries Q3A and Q3B). Table 6.1 lists the various

basic relational algebra operations we have discussed.

6.3.5 Notation for Query Trees

In this section we describe a notation typically used in relational systems to repre-

sent queries internally. The notation is called a query tree or sometimes it is known

as a query evaluation tree or query execution tree. It includes the relational algebra

operations being executed and is used as a possible data structure for the internal

representation of the query in an RDBMS.

A query tree is a tree data structure that corresponds to a relational algebra expres-

sion. It represents the input relations of the query as leaf nodes of the tree, and rep-

resents the relational algebra operations as internal nodes. An execution of the

query tree consists of executing an internal node operation whenever its operands

(represented by its child nodes) are available, and then replacing that internal node

by the relation that results from executing the operation. The execution terminates

when the root node is executed and produces the result relation for the query.](https://image.slidesharecdn.com/fundamentalsofdatabasesystems6thedition-220615064515-a72cc74c/85/Fundamentals_of_Database_Systems-_6th_Edition-pdf-192-320.jpg)

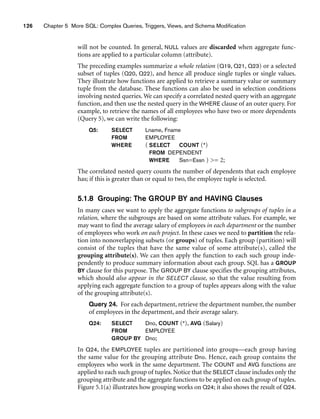

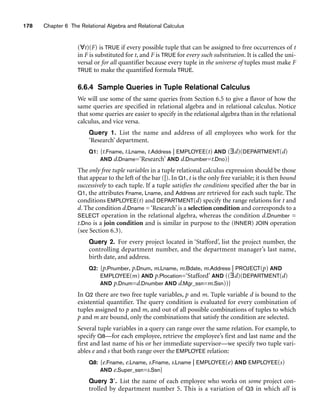

![164 Chapter 6 The Relational Algebra and Relational Calculus

Table 6.1 Operations of Relational Algebra

OPERATION PURPOSE NOTATION

SELECT Selects all tuples that satisfy the selection condition

from a relation R.

σselection condition(R)

PROJECT Produces a new relation with only some of the attrib-

utes of R, and removes duplicate tuples.

πattribute list(R)

THETA JOIN Produces all combinations of tuples from R1 and R2

that satisfy the join condition.

R1 join condition R2

EQUIJOIN Produces all the combinations of tuples from R1 and

R2 that satisfy a join condition with only equality

comparisons.

R1 join condition R2, OR

R1 (join attributes 1),

(join attributes 2) R2

NATURAL JOIN Same as EQUIJOIN except that the join attributes of R2

are not included in the resulting relation; if the join

attributes have the same names, they do not have to

be specified at all.

R1*join condition R2,

OR R1* (join attributes 1),

(join attributes 2) R2

OR R1 * R2

UNION Produces a relation that includes all the tuples in R1

or R2 or both R1 and R2; R1 and R2 must be union

compatible.

R1 ∪ R2

INTERSECTION Produces a relation that includes all the tuples in both

R1 and R2; R1 and R2 must be union compatible.

R1 ∩ R2

DIFFERENCE Produces a relation that includes all the tuples in R1

that are not in R2; R1 and R2 must be union compatible.

R1 – R2

CARTESIAN

PRODUCT

Produces a relation that has the attributes of R1 and

R2 and includes as tuples all possible combinations of

tuples from R1 and R2.

R1 × R2

DIVISION Produces a relation R(X) that includes all tuples t[X]

in R1(Z) that appear in R1 in combination with every

tuple from R2(Y), where Z = X ∪ Y.

R1(Z) ÷ R2(Y)

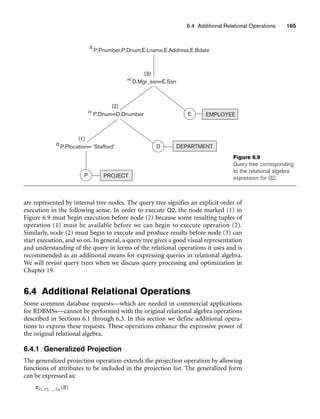

Figure 6.9 shows a query tree for Query 2 (see Section 4.3.1): For every project

located in ‘Stafford’, list the project number, the controlling department number, and

the department manager’s last name, address, and birth date. This query is specified

on the relational schema of Figure 3.5 and corresponds to the following relational

algebra expression:

πPnumber, Dnum, Lname, Address, Bdate(((σPlocation=‘Stafford’(PROJECT))

Dnum=Dnumber(DEPARTMENT)) Mgr_ssn=Ssn(EMPLOYEE))

In Figure 6.9, the three leaf nodes P, D, and E represent the three relations PROJECT,

DEPARTMENT, and EMPLOYEE. The relational algebra operations in the expression](https://image.slidesharecdn.com/fundamentalsofdatabasesystems6thedition-220615064515-a72cc74c/85/Fundamentals_of_Database_Systems-_6th_Edition-pdf-193-320.jpg)

![6.5 Examples of Queries in Relational Algebra 171

relation are also kept in the result relation T(X, Y, Z). It is therefore the same as a

FULL OUTER JOIN on the common attributes.

Two tuples t1 in R and t2 in S are said to match if t1[X]=t2[X]. These will be com-

bined (unioned) into a single tuple in t. Tuples in either relation that have no

matching tuple in the other relation are padded with NULL values. For example, an

OUTER UNION can be applied to two relations whose schemas are STUDENT(Name,

Ssn, Department, Advisor) and INSTRUCTOR(Name, Ssn, Department, Rank). Tuples

from the two relations are matched based on having the same combination of values

of the shared attributes—Name, Ssn, Department. The resulting relation,

STUDENT_OR_INSTRUCTOR, will have the following attributes:

STUDENT_OR_INSTRUCTOR(Name, Ssn, Department, Advisor, Rank)

All the tuples from both relations are included in the result, but tuples with the same

(Name, Ssn, Department) combination will appear only once in the result. Tuples

appearing only in STUDENT will have a NULL for the Rank attribute, whereas tuples

appearing only in INSTRUCTOR will have a NULL for the Advisor attribute. A tuple

that exists in both relations, which represent a student who is also an instructor, will

have values for all its attributes.11

Notice that the same person may still appear twice in the result. For example, we

could have a graduate student in the Mathematics department who is an instructor

in the Computer Science department. Although the two tuples representing that

person in STUDENT and INSTRUCTOR will have the same (Name, Ssn) values, they

will not agree on the Department value, and so will not be matched. This is because

Department has two different meanings in STUDENT (the department where the per-

son studies) and INSTRUCTOR (the department where the person is employed as an

instructor). If we wanted to apply the OUTER UNION based on the same (Name, Ssn)

combination only, we should rename the Department attribute in each table to reflect

that they have different meanings and designate them as not being part of the

union-compatible attributes. For example, we could rename the attributes as

MajorDept in STUDENT and WorkDept in INSTRUCTOR.

6.5 Examples of Queries

in Relational Algebra

The following are additional examples to illustrate the use of the relational algebra

operations. All examples refer to the database in Figure 3.6. In general, the same

query can be stated in numerous ways using the various operations. We will state

each query in one way and leave it to the reader to come up with equivalent formu-

lations.

Query 1. Retrieve the name and address of all employees who work for the

‘Research’ department.

11Note that OUTER UNION is equivalent to a FULL OUTER JOIN if the join attributes are all the com-

mon attributes of the two relations.](https://image.slidesharecdn.com/fundamentalsofdatabasesystems6thedition-220615064515-a72cc74c/85/Fundamentals_of_Database_Systems-_6th_Edition-pdf-200-320.jpg)

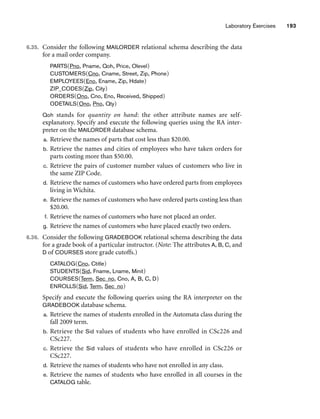

![6.6 The Tuple Relational Calculus 175

Our examples refer to the database shown in Figures 3.6 and 3.7. We will use the

same queries that were used in Section 6.5. Sections 6.6.6, 6.6.7, and 6.6.8 discuss

dealing with universal quantifiers and safety of expression issues. (Students inter-

ested in a basic introduction to tuple relational calculus may skip these sections.)

6.6.1 Tuple Variables and Range Relations

The tuple relational calculus is based on specifying a number of tuple variables.

Each tuple variable usually ranges over a particular database relation, meaning that

the variable may take as its value any individual tuple from that relation. A simple

tuple relational calculus query is of the form:

{t | COND(t)}

where t is a tuple variable and COND(t) is a conditional (Boolean) expression

involving t that evaluates to either TRUE or FALSE for different assignments of

tuples to the variable t. The result of such a query is the set of all tuples t that evalu-

ate COND(t) to TRUE. These tuples are said to satisfy COND(t). For example, to find

all employees whose salary is above $50,000, we can write the following tuple calcu-

lus expression:

{t | EMPLOYEE(t) AND t.Salary50000}

The condition EMPLOYEE(t) specifies that the range relation of tuple variable t is

EMPLOYEE. Each EMPLOYEE tuple t that satisfies the condition t.Salary50000 will

be retrieved. Notice that t.Salary references attribute Salary of tuple variable t; this

notation resembles how attribute names are qualified with relation names or aliases

in SQL, as we saw in Chapter 4. In the notation of Chapter 3, t.Salary is the same as

writing t[Salary].

The above query retrieves all attribute values for each selected EMPLOYEE tuple t. To

retrieve only some of the attributes—say, the first and last names—we write

{t.Fname, t.Lname | EMPLOYEE(t) AND t.Salary50000}

Informally, we need to specify the following information in a tuple relational calcu-

lus expression:

■ For each tuple variable t, the range relation R of t. This value is specified by

a condition of the form R(t). If we do not specify a range relation, then the

variable t will range over all possible tuples “in the universe” as it is not

restricted to any one relation.

■ A condition to select particular combinations of tuples. As tuple variables

range over their respective range relations, the condition is evaluated for

every possible combination of tuples to identify the selected combinations

for which the condition evaluates to TRUE.

■ A set of attributes to be retrieved, the requested attributes. The values of

these attributes are retrieved for each selected combination of tuples.

Before we discuss the formal syntax of tuple relational calculus, consider another

query.](https://image.slidesharecdn.com/fundamentalsofdatabasesystems6thedition-220615064515-a72cc74c/85/Fundamentals_of_Database_Systems-_6th_Edition-pdf-204-320.jpg)

![6.6 The Tuple Relational Calculus 179

[P.Pnumber,P.Dnum] [E.Lname,E.address,E.Bdate]

P.Dnum=D.Dnumber

P.Plocation=‘Stafford’

P D E

‘Stafford’

D.Mgr_ssn=E.Ssn

Figure 6.13

Query graph for Q2.

changed to some. In this case we need two join conditions and two existential

quantifiers.

Q0: {e.Lname, e.Fname | EMPLOYEE(e) AND ((∃x)(∃w)(PROJECT(x) AND

WORKS_ON(w) AND x.Dnum=5 AND w.Essn=e.Ssn AND

x.Pnumber=w.Pno))}

Query 4. Make a list of project numbers for projects that involve an employee

whose last name is ‘Smith’, either as a worker or as manager of the controlling

department for the project.

Q4: { p.Pnumber | PROJECT(p) AND (((∃e)(∃w)(EMPLOYEE(e)

AND WORKS_ON(w) AND w.Pno=p.Pnumber

AND e.Lname=‘Smith’ AND e.Ssn=w.Essn) )

OR

((∃m)(∃d)(EMPLOYEE(m) AND DEPARTMENT(d)

AND p.Dnum=d.Dnumber AND d.Mgr_ssn=m.Ssn

AND m.Lname=‘Smith’)))}

Compare this with the relational algebra version of this query in Section 6.5. The

UNION operation in relational algebra can usually be substituted with an OR con-

nective in relational calculus.

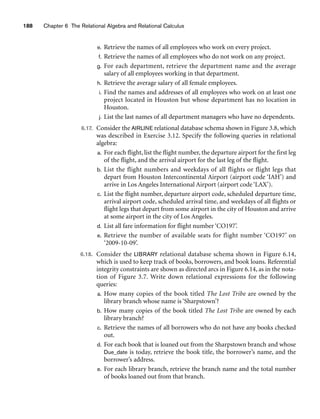

6.6.5 Notation for Query Graphs

In this section we describe a notation that has been proposed to represent relational

calculus queries that do not involve complex quantification in a graphical form.

These types of queries are known as select-project-join queries, because they only

involve these three relational algebra operations. The notation may be expanded to

more general queries, but we do not discuss these extensions here. This graphical

representation of a query is called a query graph. Figure 6.13 shows the query graph

for Q2. Relations in the query are represented by relation nodes, which are dis-

played as single circles. Constant values, typically from the query selection condi-

tions, are represented by constant nodes, which are displayed as double circles or

ovals. Selection and join conditions are represented by the graph edges (the lines

that connect the nodes), as shown in Figure 6.13. Finally, the attributes to be

retrieved from each relation are displayed in square brackets above each relation.](https://image.slidesharecdn.com/fundamentalsofdatabasesystems6thedition-220615064515-a72cc74c/85/Fundamentals_of_Database_Systems-_6th_Edition-pdf-208-320.jpg)

![8.5 A Sample UNIVERSITY EER Schema, Design Choices, and Formal Definitions 261

8.5.1 The UNIVERSITY Database Example

For our sample database application, consider a UNIVERSITY database that keeps

track of students and their majors, transcripts, and registration as well as of the uni-

versity’s course offerings. The database also keeps track of the sponsored research

projects of faculty and graduate students. This schema is shown in Figure 8.9. A dis-

cussion of the requirements that led to this schema follows.

For each person, the database maintains information on the person’s Name [Name],

Social Security number [Ssn], address [Address], sex [Sex], and birth date [Bdate].

Two subclasses of the PERSON entity type are identified: FACULTY and STUDENT.

Specific attributes of FACULTY are rank [Rank] (assistant, associate, adjunct,

research, visiting, and so on), office [Foffice], office phone [Fphone], and salary

[Salary]. All faculty members are related to the academic department(s) with which

they are affiliated [BELONGS] (a faculty member can be associated with several

departments, so the relationship is M:N). A specific attribute of STUDENT is [Class]

(freshman=1, sophomore=2, ..., graduate student=5). Each STUDENT is also related

to his or her major and minor departments (if known) [MAJOR] and [MINOR], to

the course sections he or she is currently attending [REGISTERED], and to the

courses completed [TRANSCRIPT]. Each TRANSCRIPT instance includes the grade

the student received [Grade] in a section of a course.

GRAD_STUDENT is a subclass of STUDENT, with the defining predicate Class = 5.

For each graduate student, we keep a list of previous degrees in a composite, multi-

valued attribute [Degrees]. We also relate the graduate student to a faculty advisor

[ADVISOR] and to a thesis committee [COMMITTEE], if one exists.

An academic department has the attributes name [Dname], telephone [Dphone], and

office number [Office] and is related to the faculty member who is its chairperson

[CHAIRS] and to the college to which it belongs [CD]. Each college has attributes

college name [Cname], office number [Coffice], and the name of its dean [Dean].

A course has attributes course number [C#], course name [Cname], and course

description [Cdesc]. Several sections of each course are offered, with each section

having the attributes section number [Sec#] and the year and quarter in which the

section was offered ([Year] and [Qtr]).10 Section numbers uniquely identify each sec-

tion. The sections being offered during the current quarter are in a subclass

CURRENT_SECTION of SECTION, with the defining predicate Qtr = Current_qtr and

Year = Current_year. Each section is related to the instructor who taught or is teach-

ing it ([TEACH]), if that instructor is in the database.

The category INSTRUCTOR_RESEARCHER is a subset of the union of FACULTY and

GRAD_STUDENT and includes all faculty, as well as graduate students who are sup-

ported by teaching or research. Finally, the entity type GRANT keeps track of

research grants and contracts awarded to the university. Each grant has attributes

grant title [Title], grant number [No], the awarding agency [Agency], and the starting

10We assume that the quarter system rather than the semester system is used in this university.](https://image.slidesharecdn.com/fundamentalsofdatabasesystems6thedition-220615064515-a72cc74c/85/Fundamentals_of_Database_Systems-_6th_Edition-pdf-290-320.jpg)

![8.5 A Sample UNIVERSITY EER Schema, Design Choices, and Formal Definitions 263

date [St_date]. A grant is related to one principal investigator [PI] and to all

researchers it supports [SUPPORT]. Each instance of support has as attributes the

starting date of support [Start], the ending date of the support (if known) [End],

and the percentage of time being spent on the project [Time] by the researcher being

supported.

8.5.2 Design Choices for Specialization/Generalization

It is not always easy to choose the most appropriate conceptual design for a database

application. In Section 7.7.3, we presented some of the typical issues that confront a

database designer when choosing among the concepts of entity types, relationship

types, and attributes to represent a particular miniworld situation as an ER schema.

In this section, we discuss design guidelines and choices for the EER concepts of

specialization/generalization and categories (union types).

As we mentioned in Section 7.7.3, conceptual database design should be considered

as an iterative refinement process until the most suitable design is reached. The fol-

lowing guidelines can help to guide the design process for EER concepts:

■ In general, many specializations and subclasses can be defined to make the

conceptual model accurate. However, the drawback is that the design

becomes quite cluttered. It is important to represent only those subclasses

that are deemed necessary to avoid extreme cluttering of the conceptual

schema.

■ If a subclass has few specific (local) attributes and no specific relationships, it

can be merged into the superclass. The specific attributes would hold NULL

values for entities that are not members of the subclass. A type attribute

could specify whether an entity is a member of the subclass.

■ Similarly, if all the subclasses of a specialization/generalization have few spe-

cific attributes and no specific relationships, they can be merged into the

superclass and replaced with one or more type attributes that specify the sub-

class or subclasses that each entity belongs to (see Section 9.2 for how this

criterion applies to relational databases).

■ Union types and categories should generally be avoided unless the situation

definitely warrants this type of construct, which does occur in some practi-

cal situations. If possible, we try to model using specialization/generalization

as discussed at the end of Section 8.4.

■ The choice of disjoint/overlapping and total/partial constraints on special-

ization/generalization is driven by the rules in the miniworld being modeled.

If the requirements do not indicate any particular constraints, the default

would generally be overlapping and partial, since this does not specify any

restrictions on subclass membership.](https://image.slidesharecdn.com/fundamentalsofdatabasesystems6thedition-220615064515-a72cc74c/85/Fundamentals_of_Database_Systems-_6th_Edition-pdf-292-320.jpg)

![264 Chapter 8 The Enhanced Entity-Relationship (EER) Model

As an example of applying these guidelines, consider Figure 8.6, where no specific

(local) attributes are shown. We could merge all the subclasses into the EMPLOYEE

entity type, and add the following attributes to EMPLOYEE:

■ An attribute Job_type whose value set {‘Secretary’, ‘Engineer’, ‘Technician’}

would indicate which subclass in the first specialization each employee

belongs to.

■ An attribute Pay_method whose value set {‘Salaried’,‘Hourly’} would indicate

which subclass in the second specialization each employee belongs to.

■ An attribute Is_a_manager whose value set {‘Yes’, ‘No’} would indicate

whether an individual employee entity is a manager or not.

8.5.3 Formal Definitions for the EER Model Concepts

We now summarize the EER model concepts and give formal definitions. A class11

is a set or collection of entities; this includes any of the EER schema constructs of

group entities, such as entity types, subclasses, superclasses, and categories. A

subclass S is a class whose entities must always be a subset of the entities in another

class, called the superclass C of the superclass/subclass (or IS-A) relationship. We

denote such a relationship by C/S. For such a superclass/subclass relationship, we

must always have

S ⊆ C

A specialization Z = {S1, S2, ..., Sn} is a set of subclasses that have the same super-

class G; that is, G/Si is a superclass/subclass relationship for i = 1, 2, ..., n. G is called

a generalized entity type (or the superclass of the specialization, or a

generalization of the subclasses {S1, S2, ..., Sn} ). Z is said to be total if we always (at

any point in time) have

Otherwise, Z is said to be partial. Z is said to be disjoint if we always have

Si ∩ Sj = ∅ (empty set) for i ≠ j

Otherwise, Z is said to be overlapping.

A subclass S of C is said to be predicate-defined if a predicate p on the attributes of

C is used to specify which entities in C are members of S; that is, S = C[p], where

C[p] is the set of entities in C that satisfy p. A subclass that is not defined by a pred-

icate is called user-defined.

A specialization Z (or generalization G) is said to be attribute-defined if a predicate

(A = ci), where A is an attribute of G and ci is a constant value from the domain of A,

n

∪ Si

= G

i=1

11The use of the word class here differs from its more common use in object-oriented programming lan-

guages such as C++. In C++, a class is a structured type definition along with its applicable functions

(operations).](https://image.slidesharecdn.com/fundamentalsofdatabasesystems6thedition-220615064515-a72cc74c/85/Fundamentals_of_Database_Systems-_6th_Edition-pdf-293-320.jpg)

![8.6 Example of Other Notation: Representing Specialization and Generalization in UML Class Diagrams 265

is used to specify membership in each subclass Si in Z. Notice that if ci ≠ cj for i ≠ j,

and A is a single-valued attribute, then the specialization will be disjoint.

A category T is a class that is a subset of the union of n defining superclasses D1, D2,

..., Dn, n 1, and is formally specified as follows:

T ⊆ (D1 ∪ D2 ... ∪ Dn)

A predicate pi on the attributes of Di can be used to specify the members of each Di

that are members of T. If a predicate is specified on every Di, we get

T = (D1[p1] ∪ D2[p2] ... ∪ Dn[pn])

We should now extend the definition of relationship type given in Chapter 7 by

allowing any class—not only any entity type—to participate in a relationship.

Hence, we should replace the words entity type with class in that definition. The

graphical notation of EER is consistent with ER because all classes are represented

by rectangles.

8.6 Example of Other Notation: Representing

Specialization and Generalization in UML

Class Diagrams

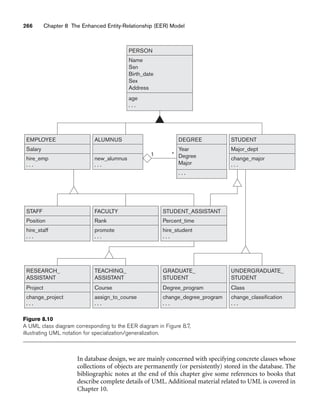

We now discuss the UML notation for generalization/specialization and inheri-

tance. We already presented basic UML class diagram notation and terminology in

Section 7.8. Figure 8.10 illustrates a possible UML class diagram corresponding to

the EER diagram in Figure 8.7. The basic notation for specialization/generalization

(see Figure 8.10) is to connect the subclasses by vertical lines to a horizontal line,

which has a triangle connecting the horizontal line through another vertical line to

the superclass. A blank triangle indicates a specialization/generalization with the

disjoint constraint, and a filled triangle indicates an overlapping constraint. The root

superclass is called the base class, and the subclasses (leaf nodes) are called leaf

classes.

The above discussion and example in Figure 8.10, and the presentation in Section

7.8 gave a brief overview of UML class diagrams and terminology. We focused on

the concepts that are relevant to ER and EER database modeling, rather than those

concepts that are more relevant to software engineering. In UML, there are many

details that we have not discussed because they are outside the scope of this book

and are mainly relevant to software engineering. For example, classes can be of var-

ious types:

■ Abstract classes define attributes and operations but do not have objects cor-

responding to those classes. These are mainly used to specify a set of attrib-

utes and operations that can be inherited.

■ Concrete classes can have objects (entities) instantiated to belong to the

class.

■ Template classes specify a template that can be further used to define other

classes.](https://image.slidesharecdn.com/fundamentalsofdatabasesystems6thedition-220615064515-a72cc74c/85/Fundamentals_of_Database_Systems-_6th_Edition-pdf-294-320.jpg)

![Exercises 277

■ An art object in the OTHER category has a Type (print, photo, etc.) and

Style.

■ ART_OBJECTs are categorized as either PERMANENT_COLLECTION

(objects that are owned by the museum) and BORROWED. Information

captured about objects in the PERMANENT_COLLECTION includes

Date_acquired, Status (on display, on loan, or stored), and Cost.

Information captured about BORROWED objects includes the Collection

from which it was borrowed, Date_borrowed, and Date_returned.

■ Information describing the country or culture of Origin (Italian, Egyptian,

American, Indian, and so forth) and Epoch (Renaissance, Modern,

Ancient, and so forth) is captured for each ART_OBJECT.

■ The museum keeps track of ARTIST information, if known: Name,

DateBorn (if known), Date_died (if not living), Country_of_origin, Epoch,

Main_style, and Description. The Name is assumed to be unique.

■ Different EXHIBITIONS occur, each having a Name, Start_date, and

End_date. EXHIBITIONS are related to all the art objects that were on dis-

play during the exhibition.

■ Information is kept on other COLLECTIONS with which the museum

interacts, including Name (unique), Type (museum, personal, etc.),

Description, Address, Phone, and current Contact_person.

Draw an EER schema diagram for this application. Discuss any assumptions

you make, and that justify your EER design choices.

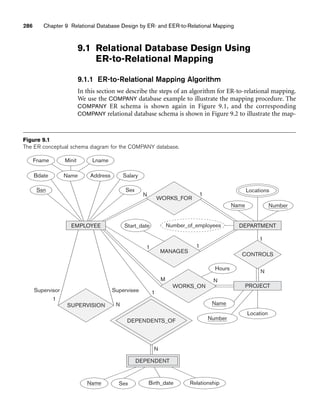

8.21. Figure 8.12 shows an example of an EER diagram for a small private airport

database that is used to keep track of airplanes, their owners, airport

employees, and pilots. From the requirements for this database, the follow-

ing information was collected: Each AIRPLANE has a registration number

[Reg#], is of a particular plane type [OF_TYPE], and is stored in a particular

hangar [STORED_IN]. Each PLANE_TYPE has a model number [Model], a

capacity [Capacity], and a weight [Weight]. Each HANGAR has a number

[Number], a capacity [Capacity], and a location [Location]. The database also

keeps track of the OWNERs of each plane [OWNS] and the EMPLOYEEs who

have maintained the plane [MAINTAIN]. Each relationship instance in OWNS

relates an AIRPLANE to an OWNER and includes the purchase date [Pdate].

Each relationship instance in MAINTAIN relates an EMPLOYEE to a service

record [SERVICE]. Each plane undergoes service many times; hence, it is

related by [PLANE_SERVICE] to a number of SERVICE records. A SERVICE

record includes as attributes the date of maintenance [Date], the number of

hours spent on the work [Hours], and the type of work done [Work_code].

We use a weak entity type [SERVICE] to represent airplane service, because

the airplane registration number is used to identify a service record. An

OWNER is either a person or a corporation. Hence, we use a union type (cat-

egory) [OWNER] that is a subset of the union of corporation

[CORPORATION] and person [PERSON] entity types. Both pilots [PILOT]

and employees [EMPLOYEE] are subclasses of PERSON. Each PILOT has](https://image.slidesharecdn.com/fundamentalsofdatabasesystems6thedition-220615064515-a72cc74c/85/Fundamentals_of_Database_Systems-_6th_Edition-pdf-306-320.jpg)

![278 Chapter 8 The Enhanced Entity-Relationship (EER) Model

specific attributes license number [Lic_num] and restrictions [Restr]; each

EMPLOYEE has specific attributes salary [Salary] and shift worked [Shift]. All

PERSON entities in the database have data kept on their Social Security

number [Ssn], name [Name], address [Address], and telephone number

[Phone]. For CORPORATION entities, the data kept includes name [Name],

address [Address], and telephone number [Phone]. The database also keeps

track of the types of planes each pilot is authorized to fly [FLIES] and the

types of planes each employee can do maintenance work on [WORKS_ON].

Number Location

Capacity

Name Phone

Address

Name

Ssn

Phone

Add ress

Lic_num

Restr

Date/workcode

1

N

N

1

N

1

PLANE_TYPE

Model Capacity

Pdate

Weight

MAINTAIN

M

M

N

OF_TYPE

STORED_IN

N

M

OWNS

FLIES

WORKS_ON

N

N

M

Reg#

Date

Hours

HANGAR

PILOT

EMPLOYEE

Salary

PLANE_SERVICE

SERVICE

Workcode

AIRPLANE

Shift

U

CORPORATION PERSON

OWNER

Figure 8.12

EER schema for a SMALL_AIRPORT database.](https://image.slidesharecdn.com/fundamentalsofdatabasesystems6thedition-220615064515-a72cc74c/85/Fundamentals_of_Database_Systems-_6th_Edition-pdf-307-320.jpg)

![10.3 Use of UML Diagrams as an Aid to Database Design Specification 333

Object: Class or Actor

Lifeline

Focus of control/activation Message to self

Message

Object: Class or Actor

Object: Class or Actor

Object deconstruction termination

Return

Figure 10.9

The sequence diagram notation.

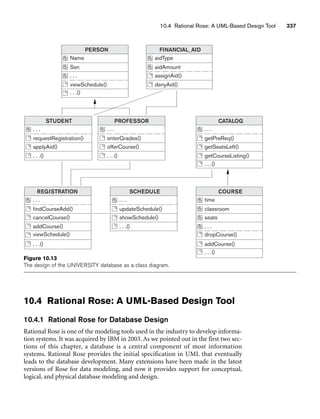

H. Statechart Diagrams. Statechart diagrams describe how an object’s state

changes in response to external events.

To describe the behavior of an object, it is common in most object-oriented tech-

niques to draw a statechart diagram to show all the possible states an object can get

into in its lifetime. The UML statecharts are based on David Harel’s8 statecharts.

They show a state machine consisting of states, transitions, events, and actions and

are very useful in the conceptual design of the application that works against a data-

base of stored objects.

The important elements of a statechart diagram shown in Figure 10.10 are as follows:

■ States. Shown as boxes with rounded corners, they represent situations in

the lifetime of an object.

■ Transitions. Shown as solid arrows between the states, they represent the

paths between different states of an object. They are labeled by the event-

name [guard] /action; the event triggers the transition and the action results

from it. The guard is an additional and optional condition that specifies a

condition under which the change of state may not occur.

■ Start/Initial State. Shown by a solid circle with an outgoing arrow to a state.

■ Stop/Final State. Shown as a double-lined filled circle with an arrow point-

ing into it from a state.

Statechart diagrams are useful in specifying how an object’s reaction to a message

depends on its state. An event is something done to an object such as receiving a

message; an action is something that an object does such as sending a message.

8See Harel (1987).](https://image.slidesharecdn.com/fundamentalsofdatabasesystems6thedition-220615064515-a72cc74c/85/Fundamentals_of_Database_Systems-_6th_Edition-pdf-362-320.jpg)

![10.3 Use of UML Diagrams as an Aid to Database Design Specification 335

charge of processing student aid applications for which the students have to apply.

Assume that we have to design a database that maintains the data about students,

professors, courses, financial aid, and so on. We also want to design some of the

applications that enable us to do course registration, financial aid application pro-

cessing, and maintaining of the university-wide course catalog by the registrar’s

office. The above requirements may be depicted by a series of UML diagrams.

As mentioned previously, one of the first steps involved in designing a database is to

gather customer requirements by using use case diagrams. Suppose one of the

requirements in the UNIVERSITY database is to allow the professors to enter grades

for the courses they are teaching and for the students to be able to register for

courses and apply for financial aid. The use case diagram corresponding to these use

cases can be drawn as shown in Figure 10.8.

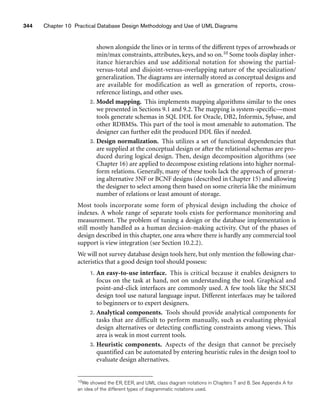

Another helpful element when designing a system is to graphically represent some

of the states the system can be in, to visualize the various states the system can be in

during the course of an application. For example, in our UNIVERSITY database the

various states that the system goes through when the registration for a course with

50 seats is opened can be represented by the statechart diagram in Figure 10.11. This

shows the states of a course while enrollment is in process. The first state sets the

count of students enrolled to zero. During the enrolling state, the Enroll student

transition continues as long as the count of enrolled students is less than 50. When

the count reaches 50, the state to close the section is entered. In a real system, addi-

tional states and/or transitions could be added to allow a student to drop a section

and any other needed actions.

Next, we can design a sequence diagram to visualize the execution of the use cases.

For the university database, the sequence diagram corresponds to the use case:

student requests to register and selects a particular course to register is shown in Figure

Enroll student [count 50]

Enroll student/set count = 0

Course enrollment Enrolling

Entry/register student

Section closing

Canceled

Cancel

Cancel

Cancel Count = 50

Exit/closesection

Do/enroll students

Figure 10.11

A sample statechart

diagram for the

UNIVERSITY

database.](https://image.slidesharecdn.com/fundamentalsofdatabasesystems6thedition-220615064515-a72cc74c/85/Fundamentals_of_Database_Systems-_6th_Edition-pdf-364-320.jpg)

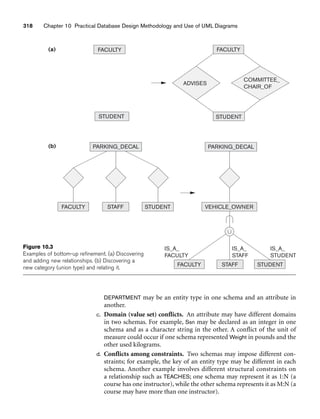

![336 Chapter 10 Practical Database Design Methodology and Use of UML Diagrams

getSeatsLeft

getPreq = true

[getSeatsLeft =

True]/update

Schedule

:Registration

requestRegistration getCourseListing

selectCourse addCourse getPreReq

:Student

:Catalog :Course :Schedule

Figure 10.12

A sequence diagram for the UNIVERSITY database.

10.12. The catalog is first browsed to get course listings. Then, when the student

selects a course to register in, prerequisites and course capacity are checked, and the

course is then added to the student’s schedule if the prerequisites are met and there

is space in the course.

These UML diagrams are not the complete specification of the UNIVERSITY data-

base. There will be other use cases for the various applications of the actors, includ-

ing registrar, student, professor, and so on. A complete methodology for how to

arrive at the class diagrams from the various diagrams we illustrated in this section

is outside our scope here. Design methodologies remain a matter of judgment and

personal preferences. However, the designer should make sure that the class dia-

gram will account for all the specifications that have been given in the form of the

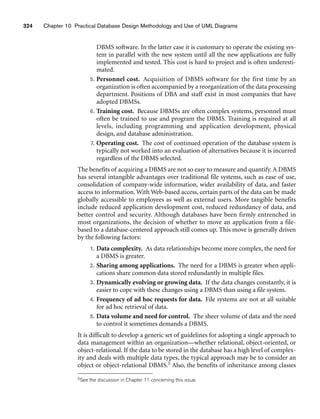

use cases, statechart, and sequence diagrams. The class diagram in Figure 10.13

shows a possible class diagram for this application, with the structural relationships

and the operations within the classes. These classes will need to be implemented to

develop the UNIVERSITY database, and together with the operations they will

implement the complete class schedule/enrollment/aid application. Only some of

the attributes and methods (operations) are shown in Figure 10.13. It is likely that

these class diagrams will be modified as more details are specified and more func-

tions evolve in the UNIVERSITY application.](https://image.slidesharecdn.com/fundamentalsofdatabasesystems6thedition-220615064515-a72cc74c/85/Fundamentals_of_Database_Systems-_6th_Edition-pdf-365-320.jpg)

![11.1 Overview of Object Database Concepts 359

struct CollegeDegreeMajor: string, Degree: string, Year: date. To create

complex nested type structures in the object model, the collection type con-

structors are needed, which we discuss next. Notice that the type construc-

tors atom and struct are the only ones available in the original (basic)

relational model.

3. Collection (or multivalued) type constructors include the set(T), list(T),

bag(T), array(T), and dictionary(K,T) type constructors. These allow part

of an object or literal value to include a collection of other objects or values

when needed. These constructors are also considered to be type generators

because many different types can be created. For example, set(string),

set(integer), and set(Employee) are three different types that can be created

from the set type constructor.All the elements in a particular collection value

must be of the same type. For example, all values in a collection of type

set(string) must be string values.

The atom constructor is used to represent all basic atomic values, such as integers,

real numbers, character strings, Booleans, and any other basic data types that the

system supports directly. The tuple constructor can create structured values and

objects of the form a1:i1, a2:i2, ..., an:in, where each aj is an attribute name10 and

each ij is a value or an OID.

The other commonly used constructors are collectively referred to as collection

types, but have individual differences among them. The set constructor will create

objects or literals that are a set of distinct elements {i1, i2, ..., in}, all of the same type.

The bag constructor (sometimes called a multiset) is similar to a set except that the

elements in a bag need not be distinct. The list constructor will create an ordered list

[i1, i2, ..., in] of OIDs or values of the same type. A list is similar to a bag except that

the elements in a list are ordered, and hence we can refer to the first, second, or jth

element. The array constructor creates a single-dimensional array of elements of

the same type. The main difference between array and list is that a list can have an

arbitrary number of elements whereas an array typically has a maximum size.

Finally, the dictionary constructor creates a collection of two tuples (K, V), where

the value of a key K can be used to retrieve the corresponding value V.

The main characteristic of a collection type is that its objects or values will be a

collection of objects or values of the same type that may be unordered (such as a set or

a bag) or ordered (such as a list or an array). The tuple type constructor is often

called a structured type, since it corresponds to the struct construct in the C and

C++ programming languages.

An object definition language (ODL)11 that incorporates the preceding type con-

structors can be used to define the object types for a particular database application.

In Section 11.3 we will describe the standard ODL of ODMG, but first we introduce

10Also called an instance variable name in OO terminology.

11This corresponds to the DDL (data definition language) of the database system (see Chapter 2).](https://image.slidesharecdn.com/fundamentalsofdatabasesystems6thedition-220615064515-a72cc74c/85/Fundamentals_of_Database_Systems-_6th_Edition-pdf-388-320.jpg)

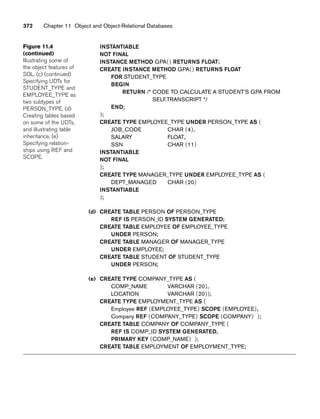

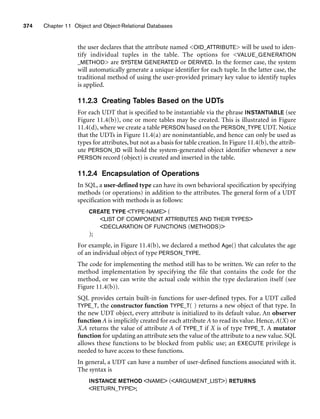

![11.2 Object-Relational Features: Object Database Extensions to SQL 371

Figure 11.4

Illustrating some of the object

features of SQL. (a) Using UDTs

as types for attributes such as

Address and Phone, (b) Specifying

UDT for PERSON_TYPE, (c)

Specifying UDTs for STUDENT_TYPE

and EMPLOYEE_TYPE as two sub-

types of PERSON_TYPE

(a) CREATE TYPE STREET_ADDR_TYPE AS (

NUMBER VARCHAR (5),

STREET_NAME VARCHAR (25),

APT_NO VARCHAR (5),

SUITE_NO VARCHAR (5)

);

CREATE TYPE USA_ADDR_TYPE AS (

STREET_ADDR STREET_ADDR_TYPE,

CITY VARCHAR (25),

ZIP VARCHAR (10)

);

CREATE TYPE USA_PHONE_TYPE AS (

PHONE_TYPE VARCHAR (5),

AREA_CODE CHAR (3),

PHONE_NUM CHAR (7)

);

(b) CREATE TYPE PERSON_TYPE AS (

NAME VARCHAR (35),

SEX CHAR,

BIRTH_DATE DATE,

PHONES USA_PHONE_TYPE ARRAY [4],

ADDR USA_ADDR_TYPE

INSTANTIABLE

NOT FINAL

REF IS SYSTEM GENERATED

INSTANCE METHOD AGE() RETURNS INTEGER;

CREATE INSTANCE METHOD AGE() RETURNS INTEGER

FOR PERSON_TYPE

BEGIN

RETURN /* CODE TO CALCULATE A PERSON’S AGE FROM

TODAY’S DATE AND SELF.BIRTH_DATE */

END;

);

(c) CREATE TYPE GRADE_TYPE AS (

COURSENO CHAR (8),

SEMESTER VARCHAR (8),

YEAR CHAR (4),

GRADE CHAR

);

CREATE TYPE STUDENT_TYPE UNDER PERSON_TYPE AS (

MAJOR_CODE CHAR (4),

STUDENT_ID CHAR (12),

DEGREE VARCHAR (5),

TRANSCRIPT GRADE_TYPE ARRAY [100] (continues)](https://image.slidesharecdn.com/fundamentalsofdatabasesystems6thedition-220615064515-a72cc74c/85/Fundamentals_of_Database_Systems-_6th_Edition-pdf-400-320.jpg)

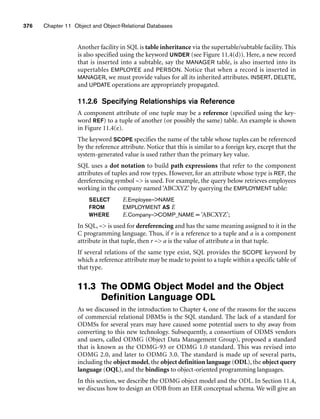

![11.2 Object-Relational Features: Object Database Extensions to SQL 373

by using the keyword ROW. For example, we could use the following instead of

declaring STREET_ADDR_TYPE as a separate type as in Figure 11.4(a):

CREATE TYPE USA_ADDR_TYPE AS (

STREET_ADDR ROW ( NUMBER VARCHAR (5),

STREET_NAME VARCHAR (25),

APT_NO VARCHAR (5),

SUITE_NO VARCHAR (5) ),

CITY VARCHAR (25),

ZIP VARCHAR (10)

);

To allow for collection types in order to create complex-structured objects, four

constructors are now included in SQL: ARRAY, MULTISET, LIST, and SET. These are

similar to the type constructors discussed in Section 11.1.3. In the initial specifica-

tion of SQL/Object, only the ARRAY type was specified, since it can be used to sim-

ulate the other types, but the three additional collection types were included in the

latest version of the SQL standard. In Figure 11.4(b), the PHONES attribute of

PERSON_TYPE has as its type an array whose elements are of the previously defined

UDT USA_PHONE_TYPE. This array has a maximum of four elements, meaning

that we can store up to four phone numbers per person. An array can also have no

maximum number of elements if desired.

An array type can have its elements referenced using the common notation of square

brackets. For example, PHONES[1] refers to the first location value in a PHONES

attribute (see Figure 11.4(b)). A built-in function CARDINALITY can return the cur-

rent number of elements in an array (or any other collection type). For example,

PHONES[CARDINALITY (PHONES)] refers to the last element in the array.

The commonly used dot notation is used to refer to components of a ROW TYPE or

a UDT. For example, ADDR.CITY refers to the CITY component of an ADDR attribute

(see Figure 11.4(b)).

11.2.2 Object Identifiers Using Reference Types

Unique system-generated object identifiers can be created via the reference type in

the latest version of SQL. For example, in Figure 11.4(b), the phrase:

REF IS SYSTEM GENERATED

indicates that whenever a new PERSON_TYPE object is created, the system will

assign it a unique system-generated identifier. It is also possible not to have a

system-generated object identifier and use the traditional keys of the basic relational

model if desired.

In general, the user can specify that system-generated object identifiers for the indi-

vidual rows in a table should be created. By using the syntax:

REF IS OID_ATTRIBUTE VALUE_GENERATION_METHOD ;](https://image.slidesharecdn.com/fundamentalsofdatabasesystems6thedition-220615064515-a72cc74c/85/Fundamentals_of_Database_Systems-_6th_Edition-pdf-402-320.jpg)

![11.5 The Object Query Language OQL 405

Note that query Q12 also illustrates how attribute, relationship, and operation

inheritance applies to queries. Although S is an iterator that ranges over the extent

GRAD_STUDENTS, we can write S.Majors_in because the Majors_in relationship is

inherited by GRAD_STUDENT from STUDENT via extends (see Figure 11.10). Finally,

to illustrate the exists quantifier, query Q13 answers the following question: Does

any graduate Computer Science major have a 4.0 GPA? Here, again, the operation gpa

is inherited by GRAD_STUDENT from STUDENT via extends.

Q13: exists G in

( select S

from S in GRAD_STUDENTS

where S.Majors_in.Dname = ‘Computer Science’ )

: G.Gpa = 4;

Ordered (Indexed) Collection Expressions. As we discussed in Section 11.3.3,

collections that are lists and arrays have additional operations, such as retrieving the

ith, first, and last elements. Additionally, operations exist for extracting a subcollec-

tion and concatenating two lists. Hence, query expressions that involve lists or

arrays can invoke these operations. We will illustrate a few of these operations using

sample queries. Q14 retrieves the last name of the faculty member who earns the

highest salary:

Q14: first ( select struct(facname: F.name.Lname, salary: F.Salary)

from F in FACULTY

order by salary desc );

Q14 illustrates the use of the first operator on a list collection that contains the

salaries of faculty members sorted in descending order by salary. Thus, the first ele-

ment in this sorted list contains the faculty member with the highest salary. This

query assumes that only one faculty member earns the maximum salary. The next

query, Q15, retrieves the top three Computer Science majors based on GPA.

Q15: ( select struct( last_name: S.name.Lname, first_name: S.name.Fname,