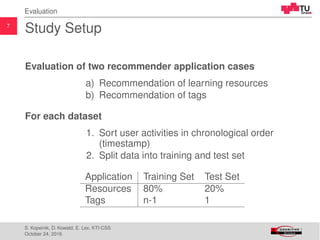

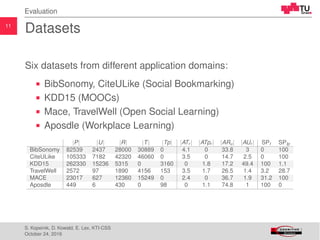

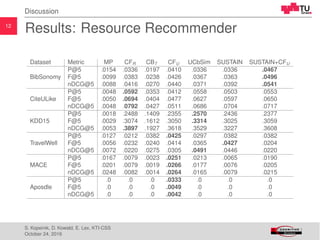

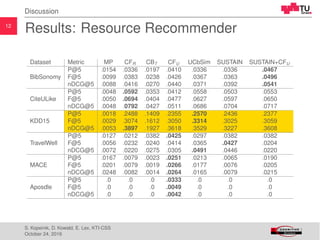

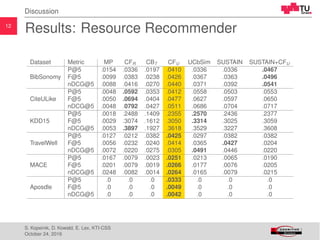

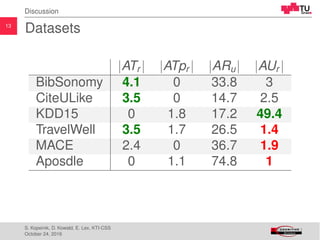

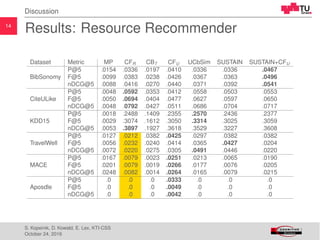

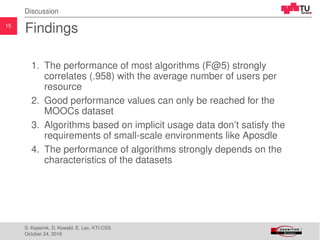

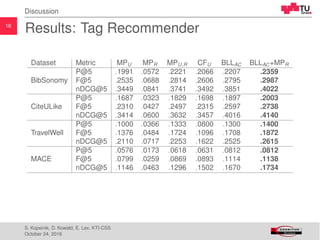

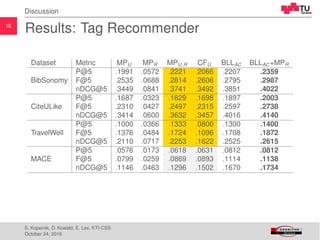

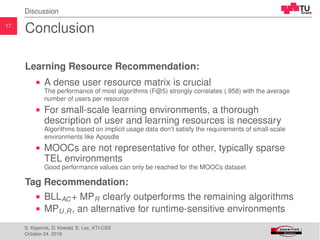

The document summarizes a comparative study of recommender systems in technology-enhanced learning (TEL). It compares the performance of various recommendation algorithms (e.g. most popular, collaborative filtering) on six TEL datasets. The results show that algorithm performance depends strongly on dataset characteristics, with the number of users per resource being crucial. A hybrid approach combining cognitive and popularity methods worked best for tag recommendations.

![3

Introduction

Recommender Systems (RS)

... are software components that suggest items of interest or of relevance to a user’s needs [Kon, 2004, Ricci et al., 2011].

Recommendations are related to decision making processes:

Ease information overload

Sales assistance

Popular examples: Amazon.com, YouTube, Netflix, Tripadvisor, Last.fm, ...

S. Kopeinik, D. Kowald, E. Lex, KTI-CSS

October 24, 2016](https://image.slidesharecdn.com/dc-pres-161024090130/85/EC-TEL-2016-Which-Algorithms-Suit-Which-Learning-Environments-3-320.jpg)

![4

Introduction

RS in Technology Enhanced Learning

... are adaptational tasks to fit the learner’s needs [Hämäläinen and Vinni, 2010].

Typical recommendation services include:

Peer recommendations

Activity recommendations

Learning resource recommendations

Tag recommendations

](https://image.slidesharecdn.com/dc-pres-161024090130/85/EC-TEL-2016-Which-Algorithms-Suit-Which-Learning-Environments-4-320.jpg)

![5

Introduction

Motivation

So far, there is no generally suggested or commonly applied recommender system in TEL [Khribi et al., 2015]

Learning data is sparse, especially in informal learning environments [Manouselis et al., 2011]

Available data varies greatly, but the implicit usage data that is available typically includes learner IDs, information about learning resources, and timestamps [Verbert et al., 2012]

Research Question 1

How accurately do state-of-the-art resource recommendation algorithms, using only implicit usage data, perform on different TEL datasets?

](https://image.slidesharecdn.com/dc-pres-161024090130/85/EC-TEL-2016-Which-Algorithms-Suit-Which-Learning-Environments-5-320.jpg)

![6

Introduction

Motivation

Lack of learning object meta-data

Shifting the task to the crowd [Bateman et al., 2007]

Tagging is a mechanism to collectively annotate learning objects [Xu et al., 2006]

fosters reflection and deep learning [Kuhn et al., 2012]

needs to be done regularly and thoroughly

Research Question 2

Which computationally inexpensive state-of-the-art tag recommendation algorithm performs best on TEL datasets?

](https://image.slidesharecdn.com/dc-pres-161024090130/85/EC-TEL-2016-Which-Algorithms-Suit-Which-Learning-Environments-6-320.jpg)

![8

Evaluation

Algorithms

Well-established, computationally inexpensive tag and resource recommendation strategies

Most Popular (MP) [Jäschke et al., 2007]

... counts frequency of occurrence

Collaborative Filtering (CF) [Schafer et al., 2007]

... calculates neighbourhood of users or items

Content-based Filtering (CB) [Basilico and Hofmann, 2004]

... calculates similarity of user profiles and item content

](https://image.slidesharecdn.com/dc-pres-161024090130/85/EC-TEL-2016-Which-Algorithms-Suit-Which-Learning-Environments-8-320.jpg)
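The two simplest strategies on this slide, Most Popular and user-based Collaborative Filtering, can be sketched in a few lines of Python. This is an illustrative sketch only, not the implementation evaluated in the study: the function names, the `(user, resource)` input format, the Jaccard similarity and the neighbourhood size are all assumptions made for the example.

```python
from collections import Counter, defaultdict


def most_popular(interactions, k=3):
    """MP: rank resources by how often they occur in the interaction log."""
    counts = Counter(resource for _, resource in interactions)
    return [resource for resource, _ in counts.most_common(k)]


def user_based_cf(interactions, target_user, k=3, n_neighbours=2):
    """CF: score unseen resources by the similarity of the users who used them.

    Similarity is Jaccard overlap of resource sets (an assumption for this sketch).
    """
    profiles = defaultdict(set)
    for user, resource in interactions:
        profiles[user].add(resource)
    target = profiles[target_user]

    def jaccard(a, b):
        union = a | b
        return len(a & b) / len(union) if union else 0.0

    # Keep the n most similar other users as the neighbourhood.
    neighbours = sorted(
        ((jaccard(target, items), user)
         for user, items in profiles.items() if user != target_user),
        reverse=True,
    )[:n_neighbours]

    scores = Counter()
    for similarity, user in neighbours:
        for resource in profiles[user] - target:
            scores[resource] += similarity
    return [r for r, s in scores.most_common(k) if s > 0]


# Hypothetical implicit usage data: (learner id, resource id) pairs.
interactions = [
    ("u1", "r1"), ("u1", "r2"),
    ("u2", "r1"), ("u2", "r2"), ("u2", "r3"),
    ("u3", "r4"), ("u3", "r1"),
]
popular = most_popular(interactions, k=2)
for_u1 = user_based_cf(interactions, "u1")
```

With this toy log, MP returns the globally most used resources regardless of the learner, while CF returns resources that `u1` has not seen but similar learners have used.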

![9

Evaluation

Algorithms

Approaches that have been suggested in the context of TEL

Usage Context-based Similarity (UCbSim) [Niemann and Wolpers, 2013]

... calculates item similarities based on co-occurrences in user sessions

Base Level Learning Equation (BLLAC) [Kowald et al., 2015]

... mimics human semantic memory retrieval as a function of recency and frequency of tag use

Sustain [Seitlinger et al., 2015]

... simulates category learning as a dynamic clustering approach

](https://image.slidesharecdn.com/dc-pres-161024090130/85/EC-TEL-2016-Which-Algorithms-Suit-Which-Learning-Environments-9-320.jpg)
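To make the "recency and frequency" idea concrete, the base-level learning equation from the ACT-R cognitive architecture scores an item i as B_i = ln(Σ_j (t_ref − t_j)^(−d)), summing over the item's past uses j. The sketch below applies that equation to one learner's tag history. It covers only this recency/frequency part and is not the BLLAC implementation from the study: the function name, the input format, the decay value `d = 0.5` and the clamping of very small ages are assumptions for illustration.

```python
import math
from collections import defaultdict


def bll_scores(tag_uses, now, d=0.5):
    """Score each tag with the base-level learning equation.

    tag_uses: iterable of (tag, timestamp) pairs for a single user.
    now:      reference time in the same unit as the timestamps.
    d:        decay exponent (0.5 is assumed here).
    """
    sums = defaultdict(float)
    for tag, timestamp in tag_uses:
        # Clamp the age to at least one time unit so the power is defined.
        age = max(now - timestamp, 1.0)
        sums[tag] += age ** -d
    return {tag: math.log(total) for tag, total in sums.items()}


# Hypothetical tag history: "python" was used twice and recently,
# "java" once and long ago.
uses = [("python", 90), ("python", 99), ("java", 36)]
scores = bll_scores(uses, now=100)
ranked = sorted(scores, key=scores.get, reverse=True)
```

Tags used often and recently receive higher scores, so `ranked` lists the tags in the order in which they would be suggested to this learner.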

![10

Evaluation

Metrics [Marinho et al., 2012, Sakai, 2007]

Recall (R)

The proportion of correctly recommended items to all items relevant to the user.

Precision (P)

The proportion of correctly recommended items to all recommended items.

F-measure (F)

The harmonic mean of R and P.

Normalized Discounted Cumulative Gain (nDCG)

A ranking quality metric that calculates usefulness scores of items based on relevance and position.

](https://image.slidesharecdn.com/dc-pres-161024090130/85/EC-TEL-2016-Which-Algorithms-Suit-Which-Learning-Environments-10-320.jpg)
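The four metrics can be computed for a single ranked recommendation list as sketched below. This is a minimal sketch assuming binary relevance (an item is either relevant or not) and a list without duplicates; the function names are made up for the example, and the exact nDCG variant used in the study may differ.

```python
import math


def precision_recall_f(recommended, relevant):
    """Return (precision, recall, F-measure) for one recommendation list."""
    relevant = set(relevant)
    hits = sum(1 for item in recommended if item in relevant)
    precision = hits / len(recommended) if recommended else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f_measure = 2 * precision * recall / (precision + recall)
    return precision, recall, f_measure


def ndcg(recommended, relevant):
    """Normalized DCG with binary relevance and a log2 position discount."""
    relevant = set(relevant)
    dcg = sum(
        1.0 / math.log2(rank + 1)
        for rank, item in enumerate(recommended, start=1)
        if item in relevant
    )
    # Ideal ranking: all relevant items that fit are placed at the top.
    ideal_hits = min(len(relevant), len(recommended))
    idcg = sum(1.0 / math.log2(rank + 1) for rank in range(1, ideal_hits + 1))
    return dcg / idcg if idcg else 0.0


# Hypothetical example: four recommendations, three relevant items.
recommended = ["a", "b", "c", "d"]
relevant = {"a", "c", "e"}
p, r, f = precision_recall_f(recommended, relevant)
n = ndcg(recommended, relevant)
```

Here two of the four recommended items are relevant, so precision is 2/4 and recall is 2/3. nDCG additionally rewards the hit at rank 1 more than the hit at rank 3.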

![19

Discussion

References I

[Kon, 2004] (2004). Introduction to recommender systems: Algorithms and evaluation. ACM Trans. Inf. Syst., 22(1):1–4.

[Basilico and Hofmann, 2004] Basilico, J. and Hofmann, T. (2004). Unifying collaborative and content-based filtering. In Proc. of ICML’04, page 9. ACM.

[Bateman et al., 2007] Bateman, S., Brooks, C., McCalla, G., and Brusilovsky, P. (2007). Applying collaborative tagging to e-learning. In Proceedings of the 16th international world wide web conference (WWW2007).

[Hämäläinen and Vinni, 2010] Hämäläinen, W. and Vinni, M. (2010). Classifiers for educational data mining. Handbook of Educational Data Mining, Chapman & Hall/CRC Data Mining and Knowledge Discovery Series, pages 57–71.

[Jäschke et al., 2007] Jäschke, R., Marinho, L., Hotho, A., Schmidt-Thieme, L., and Stumme, G. (2007). Tag recommendations in folksonomies. In Proc. of PKDD’07, pages 506–514. Springer.

](https://image.slidesharecdn.com/dc-pres-161024090130/85/EC-TEL-2016-Which-Algorithms-Suit-Which-Learning-Environments-24-320.jpg)

![20

Discussion

References II

[Khribi et al., 2015] Khribi, M. K., Jemni, M., and Nasraoui, O. (2015). Recommendation systems for personalized technology-enhanced learning. In Ubiquitous Learning Environments and Technologies, pages 159–180. Springer.

[Kowald et al., 2015] Kowald, D., Kopeinik, S., Seitlinger, P., Ley, T., Albert, D., and Trattner, C. (2015). Refining frequency-based tag reuse predictions by means of time and semantic context. In Mining, Modeling, and Recommending ‘Things’ in Social Media, pages 55–74. Springer.

[Kuhn et al., 2012] Kuhn, A., McNally, B., Schmoll, S., Cahill, C., Lo, W.-T., Quintana, C., and Delen, I. (2012). How students find, evaluate and utilize peer-collected annotated multimedia data in science inquiry with Zydeco. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, pages 3061–3070. ACM.

[Manouselis et al., 2011] Manouselis, N., Drachsler, H., Vuorikari, R., Hummel, H., and Koper, R. (2011). Recommender systems in technology enhanced learning. In Recommender systems handbook, pages 387–415. Springer.

](https://image.slidesharecdn.com/dc-pres-161024090130/85/EC-TEL-2016-Which-Algorithms-Suit-Which-Learning-Environments-25-320.jpg)

![21

Discussion

References III

[Marinho et al., 2012] Marinho, L. B., Hotho, A., Jäschke, R., Nanopoulos, A., Rendle, S., Schmidt-Thieme, L., Stumme, G., and Symeonidis, P. (2012). Recommender systems for social tagging systems. Springer Science & Business Media.

[Niemann and Wolpers, 2013] Niemann, K. and Wolpers, M. (2013). Usage context-boosted filtering for recommender systems in TEL. In Scaling up Learning for Sustained Impact, pages 246–259. Springer.

[Ricci et al., 2011] Ricci, F., Rokach, L., and Shapira, B. (2011). Introduction to recommender systems handbook. Springer.

[Sakai, 2007] Sakai, T. (2007). On the reliability of information retrieval metrics based on graded relevance. Information processing & management, 43(2):531–548.

](https://image.slidesharecdn.com/dc-pres-161024090130/85/EC-TEL-2016-Which-Algorithms-Suit-Which-Learning-Environments-26-320.jpg)

![22

Discussion

References IV

[Schafer et al., 2007] Schafer, J. B., Frankowski, D., Herlocker, J., and Sen, S. (2007). Collaborative filtering recommender systems. In The adaptive web, pages 291–324. Springer.

[Seitlinger et al., 2015] Seitlinger, P., Kowald, D., Kopeinik, S., Hasani-Mavriqi, I., Ley, T., and Lex, E. (2015). Attention please! A hybrid resource recommender mimicking attention-interpretation dynamics. arXiv preprint arXiv:1501.07716.

[Verbert et al., 2012] Verbert, K., Manouselis, N., Drachsler, H., and Duval, E. (2012). Dataset-driven research to support learning and knowledge analytics. Educational Technology & Society, 15(3):133–148.

[Xu et al., 2006] Xu, Z., Fu, Y., Mao, J., and Su, D. (2006). Towards the semantic web: Collaborative tag suggestions. In Collaborative web tagging workshop at WWW2006, Edinburgh, Scotland.

](https://image.slidesharecdn.com/dc-pres-161024090130/85/EC-TEL-2016-Which-Algorithms-Suit-Which-Learning-Environments-27-320.jpg)