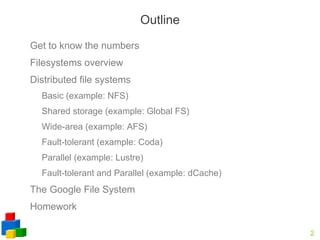

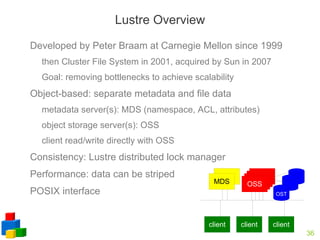

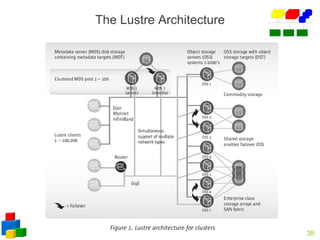

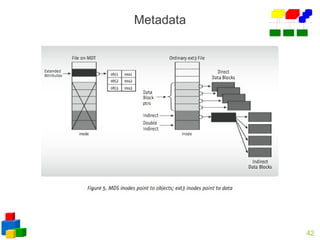

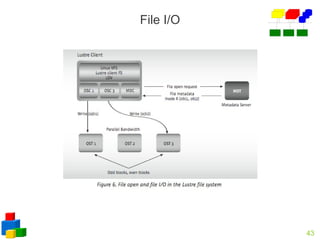

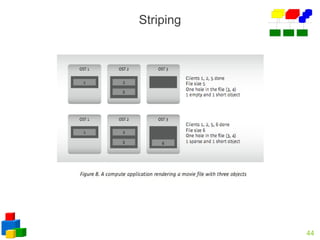

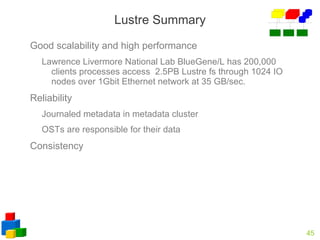

The document gives an overview of distributed file systems, including NFS, AFS, Lustre, and others, and discusses scalability, consistency, caching, replication, and fault tolerance. Lustre is highlighted as a distributed file system that aims to remove bottlenecks and scale well through an object-based design, in which metadata servers are kept separate from the storage servers that hold file data.
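
To make the idea of separate metadata and storage servers concrete, here is a minimal in-memory sketch in Python. It is not Lustre's actual protocol or API: the class names (`MetadataServer`, `ObjectServer`, `Layout`), the round-robin striping, and the stripe size are all assumptions chosen for illustration. The point it shows is that the metadata server only hands out a file's layout, while the client moves data directly to and from the storage servers, so the metadata server stays off the data path.

```python
from dataclasses import dataclass, field


@dataclass
class ObjectServer:
    """Hypothetical storage server: holds opaque objects keyed by object ID."""
    objects: dict = field(default_factory=dict)

    def write(self, obj_id: int, offset: int, data: bytes) -> None:
        buf = self.objects.setdefault(obj_id, bytearray())
        end = offset + len(data)
        if len(buf) < end:
            buf.extend(b"\0" * (end - len(buf)))  # grow the object if needed
        buf[offset:end] = data

    def read(self, obj_id: int, offset: int, length: int) -> bytes:
        return bytes(self.objects.get(obj_id, b"")[offset:offset + length])


@dataclass
class Layout:
    """Where a file's data lives: one (server index, object ID) per stripe."""
    stripe_size: int
    targets: list


class MetadataServer:
    """Hypothetical metadata server: stores names and layouts, never file data."""

    def __init__(self, n_servers: int, stripe_size: int):
        self.n_servers = n_servers
        self.stripe_size = stripe_size
        self.layouts = {}
        self.next_obj_id = 0

    def create(self, path: str, stripe_count: int) -> Layout:
        targets = []
        for i in range(stripe_count):
            targets.append((i % self.n_servers, self.next_obj_id))
            self.next_obj_id += 1
        self.layouts[path] = Layout(self.stripe_size, targets)
        return self.layouts[path]

    def lookup(self, path: str) -> Layout:
        return self.layouts[path]


def _locate(layout: Layout, chunk_no: int):
    """Map a chunk number to (server index, object ID, offset within object)."""
    server_idx, obj_id = layout.targets[chunk_no % len(layout.targets)]
    obj_offset = (chunk_no // len(layout.targets)) * layout.stripe_size
    return server_idx, obj_id, obj_offset


def write_file(layout: Layout, servers: list, data: bytes) -> None:
    """Client-side write: chunks go round-robin straight to the storage servers."""
    for chunk_no, start in enumerate(range(0, len(data), layout.stripe_size)):
        server_idx, obj_id, obj_offset = _locate(layout, chunk_no)
        servers[server_idx].write(obj_id, obj_offset, data[start:start + layout.stripe_size])


def read_file(layout: Layout, servers: list, length: int) -> bytes:
    """Client-side read: reassemble the file from its stripes."""
    out = bytearray()
    for chunk_no, start in enumerate(range(0, length, layout.stripe_size)):
        server_idx, obj_id, obj_offset = _locate(layout, chunk_no)
        n = min(layout.stripe_size, length - start)
        out += servers[server_idx].read(obj_id, obj_offset, n)
    return bytes(out)
```

In this sketch a client calls `MetadataServer.create` or `lookup` once per file and then talks only to the `ObjectServer` instances, which is why adding storage servers can add bandwidth without adding load on the metadata server.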