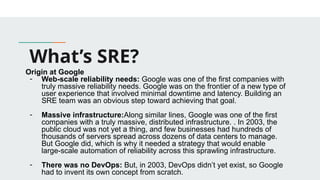

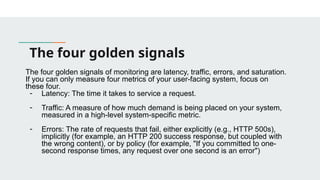

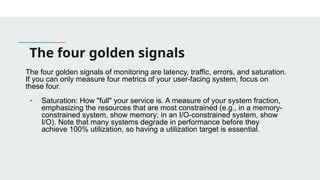

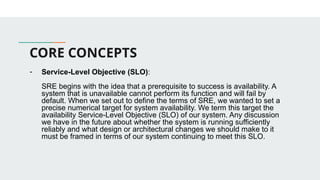

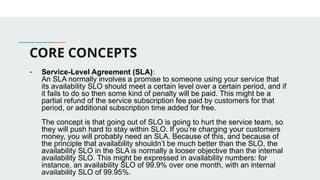

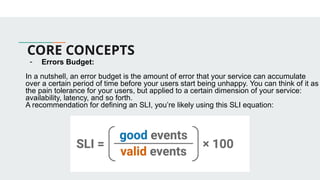

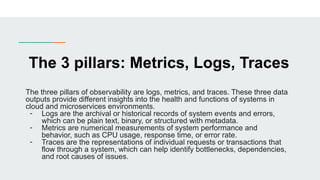

This presentation introduces the key principles of DevOps and Site Reliability Engineering (SRE). Learn how modern teams use automation, monitoring, CI/CD, and reliability practices to build scalable, resilient systems. It is ideal for beginners and professionals who want to understand the culture, tools, and mindset behind high-performing tech teams.