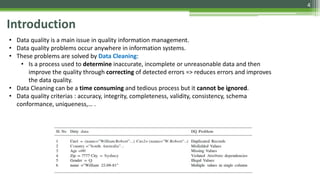

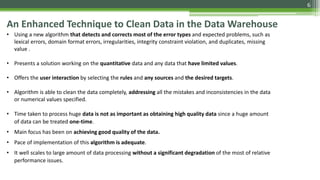

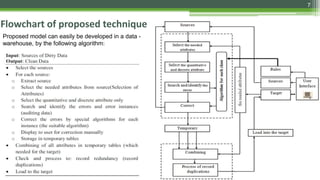

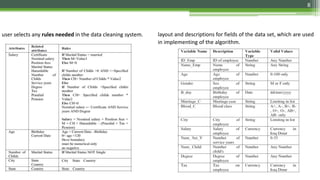

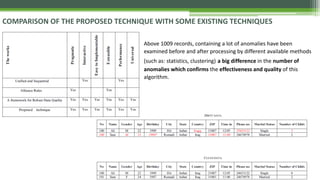

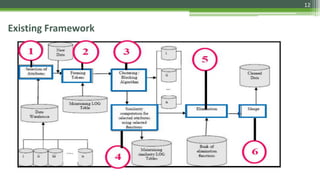

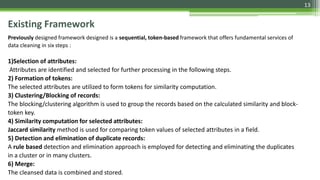

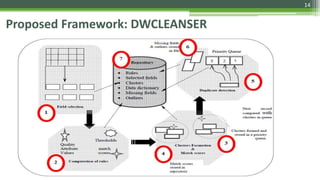

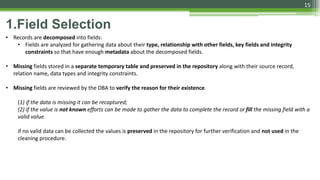

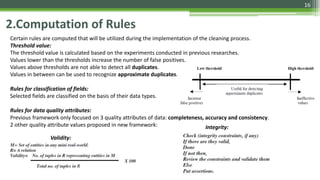

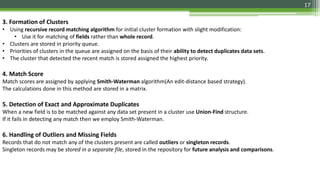

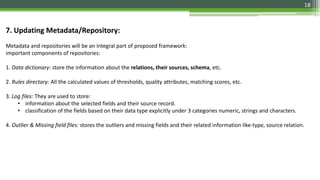

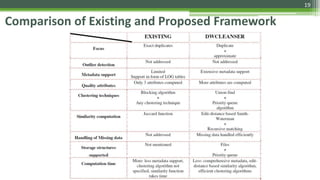

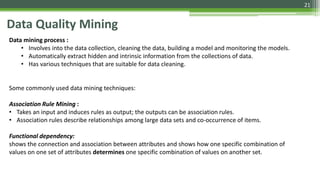

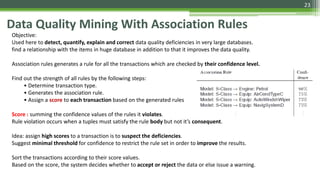

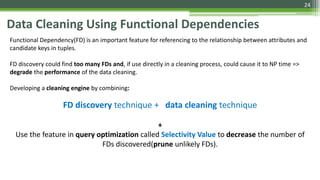

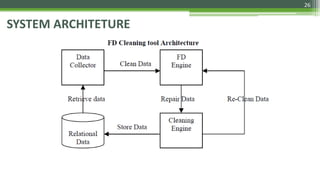

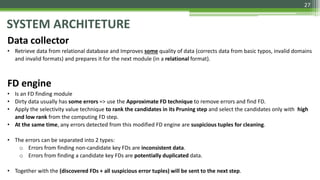

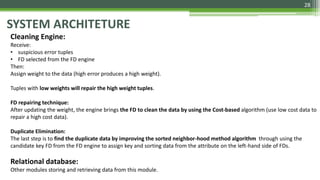

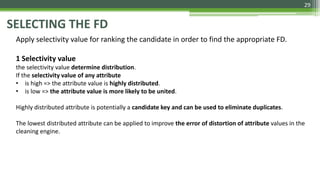

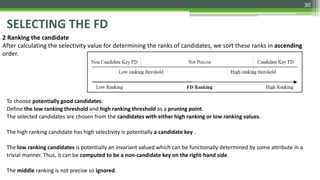

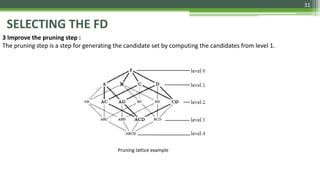

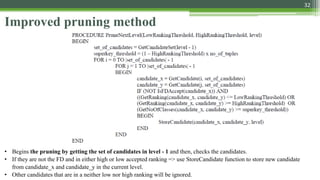

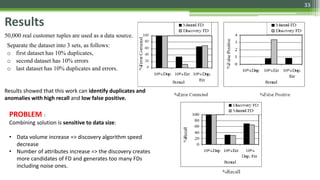

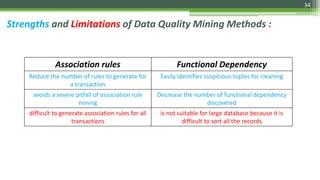

This document surveys data cleaning techniques for ensuring data quality in information systems. The methods covered include DWcleanser, which detects approximate duplicates, and data quality mining, which uses association rules and functional dependencies. It also presents an enhanced algorithm for cleaning data warehouses that focuses on correcting errors accurately and efficiently, along with a systematic approach to managing metadata and outlier records. The frameworks and techniques described aim to address common data quality issues while minimizing processing time and improving overall data integrity.
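
To make the idea of approximate duplicate detection concrete, here is a minimal Python sketch. It is not DWcleanser or the enhanced algorithm mentioned above: it assumes a generic approach in which each record is normalized to a single string and every pair is compared with the standard library's `difflib.SequenceMatcher`. The function names, the sample records, and the 0.85 threshold are all hypothetical choices made for illustration.

```python
from difflib import SequenceMatcher
from itertools import combinations


def normalize(record):
    """Lowercase, trim, and collapse whitespace in every field, then join them."""
    return " ".join(" ".join(str(field).lower().split()) for field in record)


def similarity(a, b):
    """Similarity ratio in [0, 1] between two normalized record strings."""
    return SequenceMatcher(None, a, b).ratio()


def find_approximate_duplicates(records, threshold=0.85):
    """Return index pairs (i, j), i < j, whose similarity meets the threshold.

    Every pair is compared, so this is O(n^2) and only suitable for small inputs.
    The threshold is an assumed value, not one taken from the source.
    """
    keys = [normalize(r) for r in records]
    return [
        (i, j)
        for i, j in combinations(range(len(keys)), 2)
        if similarity(keys[i], keys[j]) >= threshold
    ]


# Hypothetical sample data: the first two records differ by a single character.
records = [
    ("John Smith", "12 Main Street", "Springfield"),
    ("Jon Smith", "12 Main Street", "Springfield"),
    ("Alice Jones", "98 Oak Avenue", "Riverton"),
]
pairs = find_approximate_duplicates(records)
print(pairs)
```

A pairwise comparison like this does not scale to warehouse-sized tables; practical systems usually sort or block records first so that only likely candidates are compared, which is one way to keep processing time down.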