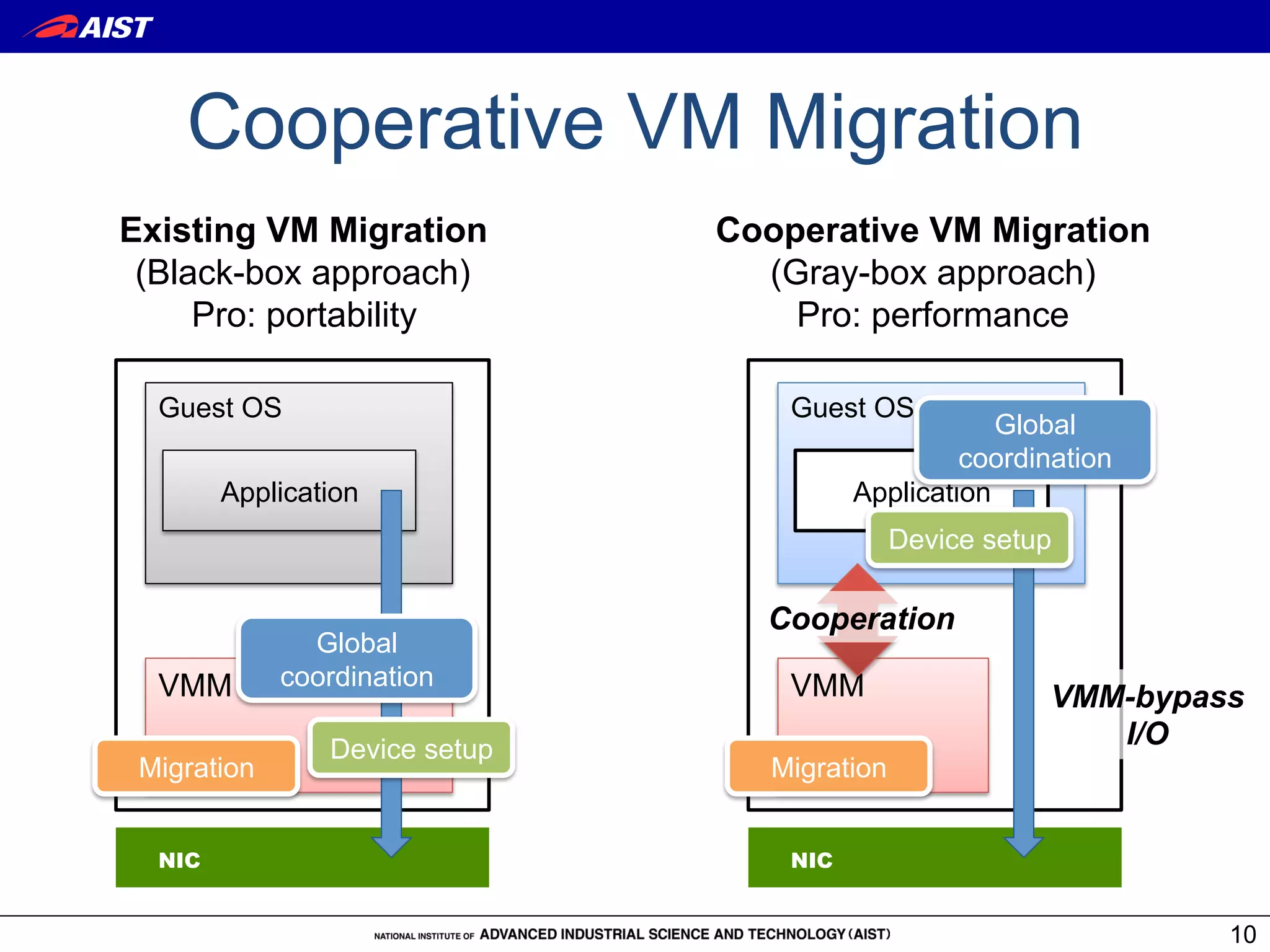

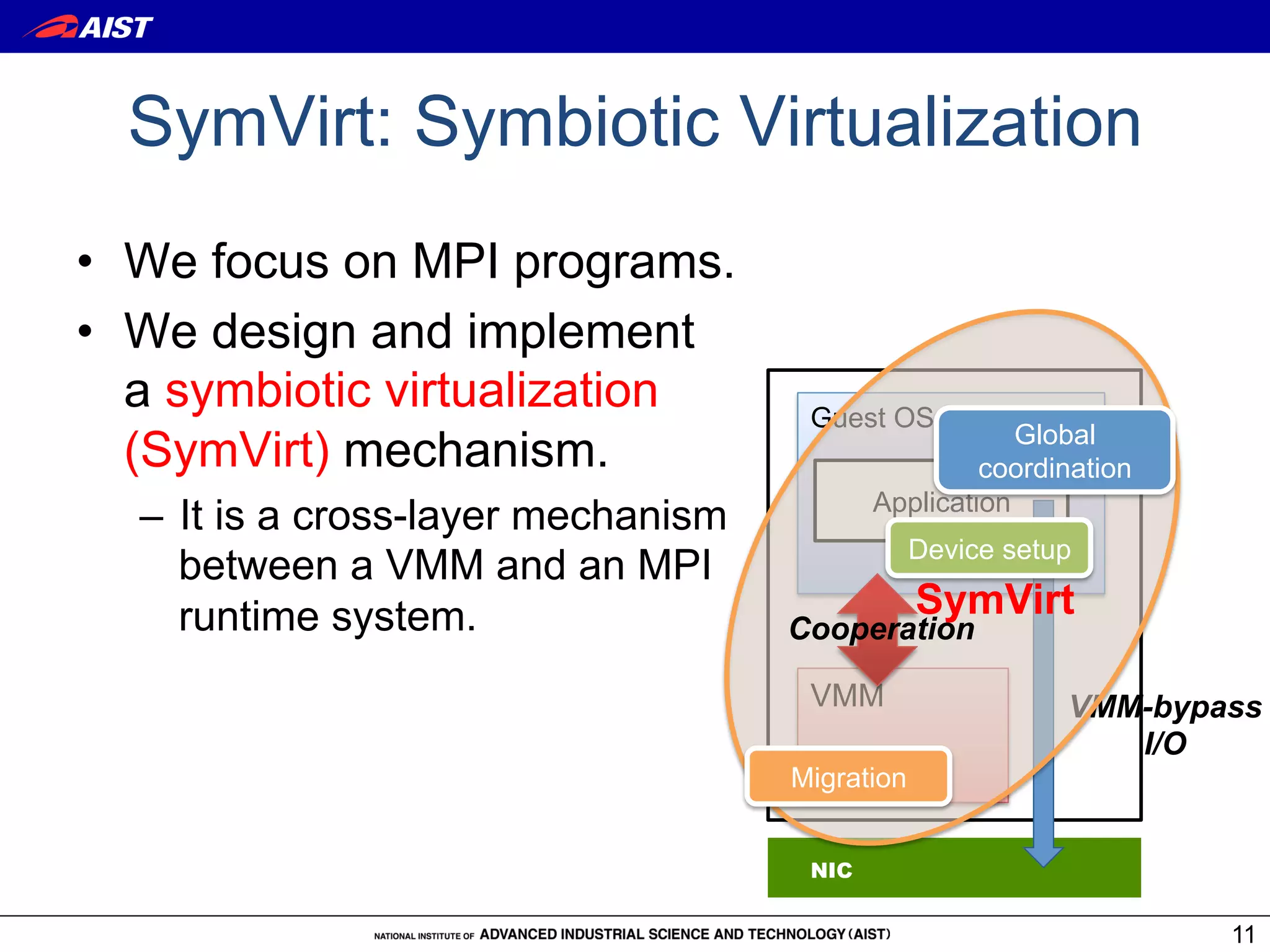

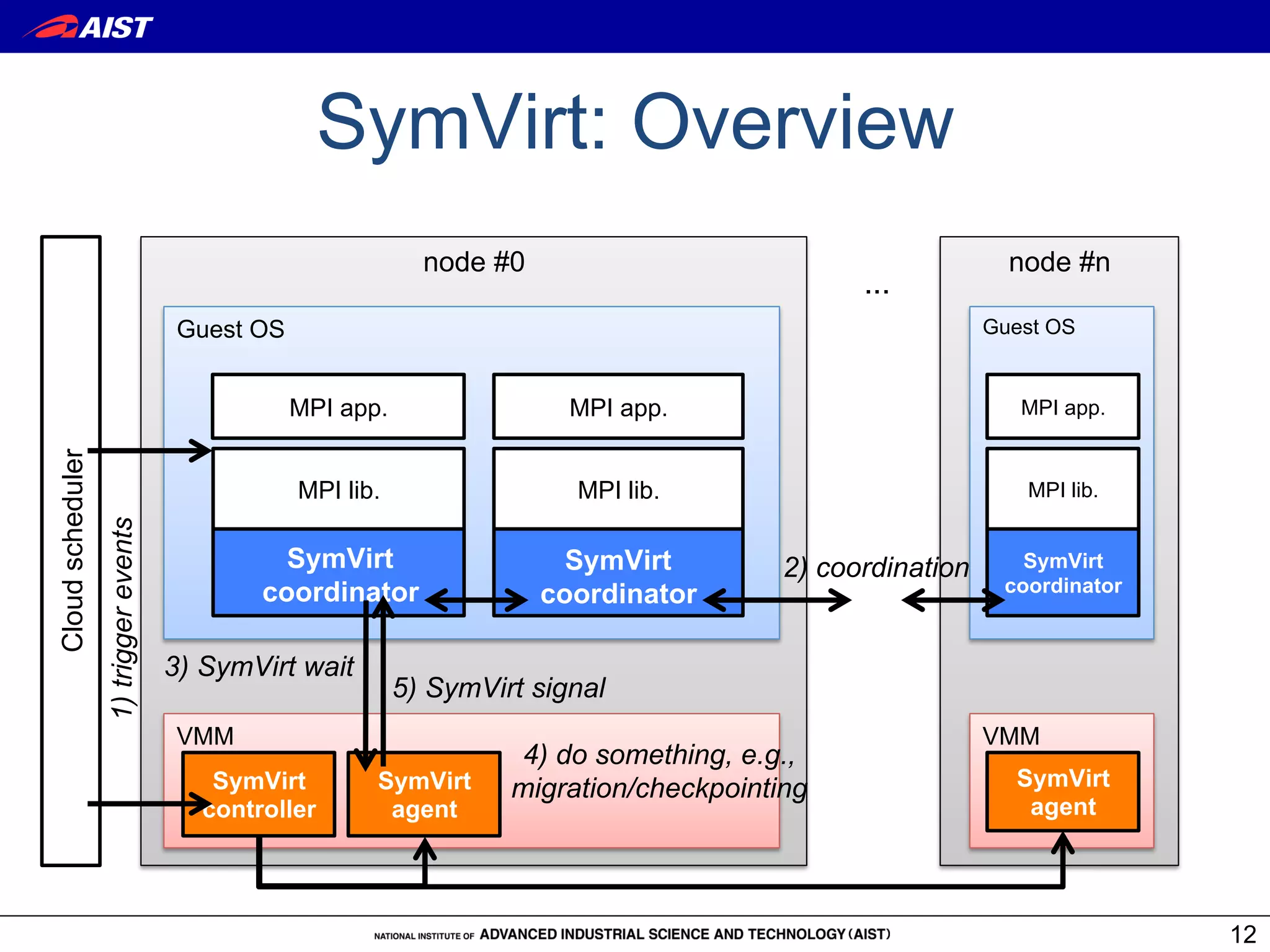

1) Cooperative VM migration allows live migration of VMs with VMM-bypass I/O devices like InfiniBand adapters.

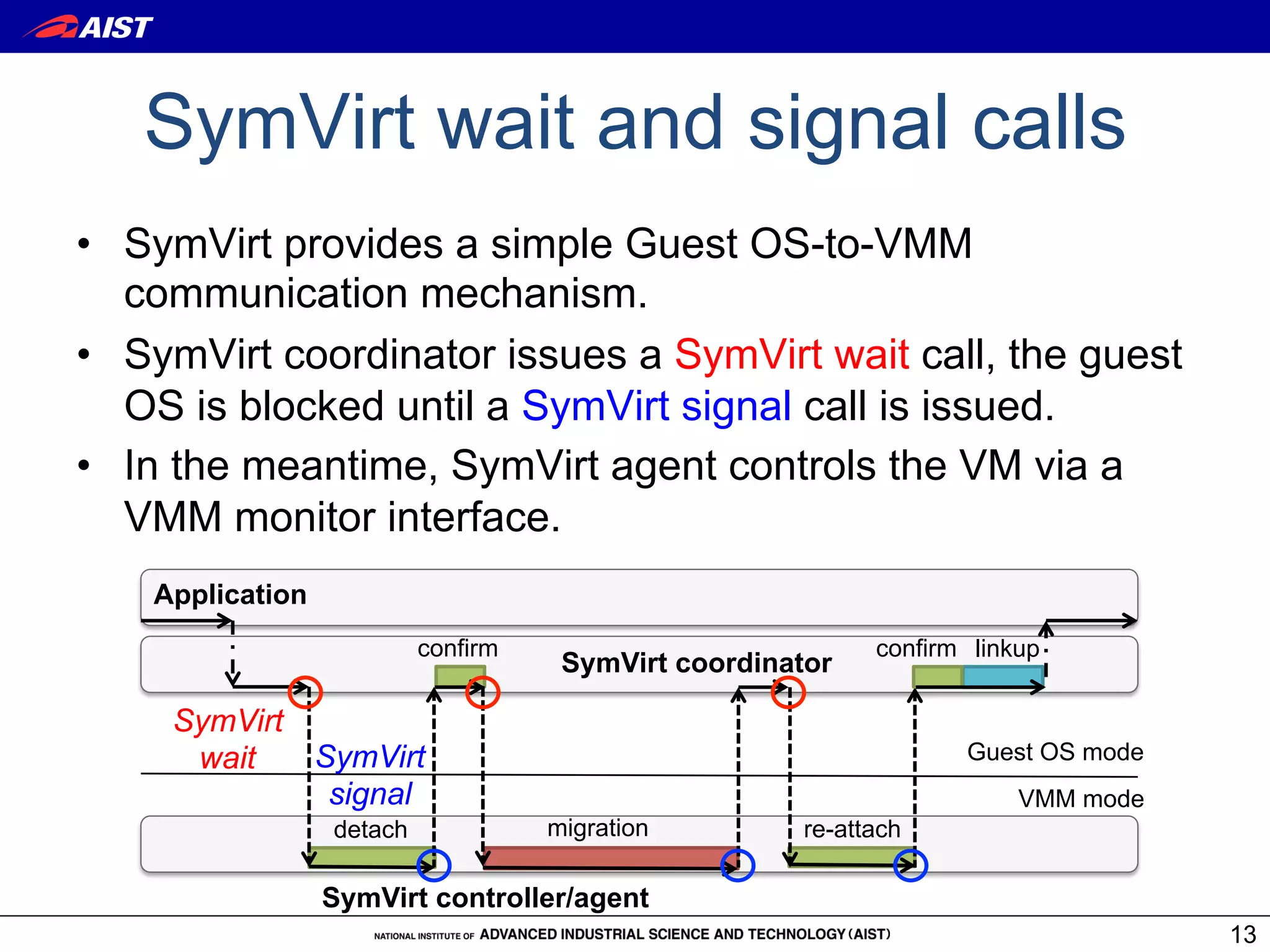

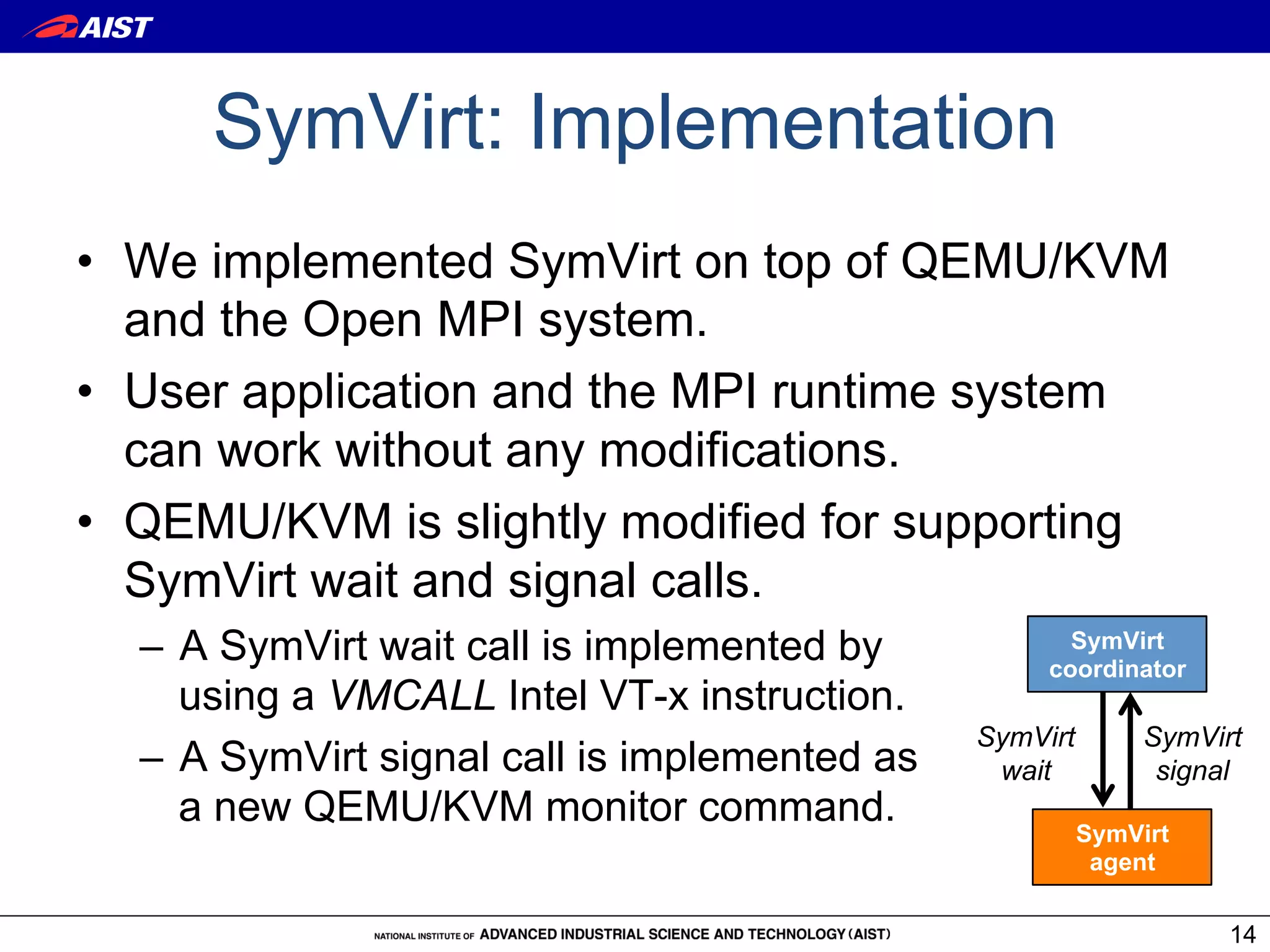

2) SymVirt enables coordination between the guest OS and VMM to safely detach and reattach devices during migration.

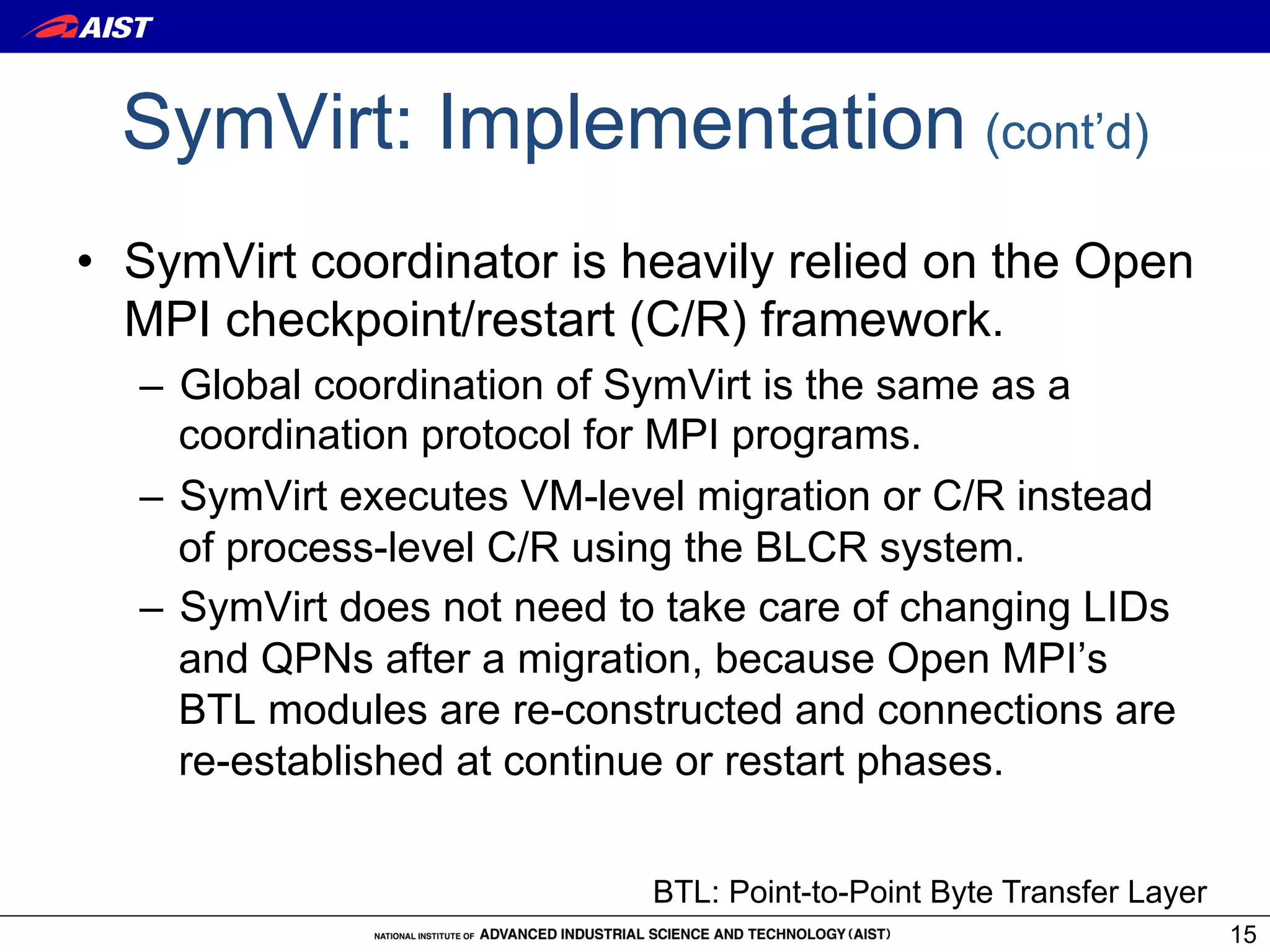

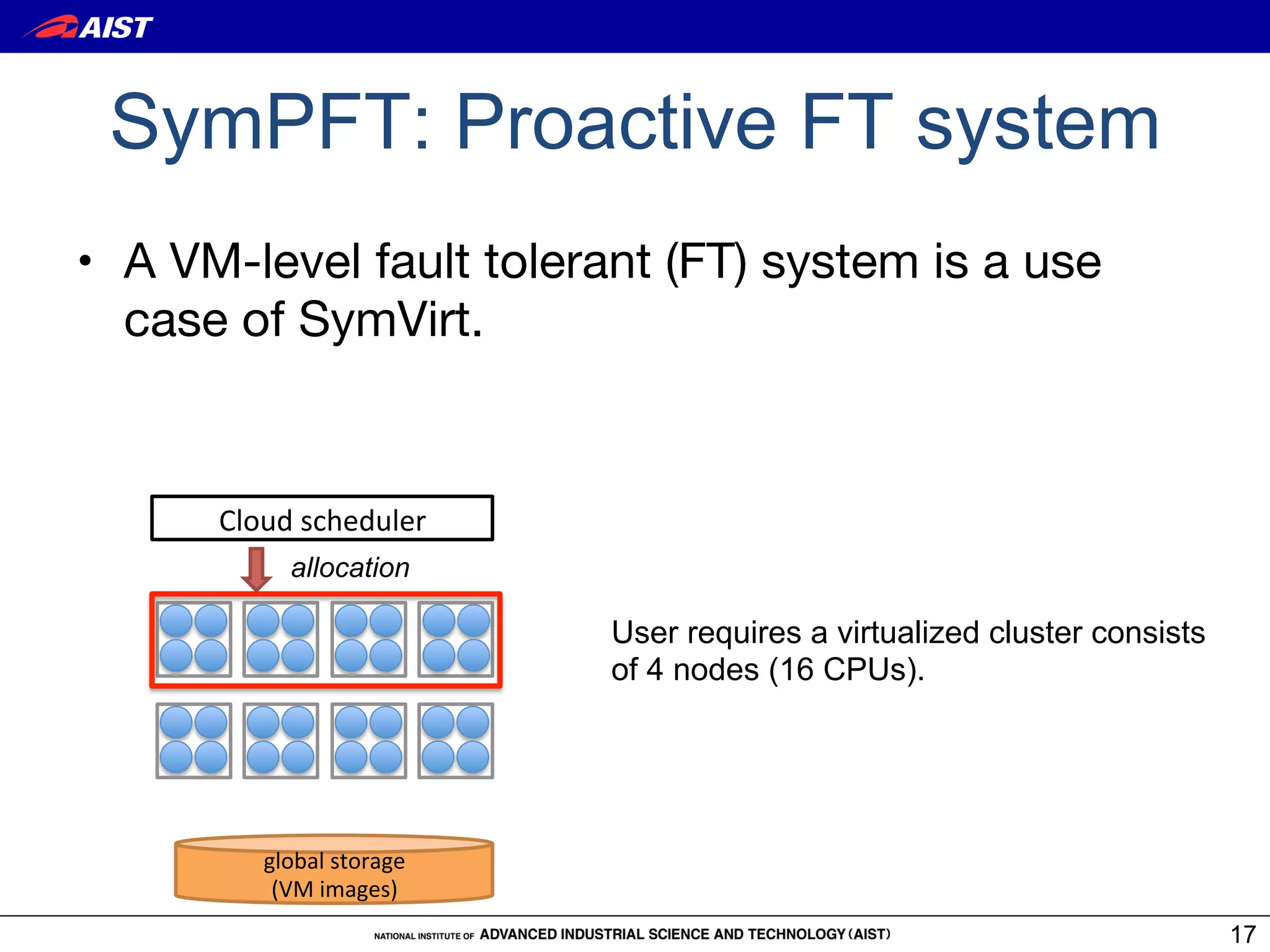

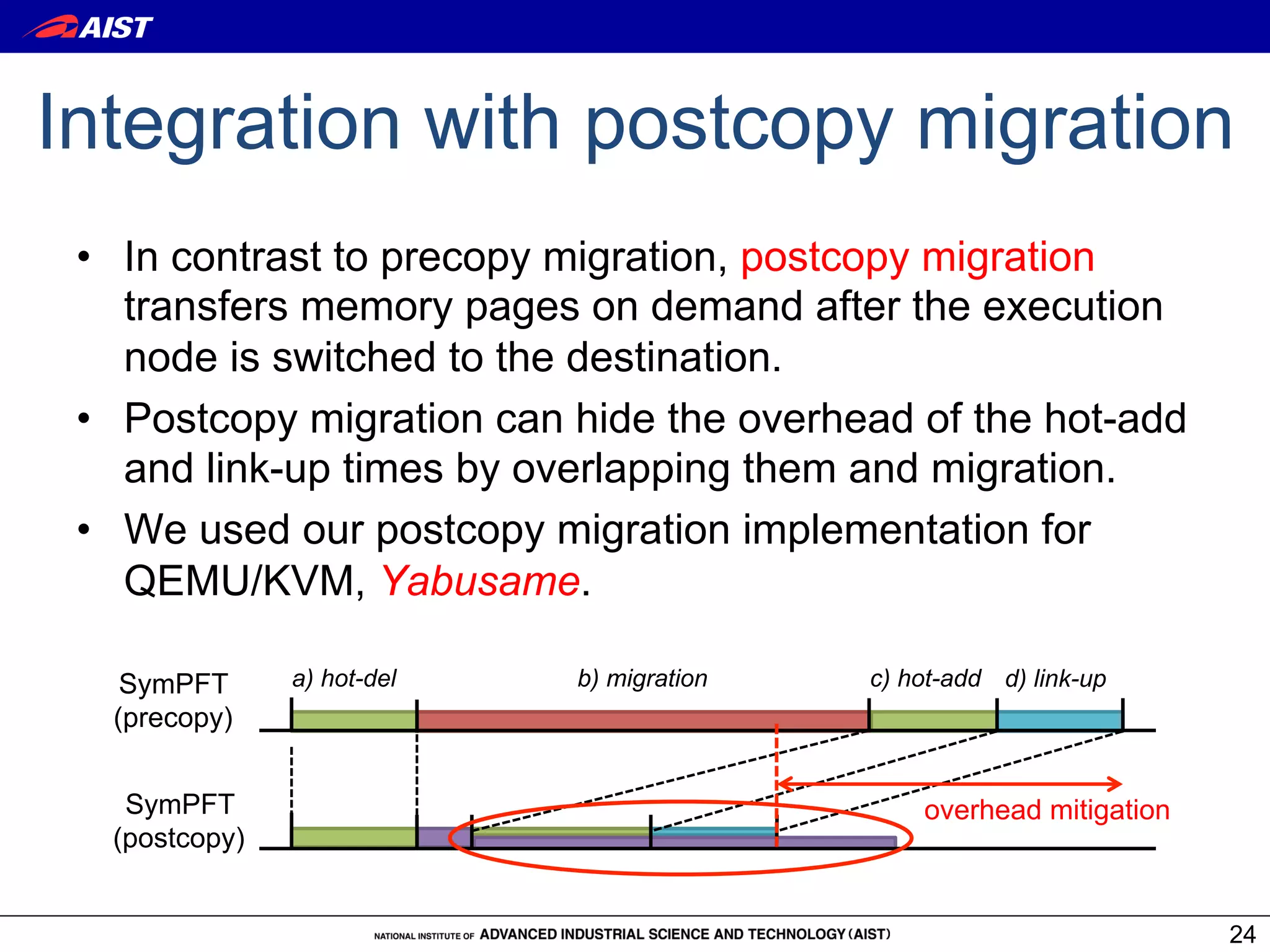

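3) Experiments on an InfiniBand cluster show that SymVirt enables fault-tolerant live migration of HPC workloads with no overhead during normal operation; the cost of a migration itself is proportional to the memory footprint. Postcopy migration further reduces that cost by overlapping device hotplug and link-up with the memory transfer.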

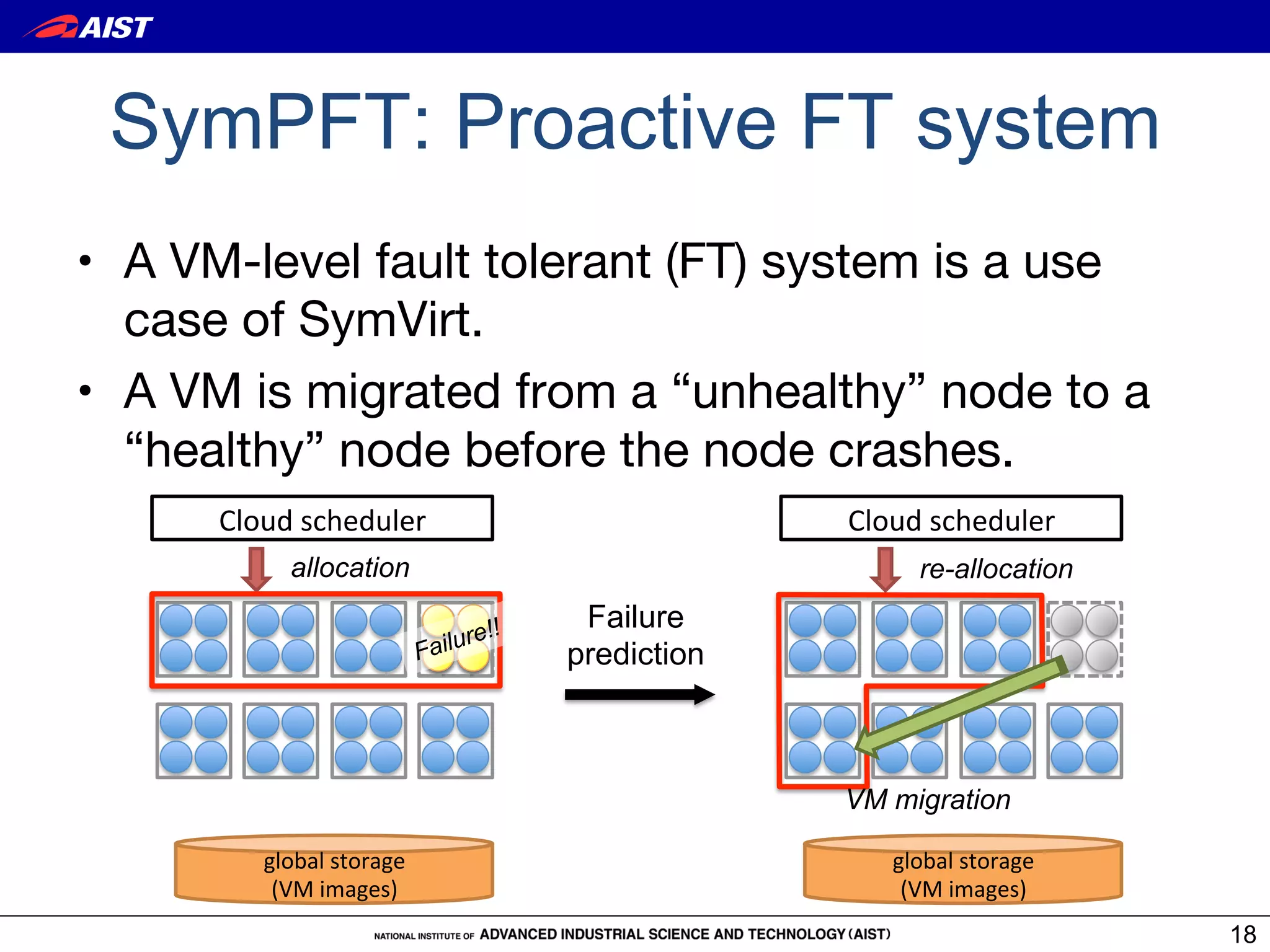

![Motivating Observation](https://image.slidesharecdn.com/esci2012-takano-121011145155-phpapp02/75/Cooperative-VM-Migration-for-a-virtualized-HPC-Cluster-with-VMM-bypass-I-O-devices-5-2048.jpg)

Motivating Observation

• Performance evaluation of HPC cloud

– (Para-)virtualized I/O incurs a large overhead.

– PCI passthrough significantly mitigates the overhead.

[Diagram: with KVM (IB), the guest OS in VM1 runs the physical driver and accesses the IB QDR HCA directly; with KVM (virtio), the guest driver in VM1 goes through the physical driver in the VMM to reach the 10GbE NIC.]

[Figure: the overhead of I/O virtualization on the NAS Parallel Benchmarks 3.3.1, class C, 64 processes. Execution time in seconds (axis 0 to 300) for BT, CG, EP, FT, and LU under BMM (IB), BMM (10GbE), KVM (IB), and KVM (virtio). BMM: Bare Metal Machine.]

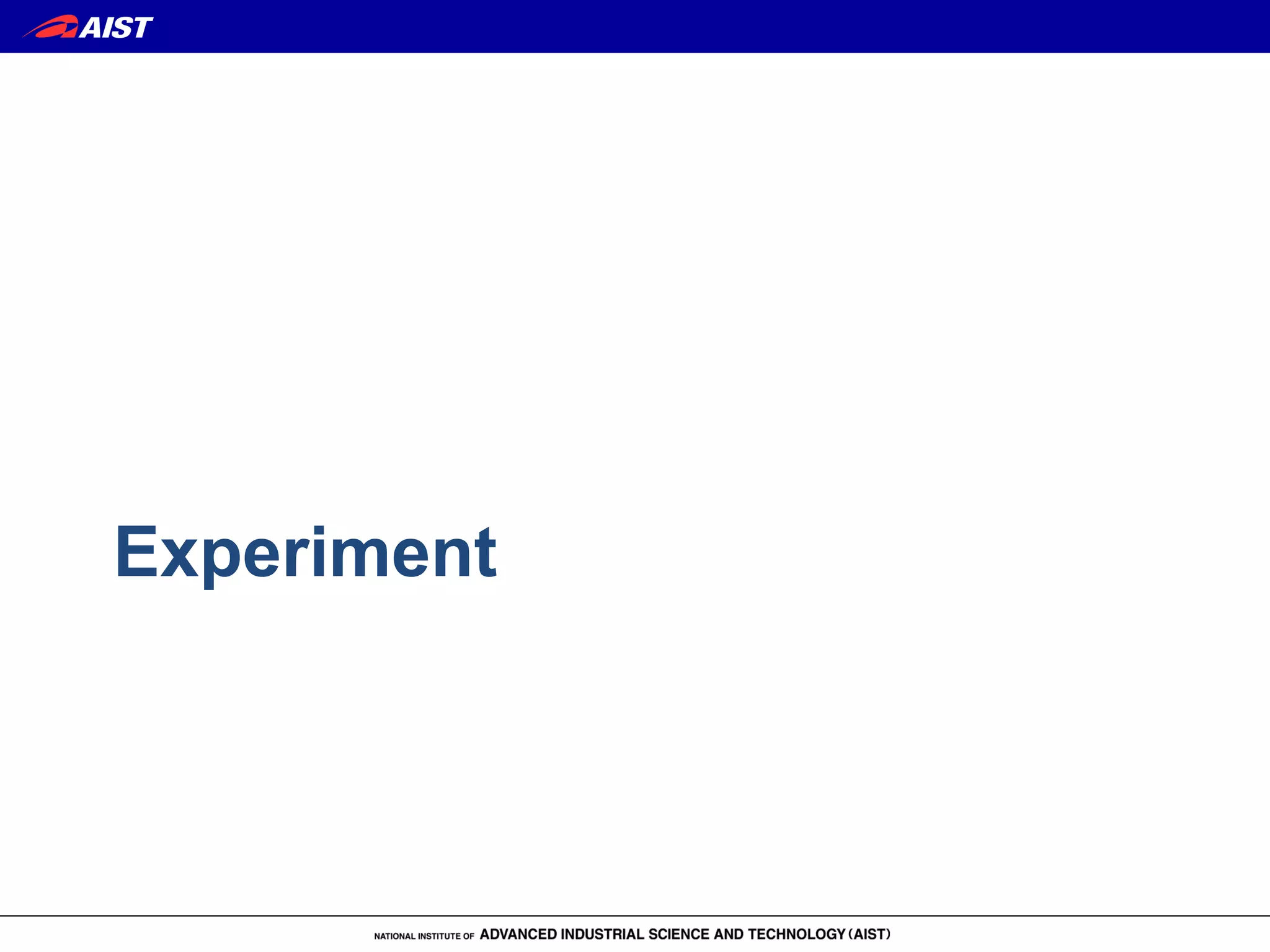

![SymVirt: Implementation (cont’d)](https://image.slidesharecdn.com/esci2012-takano-121011145155-phpapp02/75/Cooperative-VM-Migration-for-a-virtualized-HPC-Cluster-with-VMM-bypass-I-O-devices-16-2048.jpg)

SymVirt: Implementation (cont’d)

• SymVirt controller and agent are written in Python.

    import symvirt

    # migrate_from, migrate_to, and migrate_port are not defined on the slide.
    agent_list = [migrate_from]
    ctl = symvirt.Controller(agent_list)

    # device detach
    ctl.wait_all()
    kwargs = {'tag':'vf0'}
    ctl.device_detach(**kwargs)
    ctl.signal()

    # vm migration
    ctl.wait_all()
    kwargs = {'postcopy':True,
              'uri':'tcp:%s:%d' % (migrate_to[0], migrate_port)}
    ctl.migrate(**kwargs)
    ctl.remove_agent(migrate_from)

    # device attach
    ctl.append_agent(migrate_to)
    ctl.wait_all()
    kwargs = {'pci_id':'04:00.0',
              'tag':'vf0'}
    ctl.device_attach(**kwargs)
    ctl.signal()

    ctl.close()
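The order of calls on the SymVirt implementation slide (detach the device, migrate, then re-attach on the destination) can be traced without the real `symvirt` module. The sketch below is only an illustration: `FakeController` is a hypothetical stand-in that records calls instead of talking to a VMM, and the host names and port numbers are made up. Its method names mirror the slide, but their real signatures and behavior are not documented there.

```python
class FakeController:
    """Hypothetical stand-in for symvirt.Controller; it only logs calls."""

    def __init__(self, agent_list):
        self.agents = list(agent_list)
        self.log = []

    def wait_all(self):
        # Assumption: the real call blocks until every guest is ready.
        self.log.append("wait_all")

    def signal(self):
        # Assumption: the real call lets the waiting guests continue.
        self.log.append("signal")

    def device_detach(self, tag):
        self.log.append("detach %s" % tag)

    def device_attach(self, pci_id, tag):
        self.log.append("attach %s as %s" % (pci_id, tag))

    def migrate(self, uri, postcopy=False):
        mode = "postcopy" if postcopy else "precopy"
        self.log.append("migrate %s %s" % (mode, uri))

    def append_agent(self, agent):
        self.agents.append(agent)

    def remove_agent(self, agent):
        self.agents.remove(agent)

    def close(self):
        self.log.append("close")


def cooperative_migration(ctl, src, dst, port):
    """Replay the slide's three phases against any controller-like object."""
    # Phase 1: detach the VMM-bypass device from the guest.
    ctl.wait_all()
    ctl.device_detach(tag="vf0")
    ctl.signal()

    # Phase 2: migrate the VM to the destination host.
    ctl.wait_all()
    ctl.migrate(uri="tcp:%s:%d" % (dst[0], port), postcopy=True)
    ctl.remove_agent(src)

    # Phase 3: re-attach the device on the destination.
    ctl.append_agent(dst)
    ctl.wait_all()
    ctl.device_attach(pci_id="04:00.0", tag="vf0")
    ctl.signal()
    ctl.close()
    return ctl.log


src = ("node-a", 9000)   # made-up (host, port) pairs
dst = ("node-b", 9000)
ctl = FakeController([src])
log = cooperative_migration(ctl, src, dst, 4444)
for entry in log:
    print(entry)
```

Each phase is bracketed by `wait_all()` and `signal()`, which is the coordination between guest and VMM that the slide's three comment headings mark out.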

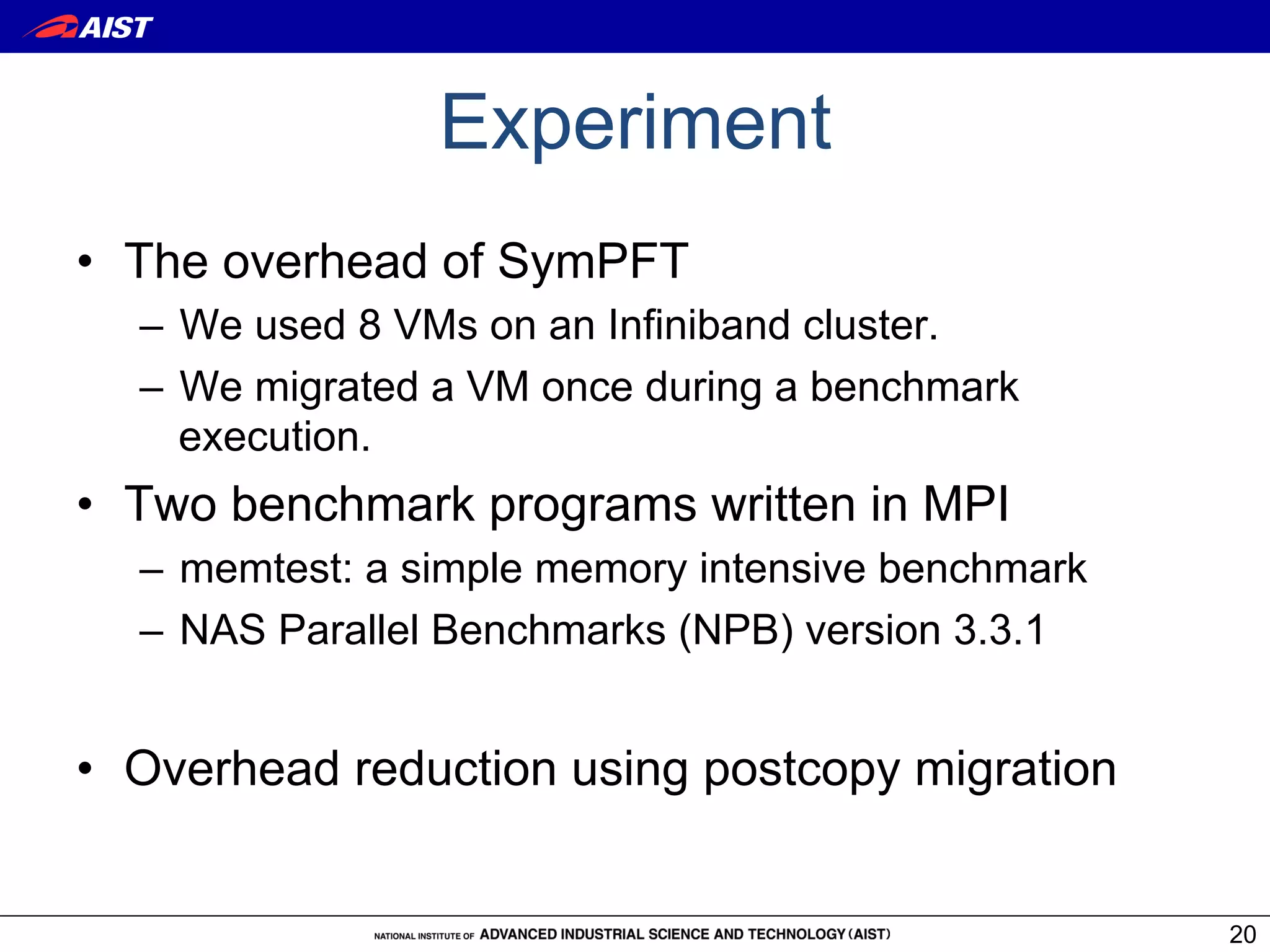

![Result: memtest](https://image.slidesharecdn.com/esci2012-takano-121011145155-phpapp02/75/Cooperative-VM-Migration-for-a-virtualized-HPC-Cluster-with-VMM-bypass-I-O-devices-22-2048.jpg)

Result: memtest

• The migration time is dependent on the memory footprint.

– The migration throughput is less than 3 Gbps.

• Both hotplug and link-up times are approximately constant.

– The link-up time is not a negligible overhead (cf. Ethernet).

[Figure: stacked bar chart of execution time in seconds (axis 0 to 100), split into migration, hotplug, and linkup, for memory footprints of 2GB, 4GB, 8GB, and 16GB. Bar labels as extracted: 35.9, 38.7, 44.2, 53.7; 14.6, 13.5, 12.5, 11.3; 28.5, 28.5, 28.5, 28.6.]

This result does not include our proceeding.

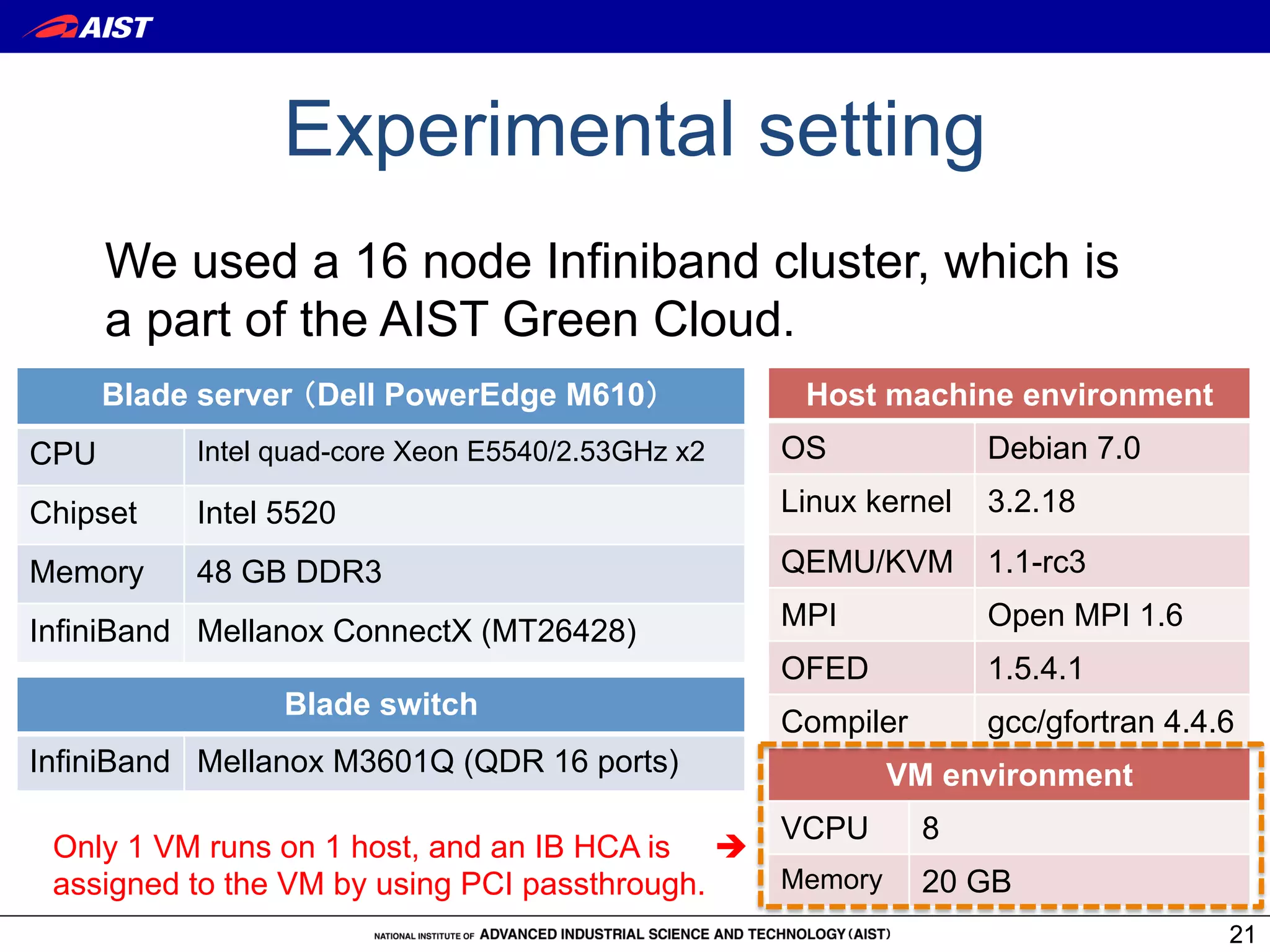

![Result: NAS Parallel Benchmarks](https://image.slidesharecdn.com/esci2012-takano-121011145155-phpapp02/75/Cooperative-VM-Migration-for-a-virtualized-HPC-Cluster-with-VMM-bypass-I-O-devices-23-2048.jpg)

Result: NAS Parallel Benchmarks

• There is no overhead during normal operations.

• The overhead is proportional to the memory footprint.

[Figure: stacked bar chart of execution time in seconds (axis 0 to 1400), split into application, hotplug, linkup, precopy migration, and postcopy migration, for the baseline, precopy, and postcopy runs of BT, CG, FT, and LU. Overhead annotations on the chart: +105 s, +103 s, +97 s, +299 s.]

Transferred Memory Size during VM Migration [MB]

| BT | CG | FT | LU |
|---|---|---|---|
| 4417 | 3394 | 15678 | 2348 |
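To make "proportional to the memory footprint" concrete, the transferred sizes on the NAS Parallel Benchmarks slide can be combined with the bound stated on the memtest slide (migration throughput below 3 Gbps). The following is a rough sketch, not the authors' analysis: it assumes 1 MB = 10^6 bytes and treats 3 Gbps as an upper bound on throughput, so each result is a lower bound on the transfer time. The function name is made up for illustration.

```python
# Transferred memory size during VM migration [MB], from the slide.
TRANSFERRED_MB = {"BT": 4417, "CG": 3394, "FT": 15678, "LU": 2348}


def min_transfer_seconds(size_mb, throughput_gbps=3.0):
    """Lower bound on transfer time, given an upper bound on throughput.

    Assumes 1 MB = 10**6 bytes and 1 Gbps = 10**9 bits per second.
    """
    bits = size_mb * 1e6 * 8
    return bits / (throughput_gbps * 1e9)


estimates = {name: round(min_transfer_seconds(mb), 1)
             for name, mb in TRANSFERRED_MB.items()}

for name, seconds in estimates.items():
    print("%s: at least %.1f s to transfer %d MB"
          % (name, seconds, TRANSFERRED_MB[name]))
```

Because the bound is linear in the transferred size, FT, which moves several times more memory than the other benchmarks, has the largest lower bound by the same factor.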

![Result: Effect of postcopy migration](https://image.slidesharecdn.com/esci2012-takano-121011145155-phpapp02/75/Cooperative-VM-Migration-for-a-virtualized-HPC-Cluster-with-VMM-bypass-I-O-devices-25-2048.jpg)

Result: Effect of postcopy migration

• Postcopy migration can hide the overhead of hotplug and link-up by overlapping them and migration.

[Figure: stacked bar chart of execution time in seconds (axis 0 to 1400), split into application, hotplug, linkup, precopy migration, and postcopy migration, for the baseline, precopy, and postcopy runs of BT, CG, FT, and LU. Annotations on the chart as extracted: -15 %, -13 s, -14 %, -53 %.]

Transferred Memory Size during VM Migration [MB]

| BT | CG | FT | LU |
|---|---|---|---|
| 4417 | 3394 | 15678 | 2348 |
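The effect of overlapping can be illustrated with a deliberately simplified timing model. This is an idealized sketch, not the authors' measurement method. It assumes that with precopy the memory transfer, hotplug, and link-up run strictly one after another, and that with postcopy the hotplug and link-up on the destination overlap completely with the background memory transfer. The input numbers are made up for illustration and are not taken from the slides.

```python
def precopy_overhead(migration_s, hotplug_s, linkup_s):
    """Sequential model: each step waits for the previous one."""
    return migration_s + hotplug_s + linkup_s


def postcopy_overhead(migration_s, hotplug_s, linkup_s):
    """Idealized overlap: device setup runs while memory is still moving."""
    return max(migration_s, hotplug_s + linkup_s)


# Illustrative values only (seconds).
migration_s, hotplug_s, linkup_s = 50.0, 12.0, 28.0

sequential = precopy_overhead(migration_s, hotplug_s, linkup_s)
overlapped = postcopy_overhead(migration_s, hotplug_s, linkup_s)
saving = 1.0 - overlapped / sequential

print("precopy model:  %.1f s" % sequential)
print("postcopy model: %.1f s" % overlapped)
print("reduction:      %.0f %%" % (saving * 100))
```

In this model the saving is largest when the memory transfer and the device setup take about the same time, and it shrinks as one of them dominates.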