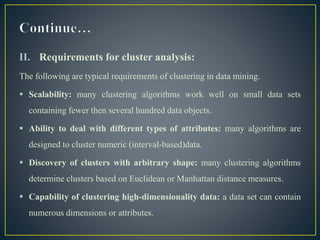

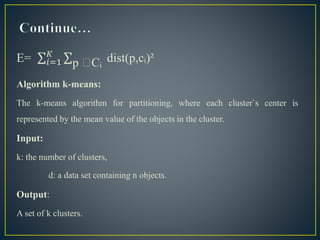

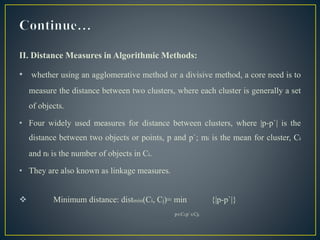

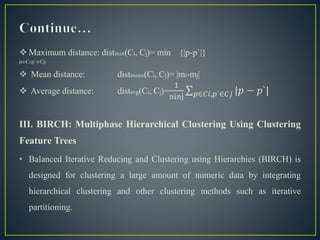

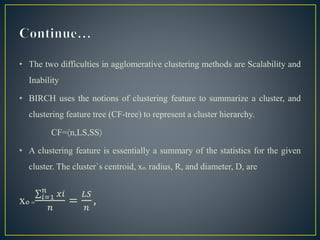

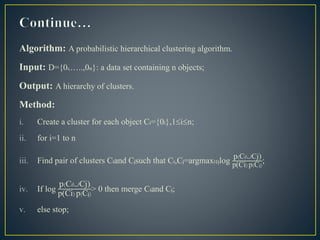

The document surveys clustering techniques for grouping large amounts of data. It covers partitioning methods such as k-means and k-medoids, which assign each data point to exactly one group. It also describes hierarchical methods, both agglomerative (bottom-up merging) and divisive (top-down splitting), which arrange data into nested groups or trees. Finally, it mentions density-based and grid-based clustering and provides algorithms for the different clustering approaches.
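
As an illustration of the partitioning approach mentioned above, here is a minimal sketch of k-means using the standard Lloyd iteration. It is not taken from the document itself: the function name `kmeans`, its parameters (`iterations`, `seed`), and the sample points are all assumptions made for this example, and it uses squared Euclidean distance with random initial centroids drawn from the data.

```python
import random


def kmeans(points, k, iterations=100, seed=0):
    """Illustrative k-means (Lloyd's iteration) on a list of equal-length tuples.

    Hypothetical helper for this summary, not the document's own algorithm.
    Returns (centroids, assignments), where assignments[i] is the index of
    the cluster that points[i] belongs to.
    """
    rng = random.Random(seed)
    # Assumption: initial centroids are k distinct points sampled from the data.
    centroids = rng.sample(points, k)
    assignments = None

    def sq_dist(p, q):
        # Squared Euclidean distance between two points.
        return sum((a - b) ** 2 for a, b in zip(p, q))

    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid,
        # so every point lands in exactly one group.
        new_assignments = [
            min(range(k), key=lambda c: sq_dist(p, centroids[c]))
            for p in points
        ]
        if new_assignments == assignments:
            break  # No point changed cluster, so the result is stable.
        assignments = new_assignments

        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:  # Keep the old centroid if a cluster is empty.
                centroids[c] = tuple(
                    sum(dim) / len(members) for dim in zip(*members)
                )

    return centroids, assignments


# Two well-separated groups of 2-D points (made-up sample data).
sample_points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
centroids, assignments = kmeans(sample_points, k=2)
print(centroids)
print(assignments)
```

k-medoids follows the same assign-then-update loop but restricts each cluster centre to an actual data point, which makes it less sensitive to outliers at a higher computational cost.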