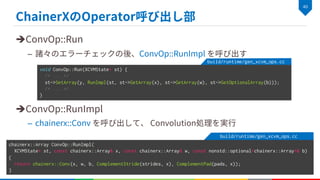

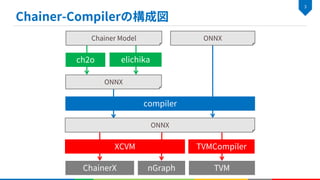

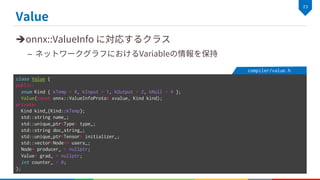

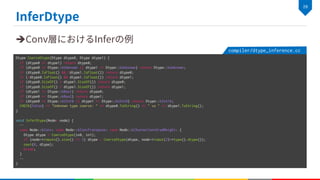

- The slides describe how the Chainer compiler compiles an ONNX model to XCVM: it parses the ONNX model, applies optimization passes such as fusion and constant propagation, lowers the result to an XCVM program, and executes that program on XCVM to run the model.

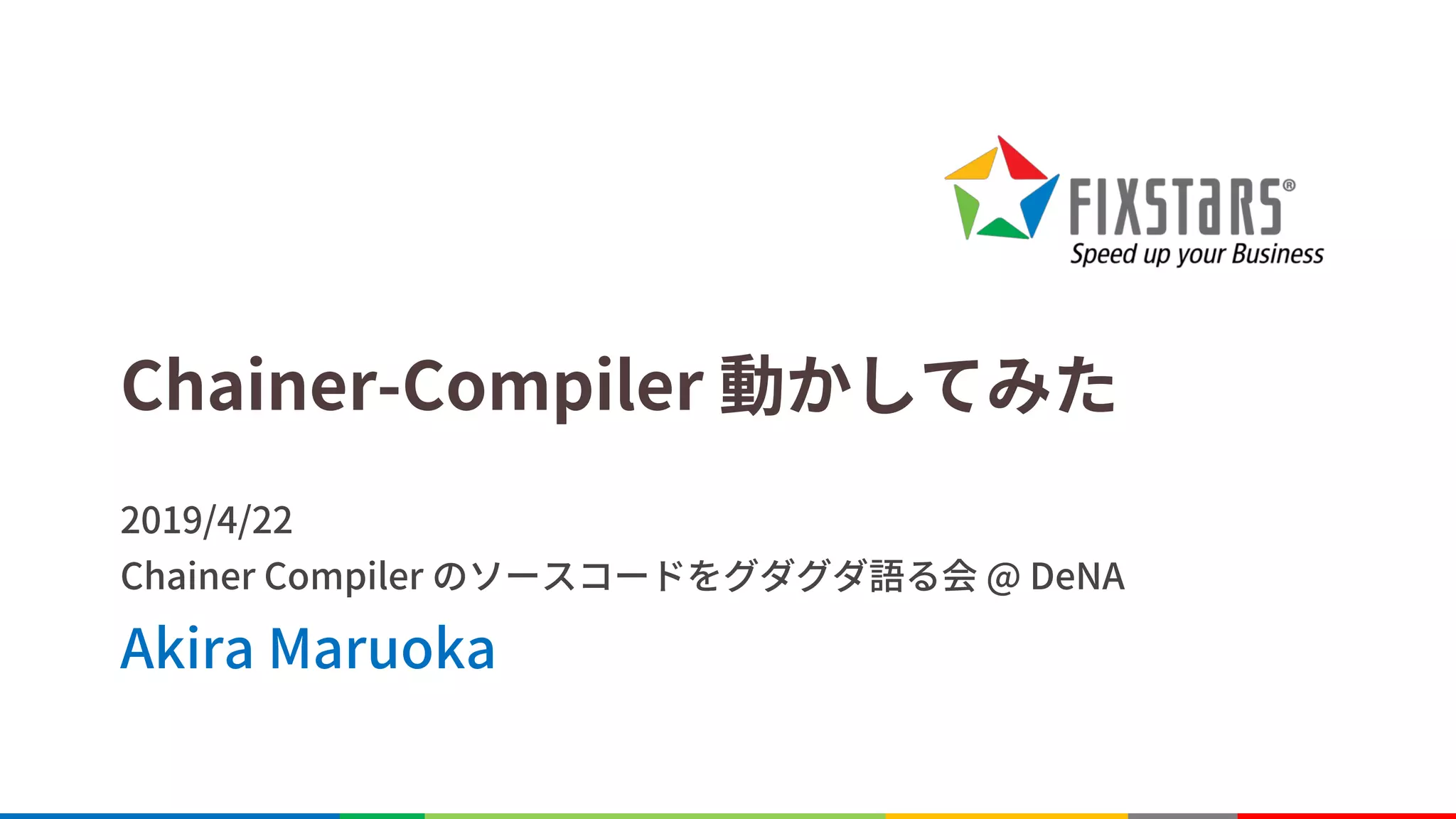

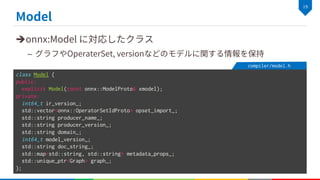

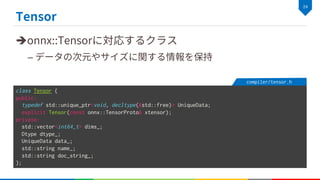

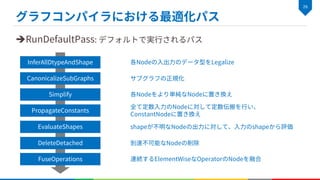

- The compiler represents the model as a Model object containing a Graph. Nodes in the graph are represented by Node objects. Values are represented by Value objects. Tensor data is stored in Tensor objects.
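
The relationship between these objects can be sketched in a few lines of Python. This is only an illustrative model, not the compiler's actual C++ classes: the field names (`producer`, `users`, `shape`, `data`) and the `add_node` helper are assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(eq=False)
class Tensor:
    """Concrete tensor data (hypothetical layout: a shape plus flat data)."""
    shape: List[int]
    data: List[float]


@dataclass(eq=False)
class Value:
    """An edge of the graph; it may carry constant tensor data."""
    name: str
    tensor: Optional[Tensor] = None
    producer: Optional["Node"] = None
    users: List["Node"] = field(default_factory=list)


@dataclass(eq=False)
class Node:
    """One operation, connected to its input and output values."""
    op_type: str
    inputs: List[Value]
    outputs: List[Value]


@dataclass(eq=False)
class Graph:
    nodes: List[Node] = field(default_factory=list)

    def add_node(self, op_type, inputs, outputs):
        # Hypothetical helper: create a node and wire up both directions.
        node = Node(op_type, inputs, outputs)
        for value in inputs:
            value.users.append(node)
        for value in outputs:
            value.producer = node
        self.nodes.append(node)
        return node


@dataclass(eq=False)
class Model:
    graph: Graph


# Build y = x * w, where w is a constant backed by a Tensor.
x = Value("x")
w = Value("w", tensor=Tensor(shape=[1], data=[2.0]))
y = Value("y")
graph = Graph()
mul = graph.add_node("Mul", [x, w], [y])
model = Model(graph)
```

Keeping both `producer` and `users` links on each value is one way to let optimization passes walk the graph in either direction.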

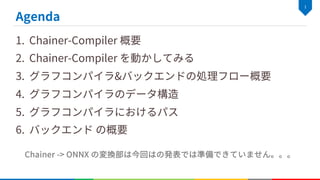

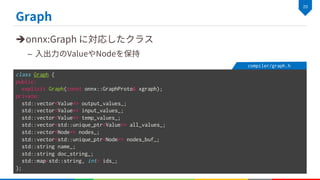

- The XCVM execution engine takes the compiled XCVM program and runs it, interpreting each XCVM operation by calling methods on classes like ConvOp that implement the operations.
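
A minimal sketch of this kind of interpreter loop, written in Python rather than the C++ used by XCVM. The `State`, `AddOp`, and `ReluOp` classes and the `run_program` function are hypothetical stand-ins, and advancing the program counter before running each op is an assumption of the sketch.

```python
import sys


class State:
    """Mutable execution state: a program counter plus named variables."""

    def __init__(self, inputs):
        self.pc = 0
        self.variables = dict(inputs)


class Op:
    """Base class for the toy ops; each subclass implements run(state)."""

    def debug_info(self):
        return type(self).__name__


class AddOp(Op):
    def __init__(self, a, b, out):
        self.a, self.b, self.out = a, b, out

    def run(self, state):
        state.variables[self.out] = state.variables[self.a] + state.variables[self.b]


class ReluOp(Op):
    def __init__(self, x, out):
        self.x, self.out = x, out

    def run(self, state):
        state.variables[self.out] = max(state.variables[self.x], 0.0)


def run_program(program, inputs, output_names):
    """Run each op in order, reporting which op failed before re-raising."""
    state = State(inputs)
    while state.pc < len(program):
        op = program[state.pc]
        state.pc += 1  # assumption: the counter advances before the op runs
        try:
            op.run(state)
        except Exception:
            print("Exception in " + op.debug_info(), file=sys.stderr)
            raise
    return {name: state.variables[name] for name in output_names}


program = [AddOp("x", "y", "t"), ReluOp("t", "out")]
result = run_program(program, {"x": 1.0, "y": -3.0}, ["out"])
```

Because every op exposes the same `run(state)` method, the loop itself never needs to know which operation it is executing.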

![

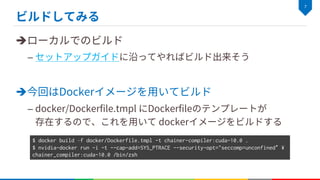

=================================== FAILURES ===================================

____________________________ TestPReLU.test_output _____________________________

self = <tests.functions_tests.test_activations.TestPReLU testMethod=test_output>

def test_output(self):

> self.expect(self.model, self.x)

third_party/onnx-chainer/tests/functions_tests/test_activations.py:61:

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

third_party/onnx-chainer/tests/helper.py:106: in expect

self.check_out_values(test_path, input_names=graph_input_names)

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

test_path = 'out/opset7/test_prelu', input_names = ['Input_0']

def check_model_expect(test_path, input_names=None):

if not ONNXRUNTIME_AVAILABLE:

> raise ImportError('ONNX Runtime is not found on checking module.')

E ImportError: ONNX Runtime is not found on checking module.

third_party/onnx-chainer/onnx_chainer/testing/test_onnxruntime.py:39: ImportError](https://image.slidesharecdn.com/20190422-chainer-compilerpublic-190422093843/85/Chainer-Compiler-9-320.jpg)

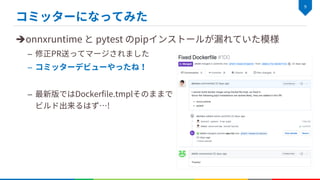

![auto pads = [&node]() {

std::vector<int64_t> pads = node.pads();

+

+ // Complement from auto_pad

+ if (pads.size() == 0) {

+ if (node.auto_pad() == "SAME_UPPER") {

+ const Value* weight = node.input(1);

+ const int pad_ndim = (weight->type().ndim() - 2)*2;

+ CHECK_GT(pad_ndim, 0) << weight->type().DebugString();

+ pads.resize(pad_ndim);

+ for (int i = 0; i < pad_ndim/2; ++i) {

+ pads[i] = pads[i+pad_ndim/2] = weight->type().dims()[i+2] / 2;

+ }

+ }

+ }

compiler/emitter.cc:167

Verifying the result...

OK: Plus214_Output_0

Elapsed: 23.395 msec

OK!](https://image.slidesharecdn.com/20190422-chainer-compilerpublic-190422093843/85/Chainer-Compiler-13-320.jpg)
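
The padding rule in the patch above can be restated as a small Python function. This is a sketch of the same arithmetic, not the compiler's code: the function name `complement_pads` and passing the weight shape as a plain list are assumptions made for the example.

```python
def complement_pads(pads, auto_pad, weight_dims):
    """Fill in pads from auto_pad when no explicit pads are given.

    weight_dims is the convolution weight shape, e.g. [out_ch, in_ch, kh, kw].
    """
    pads = list(pads)
    if not pads and auto_pad == "SAME_UPPER":
        # Two pad entries (begin and end) per spatial dimension.
        pad_ndim = (len(weight_dims) - 2) * 2
        if pad_ndim <= 0:
            raise ValueError("weight has no spatial dimensions: %r" % (weight_dims,))
        half = pad_ndim // 2
        pads = [0] * pad_ndim
        for i in range(half):
            # Begin and end pads both get half the kernel size, rounded down.
            pads[i] = pads[i + half] = weight_dims[i + 2] // 2
    return pads


pads_5x5 = complement_pads([], "SAME_UPPER", [8, 1, 5, 5])
pads_3x1 = complement_pads([], "SAME_UPPER", [16, 3, 3, 1])
explicit = complement_pads([1, 1, 1, 1], "SAME_UPPER", [8, 1, 5, 5])
```

Padding both sides by half the kernel size matches the ONNX `SAME_UPPER` definition for odd kernel sizes with stride 1 and no dilation; other configurations would need the full formula from the ONNX specification.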

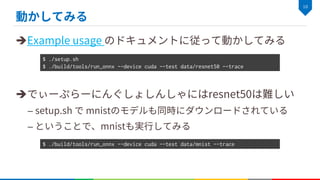

![

class NodeBase {

public:

enum OpType {

kIdentity,

kNeg,

...

};

protected:

std::vector<float> activation_alpha_;

bool was_activation_alpha_set_ = false;

std::vector<float> activation_beta_;

...

};

build/compiler/gen_node_base.h

class NodeDef(object):

def __init__(self, op_type, num_inputs, num_outputs, **kwargs):

self.op_type = op_type

self.num_inputs = num_inputs

self.num_outputs = num_outputs

self.attributes = kwargs

self.attributes.update(CHAINER_COMPILERX_GLOBAL_ATTRS)

self.attr_defs = {} # To be filled after parsed.

NODES.append(self)

NodeDef('Identity', 1, 1)

NodeDef('Neg', 1, 1)

…

def gen_gen_node_base_h():

public_lines = []

private_lines = []

public_lines.append('enum OpType {')

for node in NODES:

public_lines.append('k%s,' % (node.op_type))

…

compiler/gen_node.py](https://image.slidesharecdn.com/20190422-chainer-compilerpublic-190422093843/85/Chainer-Compiler-23-320.jpg)
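
The generation step shown on this slide (a Python registry of node definitions that emits the C++ `OpType` enum) can be reduced to a self-contained sketch. It is a simplification under stated assumptions: the global attributes and `attr_defs` from the slide are omitted, and `gen_op_type_enum` is a hypothetical name, not a function from `compiler/gen_node.py`.

```python
NODES = []


class NodeDef(object):
    """Registers one operator definition in the module-level NODES list."""

    def __init__(self, op_type, num_inputs, num_outputs, **kwargs):
        self.op_type = op_type
        self.num_inputs = num_inputs
        self.num_outputs = num_outputs
        self.attributes = kwargs
        NODES.append(self)


def gen_op_type_enum(nodes):
    """Emit C++ source text for an enum with one entry per registered node."""
    lines = ["enum OpType {"]
    for node in nodes:
        lines.append("    k%s," % node.op_type)
    lines.append("};")
    return "\n".join(lines)


NodeDef("Identity", 1, 1)
NodeDef("Neg", 1, 1)
header = gen_op_type_enum(NODES)
print(header)
```

Generating the enum from a single list of definitions keeps the C++ header and the operator table from drifting apart when an operator is added.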

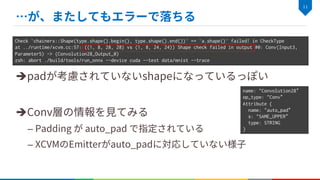

![

void DoConstantPropagation(Graph* graph, Node* node) {

/* Collect the node's inputs */

for (size_t i = 0; i < next_values.size(); ++i) {

auto& next_value = next_values[i];

GraphBuilder gb(graph, "Const", node->output(i));

if (next_value->is_tensor()) {

gb.Op(Node::kConstant, {}, node->output(i))->producer()->set_tensor_value(next_value->ReleaseTensor());

}

}

/* Detach the node being replaced */

}

void PropagateConstants(Graph* graph) {

bool replaced = true;

while (replaced) {

replaced = false;

for (Node* node : graph->GetLiveNodes()) {

if (!HasConstantInputsOnly(*node)) continue;

if (MaybePropagateConstant(graph, node)) { replaced = true; }

}

}

}

compiler/constant_propagation.cc](https://image.slidesharecdn.com/20190422-chainer-compilerpublic-190422093843/85/Chainer-Compiler-32-320.jpg)
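
The repeat-until-nothing-changes structure of `PropagateConstants` can be illustrated on a toy graph in Python. This sketch makes several assumptions that differ from the C++ above: nodes are plain `(op_type, input_names, output_name)` tuples, folded results are recorded in a dictionary instead of being emitted as `Constant` nodes, and the `FOLDERS` table is a hypothetical stand-in for evaluating a node.

```python
import operator

# Hypothetical evaluators for the ops this toy example knows how to fold.
FOLDERS = {"Add": operator.add, "Mul": operator.mul, "Neg": operator.neg}


def propagate_constants(nodes, constants):
    """Fold nodes whose inputs are all constant until a pass changes nothing."""
    nodes = list(nodes)
    constants = dict(constants)
    replaced = True
    while replaced:
        replaced = False
        for node in list(nodes):  # iterate over a copy; nodes is mutated below
            op_type, inputs, output = node
            if not all(name in constants for name in inputs):
                continue
            if op_type not in FOLDERS:
                continue
            args = [constants[name] for name in inputs]
            constants[output] = FOLDERS[op_type](*args)
            nodes.remove(node)
            replaced = True
    return nodes, constants


# "Neg" is listed before the "Add" that produces its input, so it can only
# be folded on the second pass; "Mul" depends on the runtime input x.
toy_nodes = [
    ("Neg", ["c"], "d"),
    ("Add", ["a", "b"], "c"),
    ("Mul", ["d", "x"], "z"),
]
remaining, folded = propagate_constants(toy_nodes, {"a": 1, "b": 2})
```

The outer `while` loop matters because folding one node can turn the inputs of an earlier node into constants, as the `Neg` node here shows.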

{

/* ... */

};

FuseAllConnectedNodes("nvrtc", graph, 2, is_fusable);

}

void FuseOperations(Graph* graph, bool use_tvm, bool use_ngraph) {

/* Fuse subgraphs */

if (use_ngraph) { FuseNGraphOperations(graph); }

if (use_tvm) { FuseTVMOperations(graph); }

FuseElementwiseOperations(graph);

}

compiler/fusion.cc](https://image.slidesharecdn.com/20190422-chainer-compilerpublic-190422093843/85/Chainer-Compiler-33-320.jpg)

![

InOuts Run(const InOuts& inputs) {

if (trace_level()) std::cerr << "Running XCVM..." << std::endl;

InOuts outputs = xcvm_->Run(inputs, xcvm_opts_);

/* ... */

return outputs;

}

compiler/run_onnx.cc

void XCVM::Run(XCVMState* state) {

/* Initialize the state */

while (true) {

int pc = state->pc();

if (pc >= program_.size()) break;

XCVMOp* op = program_[pc].get();

try {

op->Run(state);

} catch (...) {

std::cerr << "Exception in " << op->debug_info() << std::endl;

throw;

}

compiler/run_onnx.cc](https://image.slidesharecdn.com/20190422-chainer-compilerpublic-190422093843/85/Chainer-Compiler-39-320.jpg)