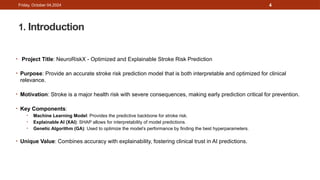

The document outlines a final-year project, 'NeuroRiskX', a brain stroke prediction system that aims to deliver accurate and explainable predictions using machine learning and a genetic algorithm. The model is a Random Forest classifier, chosen for its stability and interpretability, with SHAP providing explainability and a genetic algorithm handling hyperparameter optimization. The project design covers a clear architecture, a detailed discussion of the dataset, and a feasibility analysis indicating low operational costs suited to clinical use.
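To make the genetic-algorithm step concrete, here is a minimal sketch of how such a search over Random Forest hyperparameters might look. It is not the project's implementation: the search space, the operators (tournament selection, uniform crossover, resampling mutation, elitism), and every function name are assumptions chosen for illustration. The fitness function is a toy stand-in; in practice it would presumably be a cross-validated score of a Random Forest trained with the candidate settings.

```python
import random

# Hypothetical search space for Random Forest hyperparameters (not from the source).
SEARCH_SPACE = {
    "n_estimators": (50, 500),
    "max_depth": (2, 20),
    "min_samples_leaf": (1, 10),
}


def random_individual(rng):
    """Draw one candidate hyperparameter set uniformly from the search space."""
    return {k: rng.randint(lo, hi) for k, (lo, hi) in SEARCH_SPACE.items()}


def toy_fitness(ind):
    """Stand-in for a cross-validated score; peaks at an arbitrary target point."""
    target = {"n_estimators": 300, "max_depth": 10, "min_samples_leaf": 3}
    return -sum(
        ((ind[k] - target[k]) / (SEARCH_SPACE[k][1] - SEARCH_SPACE[k][0])) ** 2
        for k in SEARCH_SPACE
    )


def crossover(a, b, rng):
    """Uniform crossover: each gene is taken from one parent at random."""
    return {k: (a[k] if rng.random() < 0.5 else b[k]) for k in SEARCH_SPACE}


def mutate(ind, rng, rate=0.2):
    """Resample each gene within its bounds with probability `rate`."""
    out = dict(ind)
    for k, (lo, hi) in SEARCH_SPACE.items():
        if rng.random() < rate:
            out[k] = rng.randint(lo, hi)
    return out


def tournament(pop, fitness, rng, k=3):
    """Pick the fittest of k randomly chosen individuals."""
    return max(rng.sample(pop, k), key=fitness)


def run_ga(fitness=toy_fitness, pop_size=20, generations=30, seed=0):
    """Evolve a population and return the fittest individual found."""
    rng = random.Random(seed)
    pop = [random_individual(rng) for _ in range(pop_size)]
    for _ in range(generations):
        elite = max(pop, key=fitness)  # elitism: carry the best forward unchanged
        children = [elite]
        while len(children) < pop_size:
            p1 = tournament(pop, fitness, rng)
            p2 = tournament(pop, fitness, rng)
            children.append(mutate(crossover(p1, p2, rng), rng))
        pop = children
    return max(pop, key=fitness)


best = run_ga()
print(best, toy_fitness(best))
```

Because the best individual is copied into each new generation, the best fitness never decreases between generations. Swapping `toy_fitness` for a function that trains and scores a real classifier would leave the rest of the loop unchanged, at the cost of one model evaluation per fitness call.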