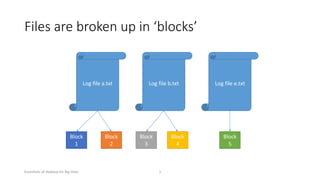

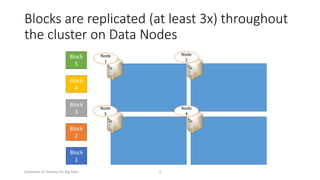

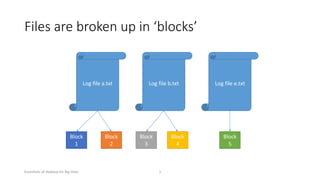

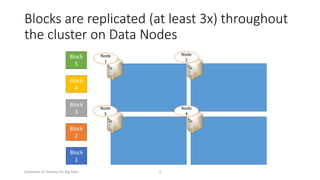

Files in Hadoop (HDFS) are broken into blocks, and each block is replicated across multiple DataNodes for redundancy. The document shows how the log files a.txt, b.txt, and e.txt are each split into multiple blocks stored on different DataNodes, with each block replicated at least 3 times so that the data is not lost if a node fails.
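The block-and-replica idea described above can be illustrated with a small simulation. This is only a sketch under stated assumptions, not how HDFS itself is implemented: the file sizes, the node names (`dn1` to `dn4`), and the helper functions are hypothetical, and the round-robin placement stands in for the rack-aware policy that a real NameNode applies. The 128 MB block size and replication factor of 3 match common HDFS defaults.

```python
MB = 1024 * 1024


def split_into_blocks(file_name, file_size, block_size=128 * MB):
    """Split a file of file_size bytes into (block_id, size) tuples.

    Every block is block_size bytes except possibly the last one,
    which holds whatever remains.
    """
    blocks = []
    offset = 0
    index = 0
    while offset < file_size:
        size = min(block_size, file_size - offset)
        blocks.append((f"{file_name}#blk{index}", size))
        offset += size
        index += 1
    return blocks


def place_replicas(blocks, nodes, replication=3):
    """Assign each block to `replication` distinct nodes, round-robin.

    Assumption: simple round-robin placement. Real HDFS placement is
    rack-aware and also considers node load and free space.
    """
    if replication > len(nodes):
        raise ValueError("need at least as many nodes as replicas")
    placement = {}
    for cursor, (block_id, _size) in enumerate(blocks):
        placement[block_id] = [
            nodes[(cursor + i) % len(nodes)] for i in range(replication)
        ]
    return placement


def survives_failure(placement, failed_node):
    """Return True if every block still has a replica on a live node."""
    return all(
        any(node != failed_node for node in replicas)
        for replicas in placement.values()
    )


# Hypothetical sizes for the three log files named in the slides.
files = {"a.txt": 300 * MB, "b.txt": 200 * MB, "e.txt": 130 * MB}
nodes = ["dn1", "dn2", "dn3", "dn4"]

all_blocks = []
for name, size in files.items():
    all_blocks.extend(split_into_blocks(name, size))

placement = place_replicas(all_blocks, nodes)

for block_id, replicas in placement.items():
    print(block_id, "->", replicas)
```

Because each block lives on three different nodes, `survives_failure(placement, "dn1")` stays true for any single node: losing one DataNode still leaves two copies of every block.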