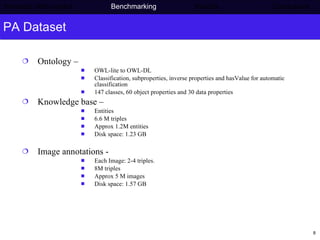

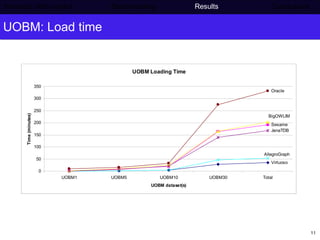

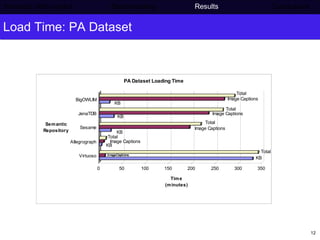

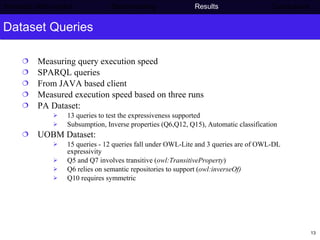

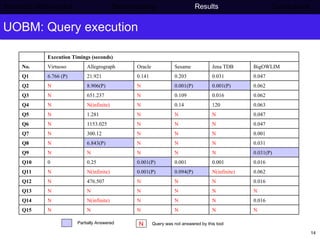

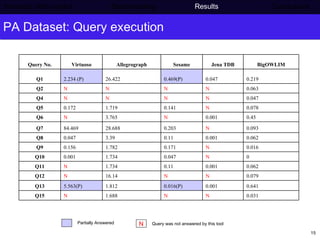

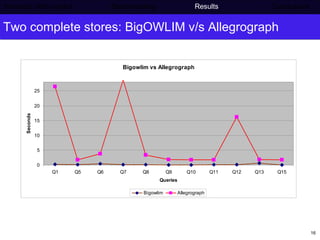

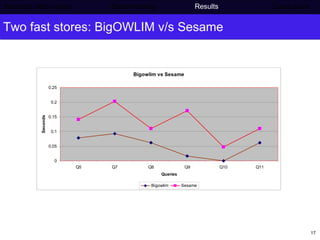

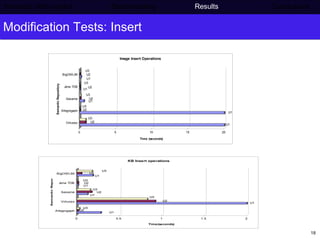

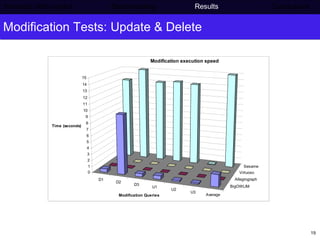

The document summarizes benchmarking tests run on several semantic repositories using the Press Association dataset and the University Ontology Benchmark dataset. BigOWLIM had the best average query response times and answered the most queries, but it was slower in the loading and modification tests. Sesame, Jena, Virtuoso and Oracle also returned sub-second responses for most queries. AllegroGraph answered more queries than Sesame, Jena, Virtuoso or Oracle but had the highest average response time. The document recommends further benchmarking on larger datasets and with additional parameters.