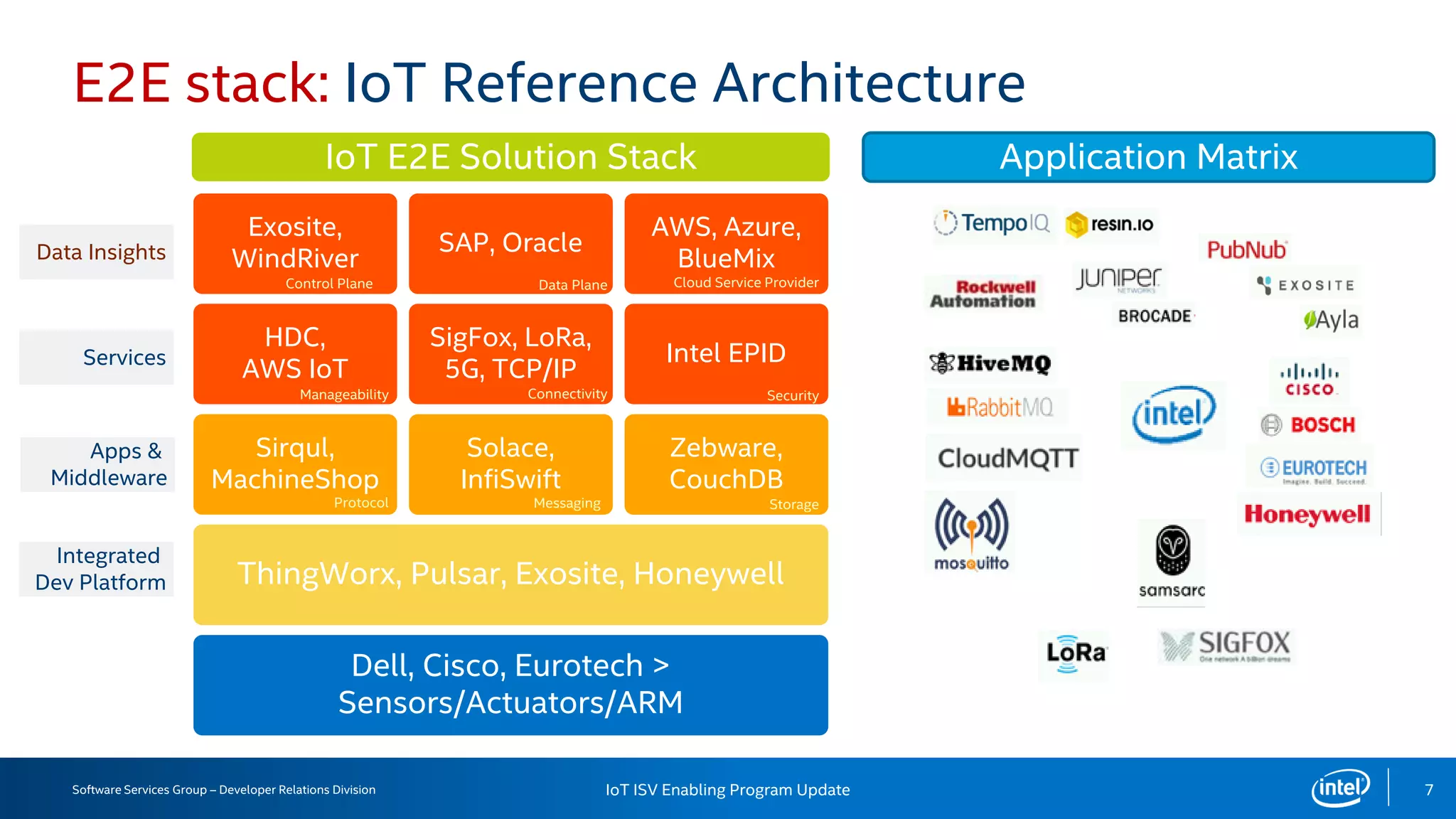

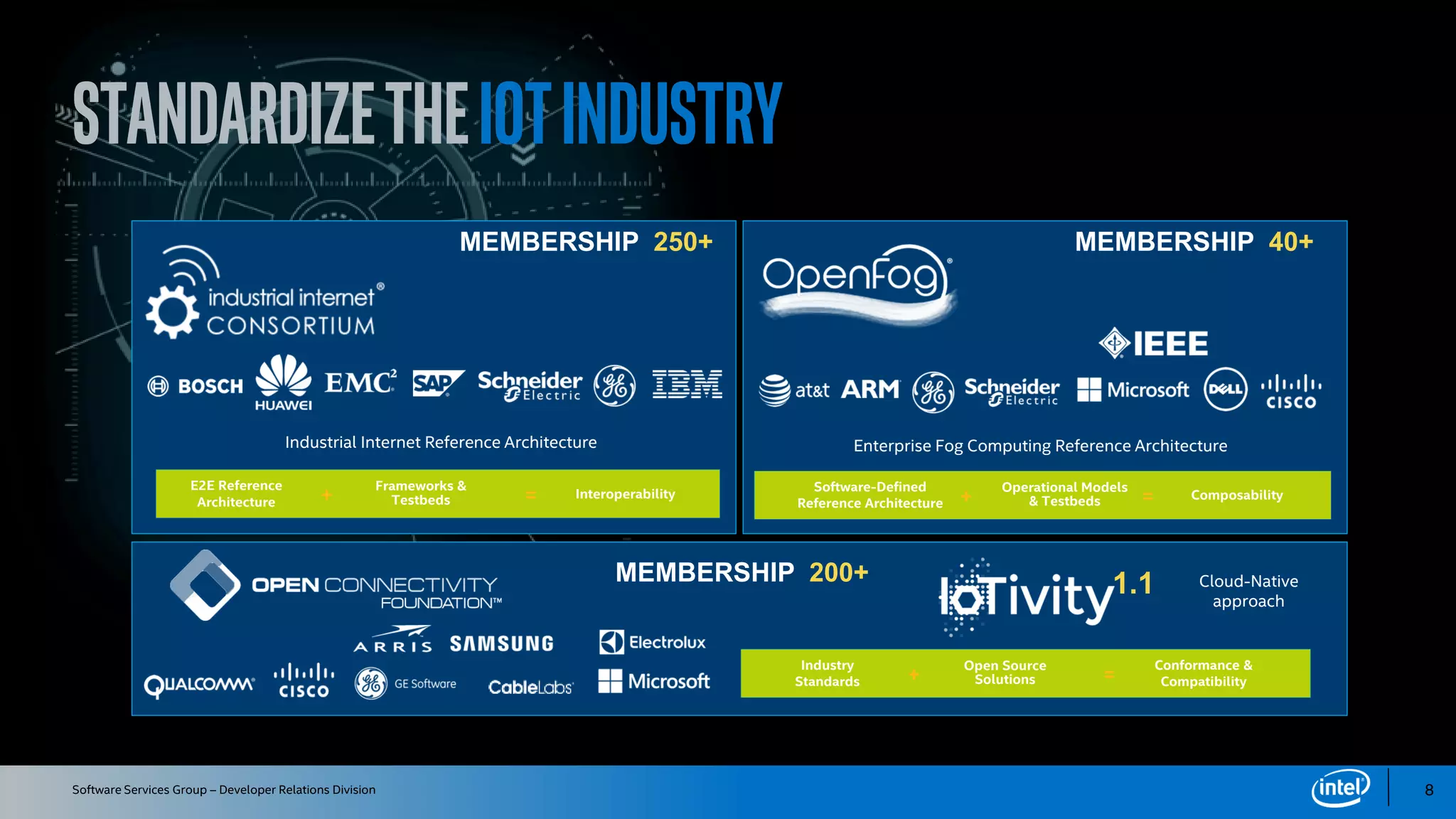

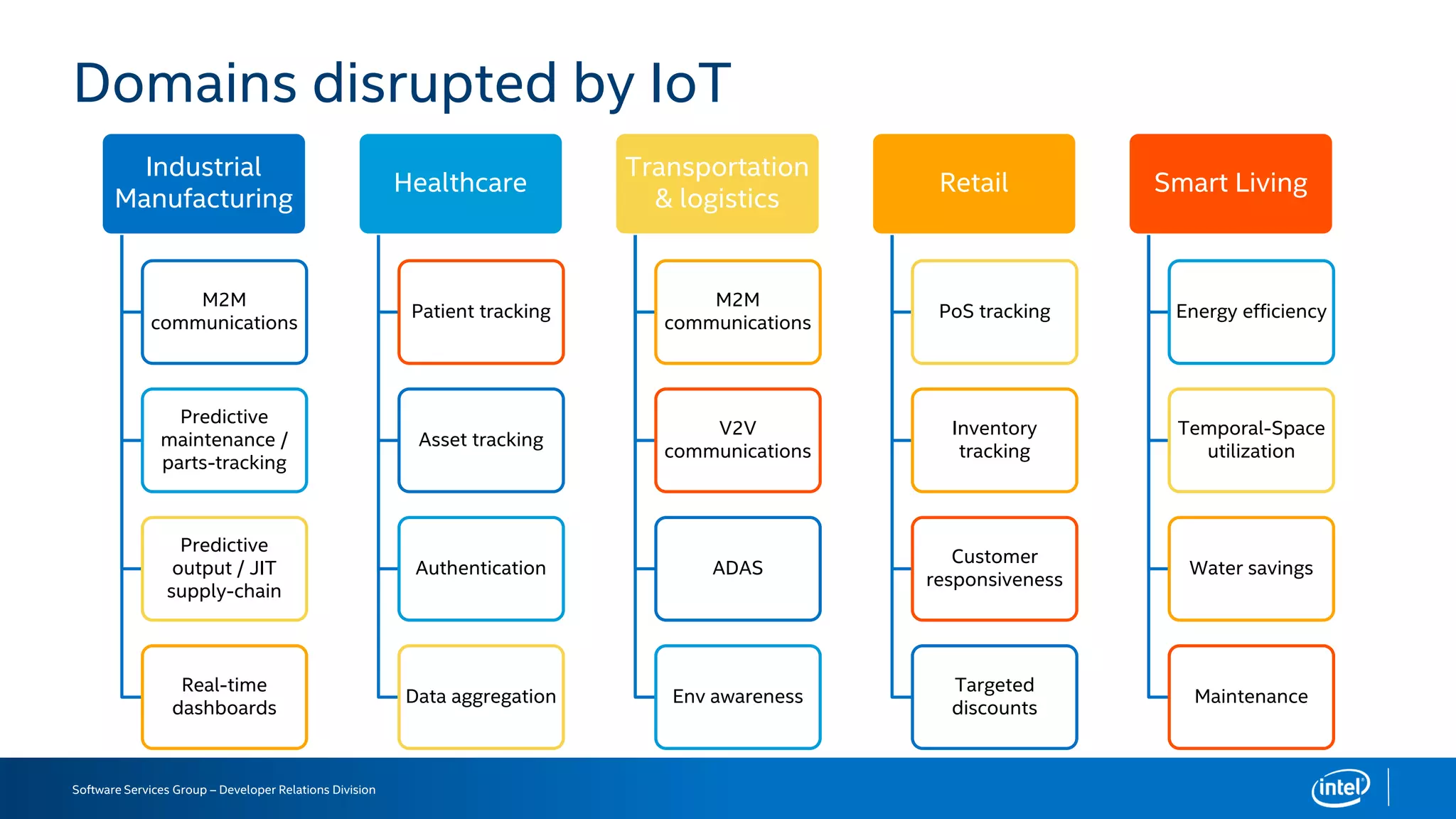

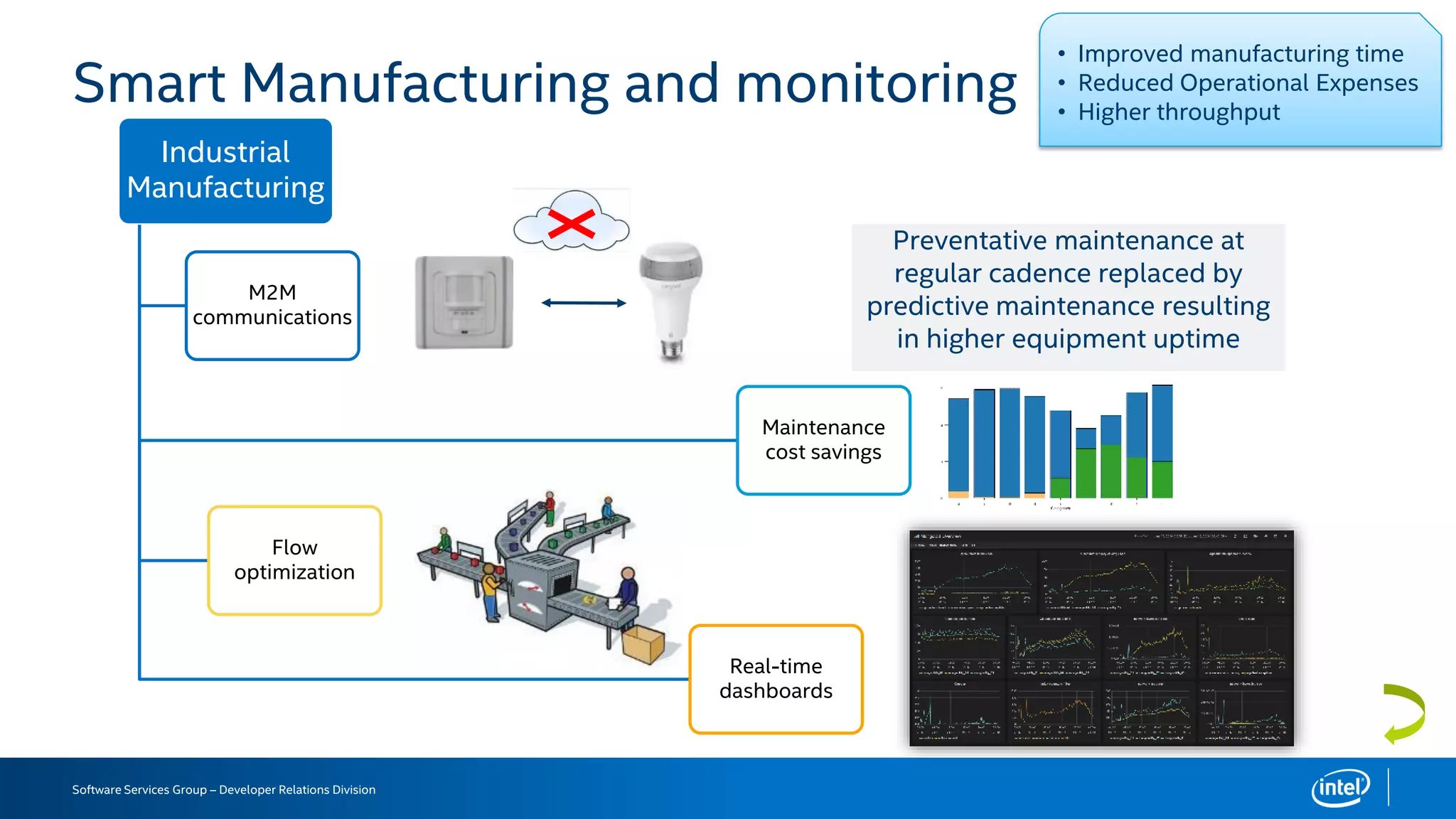

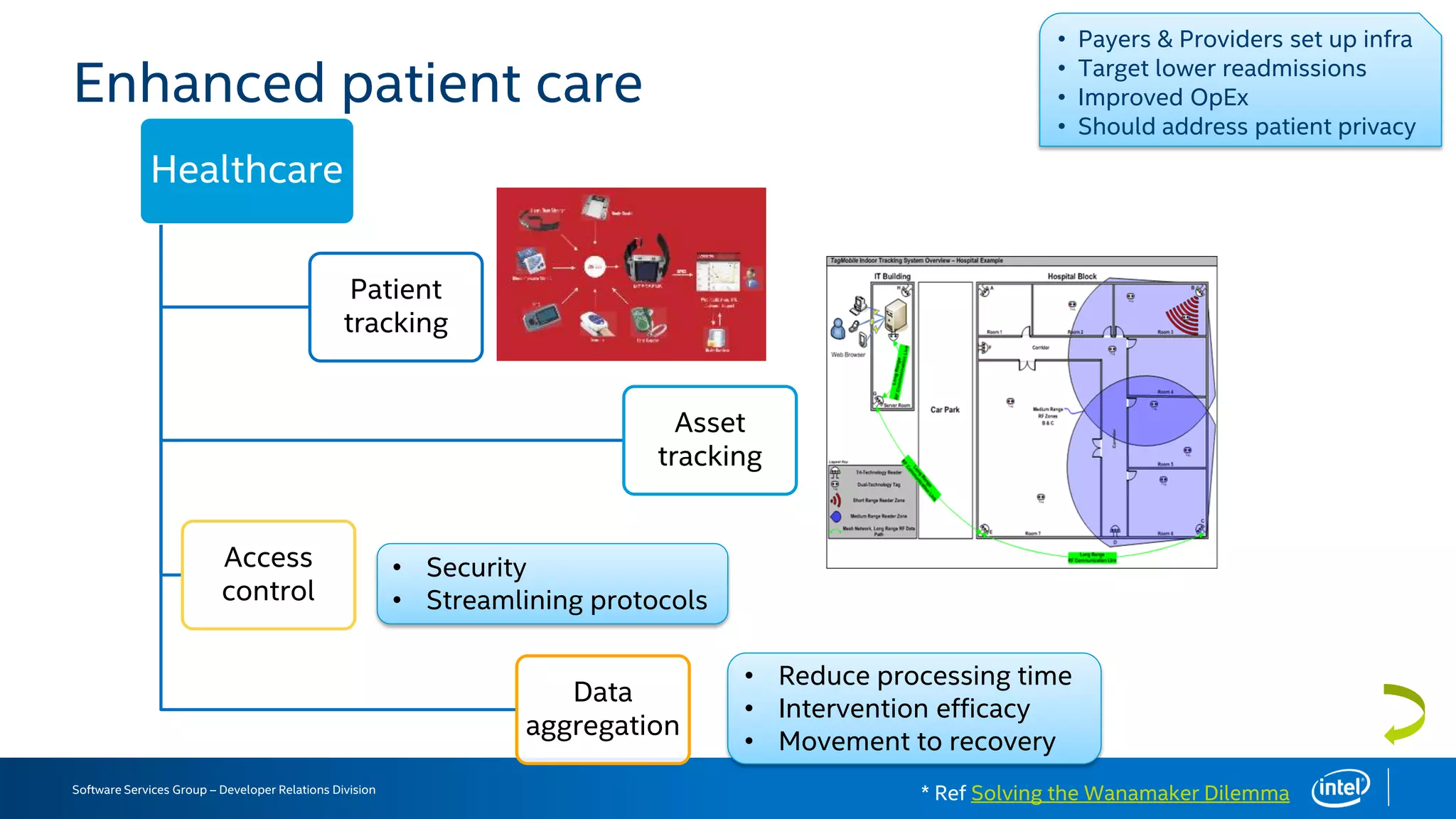

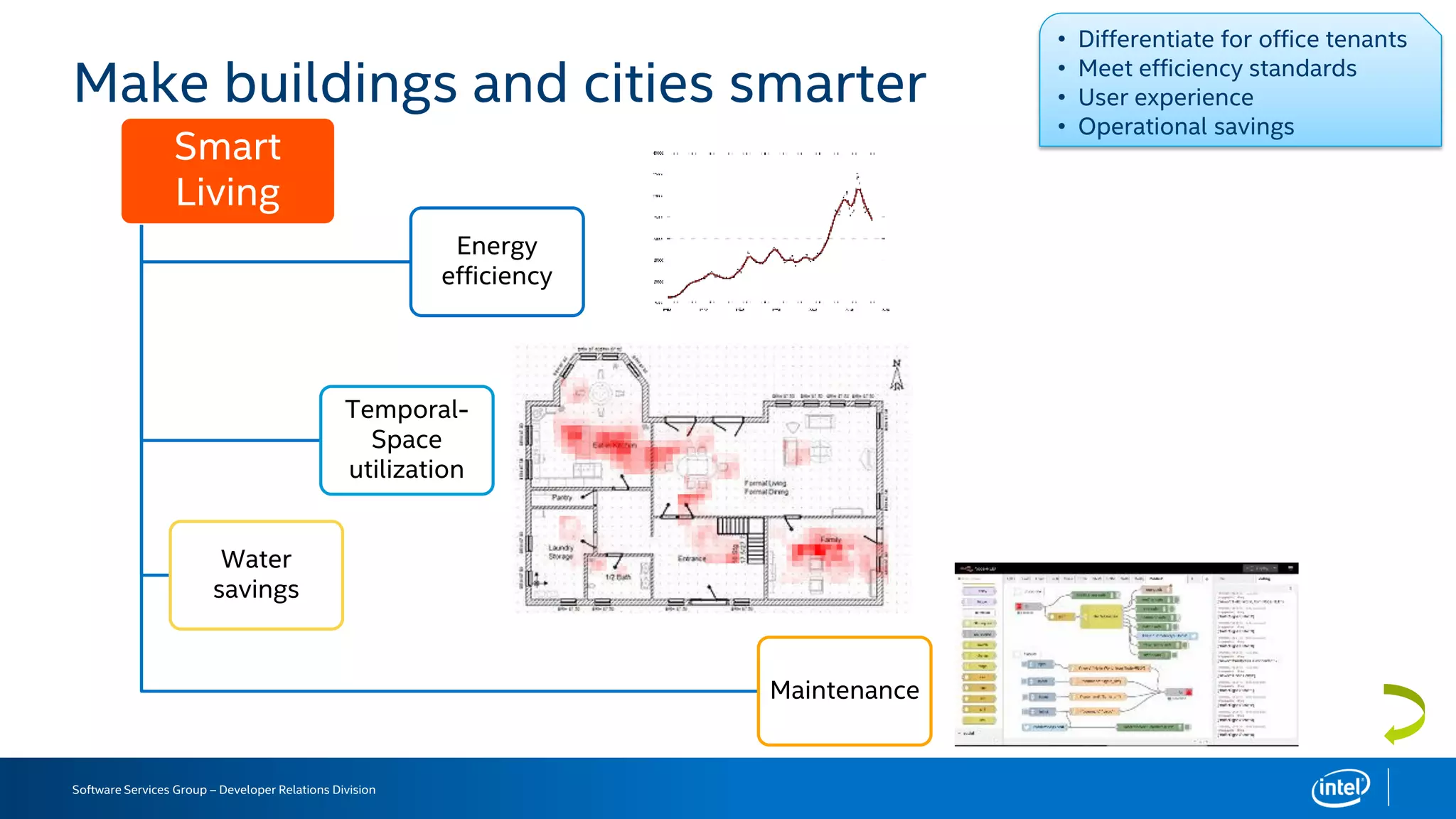

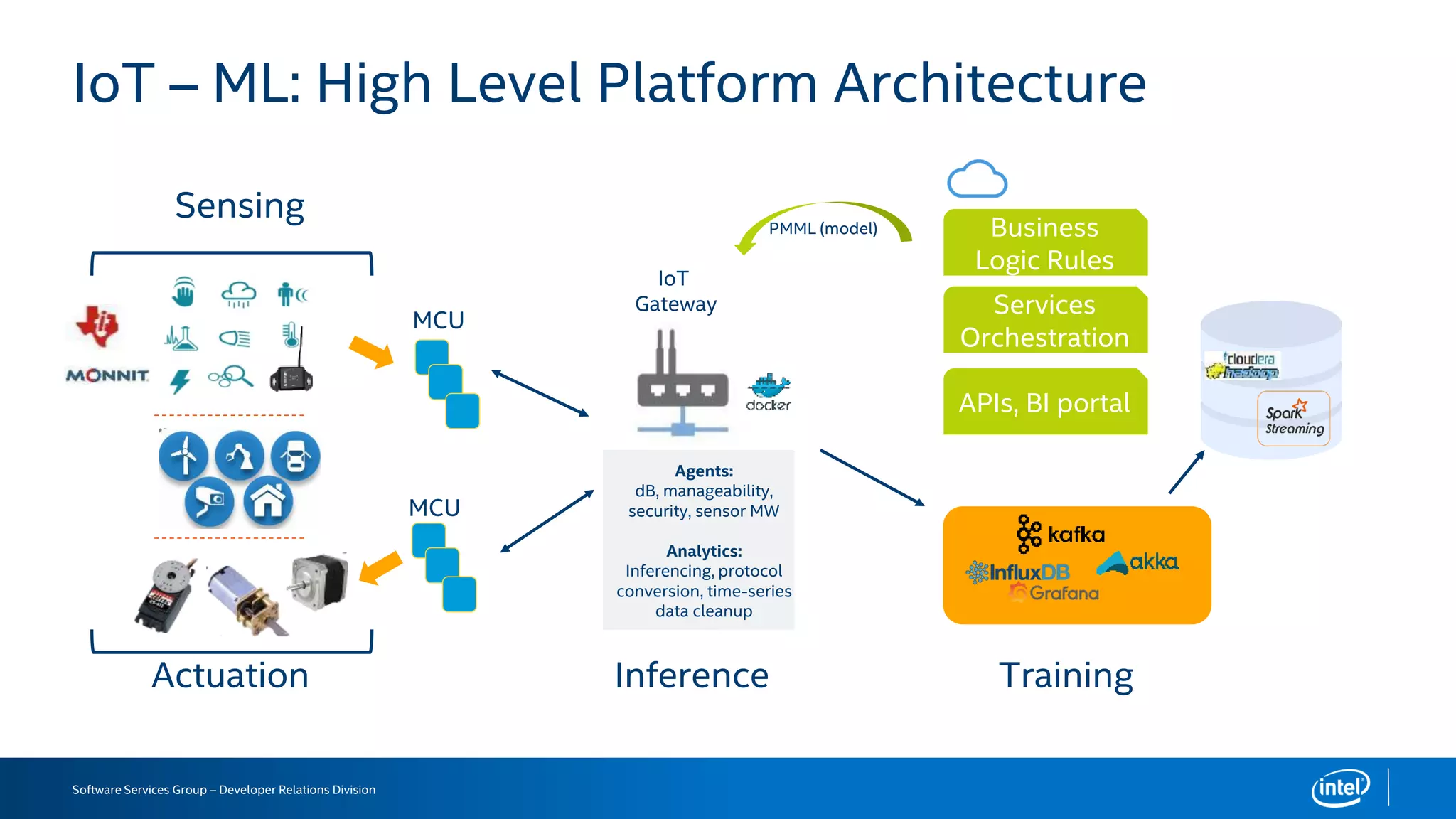

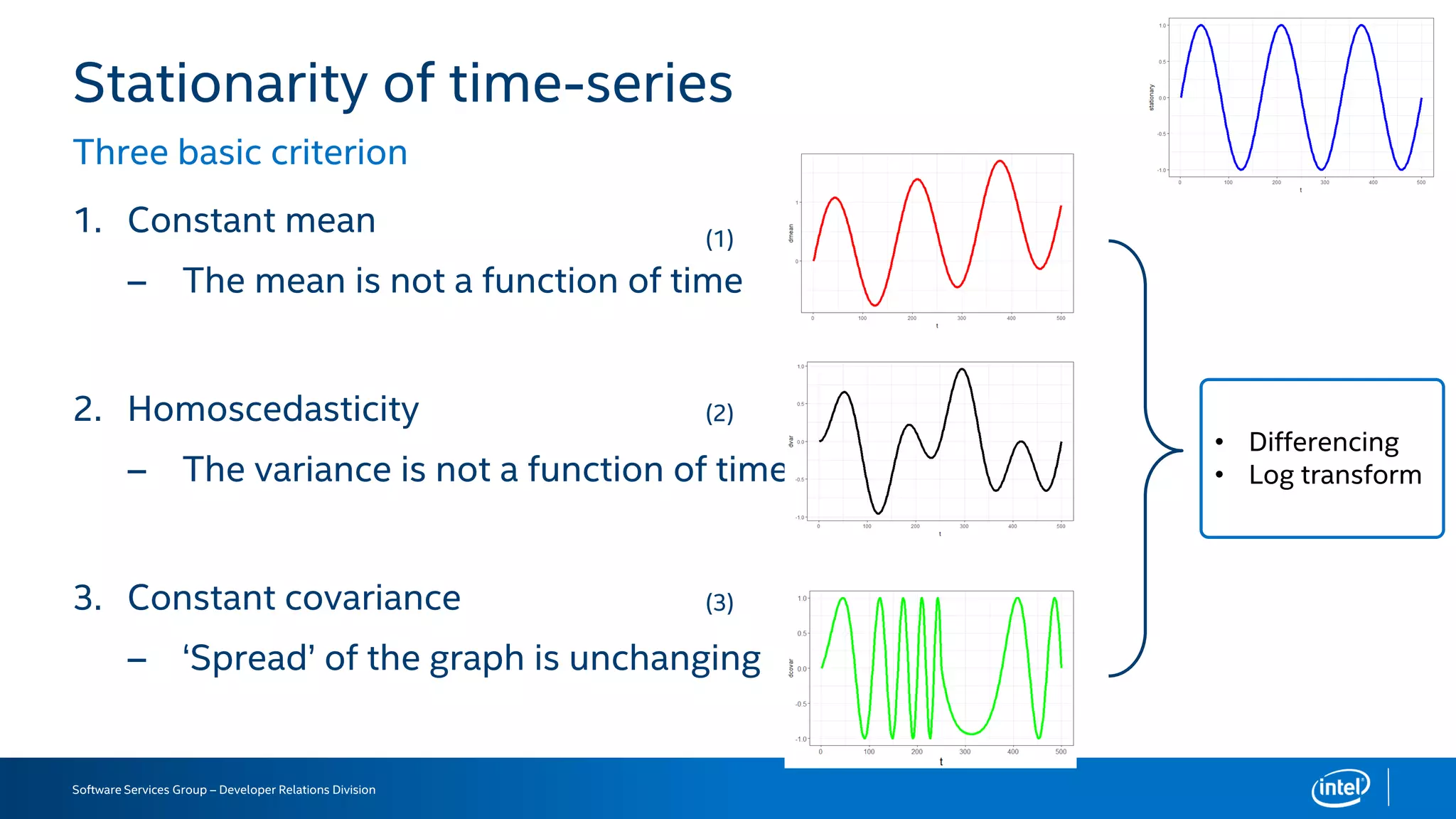

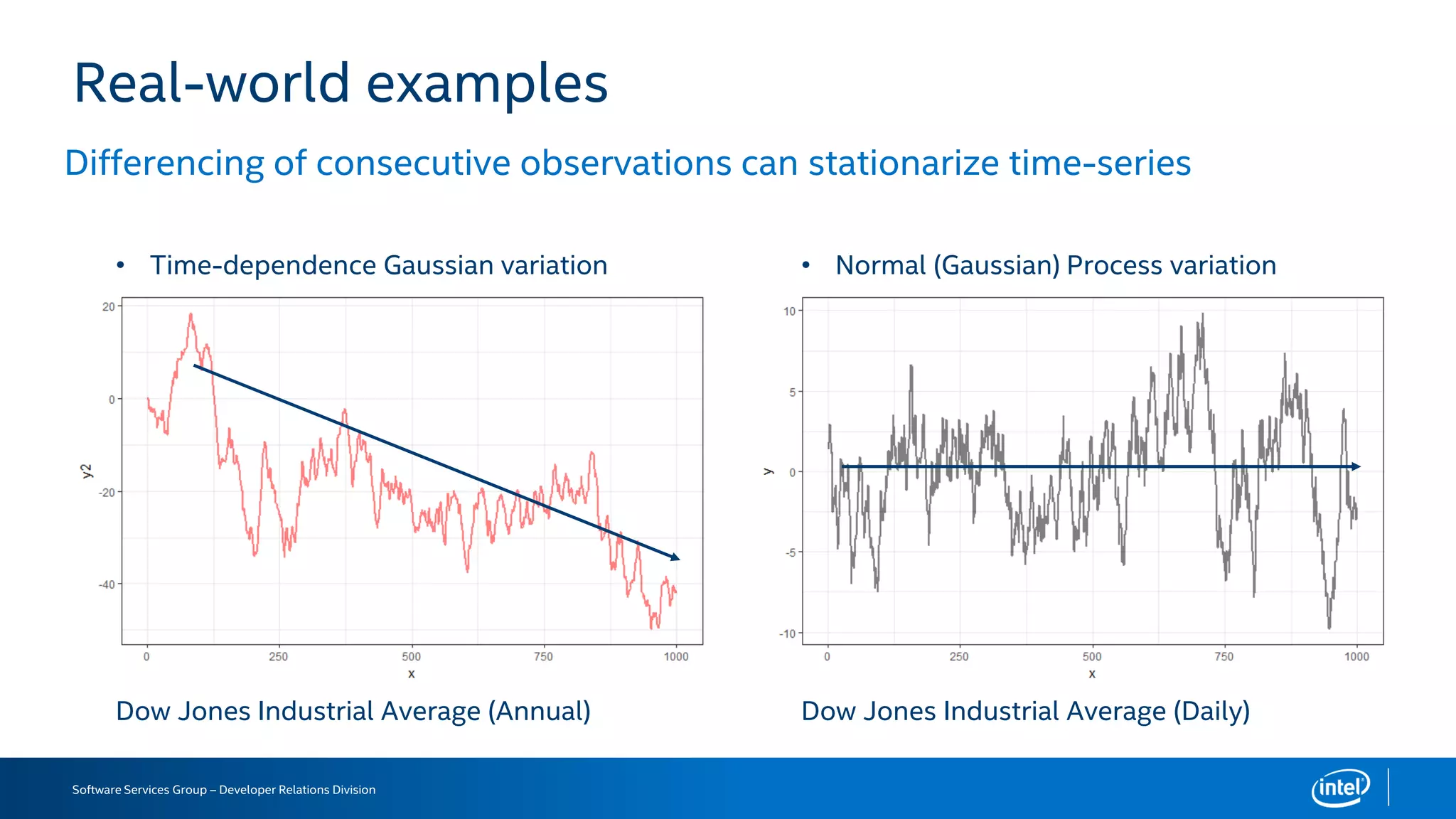

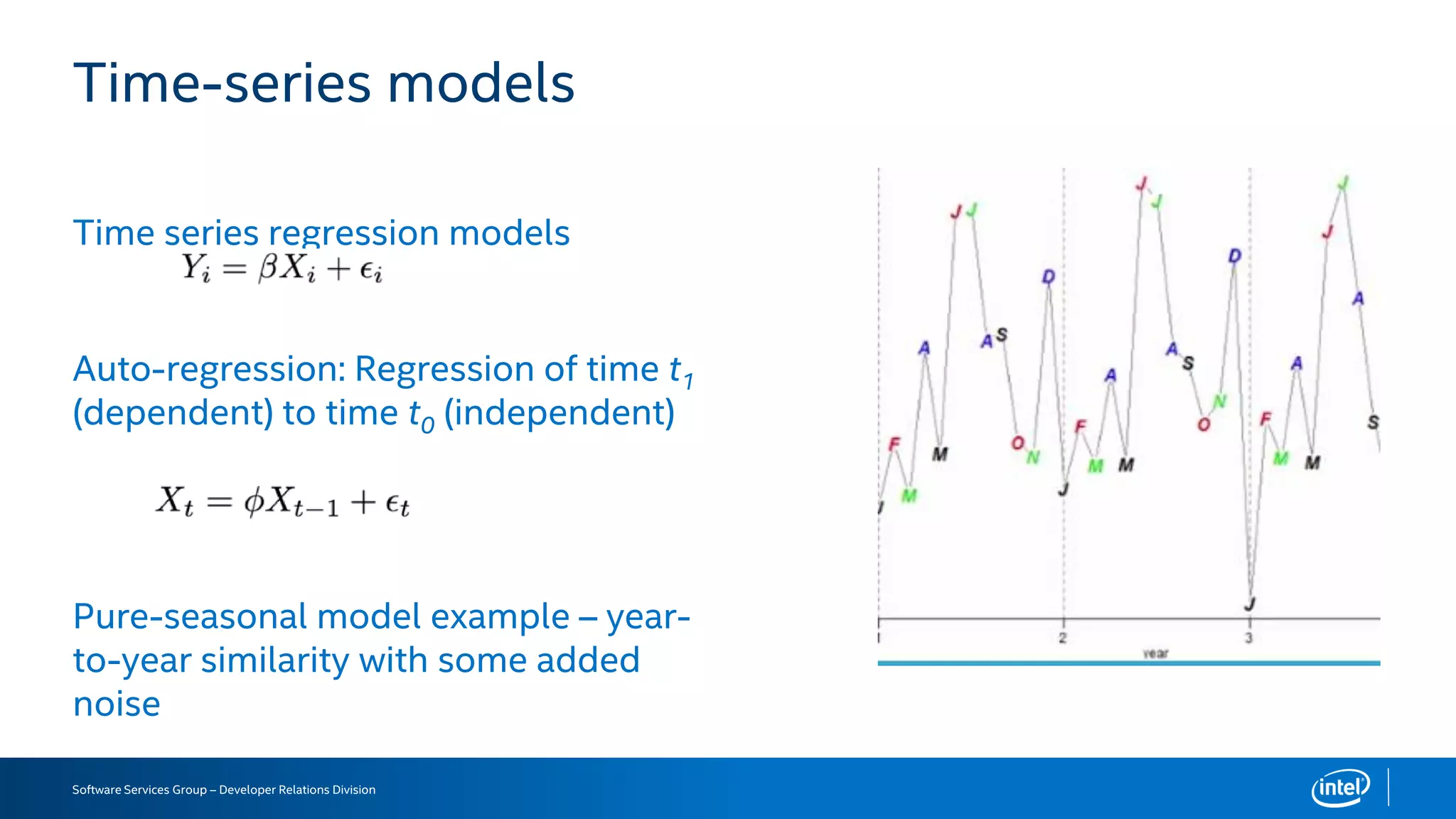

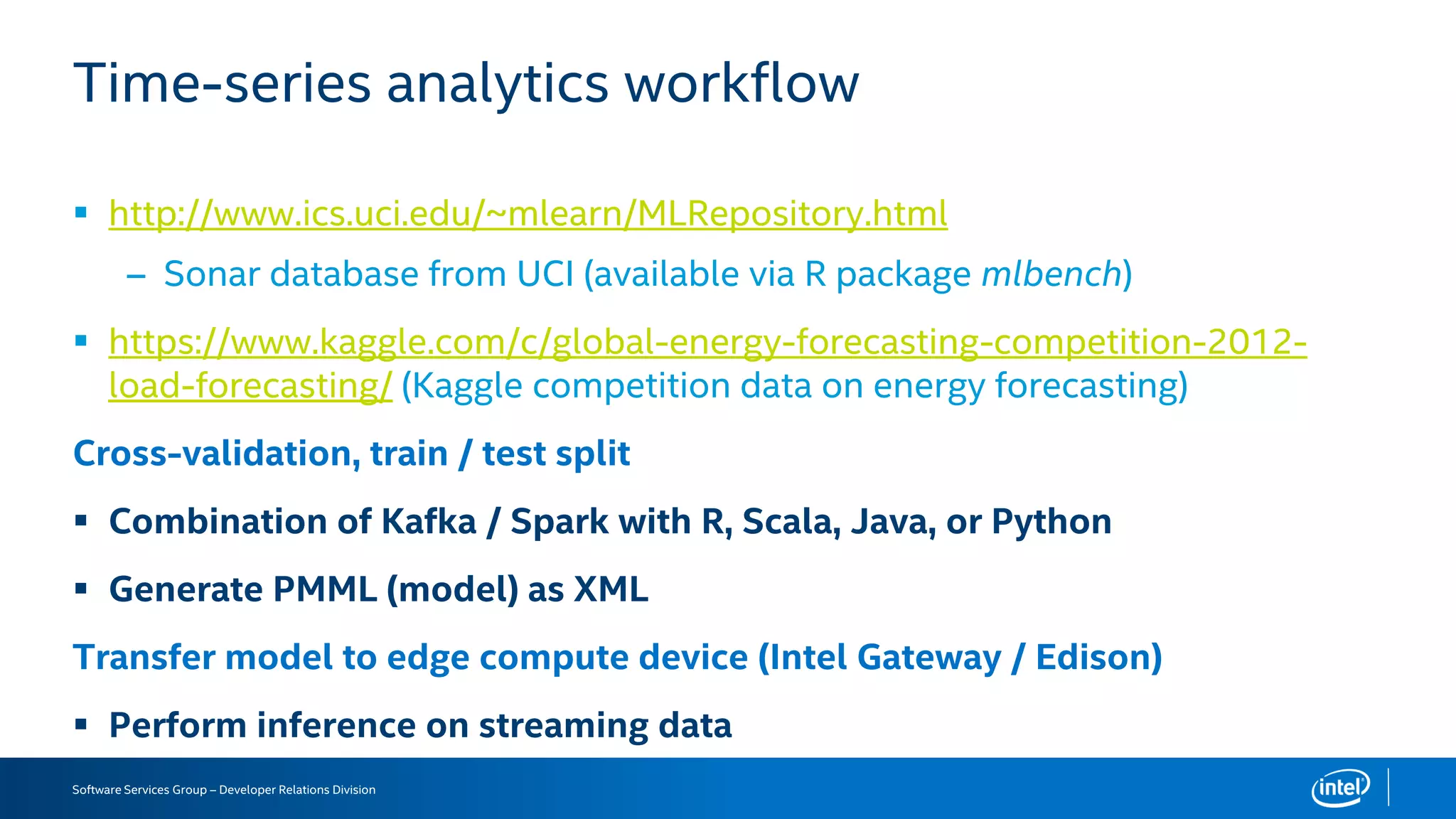

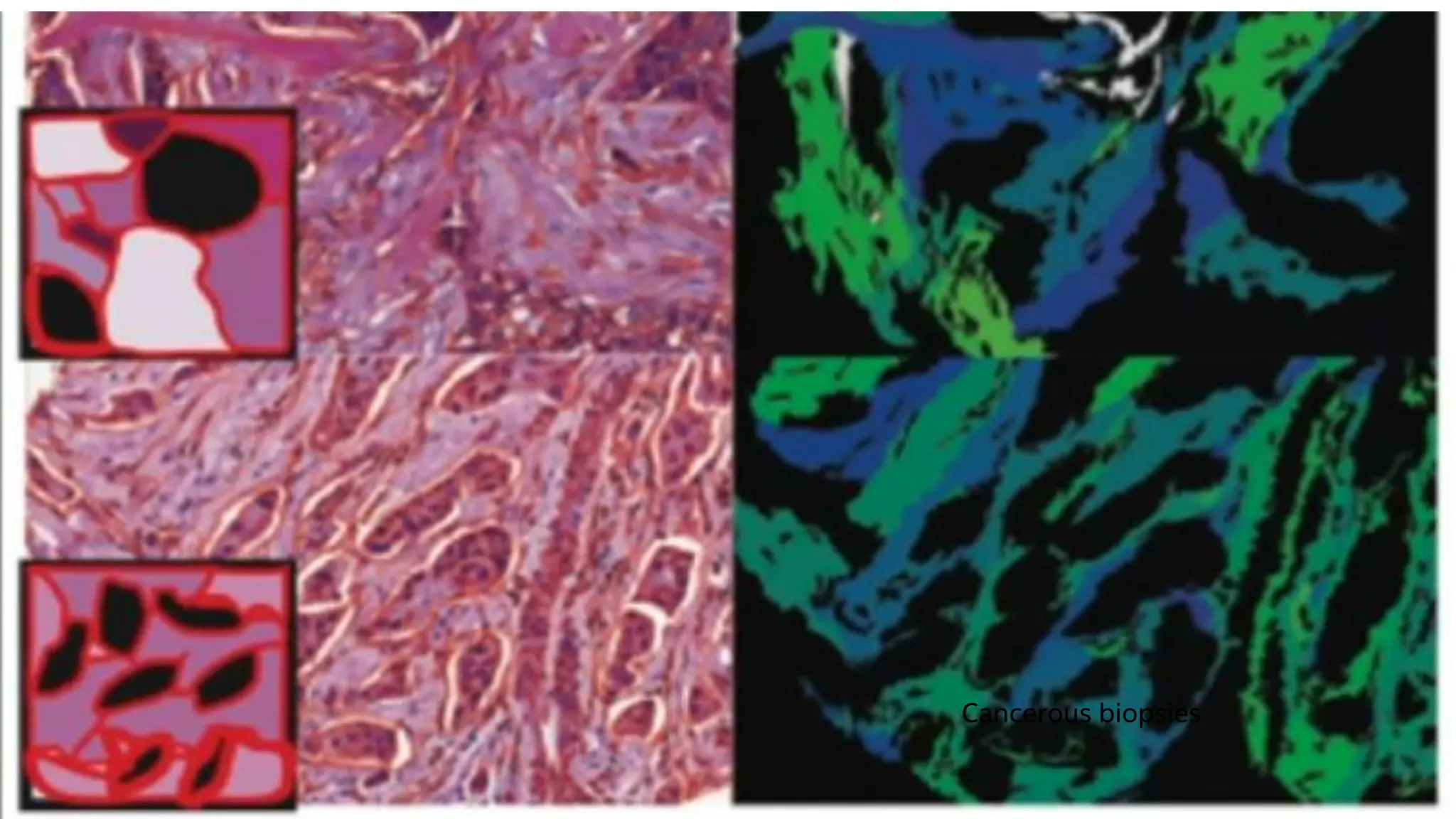

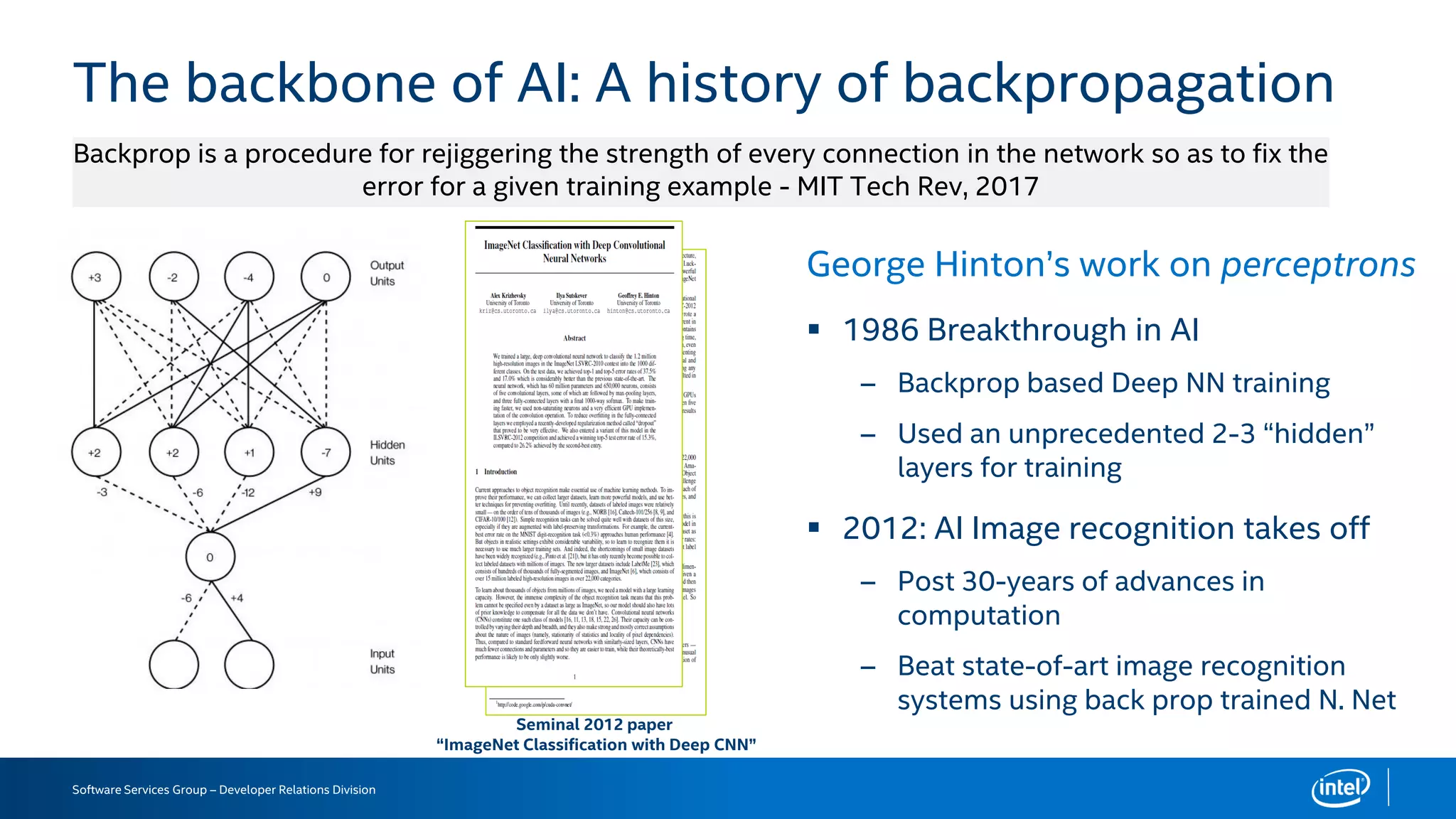

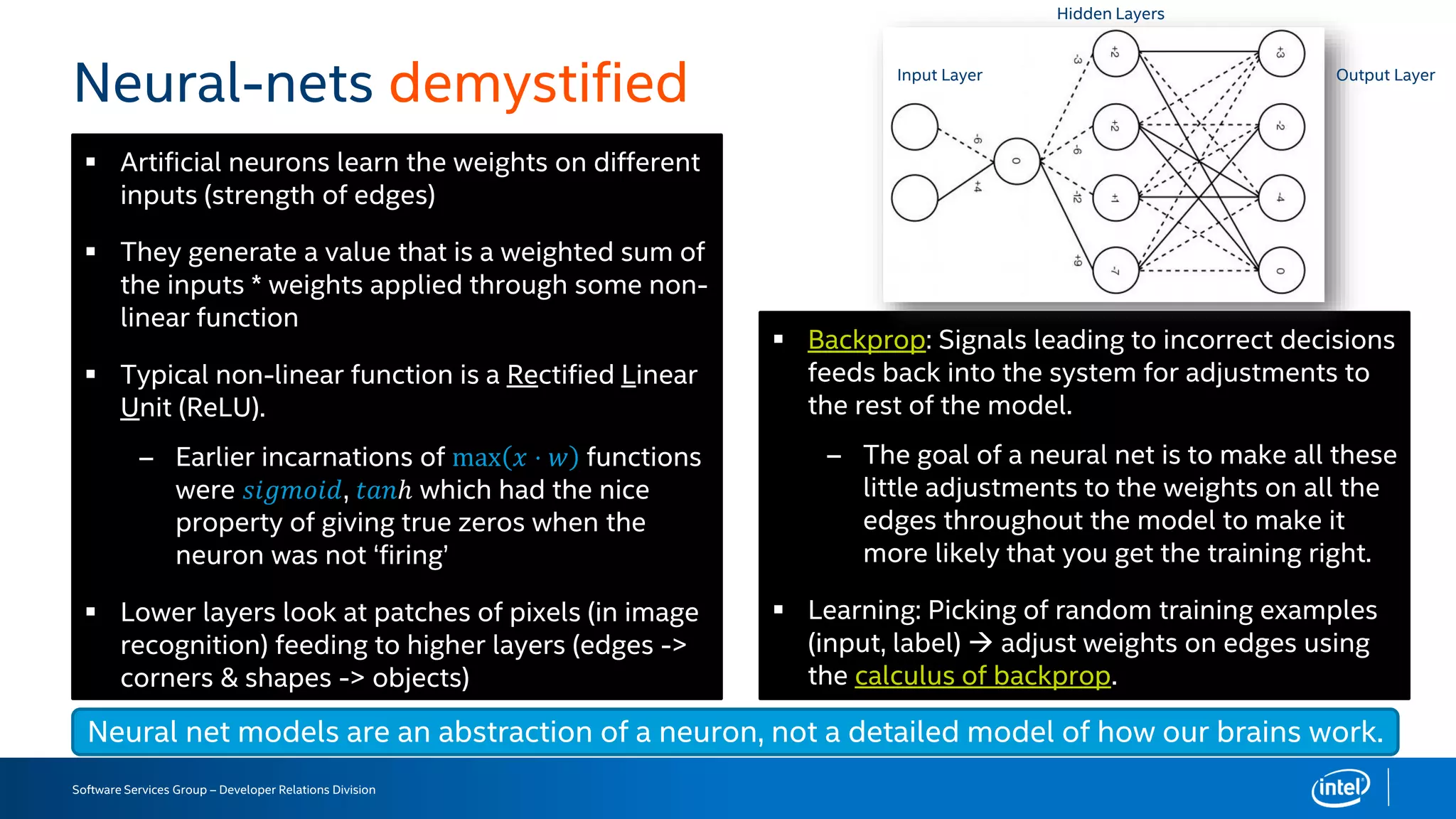

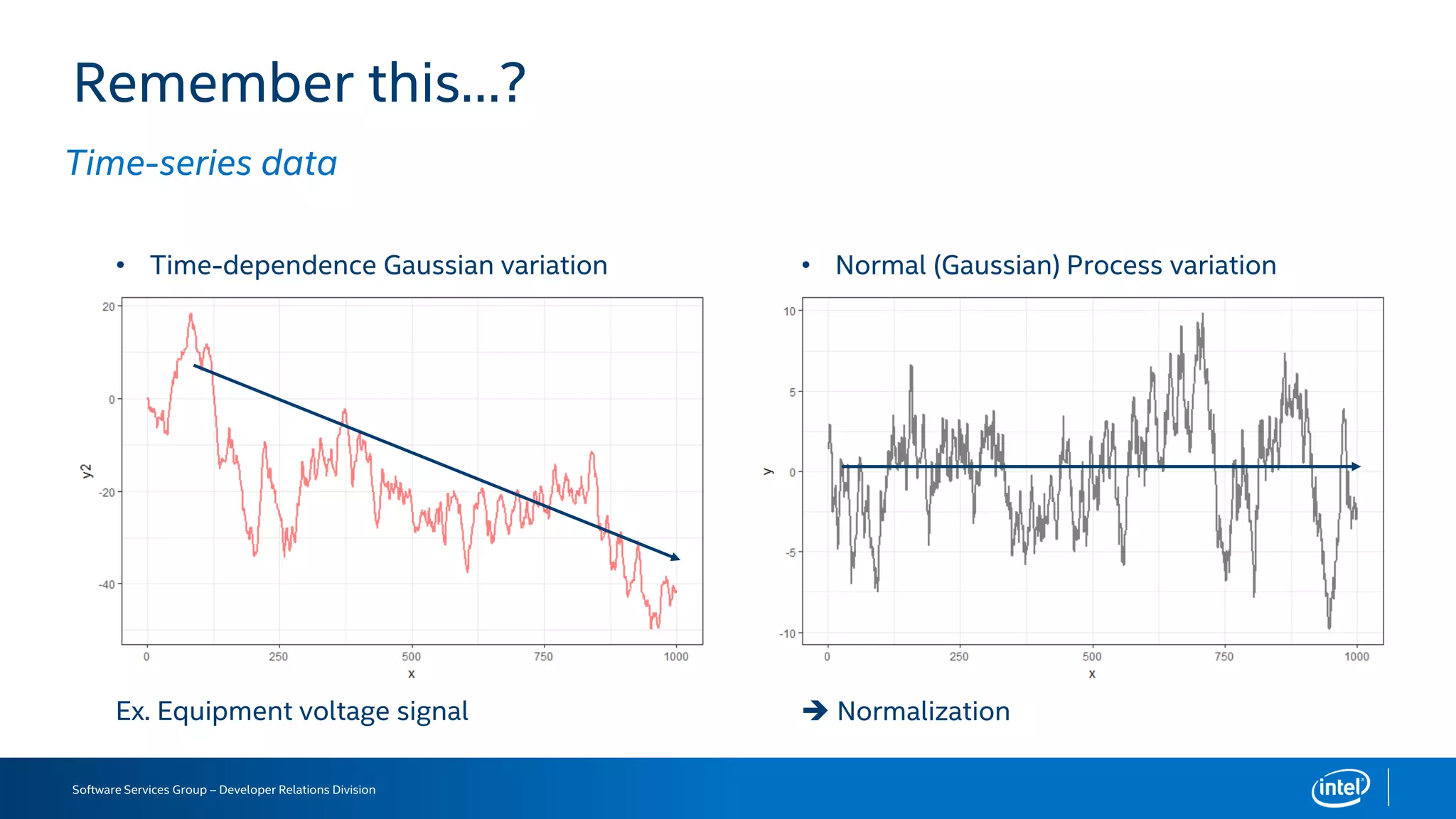

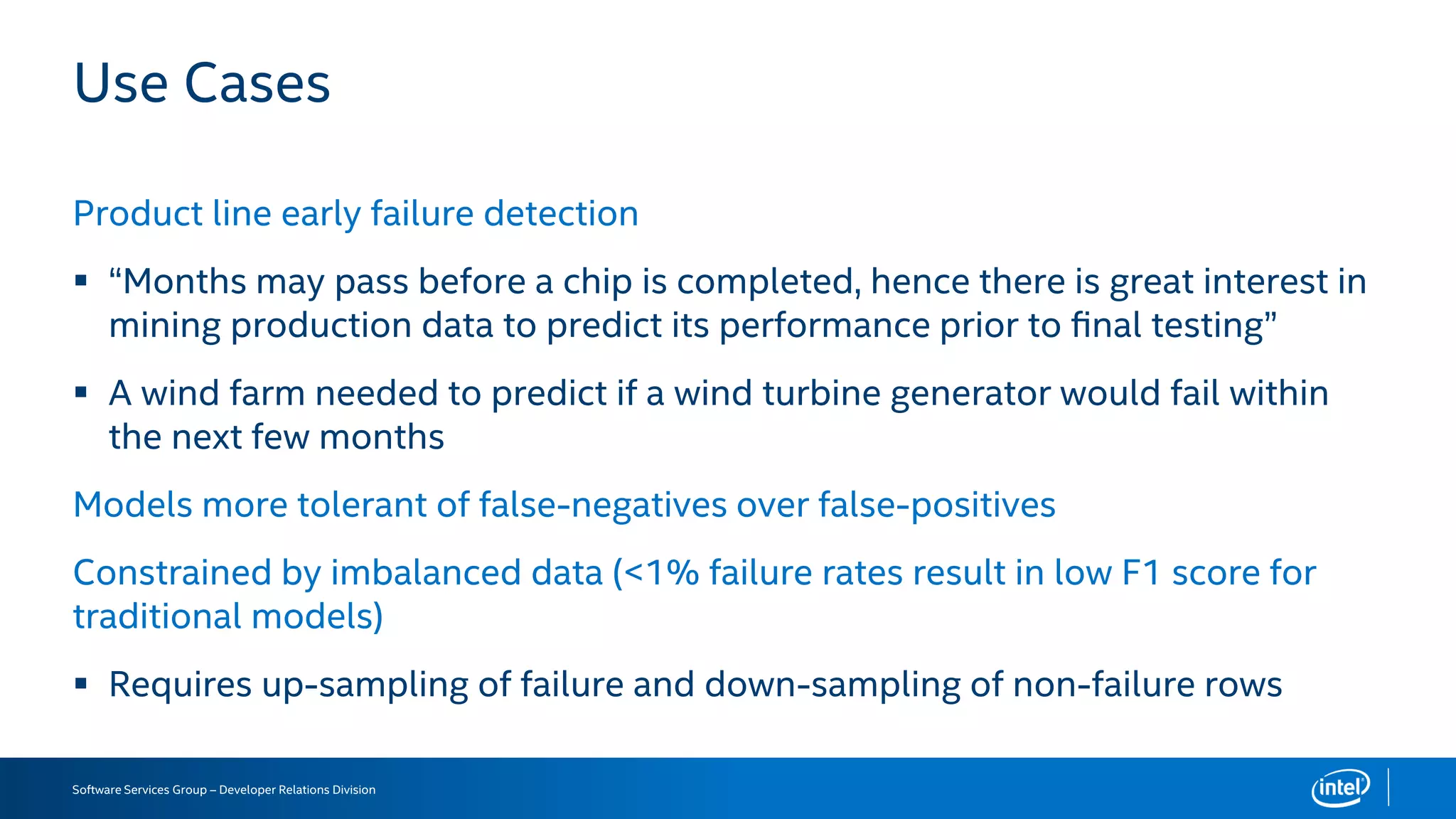

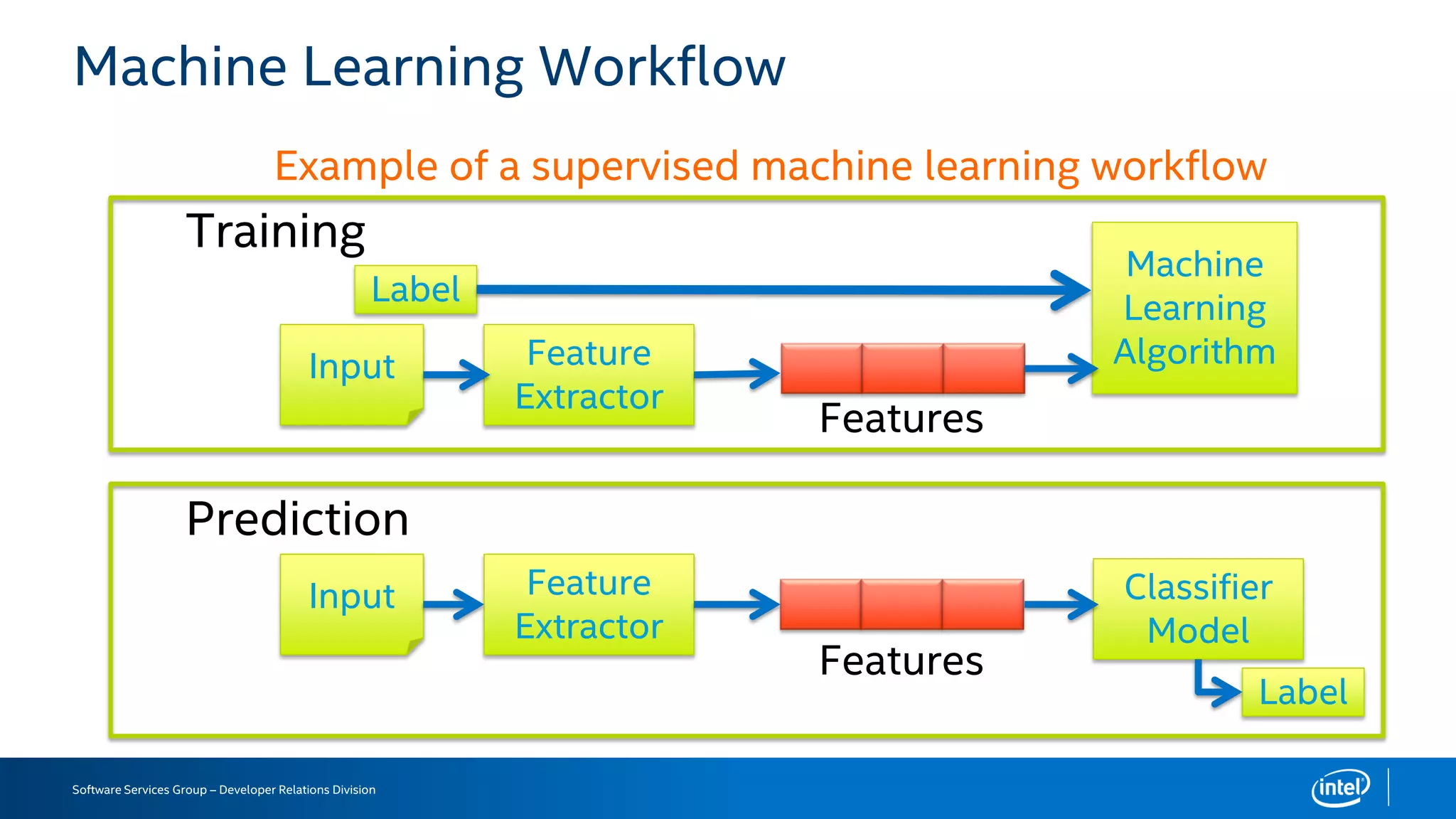

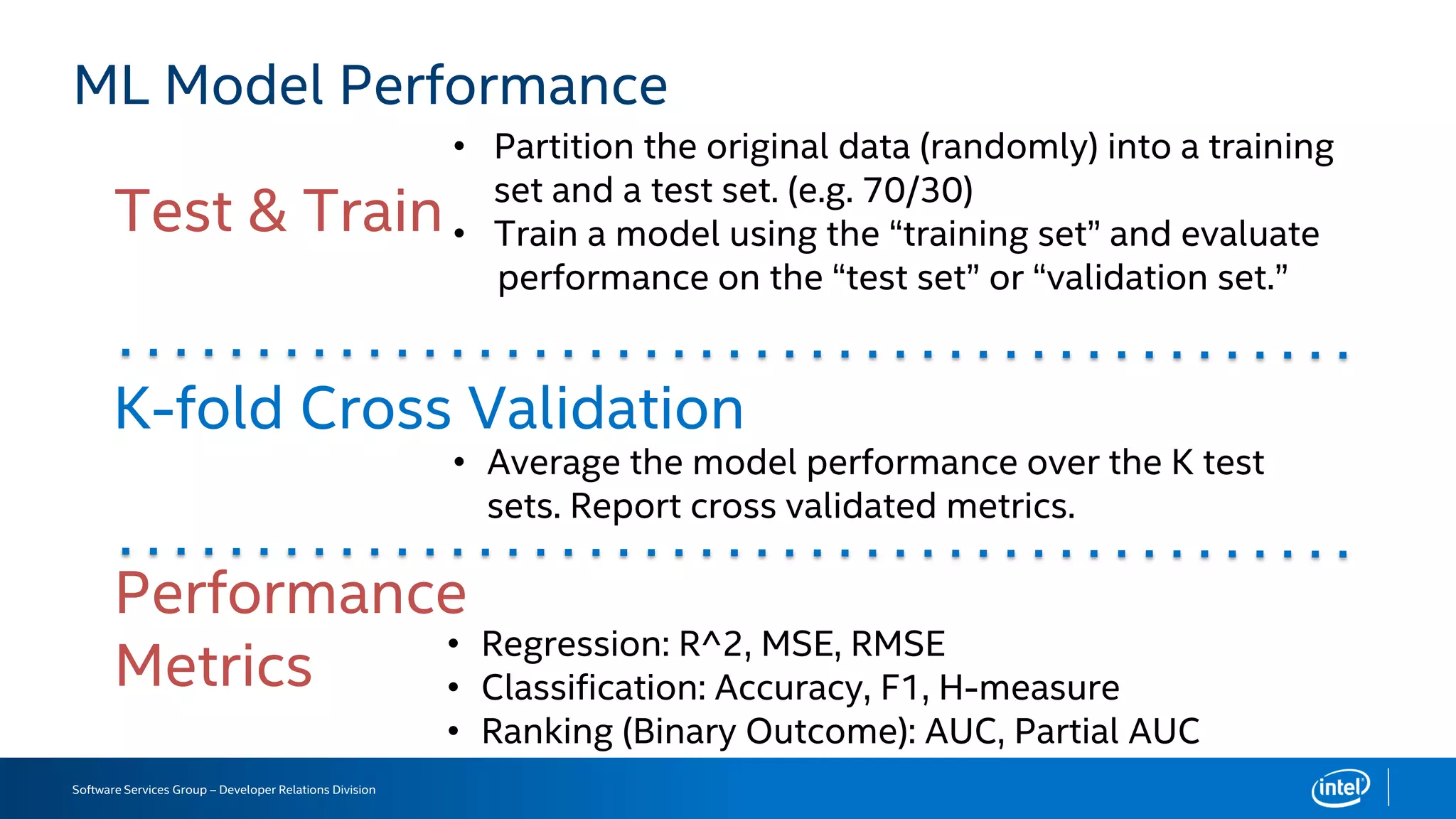

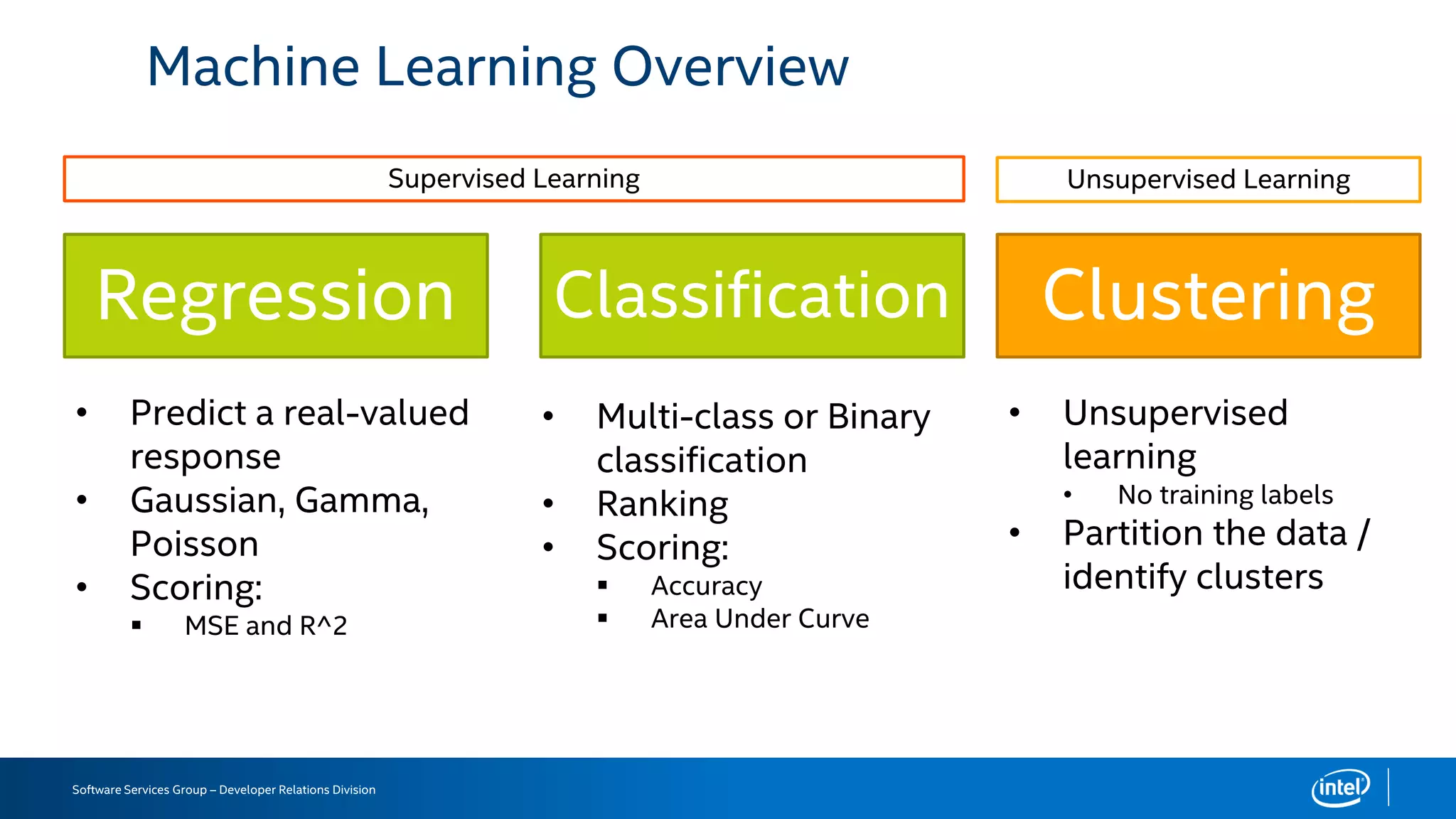

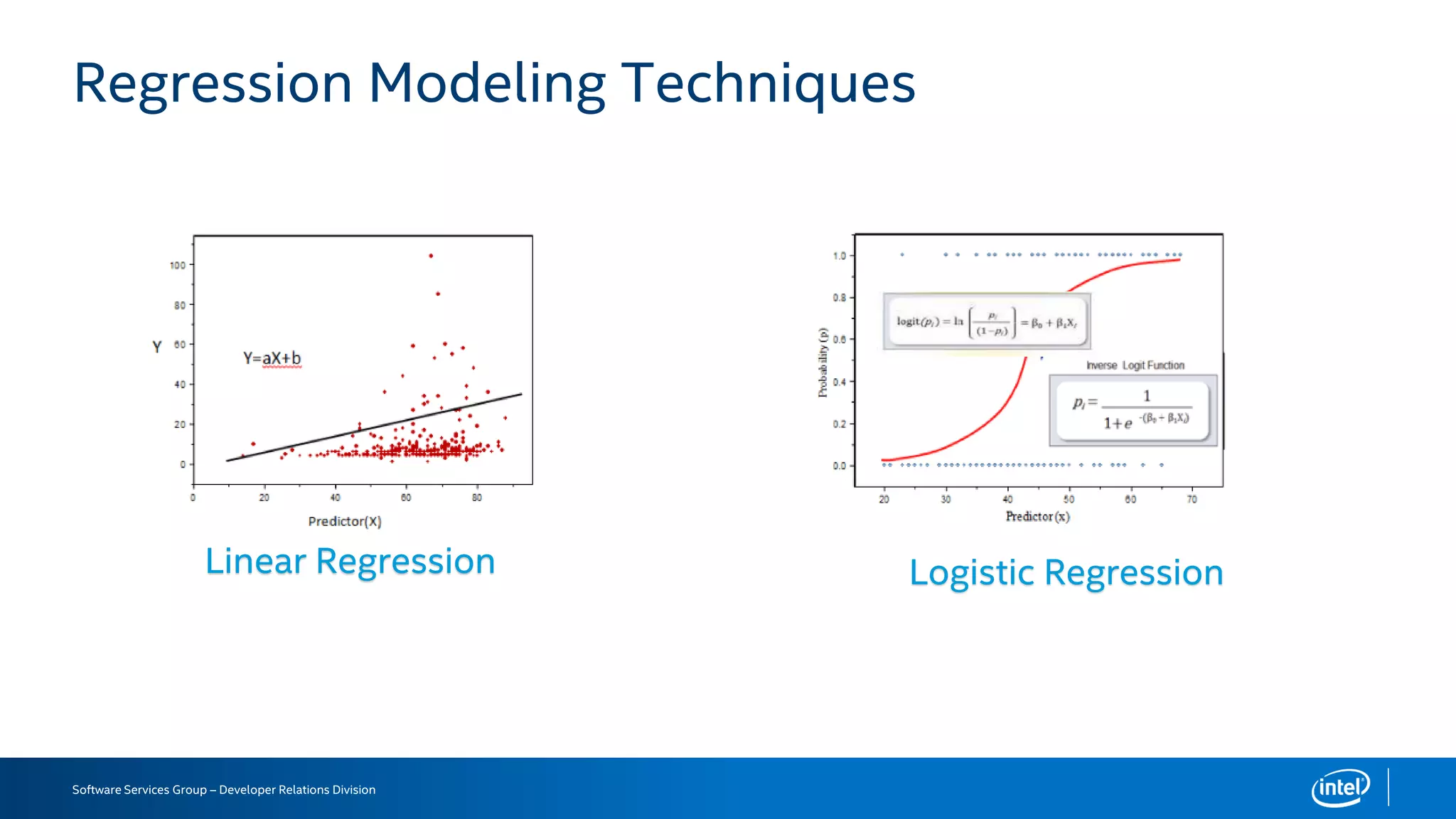

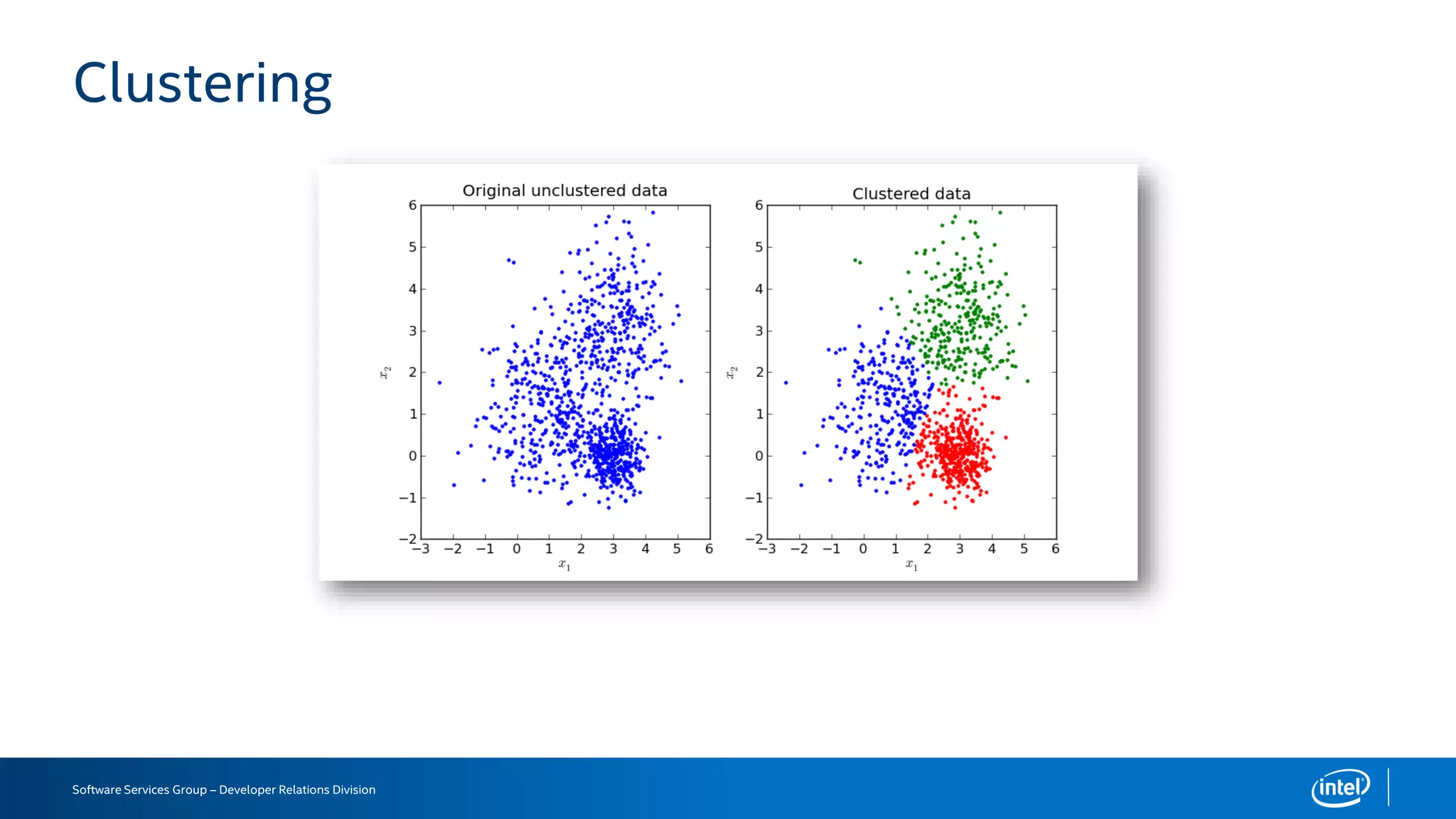

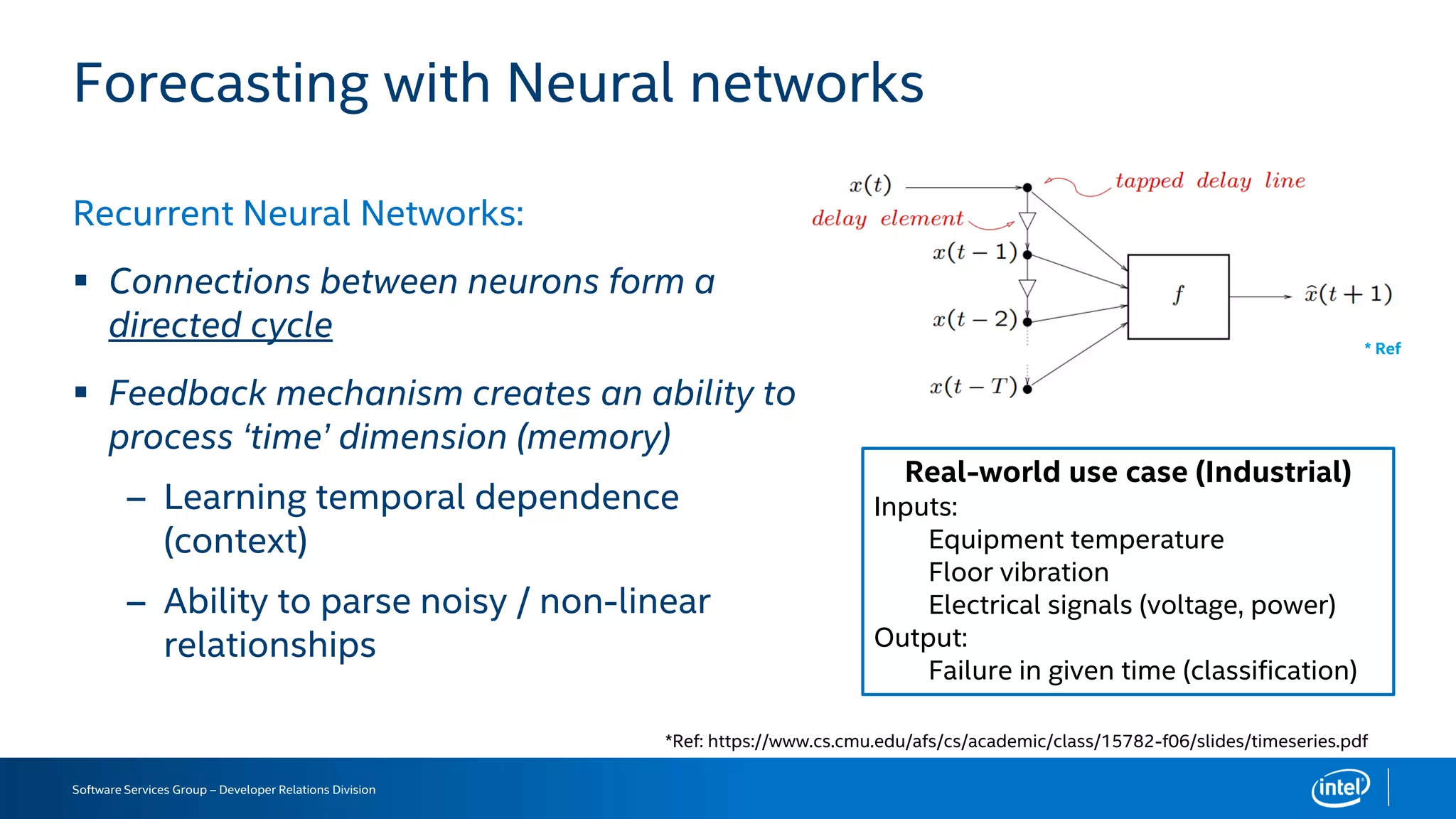

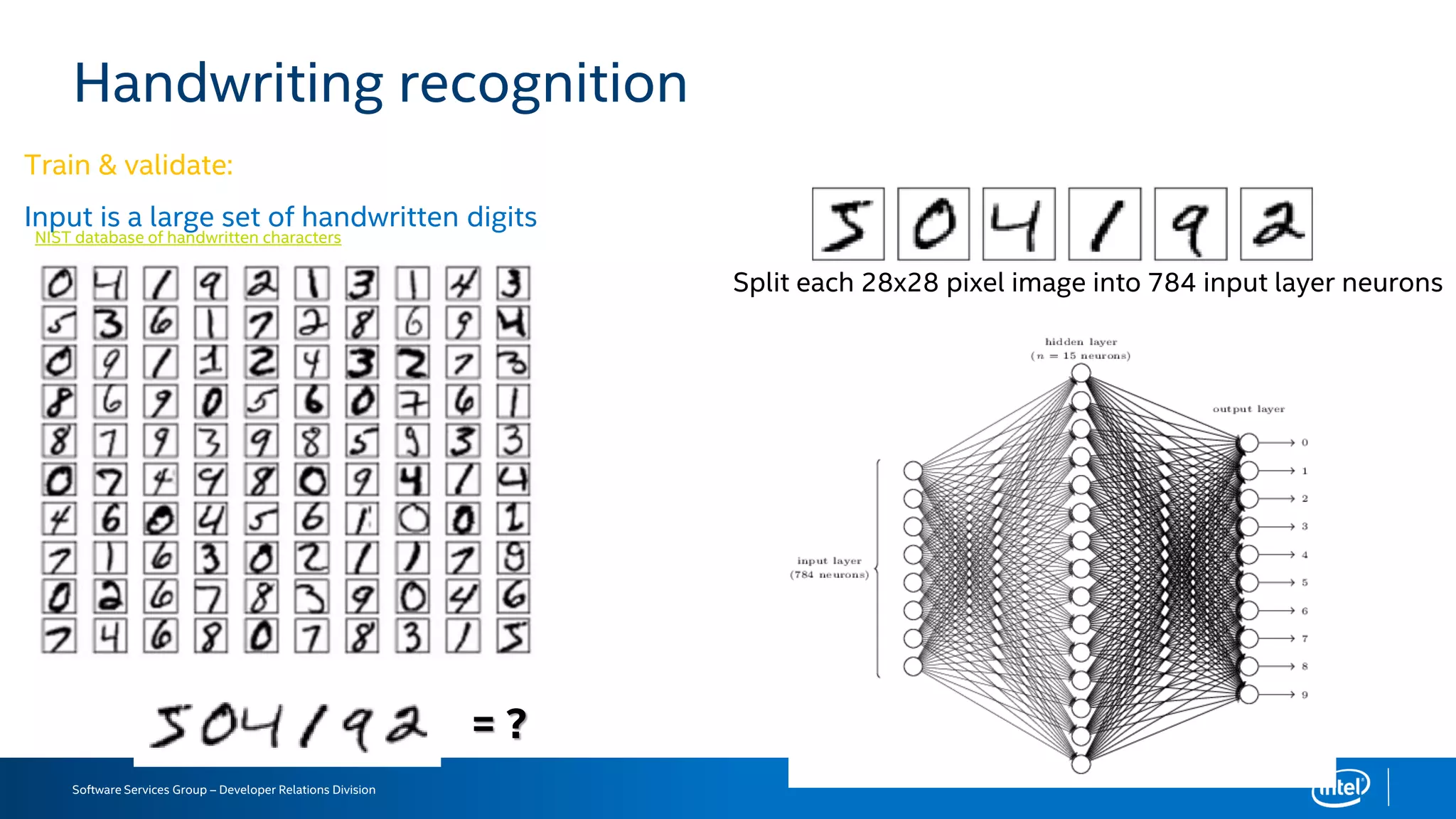

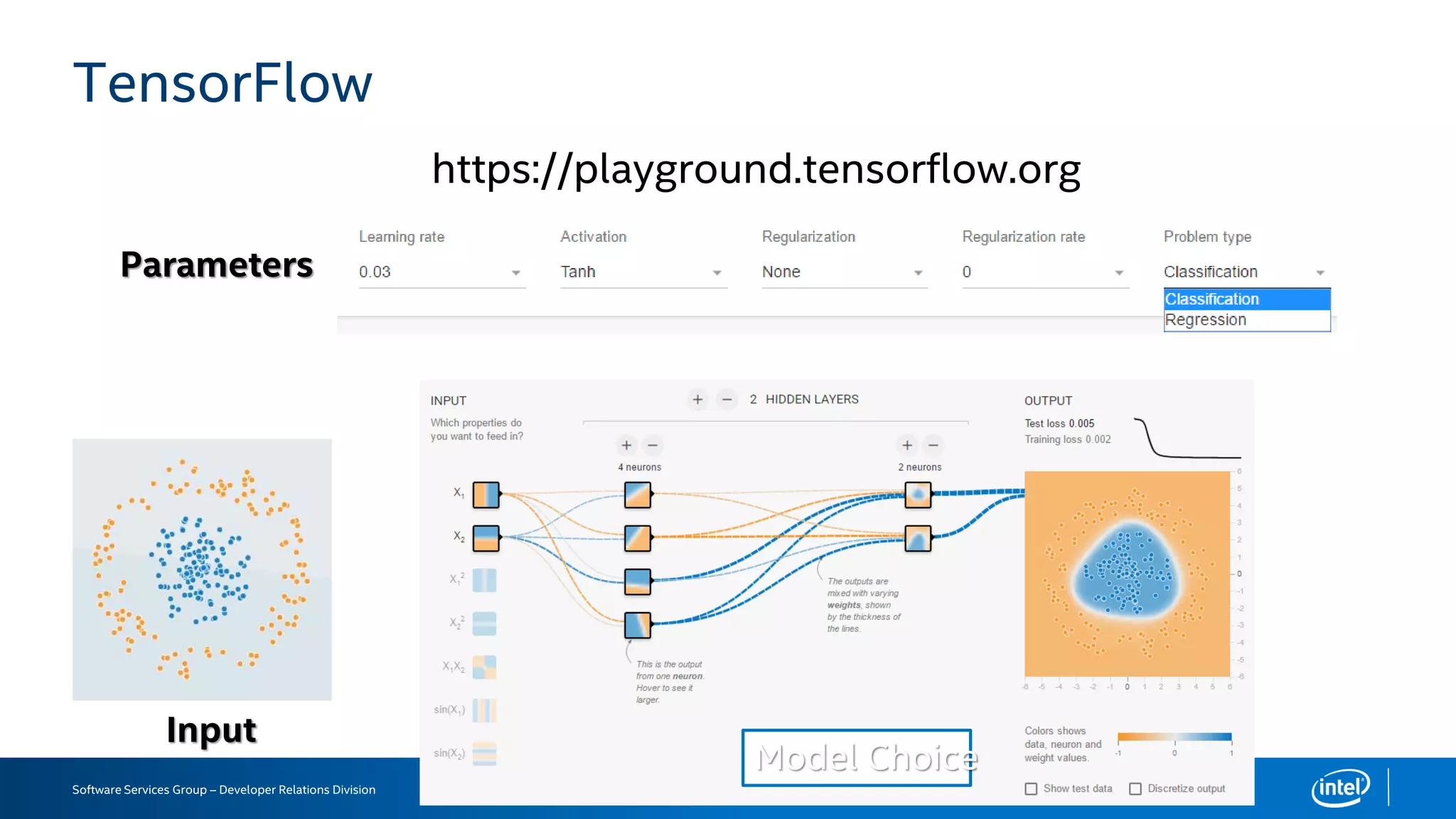

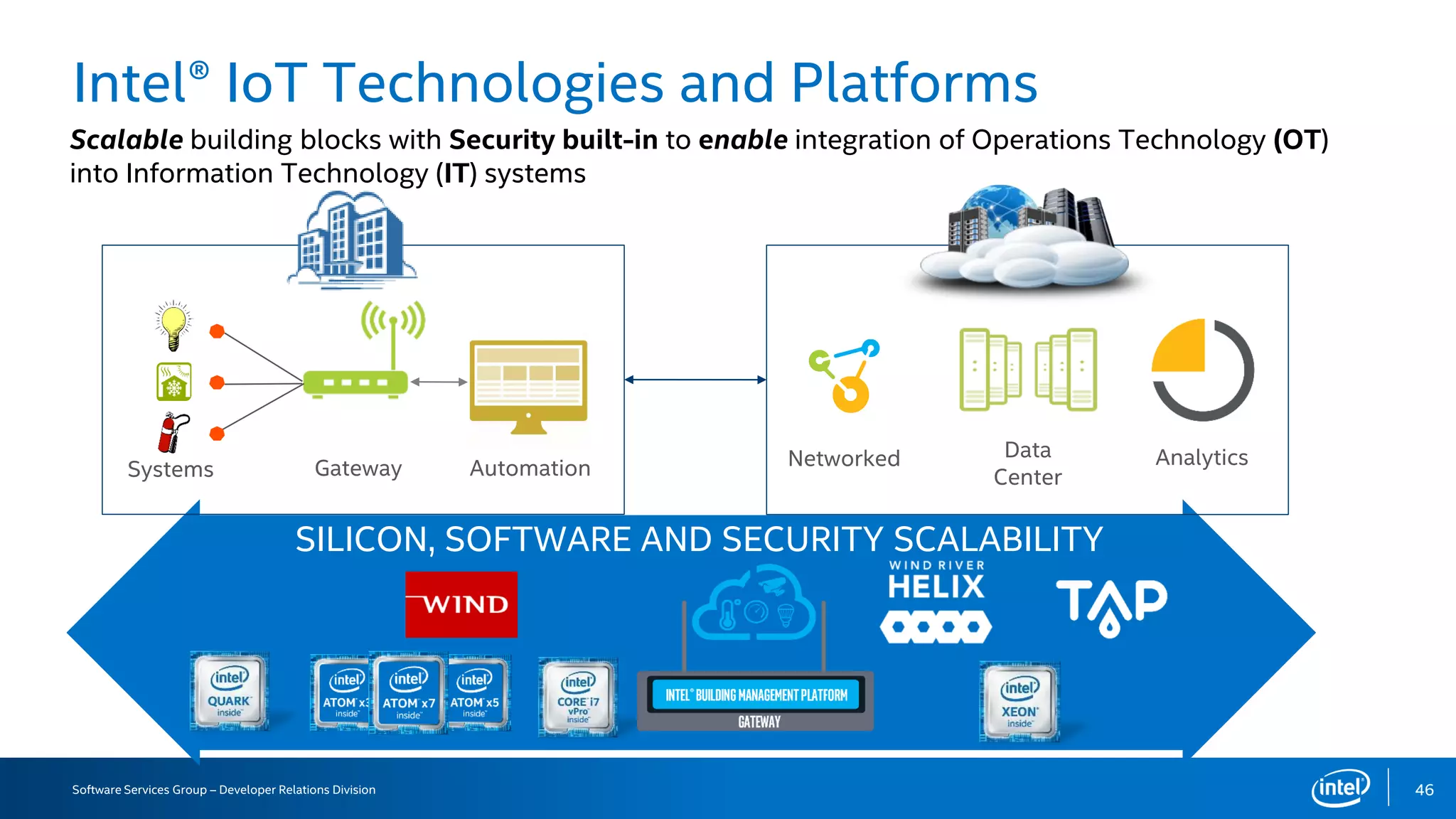

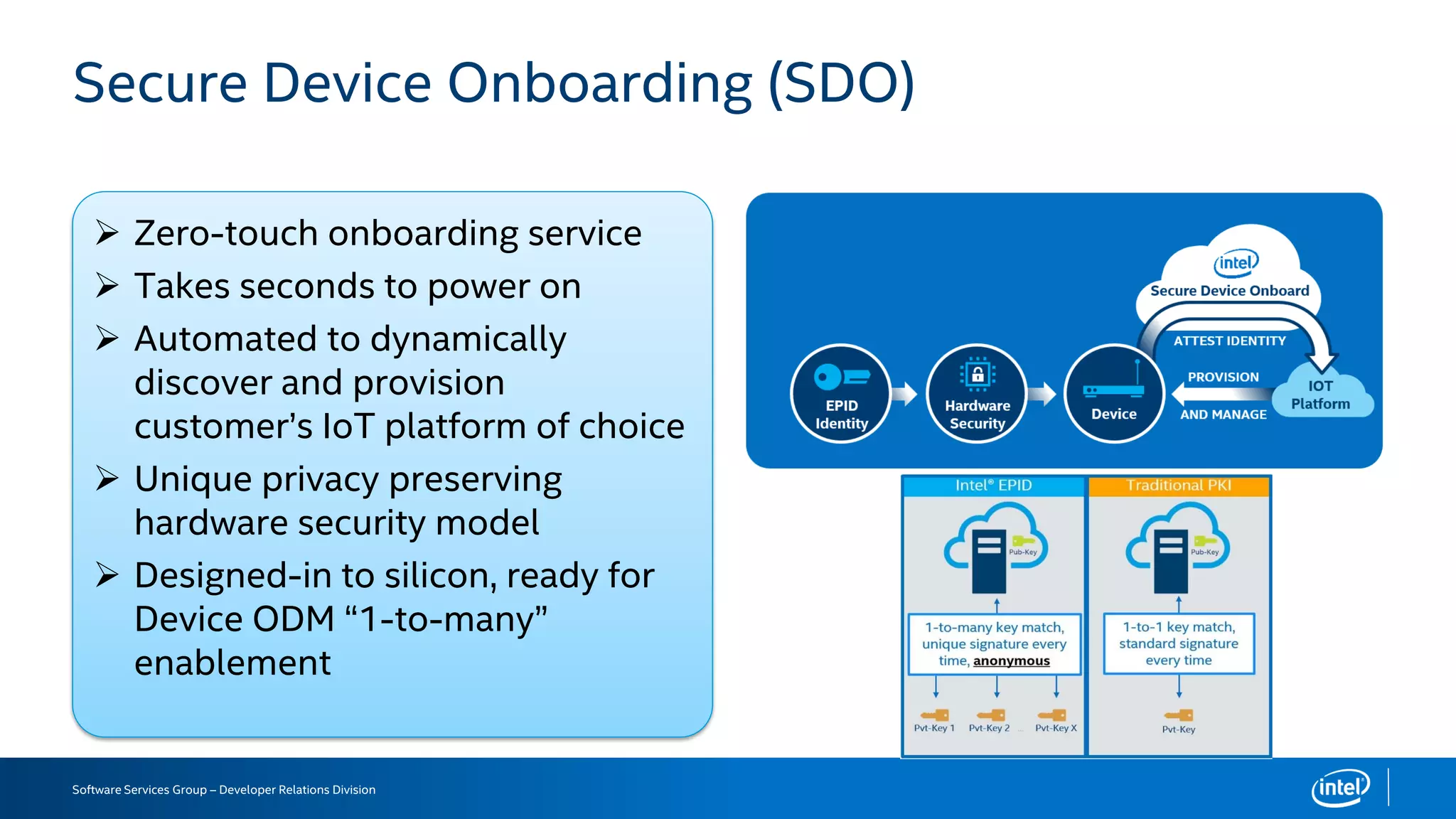

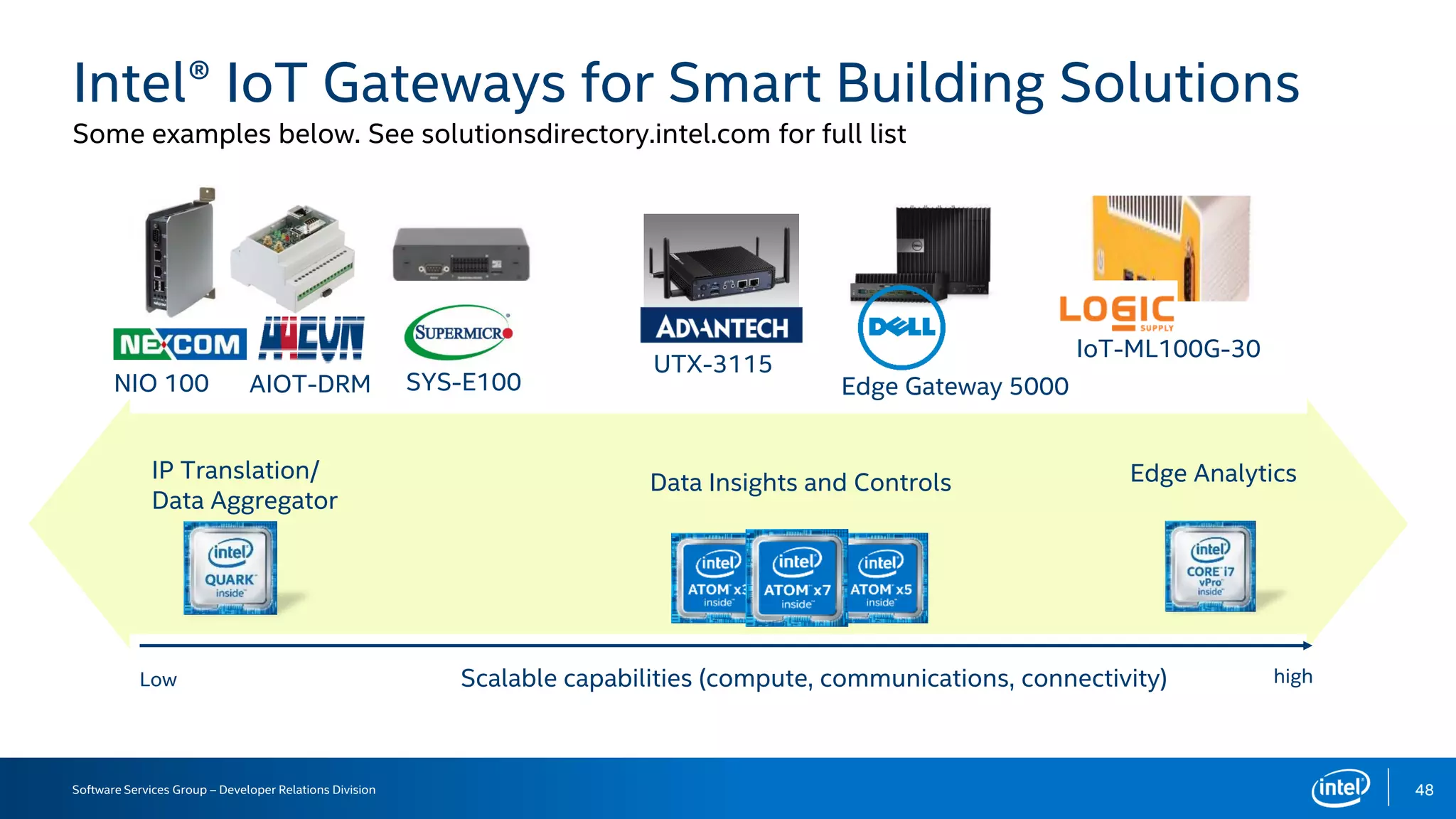

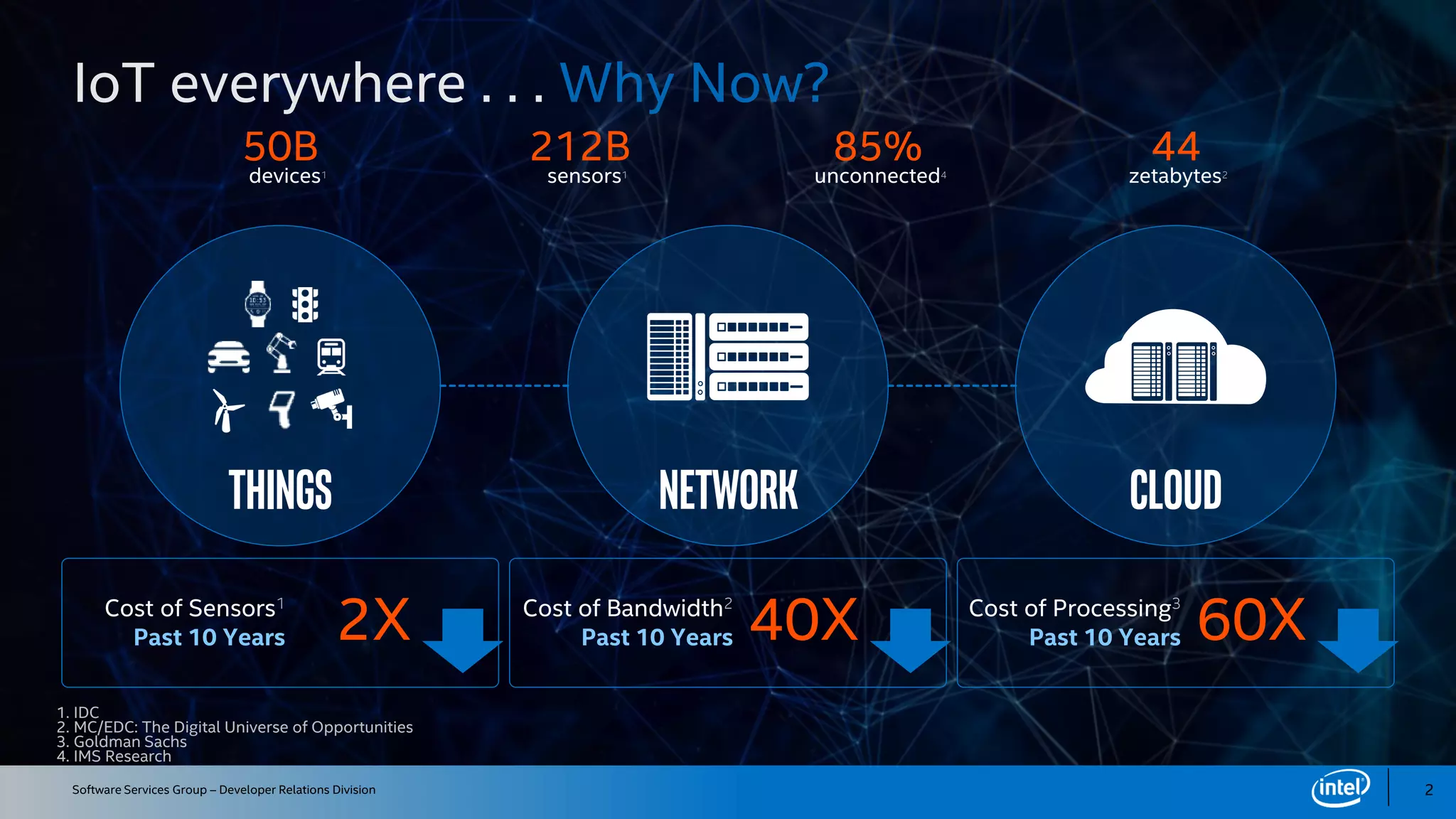

The document discusses applications of machine learning in the Internet of Things (IoT), highlighting financial, healthcare, and smart manufacturing use cases. It covers the architecture needed for IoT solutions, the importance of time-series data analysis, and the machine learning workflow, including model training and performance metrics. It also emphasizes Intel's commitment to IoT technologies and platforms to enhance integration and security.

![Slide 4: Karl Steinbuch quote](https://image.slidesharecdn.com/aplicationsformachinelearninginiot-intelglobaldevfestoct2017-171213235138/75/Aplications-for-machine-learning-in-IoT-4-2048.jpg)

“In a few decades time, computers will be interwoven into almost every industrial product.”

Karl Steinbuch, German computer science pioneer, 1966 [Ref: https://en.wikipedia.org/wiki/Karl_Steinbuch]