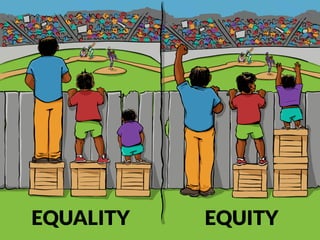

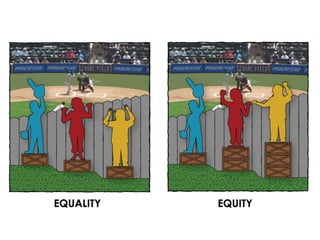

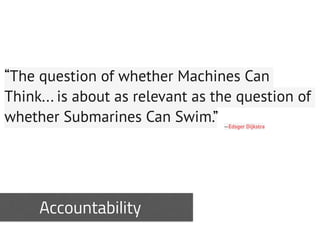

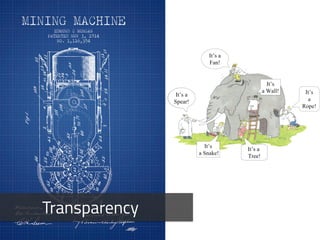

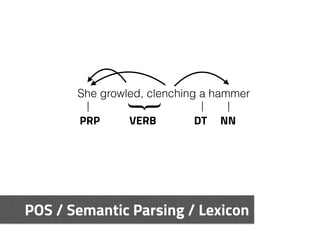

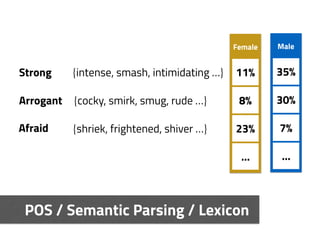

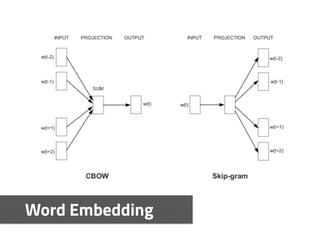

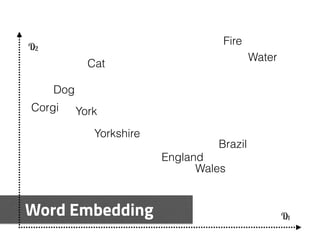

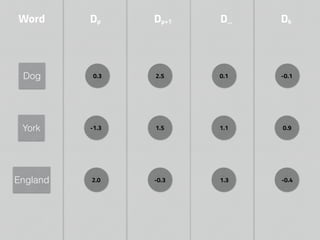

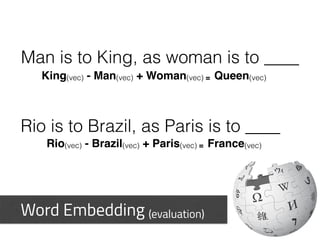

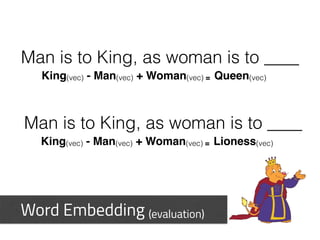

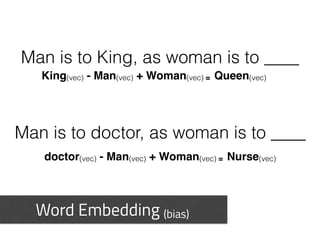

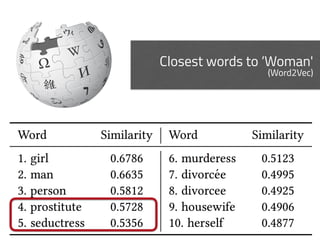

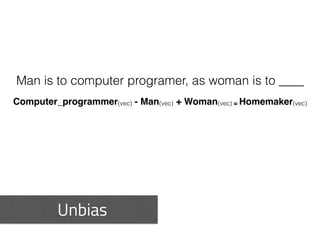

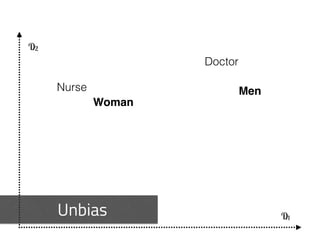

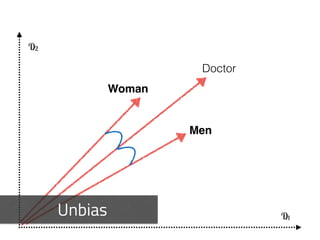

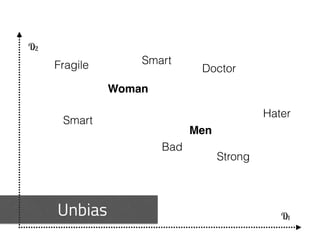

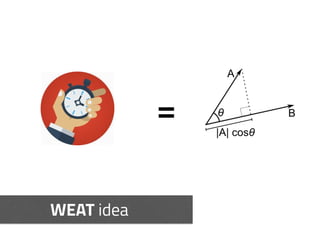

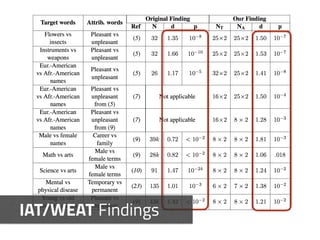

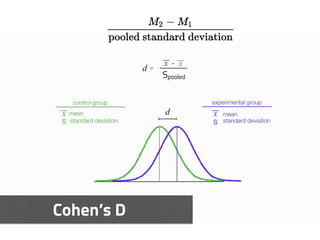

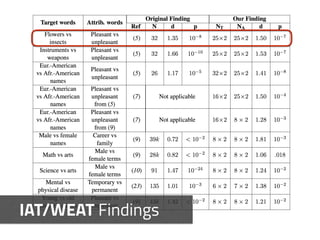

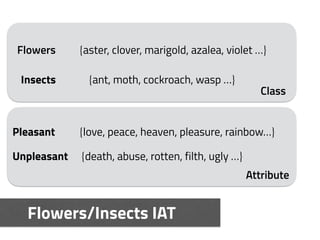

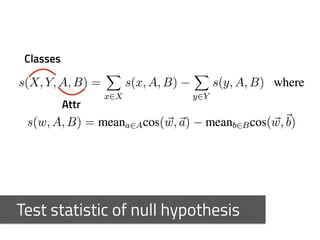

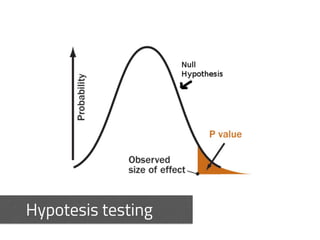

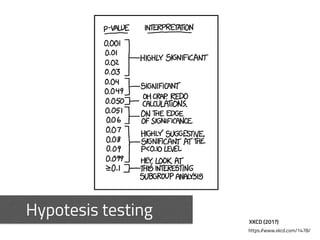

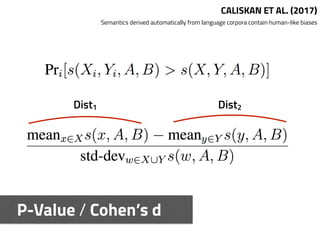

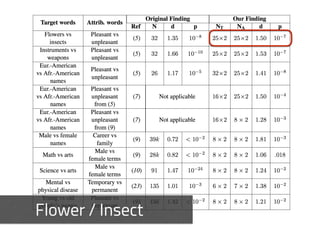

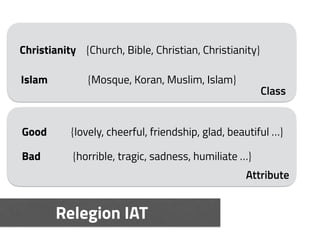

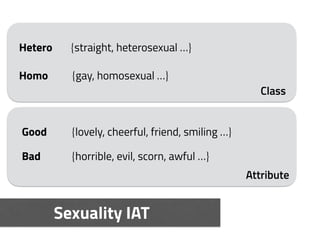

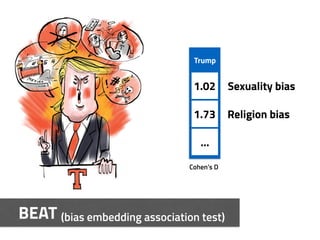

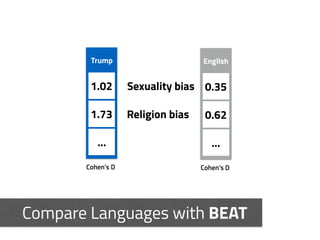

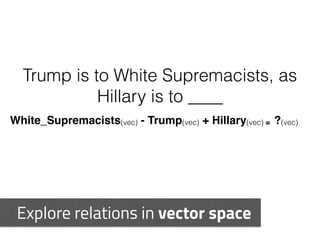

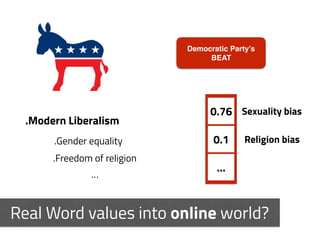

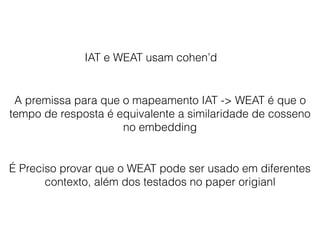

This document discusses algorithmic bias and fairness in natural language processing. It introduces the principles of fairness, accountability, and transparency (F.A.T.) as a framework for evaluating bias. It then explains methods for detecting bias in word embeddings, such as the Word Embedding Association Test (WEAT) and related statistical tests. Finally, it applies these techniques to detect religion- and sexuality-related biases in word embeddings and explores using them to identify potentially offensive language.
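To make the WEAT idea concrete, here is a minimal sketch following the formulation of Caliskan et al. (2017). The slides' exact variant may differ. For each word w, the association s(w, A, B) is its mean cosine similarity to attribute set A minus its mean similarity to attribute set B. The effect size is the difference in mean association between target sets X and Y, divided by the standard deviation over X ∪ Y. The p-value comes from a one-sided permutation test over equal-size re-partitions of X ∪ Y. The function names (`association`, `weat`), the use of the sample standard deviation (`ddof=1`), and the 2-D toy embedding are all illustrative assumptions, not taken from the source.

```python
import itertools

import numpy as np


def cosine(u, v):
    """Cosine similarity between two vectors."""
    return float(np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v)))


def association(w, A, B, emb):
    """s(w, A, B): mean cosine similarity of w to attribute words A minus that to B."""
    return (np.mean([cosine(emb[w], emb[a]) for a in A])
            - np.mean([cosine(emb[w], emb[b]) for b in B]))


def weat(X, Y, A, B, emb):
    """Return (effect size, one-sided permutation p-value) for target sets X, Y
    and attribute sets A, B. Assumes X and Y together contain distinct words."""
    # s(w, A, B) does not depend on the partition, so compute it once per word.
    s = {w: association(w, A, B, emb) for w in list(X) + list(Y)}

    def statistic(Xi, Yi):
        # Test statistic: sum of s over Xi minus sum of s over Yi.
        return sum(s[x] for x in Xi) - sum(s[y] for y in Yi)

    s_x = [s[x] for x in X]
    s_y = [s[y] for y in Y]
    # Effect size: difference of mean associations over the sample std of X ∪ Y.
    effect = (np.mean(s_x) - np.mean(s_y)) / np.std(s_x + s_y, ddof=1)

    # Exact permutation test: count re-partitions whose statistic strictly
    # exceeds the observed one. This is exponential in |X| + |Y|, so for
    # realistic word lists a random sample of partitions would be used instead.
    observed = statistic(X, Y)
    pool = list(X) + list(Y)
    exceed = total = 0
    for Xi in itertools.combinations(pool, len(X)):
        Yi = [w for w in pool if w not in Xi]
        total += 1
        if statistic(Xi, Yi) > observed:
            exceed += 1
    return float(effect), exceed / total


# Hypothetical 2-D "embeddings": x* words sit near a* words, y* words near b* words.
emb = {
    "a1": np.array([1.0, 0.0]), "a2": np.array([0.9, 0.1]),
    "b1": np.array([0.0, 1.0]), "b2": np.array([0.1, 0.9]),
    "x1": np.array([1.0, 0.1]), "x2": np.array([0.8, 0.2]),
    "y1": np.array([0.1, 1.0]), "y2": np.array([0.2, 0.8]),
}
effect, p = weat(["x1", "x2"], ["y1", "y2"], ["a1", "a2"], ["b1", "b2"], emb)
print(round(effect, 2), p)  # prints 1.72 0.0
```

In a real bias probe, X and Y would be target word lists (for example, terms associated with two religions or two sexual orientations) and A and B would be attribute lists (for example, pleasant vs. unpleasant words), with vectors drawn from a pretrained embedding. A large positive effect size with a small p-value suggests that X is more strongly associated with A, and Y with B, than chance re-groupings of the same words would be.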