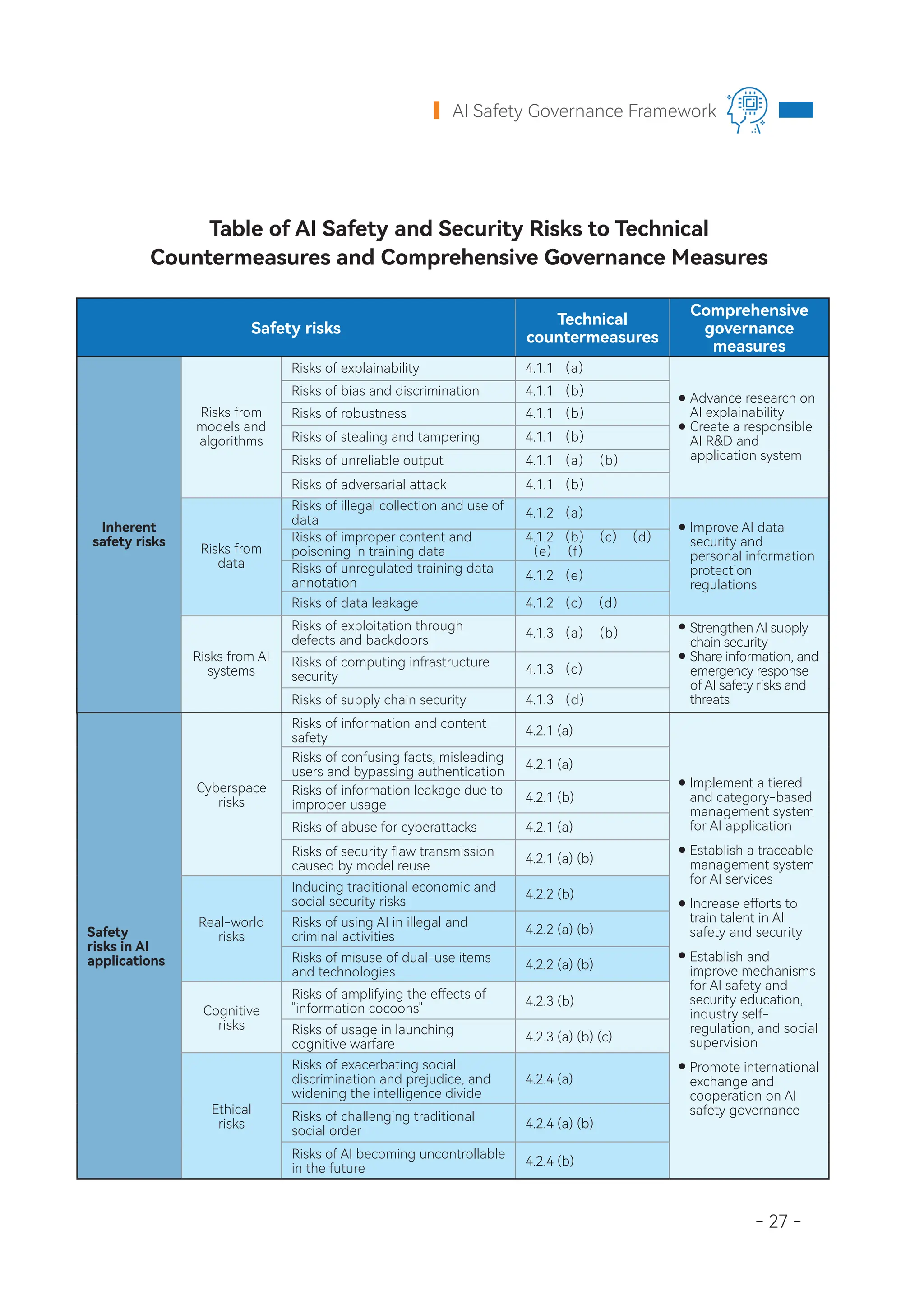

The document outlines a comprehensive framework for Artificial Intelligence (AI) safety governance, emphasizing collaboration among stakeholders to address inherent safety risks and prevent misuse. It categorizes the risks arising from AI technology and its applications, including bias, data security risks, and malicious use, and proposes technological and management measures to mitigate them. The goal is to develop AI responsibly and to distribute its benefits equitably while safeguarding societal and individual interests.