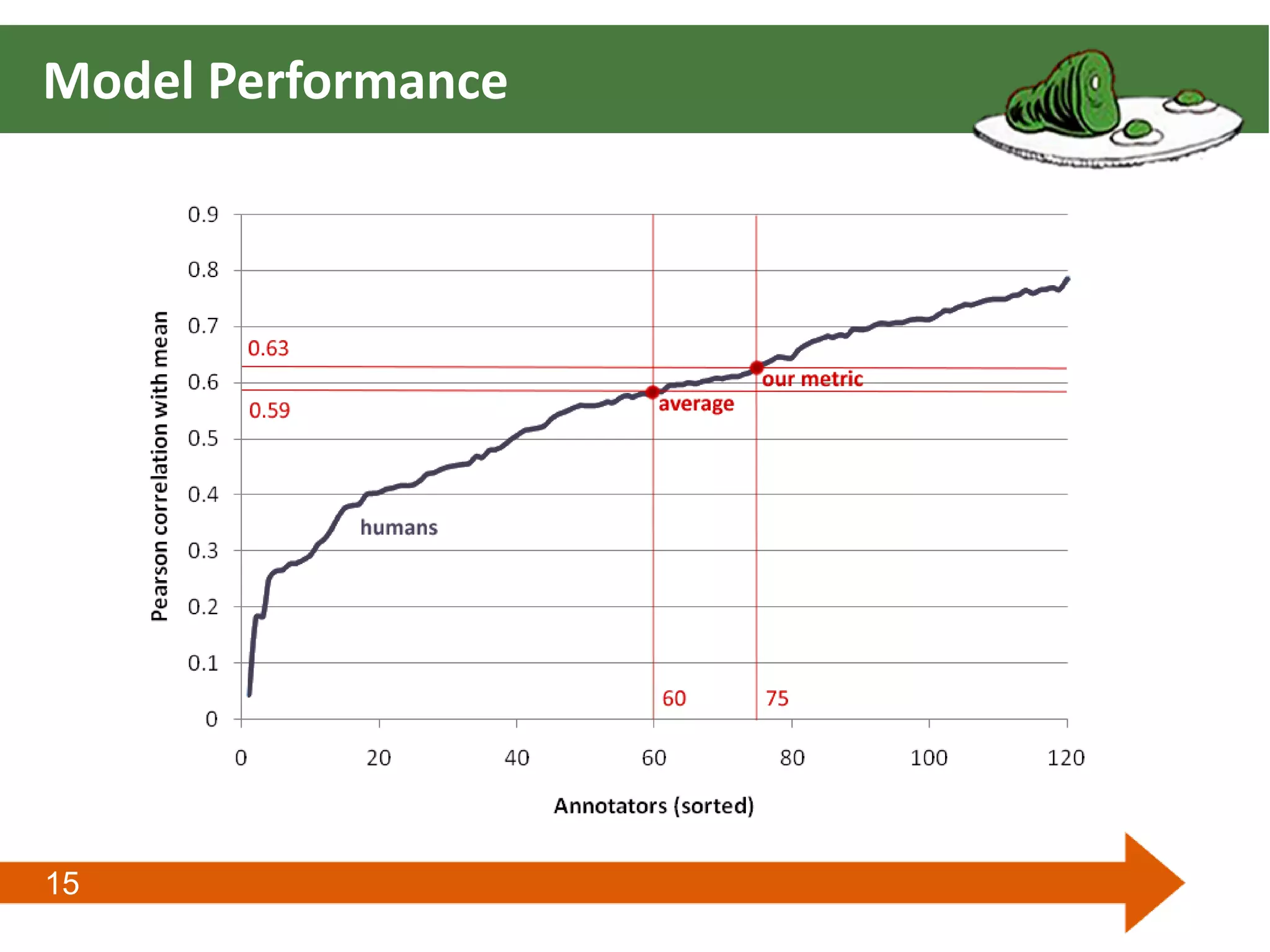

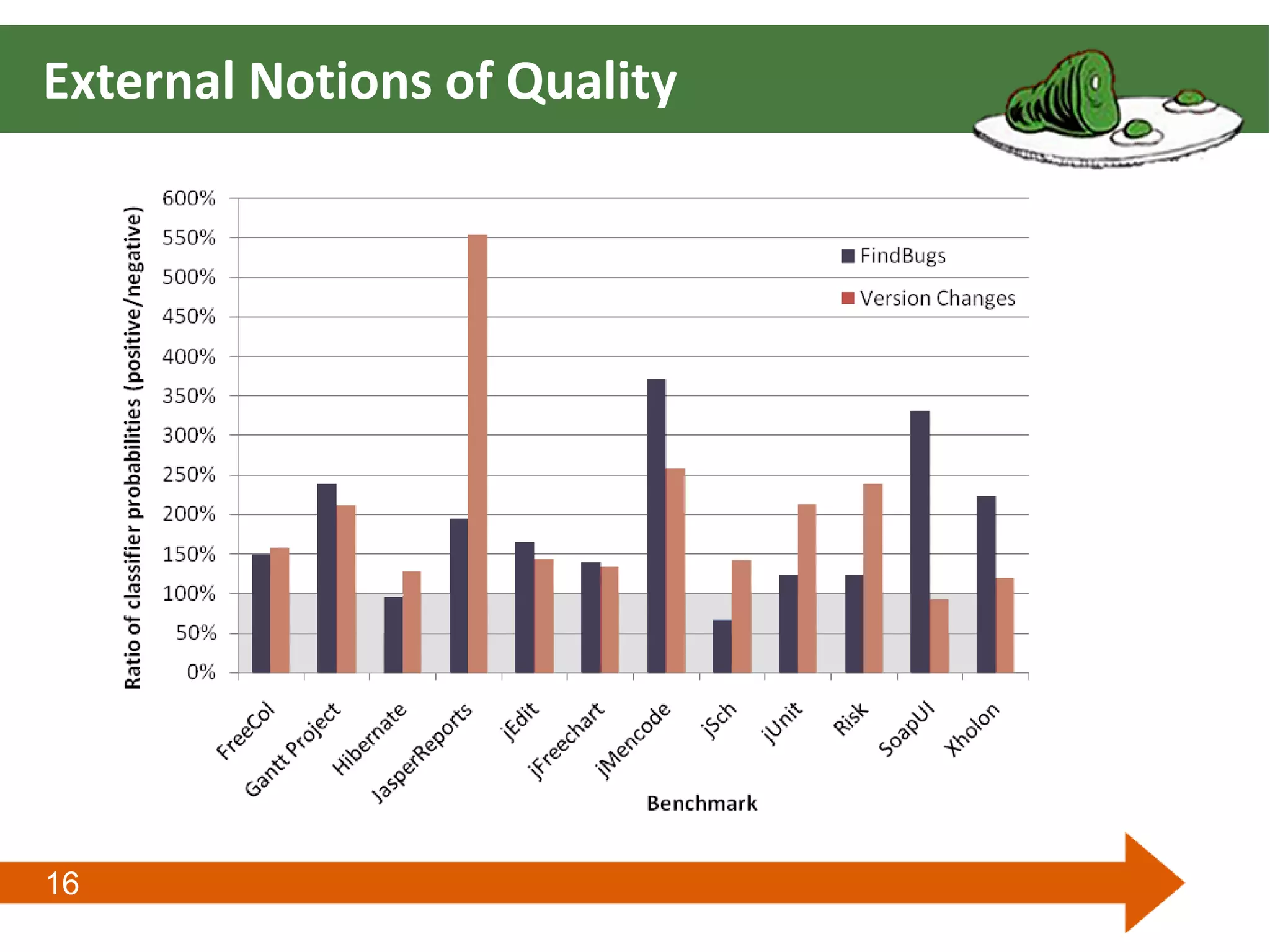

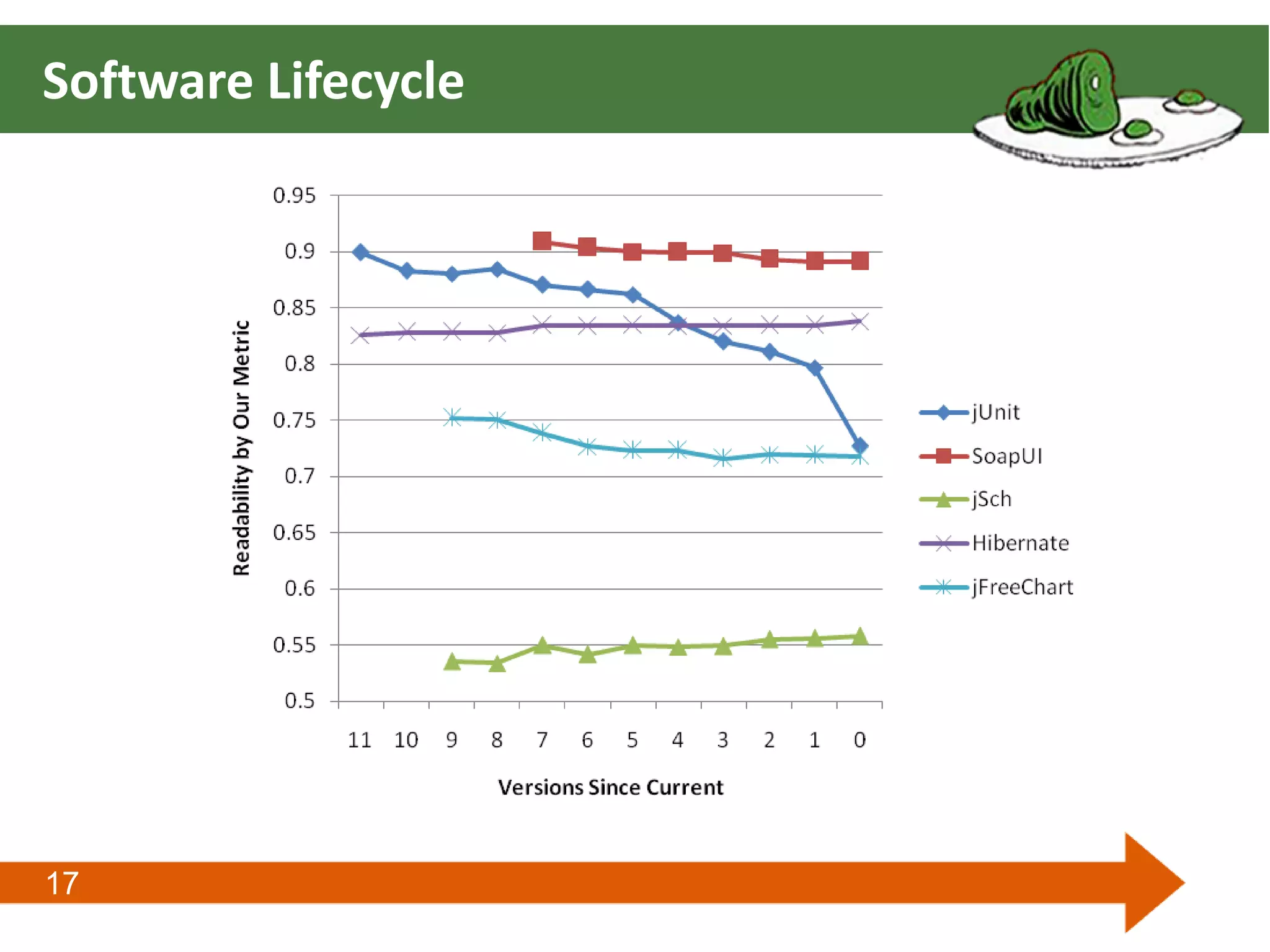

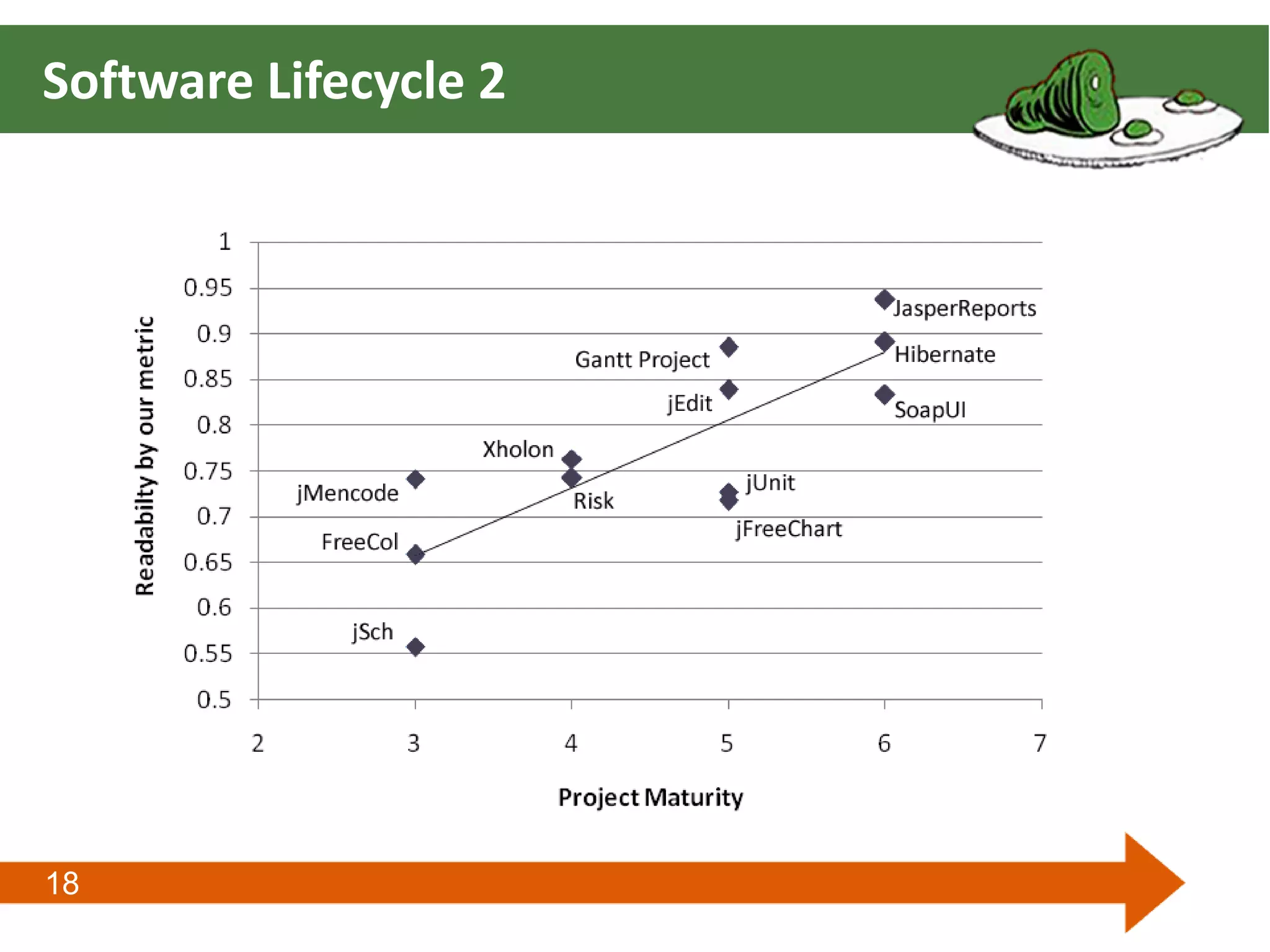

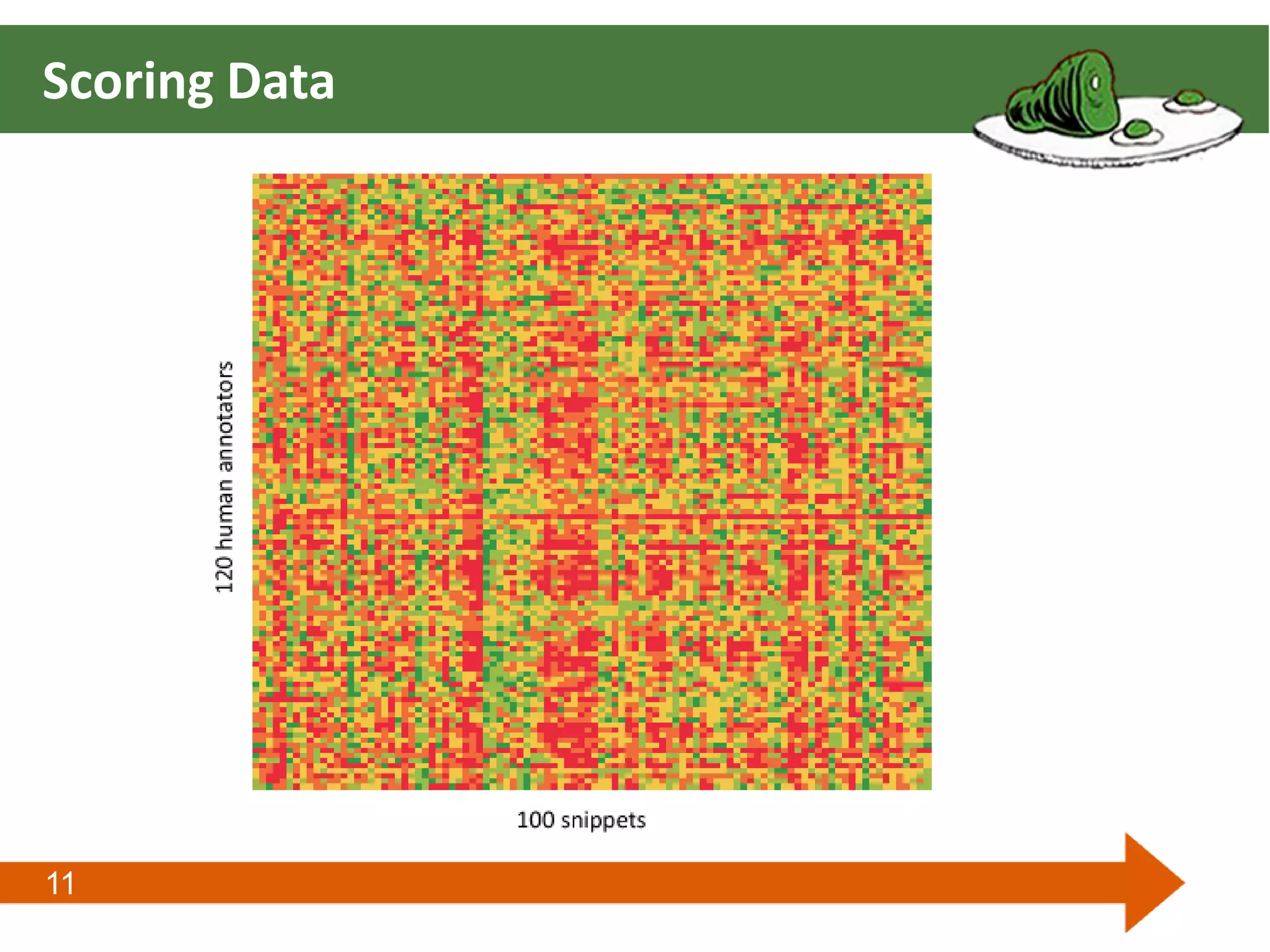

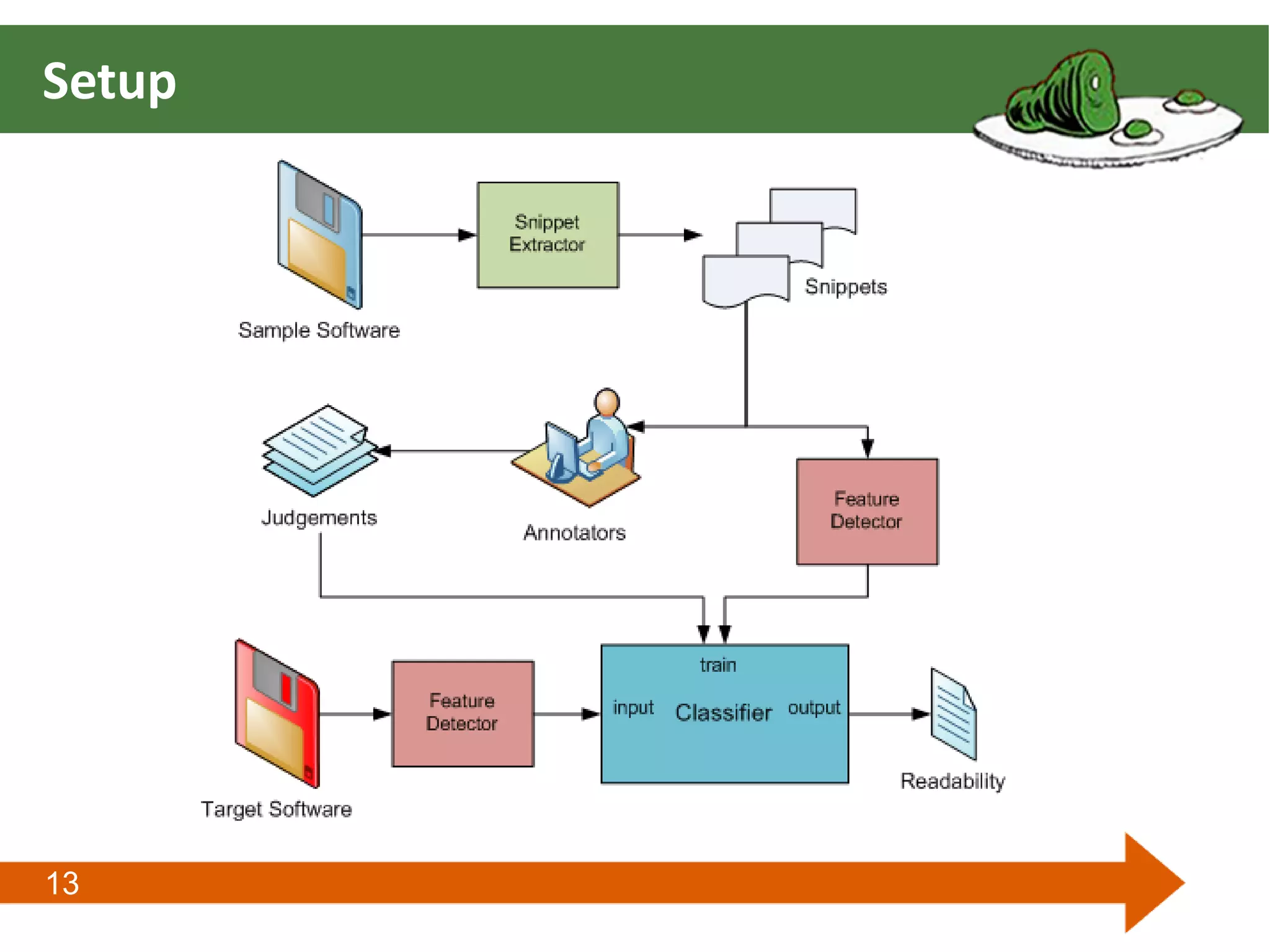

This document presents a metric for measuring software readability. It hypothesizes that an accurate model of readability can be derived from human readability judgments using only a simple set of local code features. The document outlines four steps: acquiring human readability judgments, extracting a predictive model from those judgments, evaluating the model's performance, and correlating readability with external notions of software quality and the software lifecycle.

![14

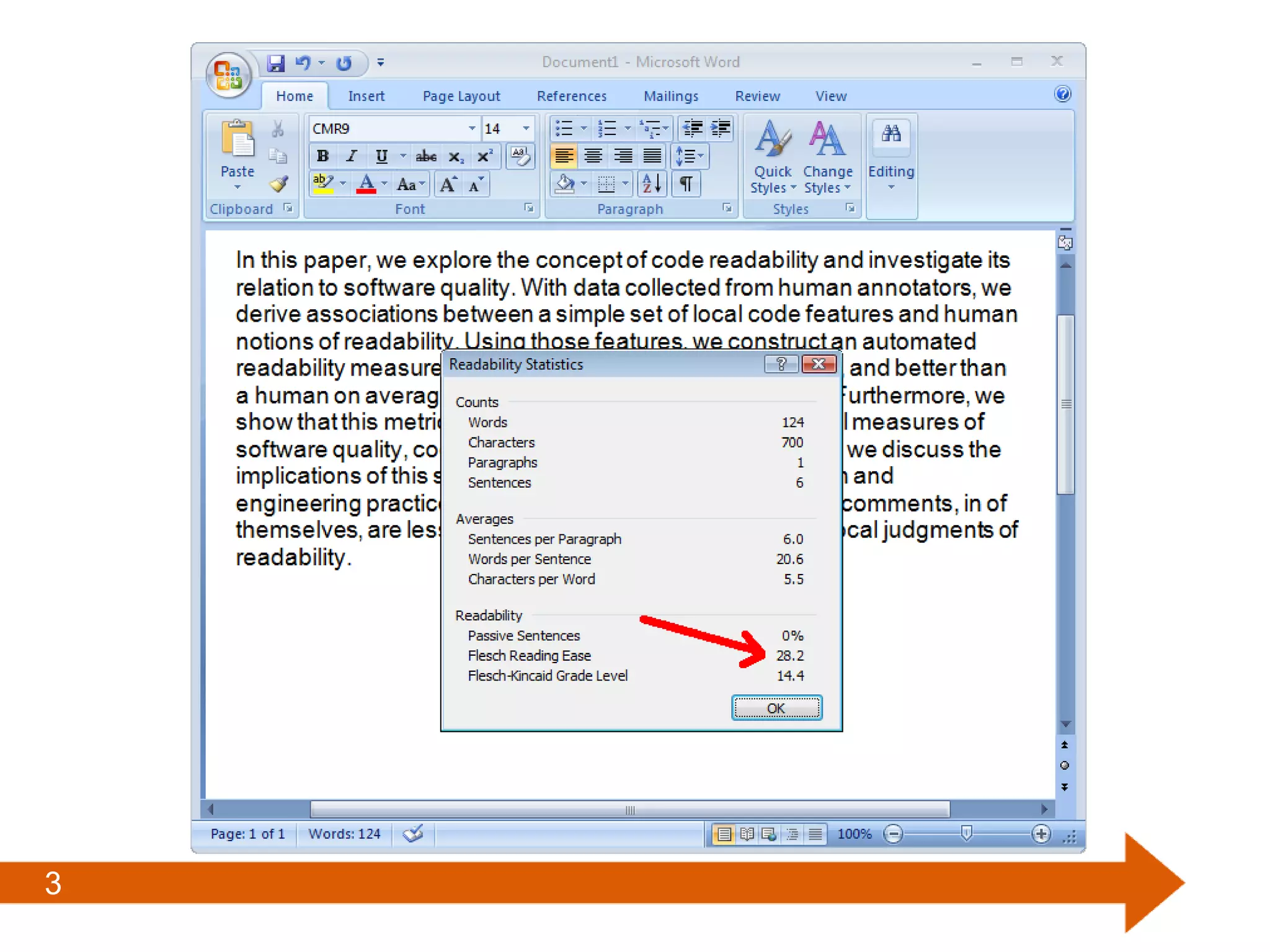

Features

We choose “local” code features

Line length

Length of identifier names

Comment density

Blank lines

Presence of numbers

[and 20 others]](https://image.slidesharecdn.com/isstareadability-101029005401-phpapp01/75/A-Metric-for-Code-Readability-14-2048.jpg)
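The five features named on this slide can be illustrated with a minimal sketch. The slide does not say how each feature is computed, so everything below is an assumption: the function name `local_features`, the feature keys, the use of averages and ratios, and the crude prefix-based comment detection are all hypothetical choices for illustration, not the authors' implementation.

```python
import re

# Assumption: an "identifier" is any word-like token. This also matches
# keywords and words inside comments, which a real tokenizer would exclude.
IDENTIFIER = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")

# Assumption: a "number" is a standalone integer or decimal literal.
NUMBER = re.compile(r"\b\d+(?:\.\d+)?\b")

# Assumption: a line counts as a comment if it starts with one of these.
COMMENT_PREFIXES = ("//", "#", "/*", "*")


def local_features(snippet: str) -> dict:
    """Compute a few purely local, line-level features of a code snippet."""
    lines = snippet.splitlines()
    n_lines = max(len(lines), 1)  # avoid division by zero on empty input

    identifiers = IDENTIFIER.findall(snippet)
    comment_lines = sum(
        1 for line in lines if line.strip().startswith(COMMENT_PREFIXES)
    )
    blank_lines = sum(1 for line in lines if not line.strip())

    return {
        "avg_line_length": sum(len(line) for line in lines) / n_lines,
        "max_line_length": max((len(line) for line in lines), default=0),
        "avg_identifier_length": (
            sum(len(name) for name in identifiers) / max(len(identifiers), 1)
        ),
        "comment_density": comment_lines / n_lines,
        "blank_line_ratio": blank_lines / n_lines,
        "number_count": len(NUMBER.findall(snippet)),
    }


snippet = "// add two values\nint total = a + 10;\n\nreturn total;"
features = local_features(snippet)
```

Every value here depends only on the text of the snippet itself, which is the sense in which the features are "local": no parsing of the wider program, type information, or project context is needed.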