The document provides a comprehensive overview of functional testing in software development, detailing its definition, importance, and the benefits it offers in ensuring software quality and user satisfaction. It covers various aspects such as prerequisites for setup, core concepts, types of functional testing, tools, environment configuration, and future trends in testing. Overall, it emphasizes the critical role of functional testing in validating that software meets its specified requirements and performs intended functions reliably.

![2. Enter a keyword (e.g., product name).

3. Click on the search button.

4. Verify that relevant search results are displayed.

Templates:

• Test Case Template:

vbnet

Copy code

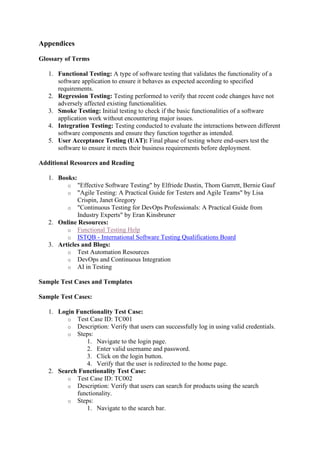

Test Case ID: [Unique ID]

Description: [Brief description of the test case]

Preconditions: [Any prerequisites needed for executing the test case]

Steps:

1. [Step 1]

2. [Step 2]

3. [Step 3]

4. [Step 4]

Expected Result: [Expected outcome after executing the test steps]

Actual Result: [Actual outcome observed during test execution]

Status: [Pass/Fail/Blocked]

• Test Plan Template:

less

Copy code

Project Name: [Project Name]

Test Plan ID: [Unique ID]

Objective: [Objective of the test plan]

Scope: [In-scope items for testing]

Out of Scope: [Out-of-scope items not covered by this test plan]

Schedule: [Timeline for test execution]

Resources: [Testing tools, environments, and personnel involved]

Risks and Assumptions: [Potential risks and assumptions made during

testing]

These resources provide a comprehensive toolkit for understanding, implementing, and

improving functional testing practices in software development projects.](https://image.slidesharecdn.com/acomprehensiveguidetofunctionaltesting-240712092435-2c9665db/85/A-Comprehensive-Guide-To-Functional-Testing-32-320.jpg)
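The test case template can also be kept as structured data, so that every case carries the same fields and renders the same way. The following is a minimal sketch in Python, not a prescribed format: the class name `FunctionalTestCase`, the `Status` enum, the ID `TC-SEARCH-001`, and the description, precondition, and result strings are all hypothetical, and the steps are the search steps listed in this section.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Status(Enum):
    """The three outcomes named in the template's Status field."""
    PASS = "Pass"
    FAIL = "Fail"
    BLOCKED = "Blocked"


@dataclass
class FunctionalTestCase:
    """One record per test case, mirroring the template's fields."""
    test_case_id: str
    description: str
    preconditions: str
    steps: list[str]
    expected_result: str
    actual_result: str = ""          # filled in after execution
    status: Optional[Status] = None  # None until the case has been run

    def record(self, actual_result: str, status: Status) -> None:
        """Store what was observed and the resulting status."""
        self.actual_result = actual_result
        self.status = status

    def render(self) -> str:
        """Return the case as text in the template's field order."""
        lines = [
            f"Test Case ID: {self.test_case_id}",
            f"Description: {self.description}",
            f"Preconditions: {self.preconditions}",
            "Steps:",
        ]
        lines += [f"{n}. {step}" for n, step in enumerate(self.steps, start=1)]
        lines += [
            f"Expected Result: {self.expected_result}",
            f"Actual Result: {self.actual_result}",
            f"Status: {self.status.value if self.status else ''}",
        ]
        return "\n".join(lines)


# Hypothetical example values; only the step wording comes from this section.
case = FunctionalTestCase(
    test_case_id="TC-SEARCH-001",
    description="Keyword search returns relevant results",
    preconditions="The search page is reachable",
    steps=[
        "Enter a keyword (e.g., product name).",
        "Click on the search button.",
        "Verify that relevant search results are displayed.",
    ],
    expected_result="Relevant search results are displayed",
)
case.record("Relevant search results were displayed", Status.PASS)
print(case.render())
```

Keeping `status` empty until `record` is called separates a case that has not been run from one that is blocked, a distinction the plain-text template leaves to the tester.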