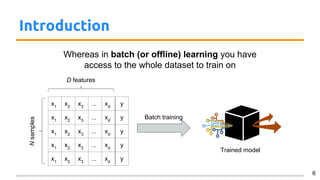

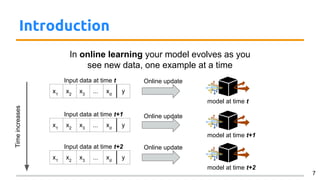

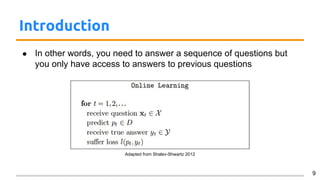

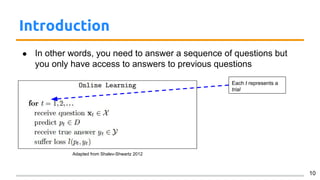

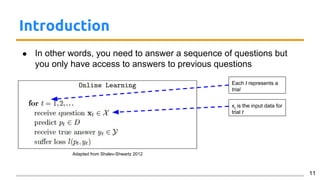

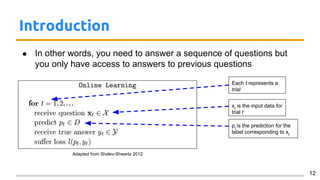

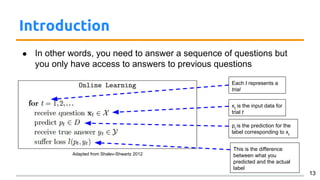

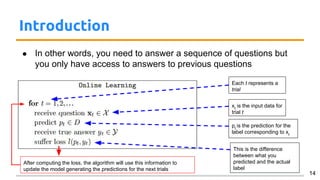

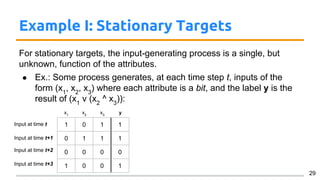

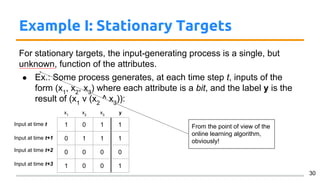

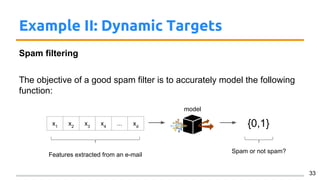

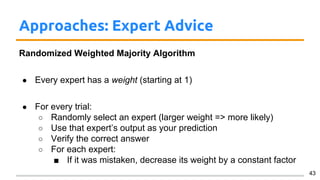

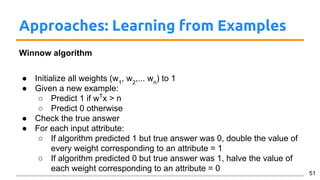

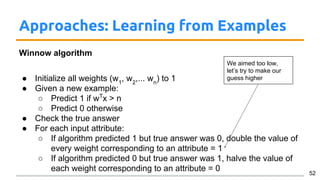

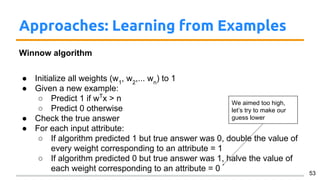

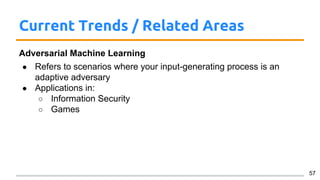

The document discusses online learning in machine learning: training models on streaming data, where each prediction is made sequentially from the inputs seen so far. It outlines use cases such as real-time recommendation systems and spam detection, and distinguishes between learning stationary targets and dynamic ones. It also explores several approaches to online learning, including prediction with expert advice and algorithms such as Winnow.
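
To make the predict-then-update loop concrete, here is a minimal sketch of a Winnow-style learner over Boolean features. It is not taken from the slides. The class name `Winnow`, the promotion factor `alpha = 2`, the threshold equal to the number of features, and the toy `stream` are all assumptions chosen for illustration. The demotion step divides weights by `alpha` (the variant often called Winnow2) rather than zeroing them.

```python
from typing import List, Sequence, Tuple


class Winnow:
    """Illustrative Winnow-style online learner for 0/1 feature vectors."""

    def __init__(self, n_features: int, alpha: float = 2.0) -> None:
        # All weights start at 1; the threshold is assumed to be n_features.
        self.weights: List[float] = [1.0] * n_features
        self.alpha = alpha
        self.threshold = float(n_features)
        self.mistakes = 0

    def predict(self, x: Sequence[int]) -> int:
        # Sum the weights of the active features and compare to the threshold.
        score = sum(w for w, xi in zip(self.weights, x) if xi)
        return 1 if score >= self.threshold else 0

    def update(self, x: Sequence[int], y: int) -> int:
        """Predict first, then see the true label y and adjust on a mistake."""
        y_hat = self.predict(x)
        if y_hat != y:
            self.mistakes += 1
            # False negative: promote active weights. False positive: demote them.
            factor = self.alpha if y == 1 else 1.0 / self.alpha
            self.weights = [
                w * factor if xi else w for w, xi in zip(self.weights, x)
            ]
        return y_hat


# Hypothetical stream: the label is 1 exactly when feature 0 is active.
stream: List[Tuple[List[int], int]] = [
    ([1, 1, 0, 0], 1),
    ([1, 1, 0, 0], 1),
    ([0, 1, 1, 1], 0),
    ([1, 0, 0, 0], 1),
    ([1, 0, 0, 0], 1),
]

learner = Winnow(n_features=4)
predictions = [learner.update(x, y) for x, y in stream]
print(predictions, learner.mistakes, learner.weights)
```

Each example is used once, in arrival order, and the weights change only when the prediction is wrong. That is the sequential setting the summary describes. Because the updates are multiplicative, the weight of the relevant feature grows quickly while the weights of irrelevant features shrink.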