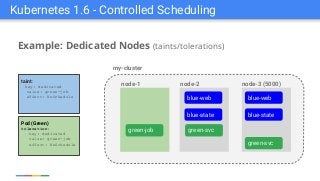

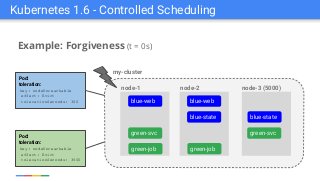

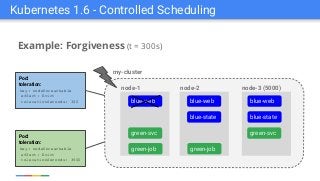

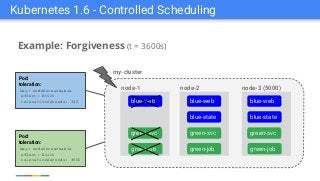

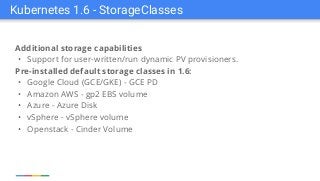

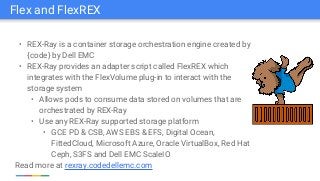

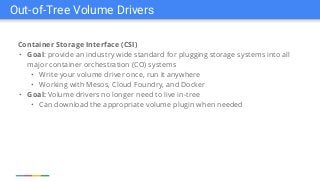

In this public {code} Webinar for the larger {code} Community, Matthew De Lio, Product Manager at Google for the Kubernetes project and Google Container Engine, joins us to talk about Kubernetes and to give an update on what's new in Kubernetes 1.6.

The webinar recording can be found here:

https://youtu.be/tCgyxji5txs?list=PLbssOJyyvHuWiBQAg9EFWH570timj2fxt