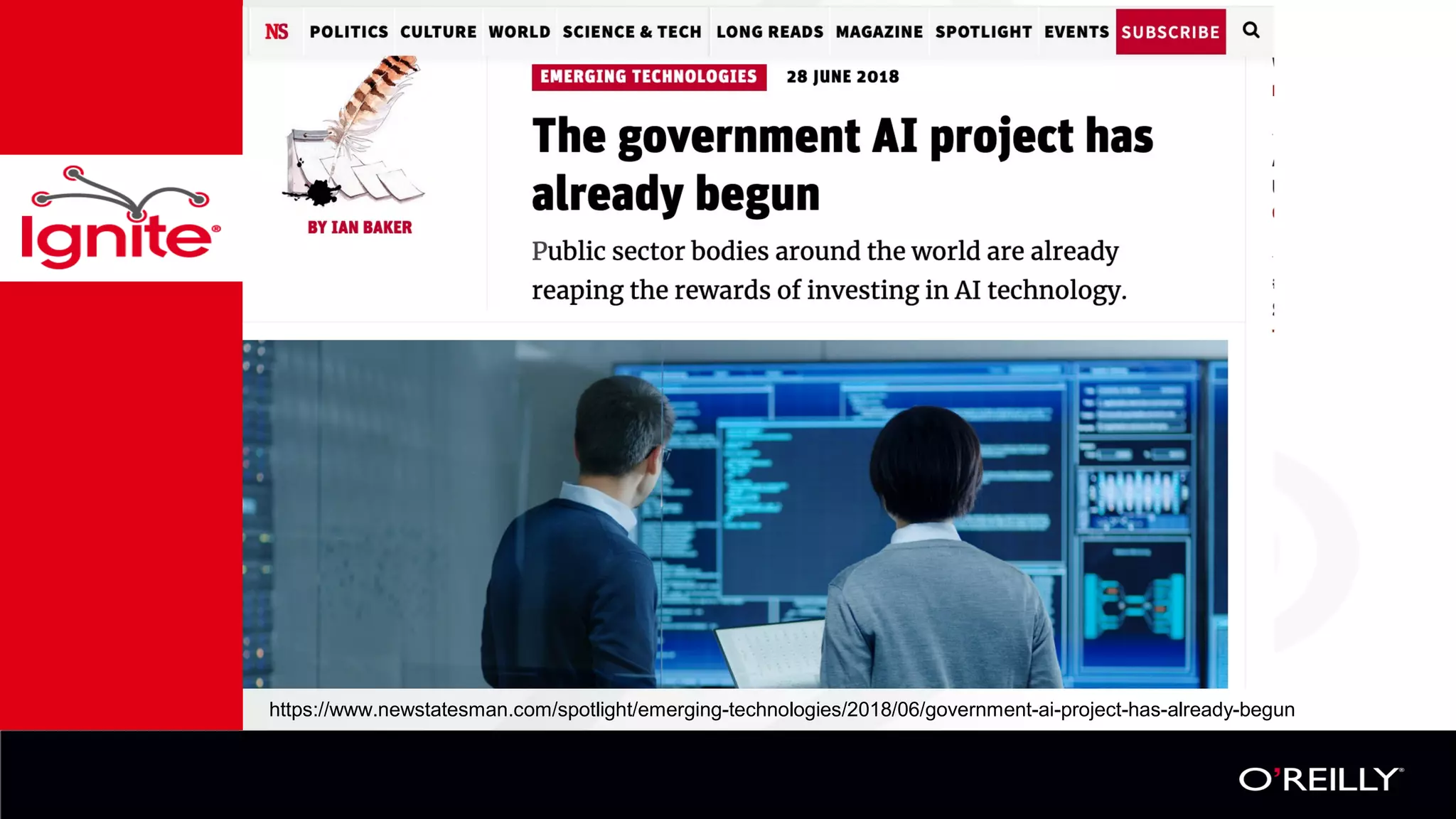

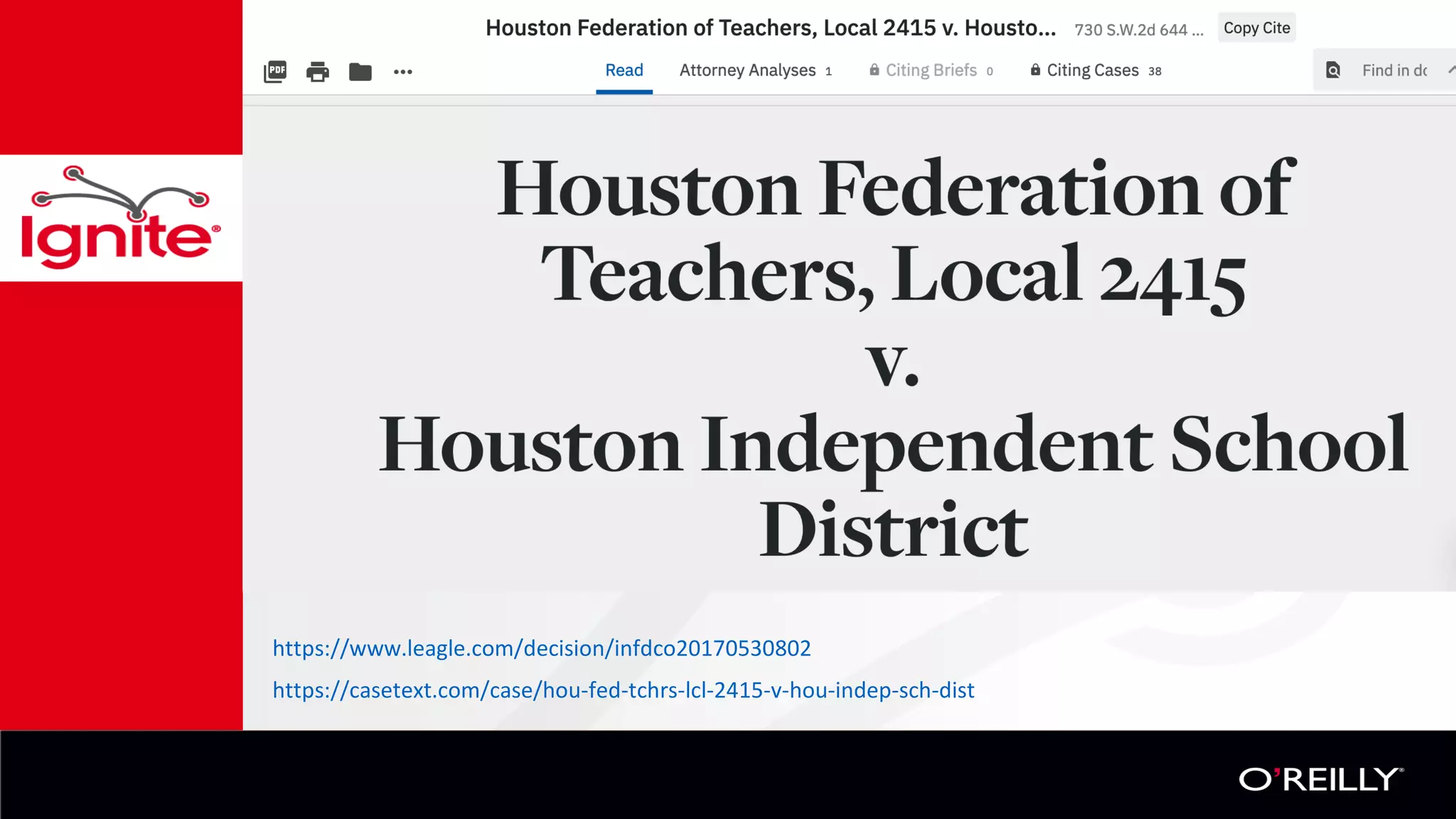

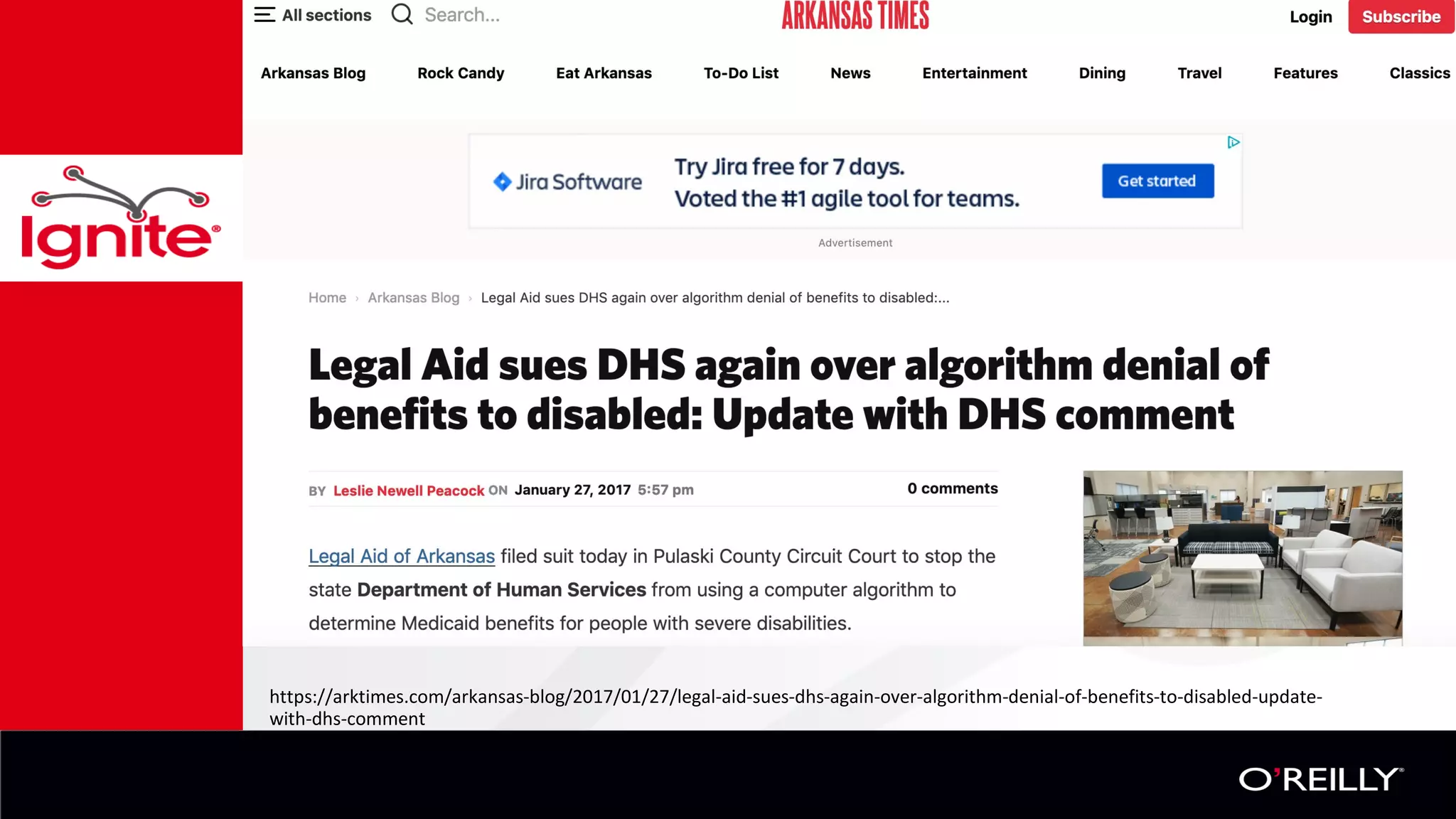

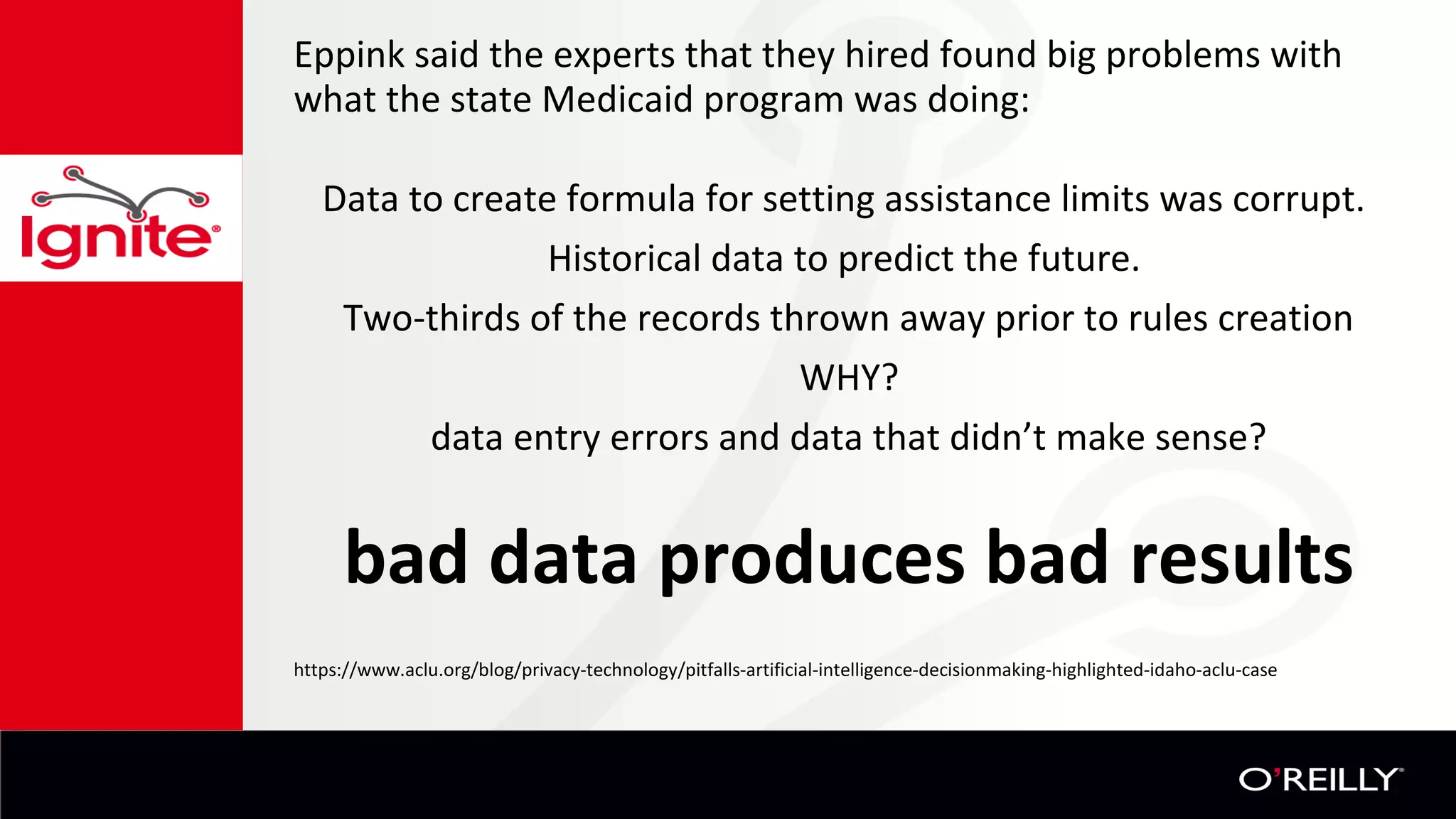

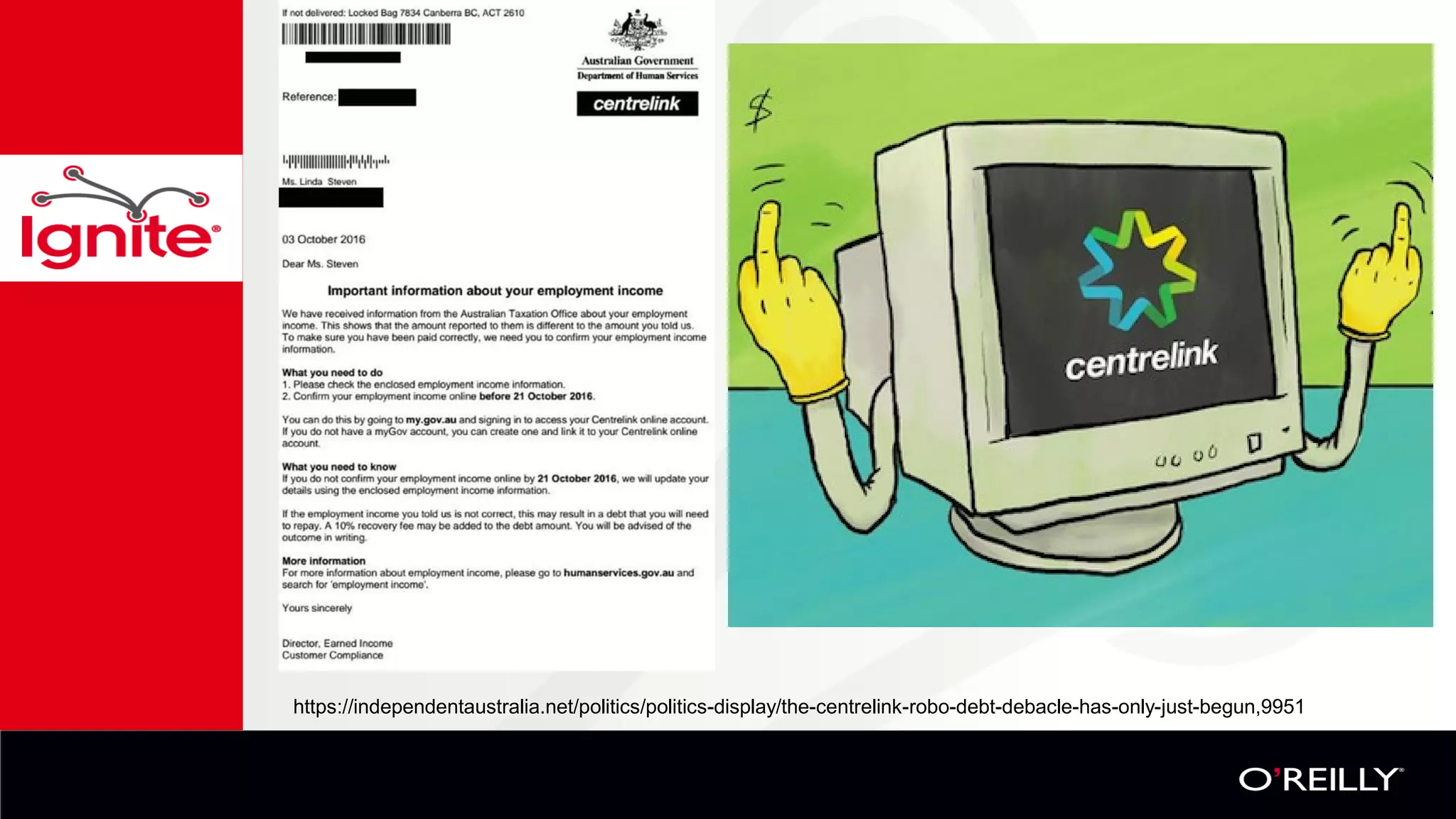

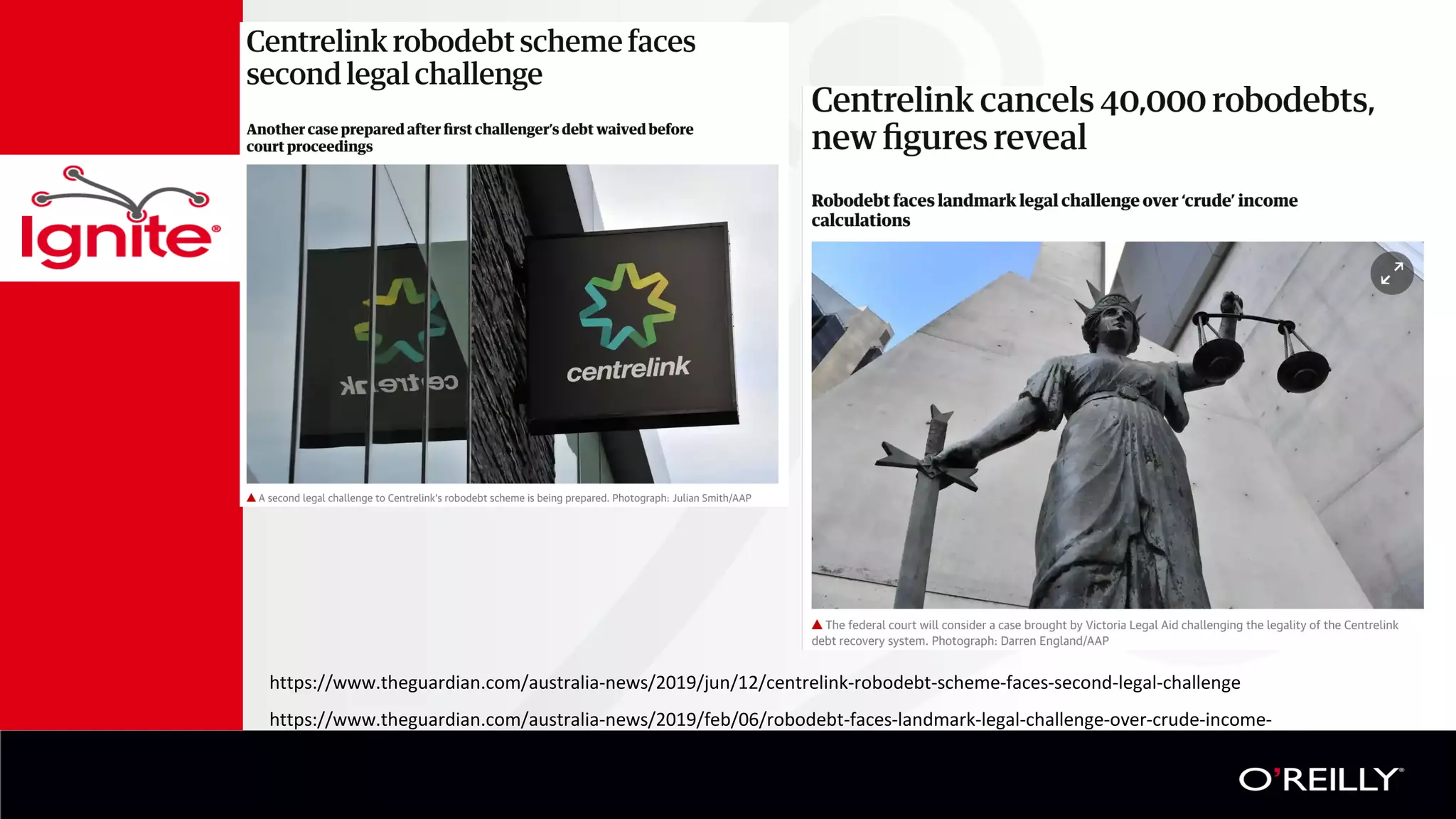

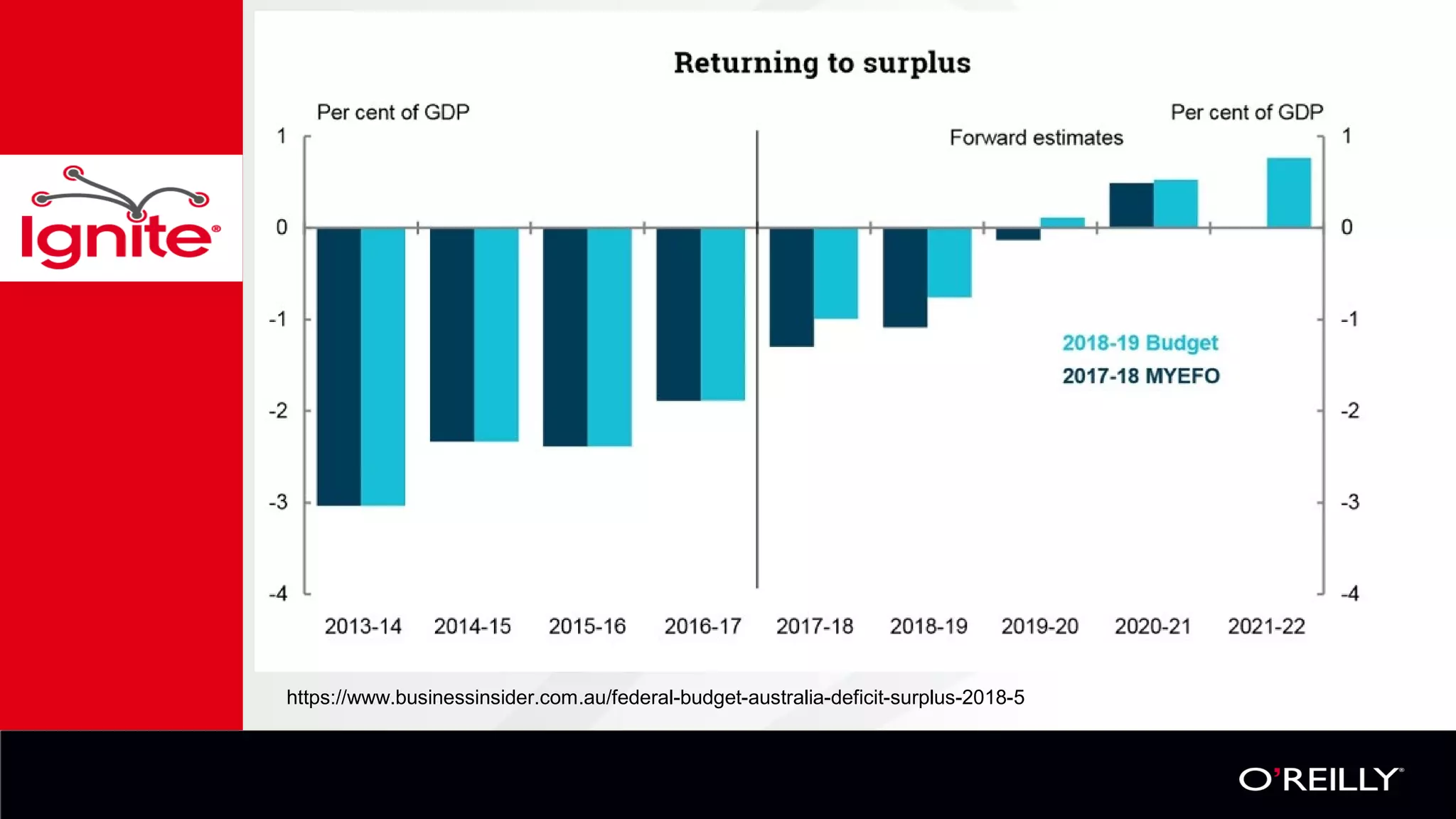

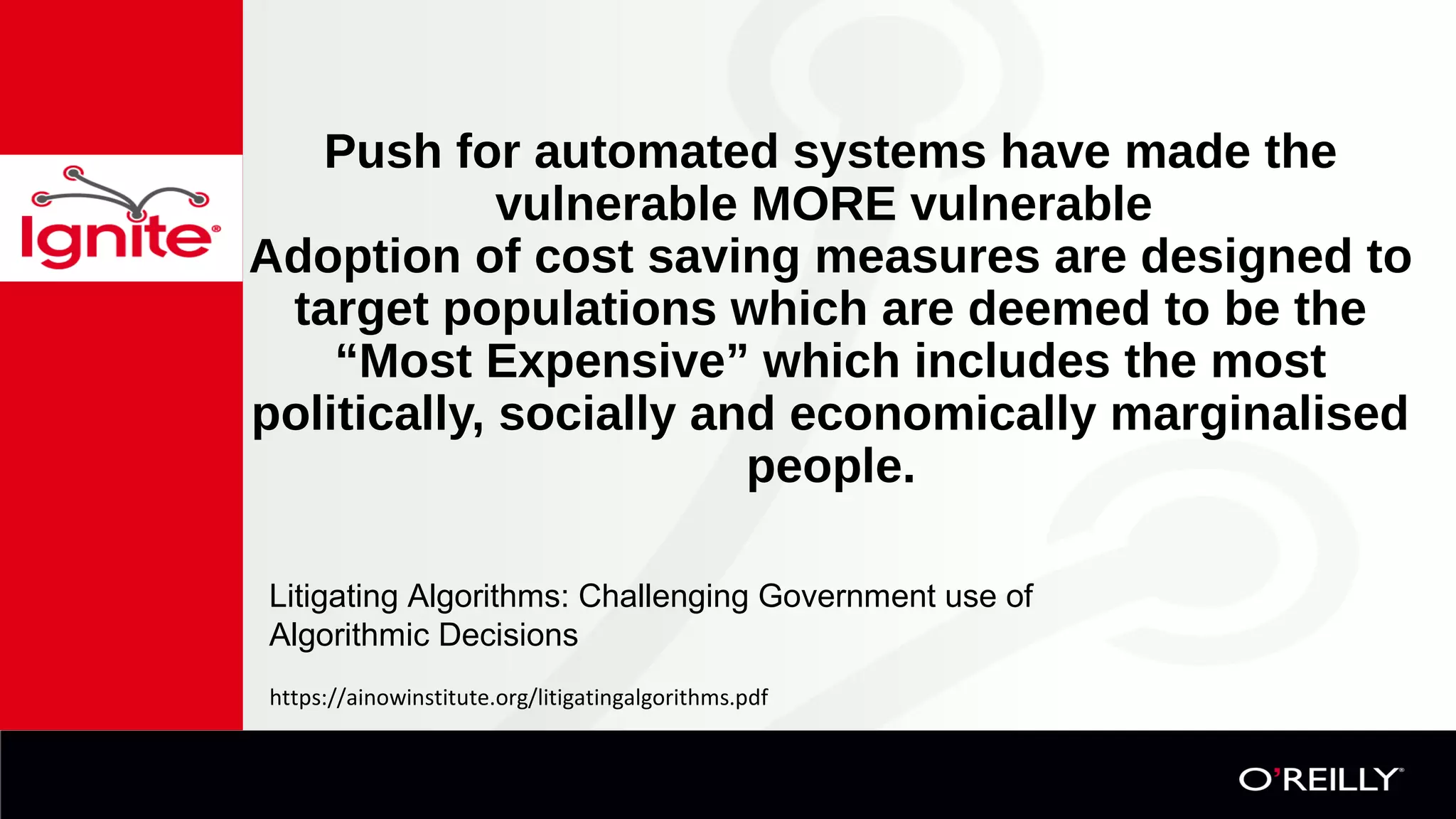

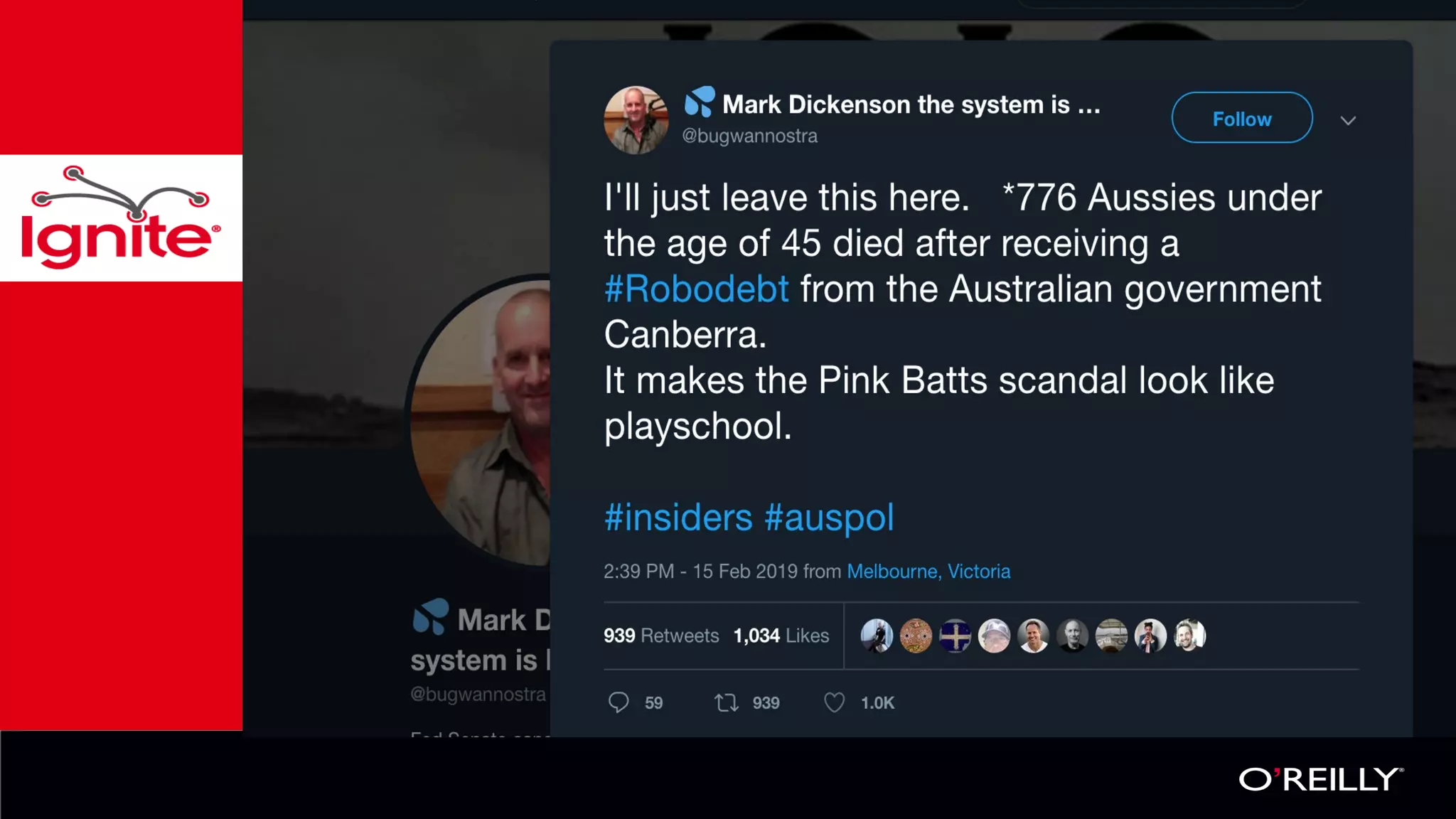

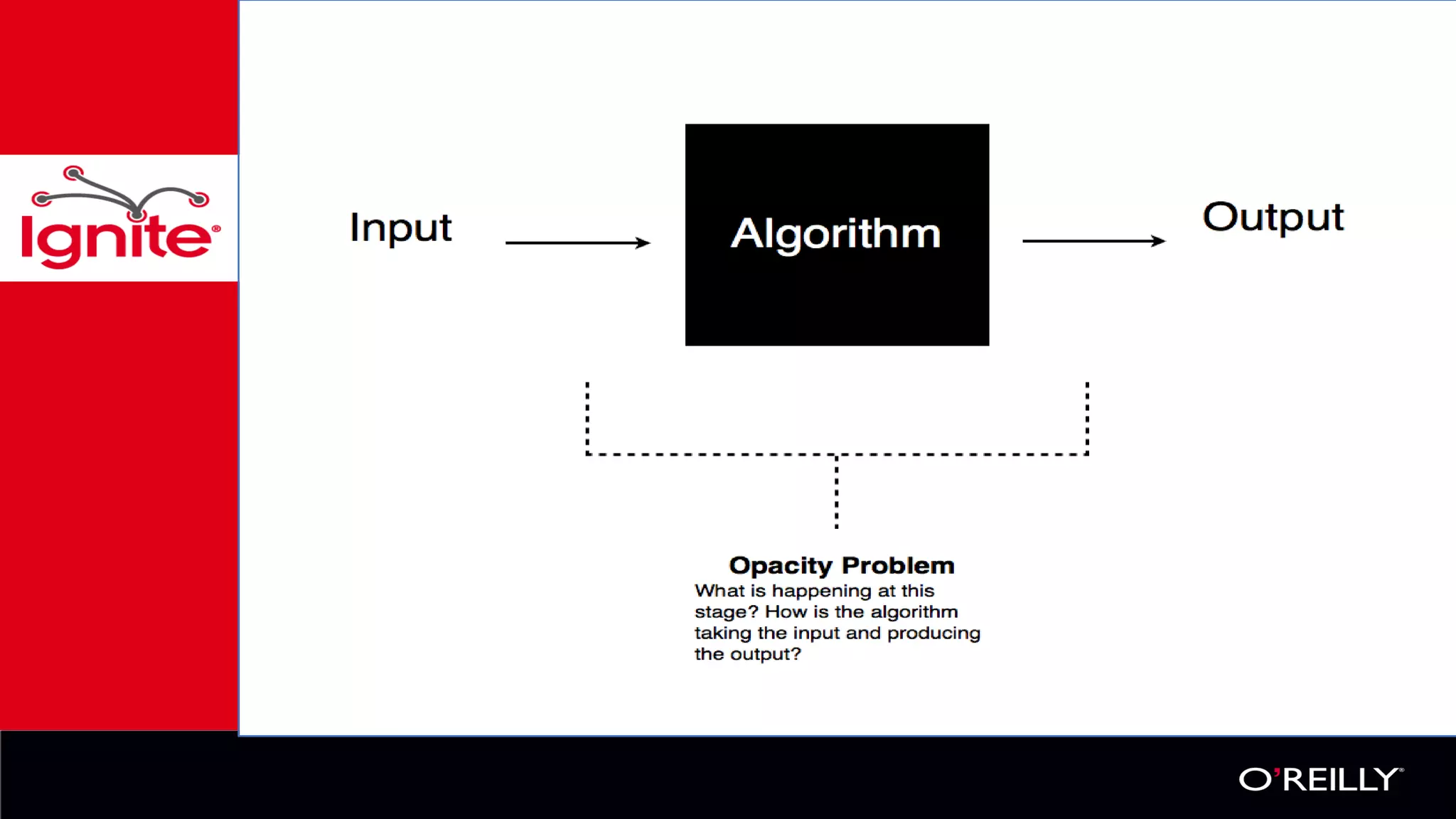

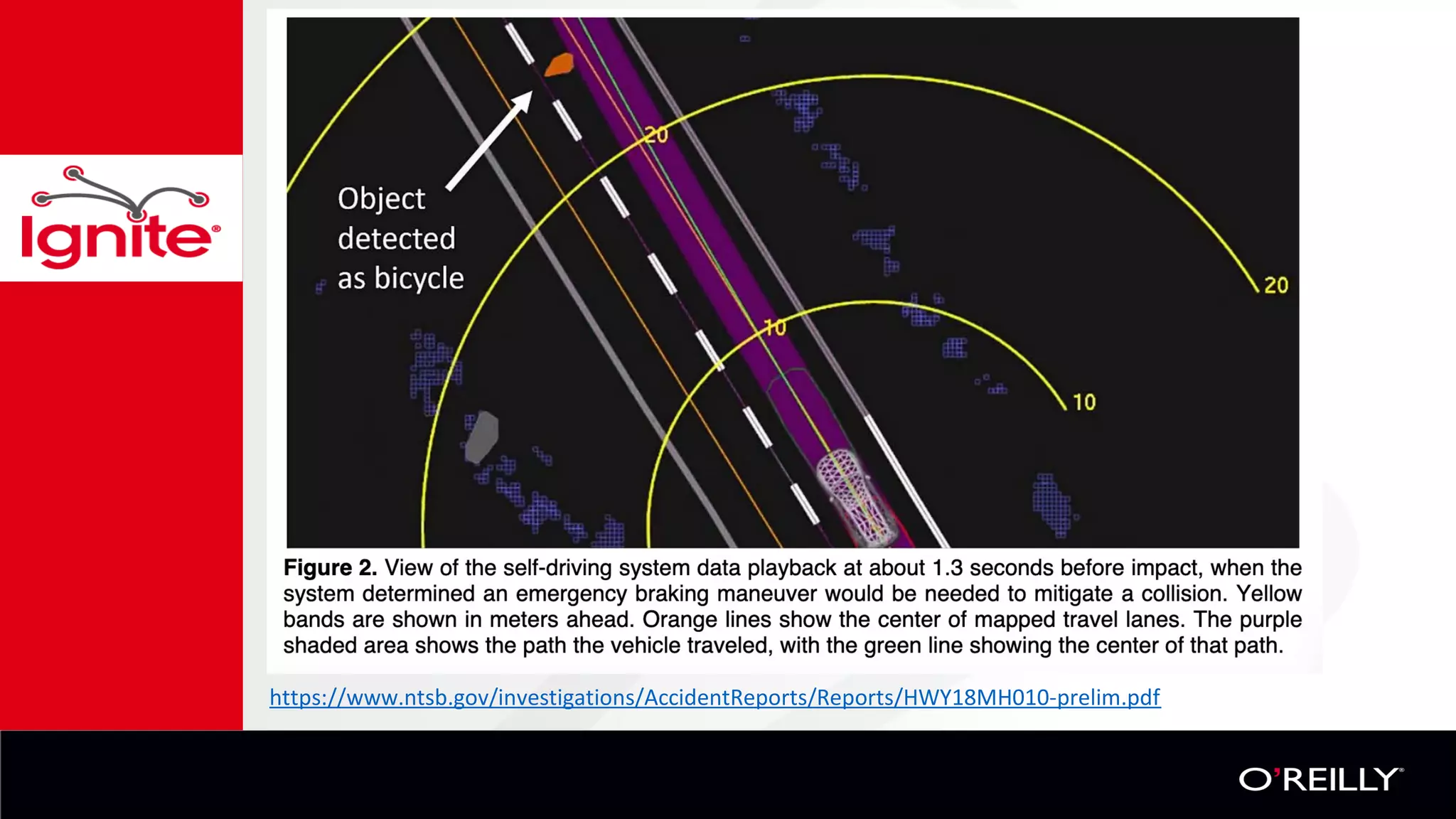

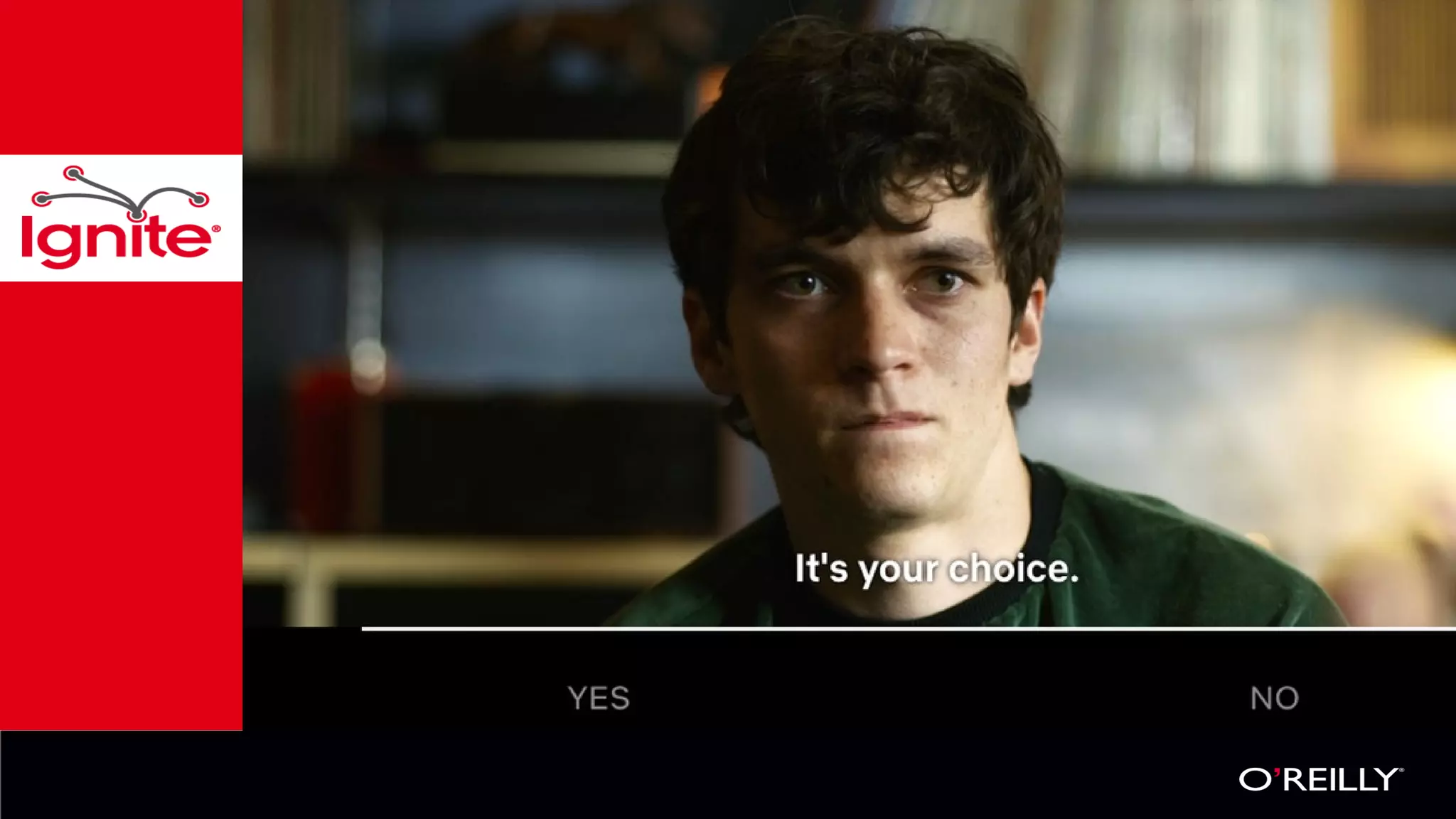

The document discusses the challenges of algorithmic decision-making in government programs, highlighting problems such as corrupt data affecting the limits on Medicaid assistance. It emphasizes the harmful outcomes that result from poor data quality and notes the negative effects of automated systems on vulnerable populations. To mitigate these problems, it calls for greater accountability and for giving individuals the option to communicate with a human in government processes.