This document discusses metrics, key performance indicators (KPIs), and dashboards for measuring and monitoring project performance. It covers selecting the right metrics and KPIs, understanding how to present data visually through dashboards, and using metrics to provide stakeholders with the key information they need to make decisions. The goal is to move beyond simply providing more information to ensuring the right information reaches the intended audiences efficiently.

![Cover design: © Wiley

Cover image: © 2017 Dundas Data Visualization, Inc. All rights reserved.

This book is printed on acid-free paper.

Copyright © 2017 by International Institute for Learning, Inc., New York, New York. All rights reserved.

Published by John Wiley & Sons, Inc., Hoboken, New Jersey

Published simultaneously in Canada

Library of Congress Cataloging-in-Publication Data

Names: Kerzner, Harold, author.

Title: Project management metrics, KPIs, and dashboards : a guide to measuring and monitoring project performance / Harold Kerzner, Ph.D., Sr. Executive Director for Project Management, The International Institute for Learning.

Description: Third edition. | Hoboken, New Jersey : John Wiley & Sons, Inc., [2017] | Includes index. |

Identifiers: LCCN 2017022057 (print) | LCCN 2017030981 (ebook) | ISBN 9781119427506 (pdf) | ISBN 9781119427322 (epub) | ISBN 9781119427285 (pbk.)

Subjects: LCSH: Project management. | Project management–Quality control. | Performance standards. | Work measurement.

Classification: LCC HD69.P75 (ebook) | LCC HD69.P75 K492 2017 (print) | DDC 658.4/04–dc23

LC record available at https://lccn.loc.gov/2017022057

Printed in the United States of America](https://image.slidesharecdn.com/projectmanagementmetrics-kpis-anddashboardsaguidetomeasuringandmonitoringprojectperformance-231112195000-7390cb3a/75/Project-management-metrics-KPIs-and-dashboards-_-a-guide-to-measuring-and-monitoring-project-performance-pdf-3-2048.jpg)

![1.4 PROJECT MANAGEMENT METHODOLOGIES AND FRAMEWORKS

agile, is adaptive, and involves the client every part of the way—is starting to emerge. Many of the heavyweight methodologists were resistant to the introduction of these “lightweight” or “agile” methodologies.6 These methodologies use an informal communication style. Unlike heavyweight methodologies, lightweight projects have only a few rules, practices, and documents. Projects are designed and built on face-to-face discussions, meetings, and the flow of information to the clients. The immediate difference in using light methodologies is that they are much less documentation-oriented and usually call for a smaller amount of project documentation.

Heavy Methodologies

The traditional project management methodologies (i.e., the systems development life cycle [SDLC] approach) are considered bureaucratic or “predictive” in nature and have resulted in many unsuccessful projects. These heavy methodologies are becoming less popular. They are so laborious that the whole pace of design, development, and deployment slows down, and nothing gets done. Project managers tend to predict every milestone because they want to foresee every technical detail (i.e., software code or engineering detail). This leads managers to start demanding many types of specifications, plans, reports, checkpoints, and schedules. Heavy methodologies attempt to plan a large part of a project in great detail over a long span of time. This works well until things start changing, and the project managers inherently try to resist change.

Frameworks

More and more companies today, especially those that wish to compete in the global marketplace as business solution providers, are using frameworks rather than methodologies.

■ Framework: The individual segments, principles, pieces, or components of the processes needed to complete a project. This can include forms, guidelines, checklists, and templates.

■ Methodology: The orderly structuring or grouping of the segments or framework elements. This can appear as policies, procedures, or guidelines.

Frameworks focus on a series of processes that must be done on all projects. Each process is supported by a series of forms, guidelines, templates, checklists, and metrics that can be applied to a particular client’s business needs. The metrics will be determined jointly by the project manager, the client, and the various stakeholders.

6. Martin Fowler, The New Methodology, Thought Works, 2001. Available at www.martinfowler.com/articles.](https://image.slidesharecdn.com/projectmanagementmetrics-kpis-anddashboardsaguidetomeasuringandmonitoringprojectperformance-231112195000-7390cb3a/75/Project-management-metrics-KPIs-and-dashboards-_-a-guide-to-measuring-and-monitoring-project-performance-pdf-27-2048.jpg)

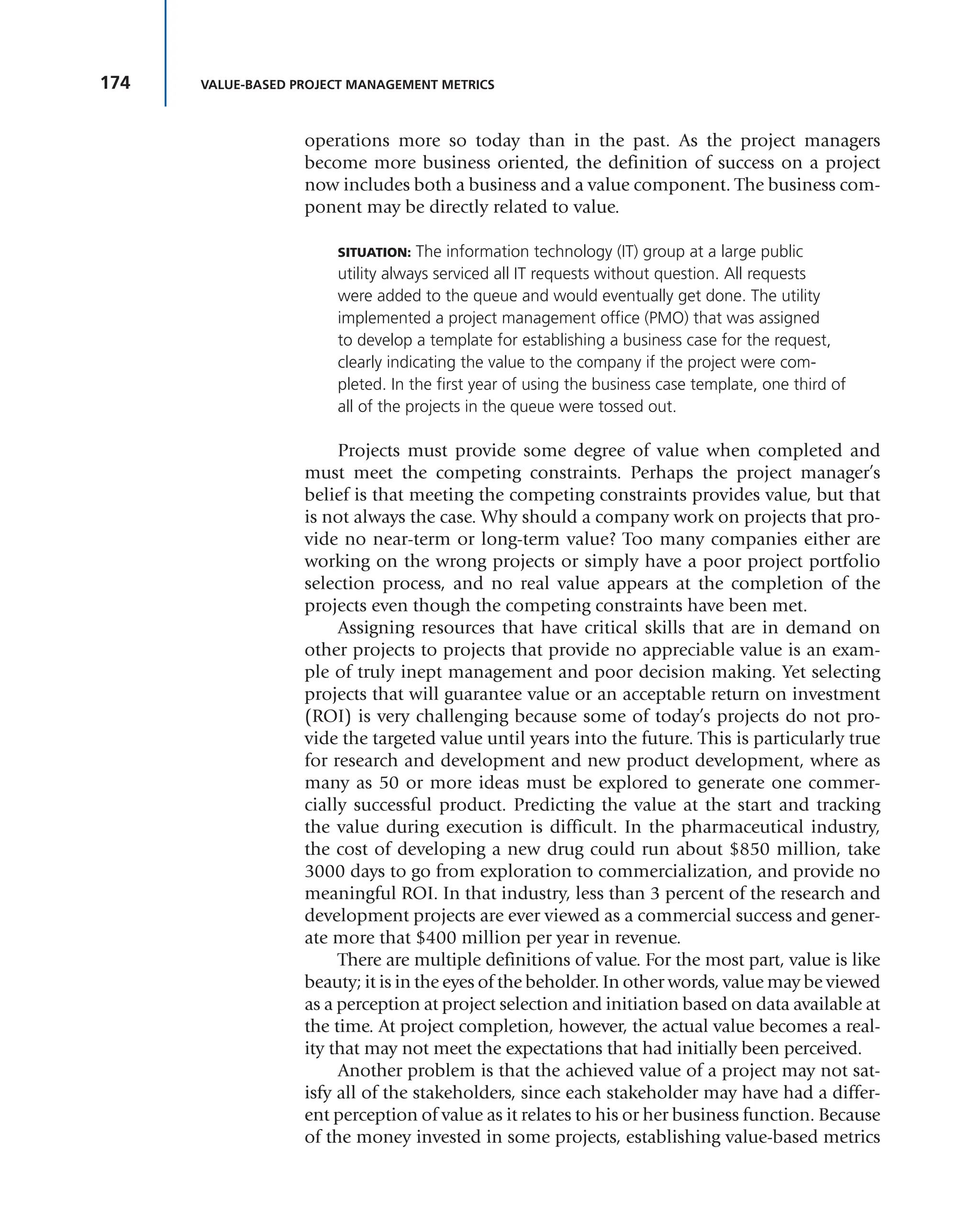

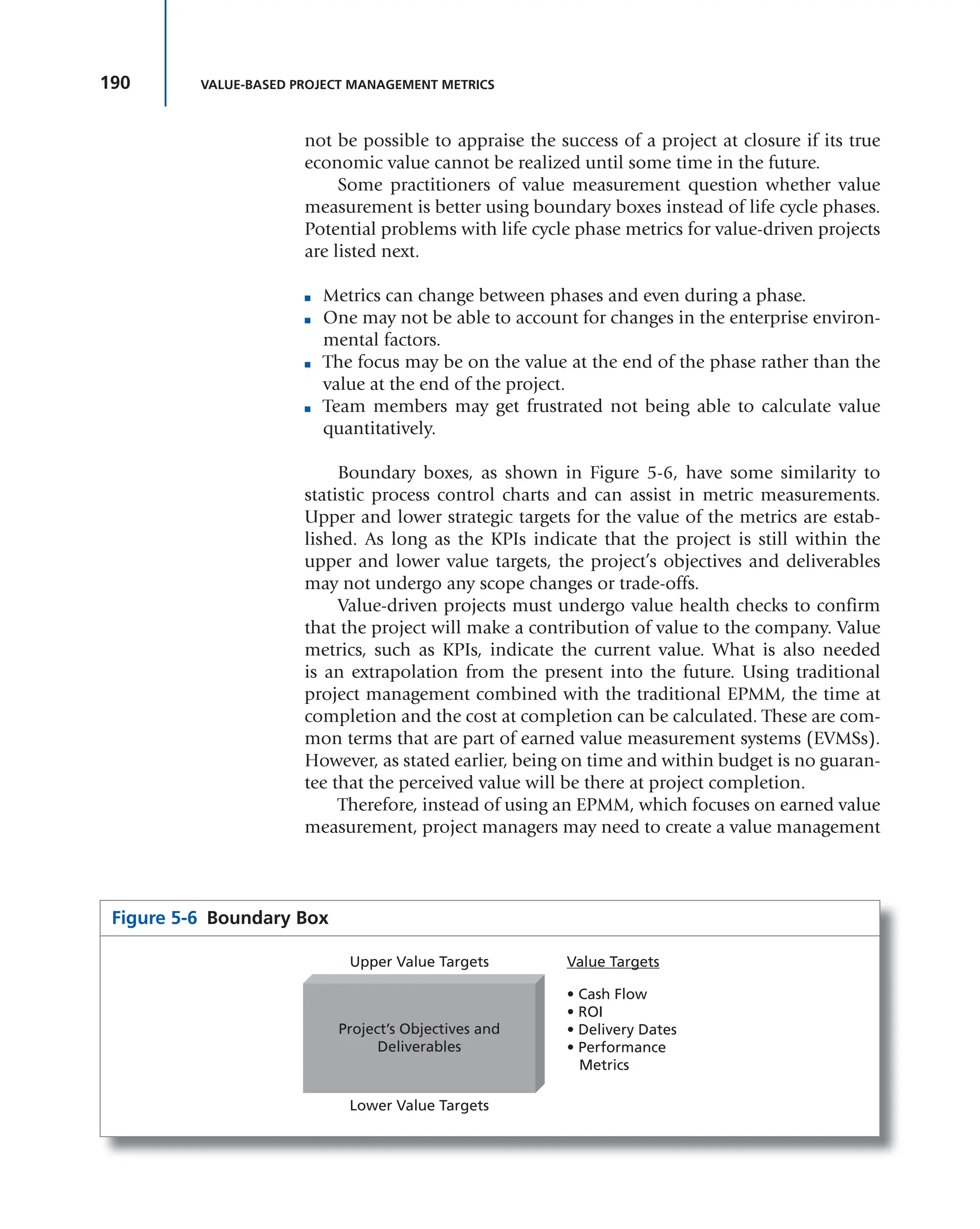

![VALUE-BASED PROJECT MANAGEMENT METRICS

can consider many attributes to represent value, but not all of the value attributes are equal in importance.

Unlike the use of quality as the solitary parameter, value allows a company to better measure the degree to which the project will satisfy its objectives. Quality can be regarded as an attribute of value along with other attributes. Today, every company has quality and produces quality in some form. This is necessary for survival. What differentiates one company from another, however, are the other attributes, components, or factors used to define value. Some of these attributes might include price, timing, image, reputation, customer service, and sustainability.

In today’s world, customers make decisions to hire a contractor based

on the value they expect to receive and the price they must pay to receive

this value. Actually, the value may be more of a “perceived” value that may

be based on trade-offs on the attributes selected as part of the client’s defi-

nition of value. The client may perceive the value of the project to be used inter-

nally in his or her company or pass it on to customers through the client’s customer

value management (CVM) program. If the project manager’s organization

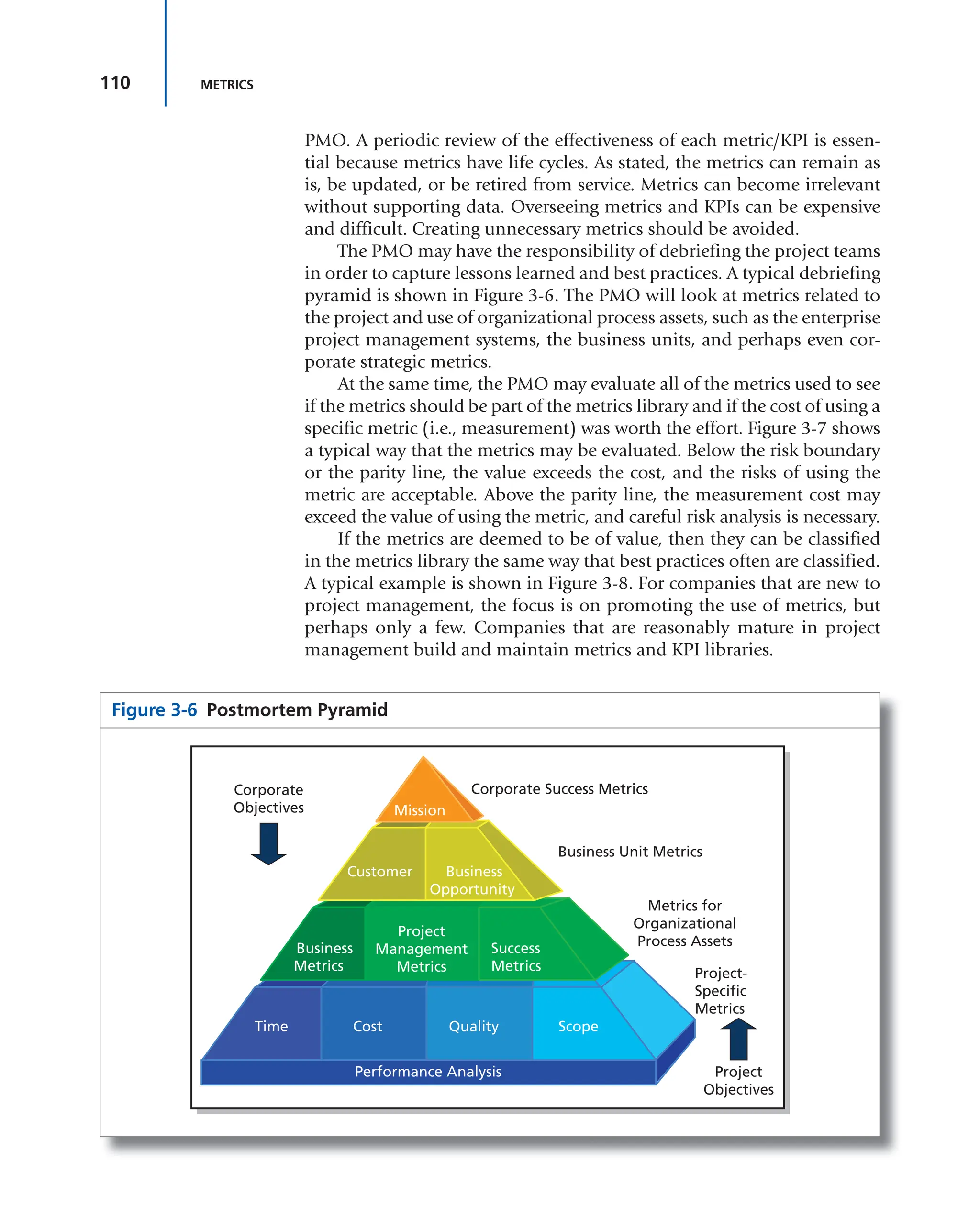

does not or cannot offer recognized value to clients and stakeholders, then

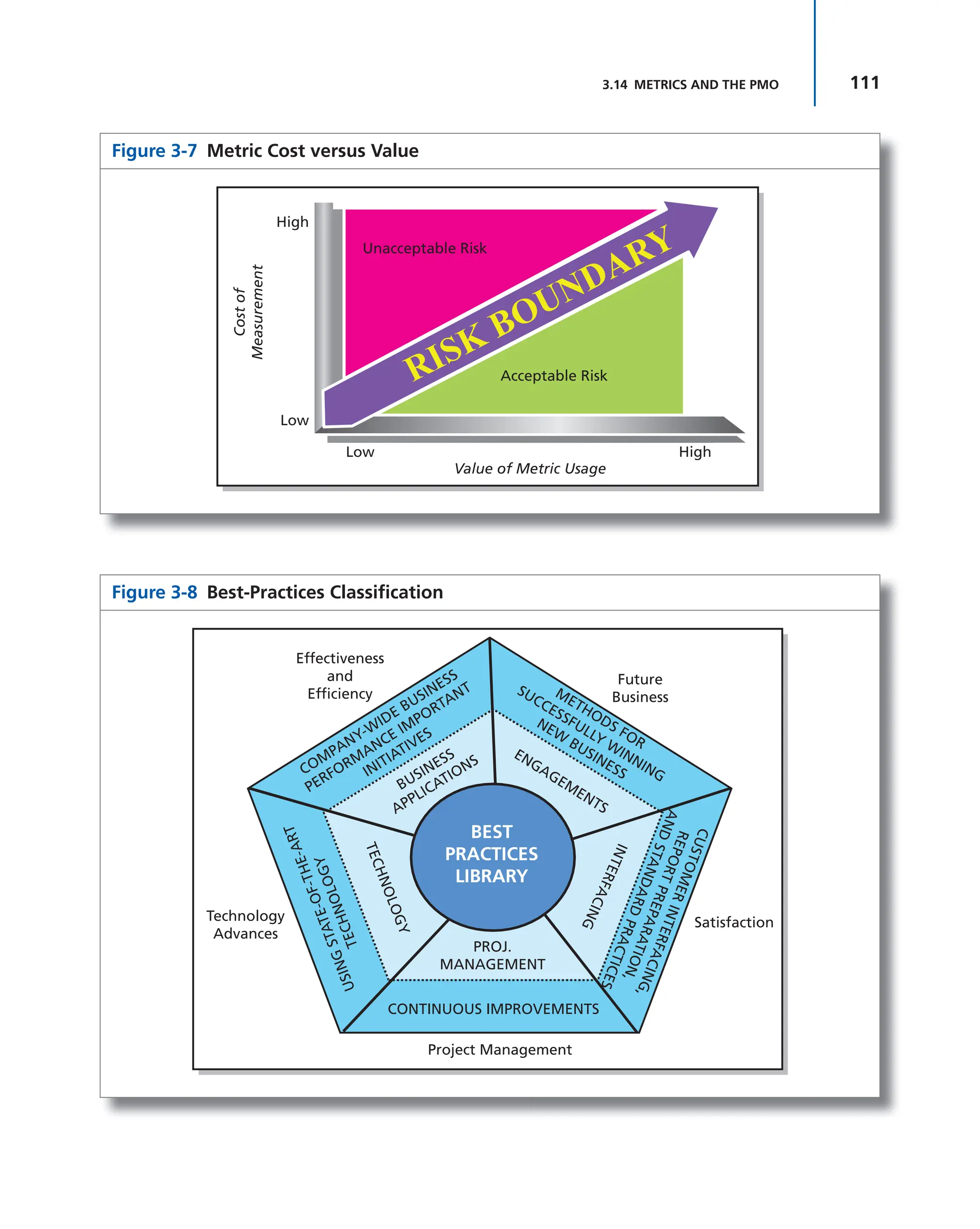

the project manager will not be able to extract value (i.e., loyalty) from

them in return. Over time, they will defect to other contractors.

The importance of value is clear. According to a study by the American Productivity and Quality Center:

Although customer satisfaction is still measured and used in decision

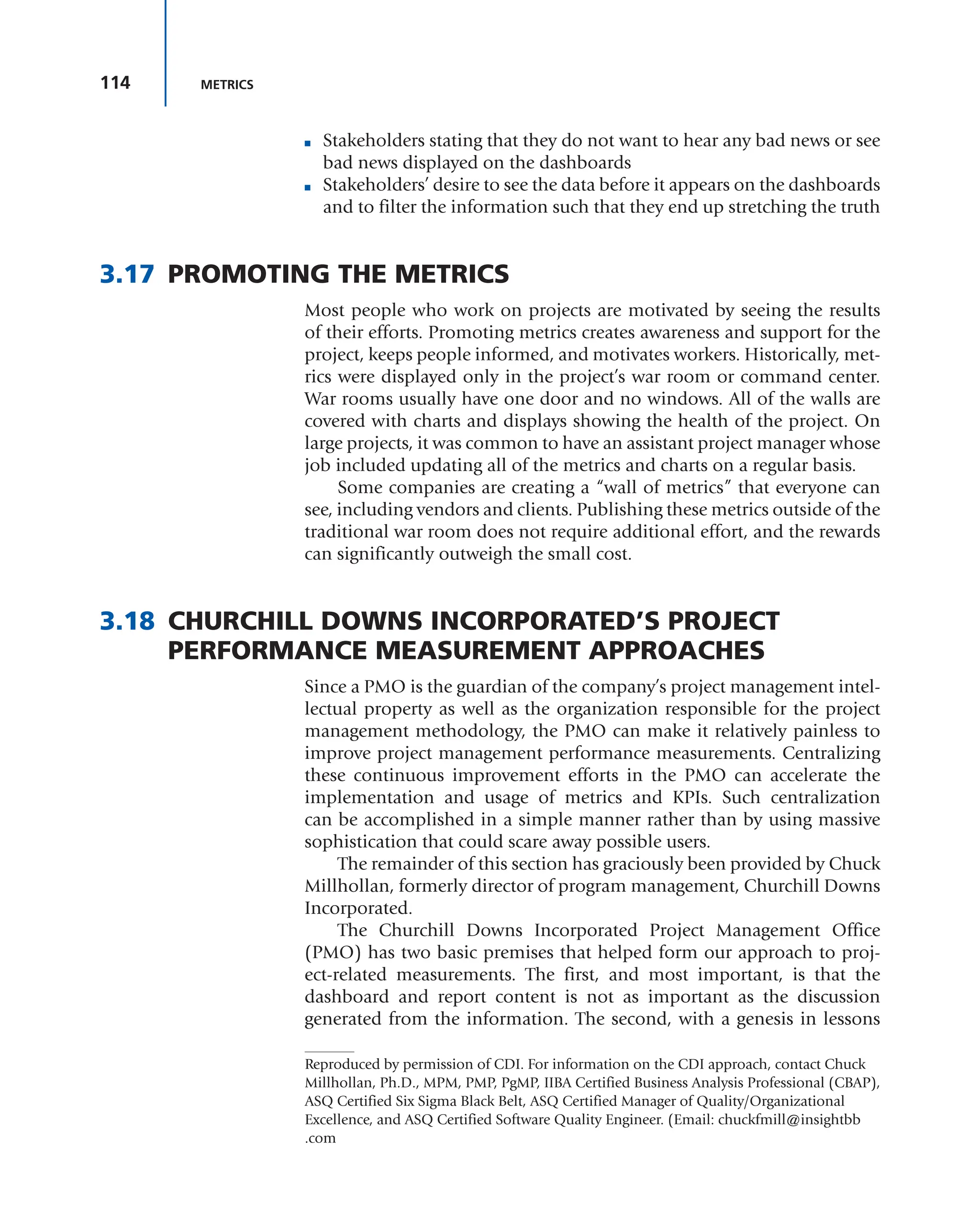

making, the majority of partner organizations [used in this study] have

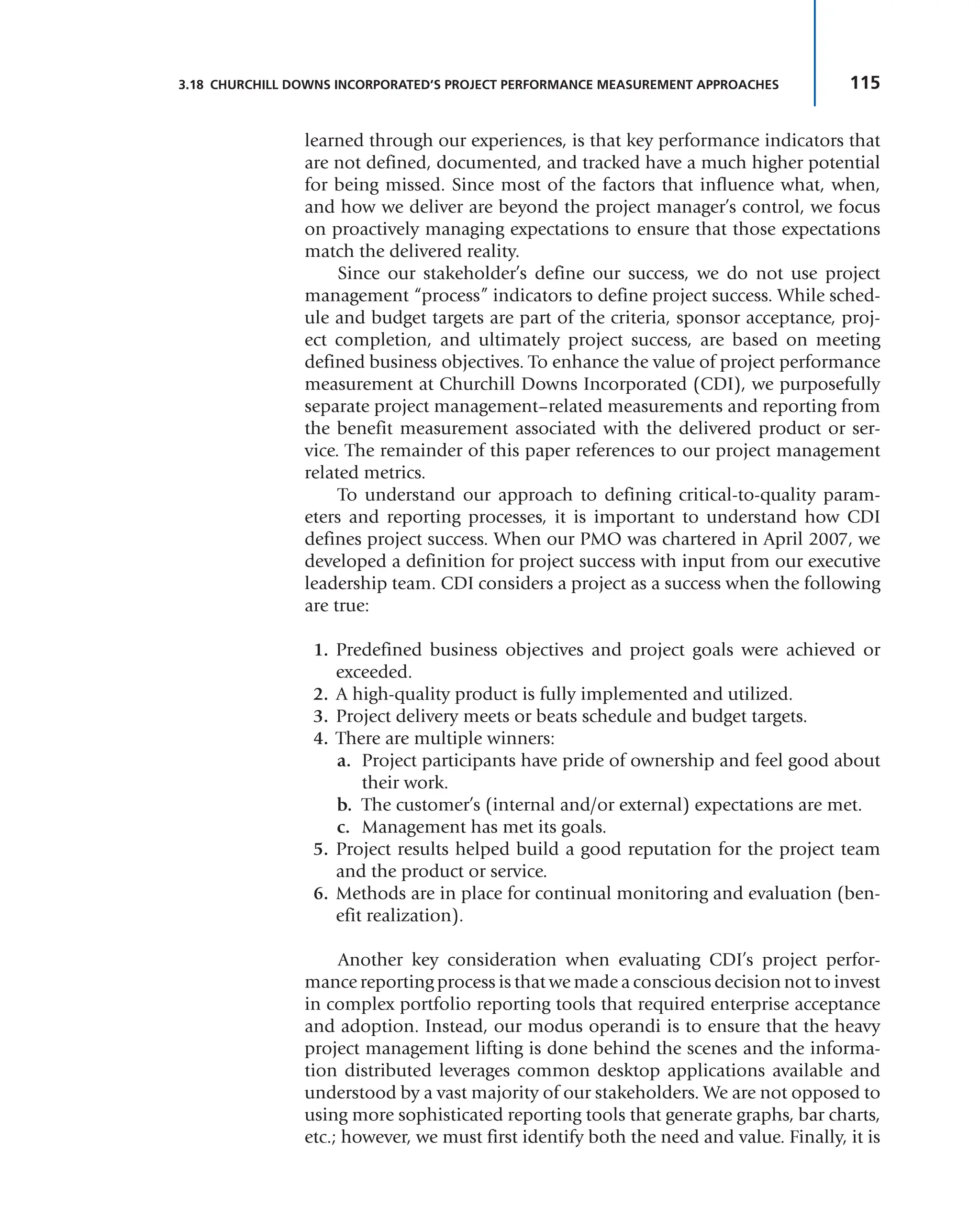

shifted their focus from customer satisfaction to customer value.6

Project managers of the future must consider themselves as the creators of value. In the courses I teach, I define a “project” as “a set of values scheduled for sustainable realization.” Project managers therefore must establish the correct metrics so that the client and stakeholders can track the value that will be created. Measuring and reporting customer value throughout the project is now a competitive necessity. If it is done correctly, it will build emotional bonds with your clients.

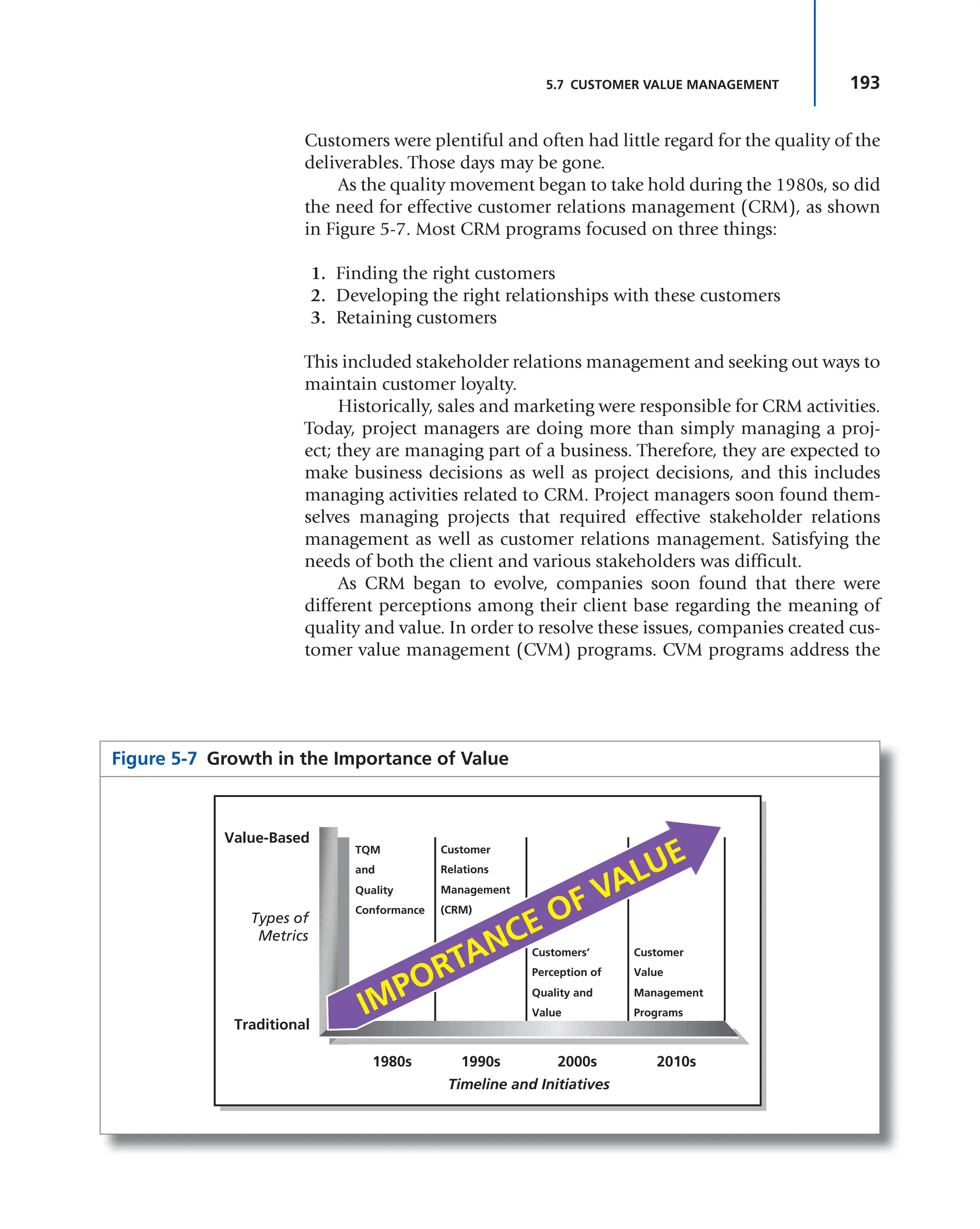

5.7 CUSTOMER VALUE MANAGEMENT

For decades, many companies believed that they had an endless supply of potential customers. Companies called this the “doorknob” approach, whereby they expected to find a potential customer behind every door. Under this approach, customer loyalty was nice to have but not a necessity.

6. American Productivity and Quality Center, “Customer Value Measurement: Gaining Strategic Advantage,” 1999, p. 8.](https://image.slidesharecdn.com/projectmanagementmetrics-kpis-anddashboardsaguidetomeasuringandmonitoringprojectperformance-231112195000-7390cb3a/75/Project-management-metrics-KPIs-and-dashboards-_-a-guide-to-measuring-and-monitoring-project-performance-pdf-200-2048.jpg)

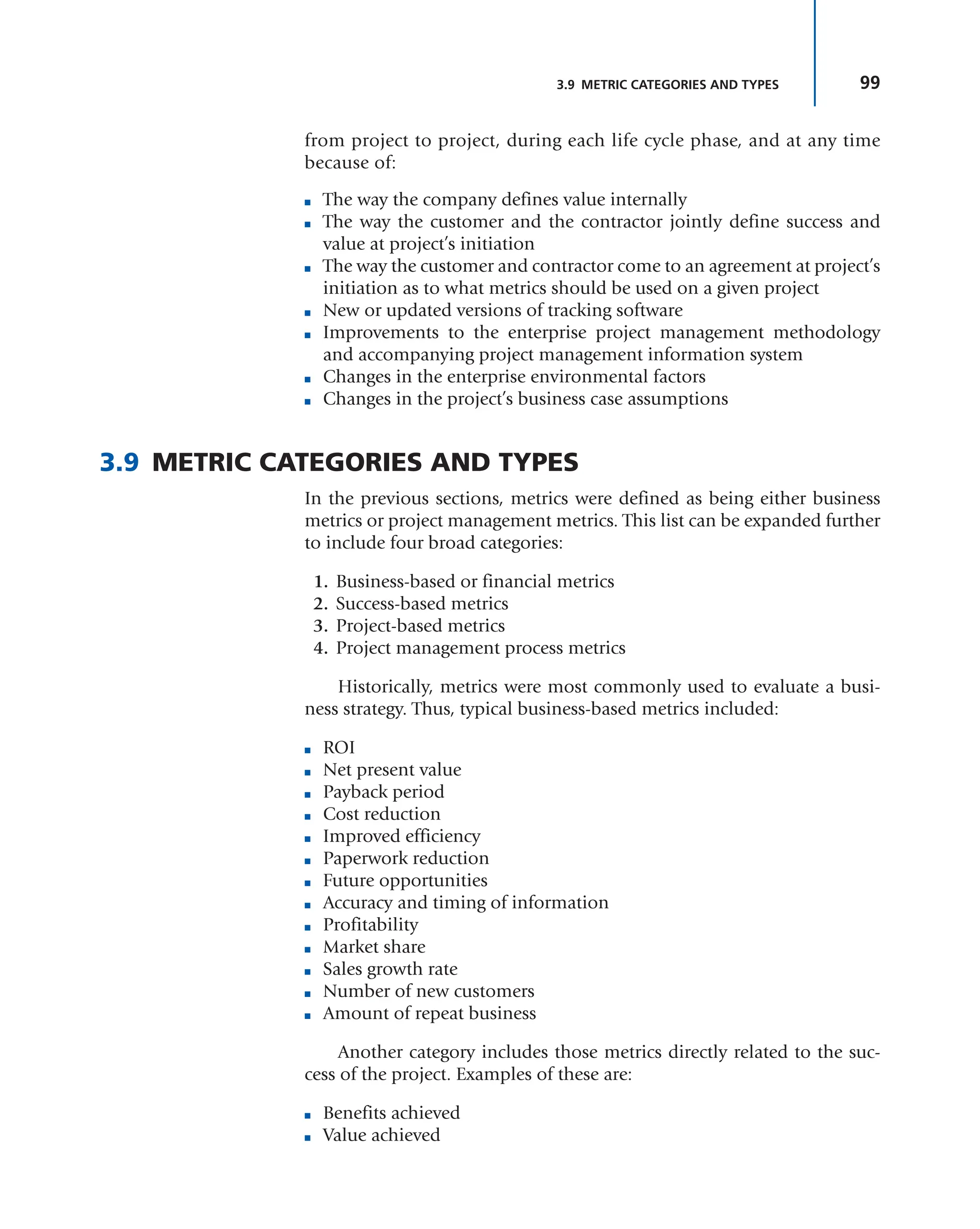

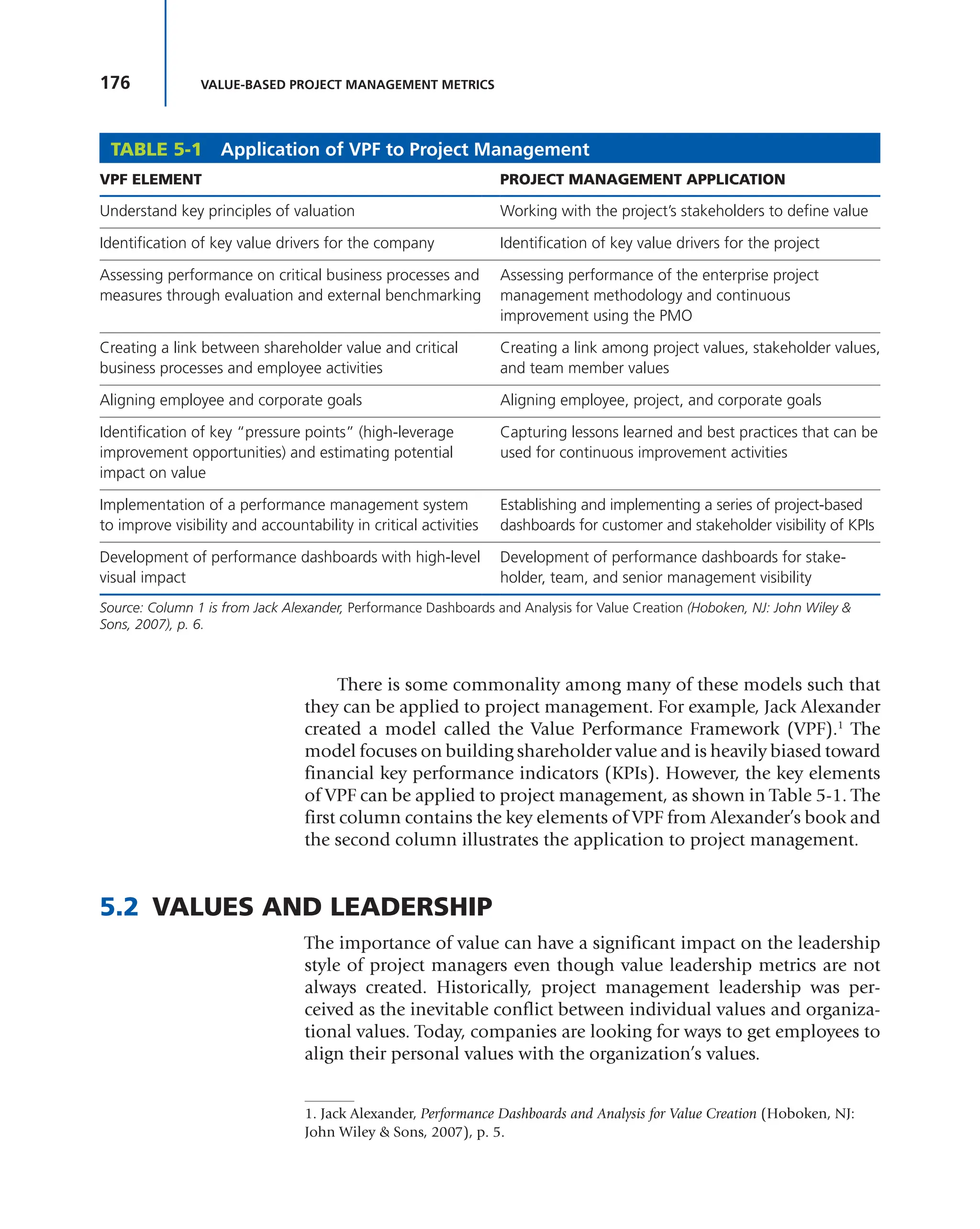

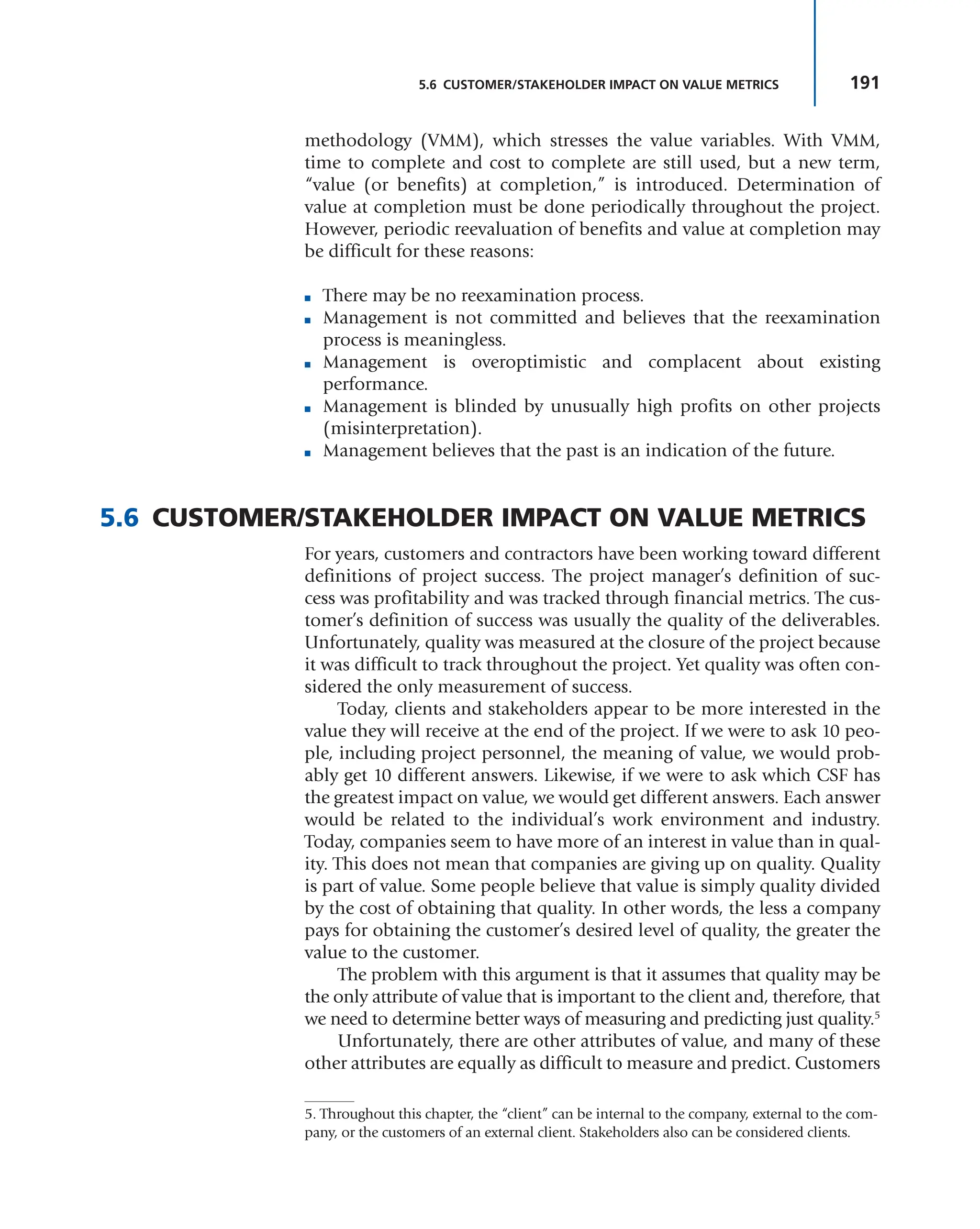

![5.8 THE RELATIONSHIP BETWEEN PROJECT MANAGEMENT AND VALUE

■ Trade-offs are made without considering the impact on the final value.

■ Quality and value are considered as synonymous; quality is considered

the only value attribute.

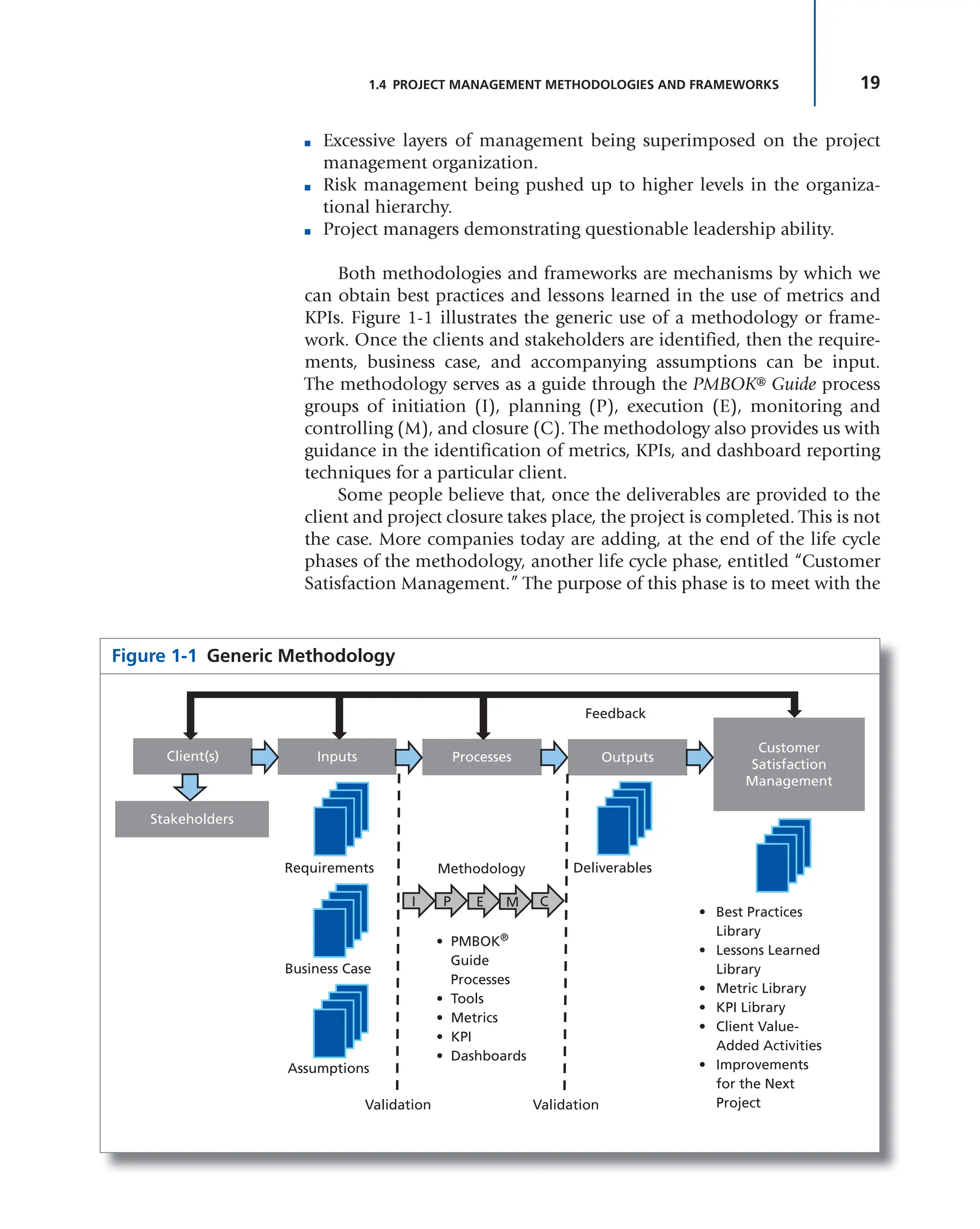

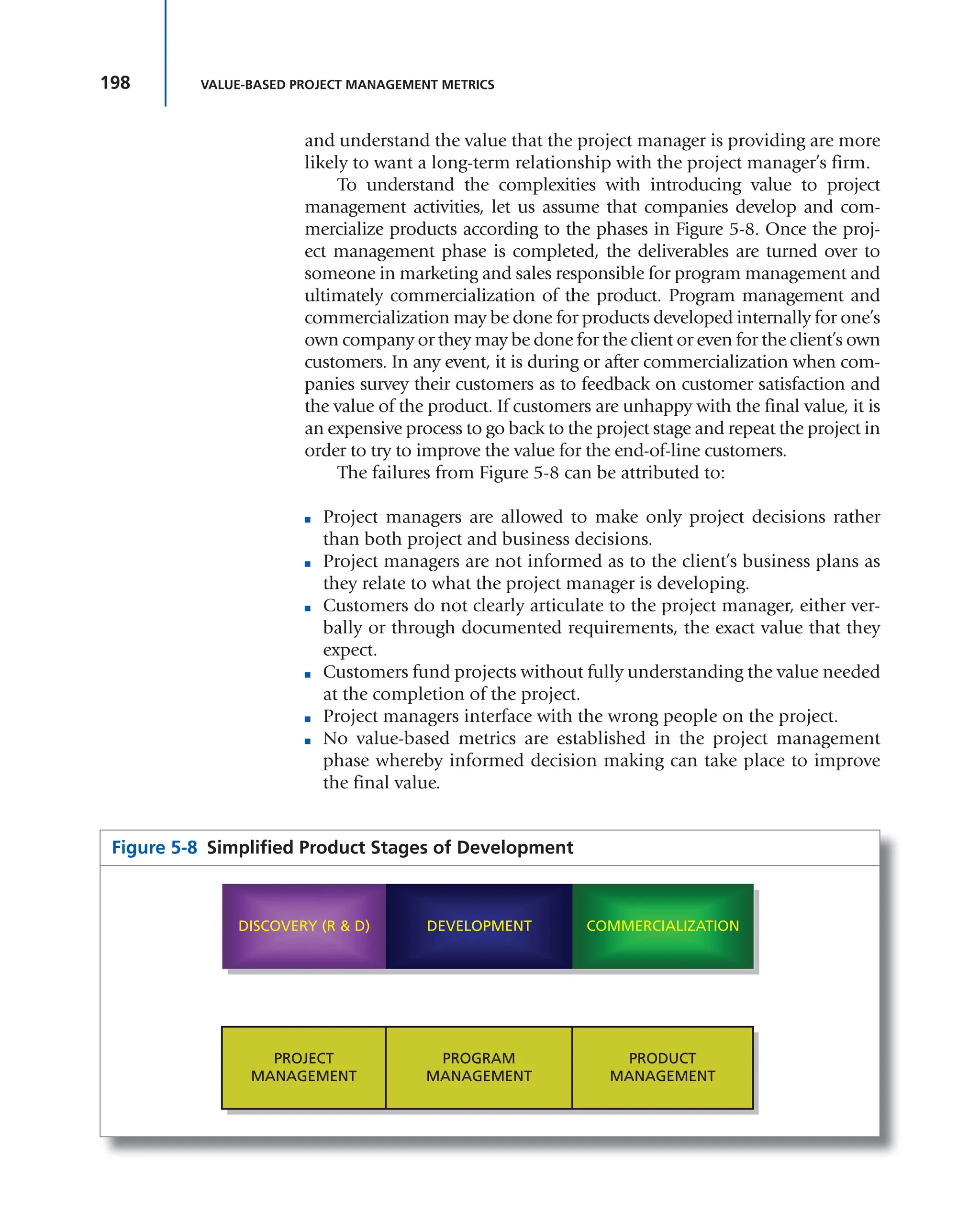

Returning to Figure 5-7, it can be seen that as CVM activities are approached, traditional metrics are replaced with value-based metrics. The value metric should not be used to replace other metrics that people may be comfortable using for tracking project performance. Instead, it should be used to support other metrics being used. As stated by a healthcare IT consulting company:

[The need for a value metric] “hits directly on the point” that so many

of us consultants encounter on a regular basis surrounding the fact that

often the metrics that are utilized to ensure success of projects and pro-

grams are too complex, don’t really demonstrate the key factors of what

the sponsors, stakeholders, investors, client, etc., “need” in order to dem-

onstrate value and success in a project and do not always “circle back”

to the mission, vision, and goals of the project at the initial kick-off. What

we have found is that the majority of our clients are constantly in search

of something simple, streamlined, and transparent (truly just as simple

as that). In addition, they generally request a “pretty picture” format;

something that is clear, concise and easy and quick to glance at in order

to determine areas that require immediate attention. They crave for the

red, yellow, green. They yearn for a crisp measure to state what the “cur-

rent state” truly is [and whether the desired value is being achieved]. They

are tired and weary of the “circles,” they are exhausted of the mounds of

paperwork, and they want to take it back to the straight facts.

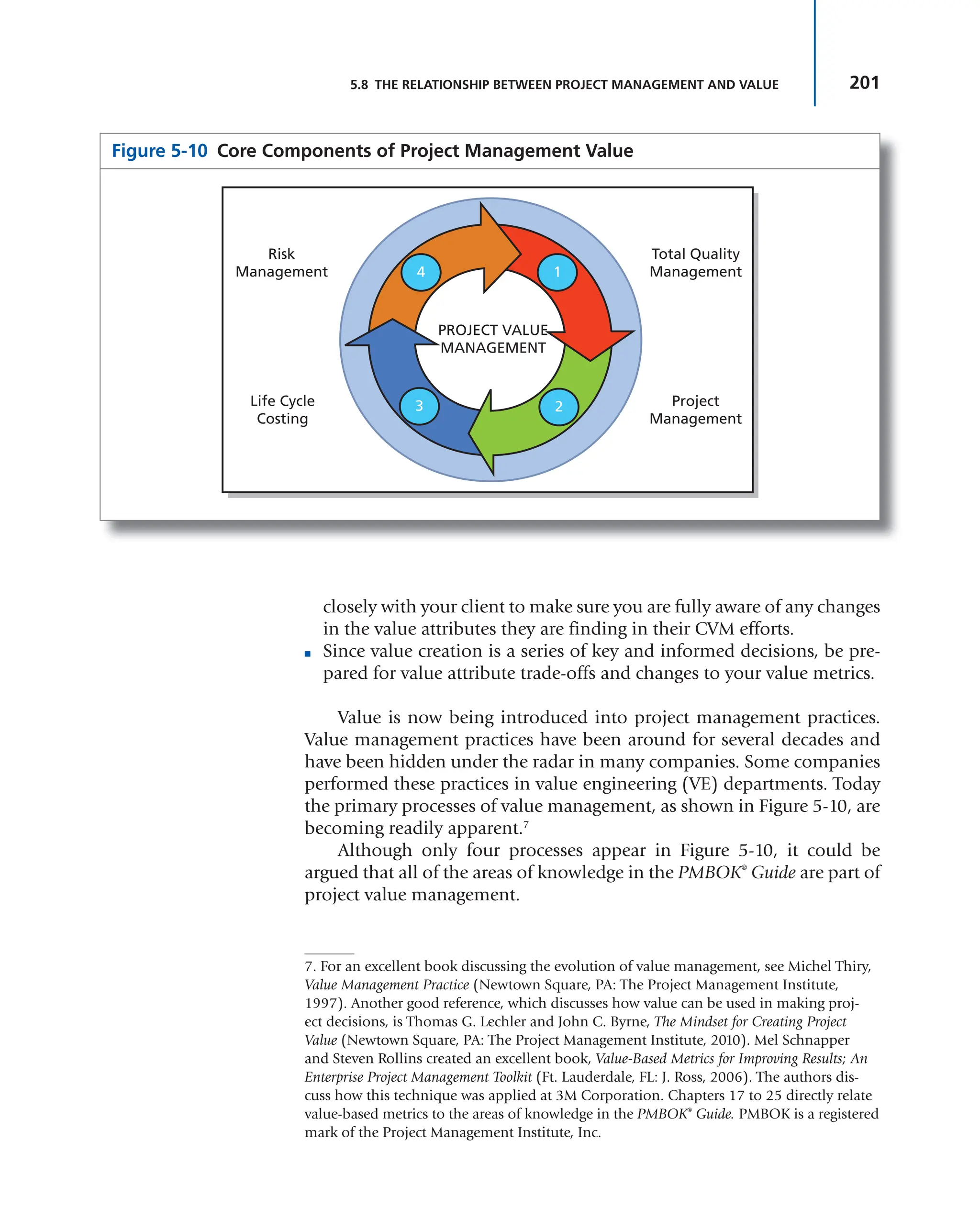

Most companies are now in the infancy stage of determining how to

define and measure value. For internal projects, we are struggling in deter-

mining the right value metrics to assist us in project portfolio manage-

ment with the selection of one project over another. For external projects,

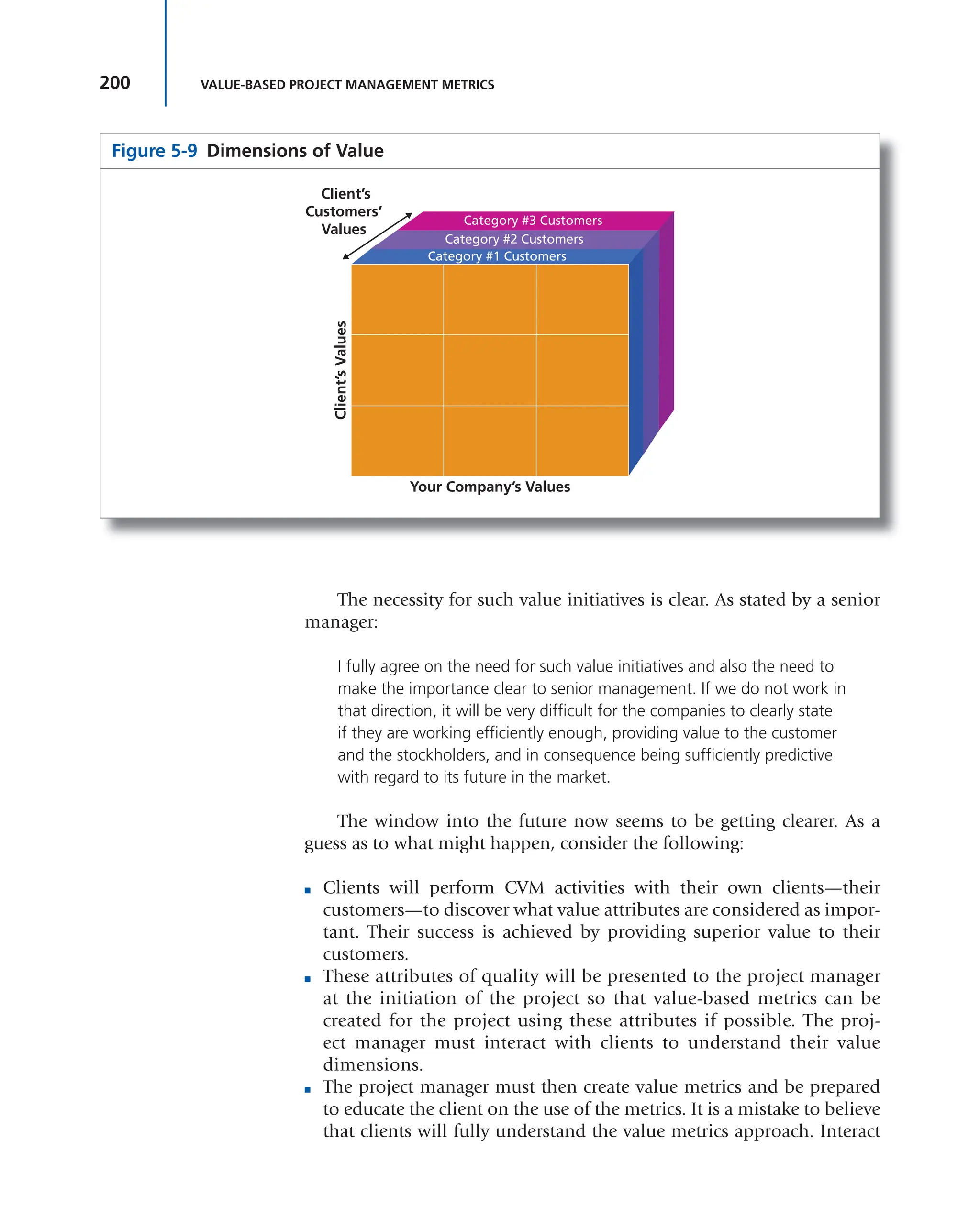

the picture is more complex. Unlike traditional metrics used in the past,

value-based metrics are different for each client and each stakeholder.

Figure 5-9 shows the three dimensions of values: the parent company’s val-

ues, the client’s values, the client’s customers’ values, and a fourth dimen-

sion could be added, namely stakeholder values. It should be understood

that value that the completion of a project brings to the project manager’s

organization may not be as important as the total value that the project

brings to the client’s organization and the client’s customer base.

For some companies, the use of value metrics will create additional challenges. As stated by a global IT consulting company:

This will be a cultural change for us and for the customer. Both sides will need to have staff competent in identifying the right metrics to use and weightings; and then be able to explain in layman’s language what the value metric is about.](https://image.slidesharecdn.com/projectmanagementmetrics-kpis-anddashboardsaguidetomeasuringandmonitoringprojectperformance-231112195000-7390cb3a/75/Project-management-metrics-KPIs-and-dashboards-_-a-guide-to-measuring-and-monitoring-project-performance-pdf-207-2048.jpg)
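The "metrics to use and weightings" mentioned in the quotation above can be illustrated with a small calculation. The Python sketch below assumes, purely for illustration, that a single value metric is formed as a weighted average of attribute scores. The function name `value_score`, the 0 to 10 scoring scale, and the sample weights are hypothetical and are not taken from the book; the attribute names come from the value attributes listed earlier (quality, price, timing).

```python
def value_score(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Return a weighted average of attribute scores.

    Assumption: each attribute is scored on a common scale (here 0 to 10)
    and the weights express relative importance agreed with the client.
    """
    if set(scores) != set(weights):
        raise ValueError("scores and weights must cover the same attributes")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    weighted_sum = sum(scores[name] * weights[name] for name in scores)
    return weighted_sum / total_weight


# Hypothetical client weighting: quality counts twice as much as price or timing.
weights = {"quality": 2, "price": 1, "timing": 1}
scores = {"quality": 8, "price": 6, "timing": 4}

print(value_score(scores, weights))  # (8*2 + 6*1 + 4*1) / 4 = 6.5
```

Because each client and stakeholder may define value differently, the same scores can yield different results under a different set of weights, which is why the weights are passed in rather than fixed in the function.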

![6.11 BRIGHTPOINT CONSULTING, INC.: DESIGNING EXECUTIVE DASHBOARDS

3. Trend icons: A trend icon represents how a key performance indicator or metric is behaving over a period of time. It can be in one of three states: moving toward a target, [moving] away from a target, or static. Various symbols may be used to represent these states, including arrows or numbers. Trend icons can be combined with alert icons to display two dimensions of information within the same visual space. This can be accomplished by placing the trend icon within a color- or shape-coded alert icon.

When to use: Trend icons can be used by themselves in the same situation you would use an alert icon, or to supplement another more complex KPI visualization when you want to provide a reference to the KPI’s movement over time.

4. Progress bars: A progress bar represents more than one dimension of information about a KPI via its scale, color, and limits. At its most basic level, a progress bar can provide a visual representation of progress along a one-dimensional axis. With the addition of color and alert levels, you can also indicate when you have crossed specific target thresholds as well as how close you are to a specific limit.

When to use: Progress bars are primarily used to represent relative progress toward a positive quantity of a real number. They do not work well when the measure you want to represent can have negative values: The use of shading within a “bar” to represent a negative value can be confusing to the viewer because any shading is seen to represent some value above zero, regardless of the label on the axis. Progress bars also work well when you have KPIs or metrics that share a common measure along an axis (similar to a bar chart), and you want to see relative performance across those KPIs/metrics.

5. Gauges: A gauge is an excellent mechanism by which to quickly assess both positive and negative values along a relative scale. Gauges lend themselves to dynamic data that can change over time in relationship to underlying variables. Additionally, the use of embedded alert levels allows you to quickly see how close or far away you are from a specific threshold.

When to use: Gauges should be reserved for the highest level and most critical metrics or KPIs on a dashboard because of their visual density and tendency to focus user attention. Most of these critical operational metrics/KPIs will be more dynamic values that change on a frequent basis throughout the day. One of the most important considerations in using gauges is their size: too small, and it is difficult for the viewer to discern relative values because of the density of the “ink” used to represent the various gauge components; too large, and you end up wasting valuable screen space.

With more sophisticated data visualization packages, gauges also serve as excellent context-sensitive navigation elements because of their visual predominance within the dashboard.](https://image.slidesharecdn.com/projectmanagementmetrics-kpis-anddashboardsaguidetomeasuringandmonitoringprojectperformance-231112195000-7390cb3a/75/Project-management-metrics-KPIs-and-dashboards-_-a-guide-to-measuring-and-monitoring-project-performance-pdf-286-2048.jpg)
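The trend-icon states described above (moving toward a target, moving away from a target, or static) and their combination with a color-coded alert icon can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not BrightPoint's implementation: the names `Trend`, `classify_trend`, and `alert_color`, the `tolerance` parameter, and the two gap thresholds are all hypothetical.

```python
from enum import Enum


class Trend(Enum):
    """The three trend-icon states named in the text."""
    TOWARD_TARGET = "moving toward target"
    AWAY_FROM_TARGET = "moving away from target"
    STATIC = "static"


def classify_trend(previous: float, current: float, target: float,
                   tolerance: float = 0.0) -> Trend:
    """Compare the gap to the target before and after the latest reading.

    `tolerance` is an assumed parameter: a change in gap no larger than
    this is treated as static.
    """
    previous_gap = abs(target - previous)
    current_gap = abs(target - current)
    if abs(previous_gap - current_gap) <= tolerance:
        return Trend.STATIC
    if current_gap < previous_gap:
        return Trend.TOWARD_TARGET
    return Trend.AWAY_FROM_TARGET


def alert_color(current: float, target: float,
                warn_gap: float, critical_gap: float) -> str:
    """Map the distance from target to a red/yellow/green alert level.

    Both gap thresholds are hypothetical; a real dashboard would take
    them from the tolerance bands agreed for the KPI.
    """
    gap = abs(target - current)
    if gap >= critical_gap:
        return "red"
    if gap >= warn_gap:
        return "yellow"
    return "green"


# A KPI that rose from 80 to 90 against a target of 100.
trend = classify_trend(previous=80, current=90, target=100)
color = alert_color(current=90, target=100, warn_gap=5, critical_gap=20)
print(f"{trend.value} ({color})")
```

In this example the KPI is closing on its target but is still 10 units away, so the combined icon would show a "moving toward target" trend inside a yellow alert, which is the two-dimensions-in-one-space idea the text describes.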

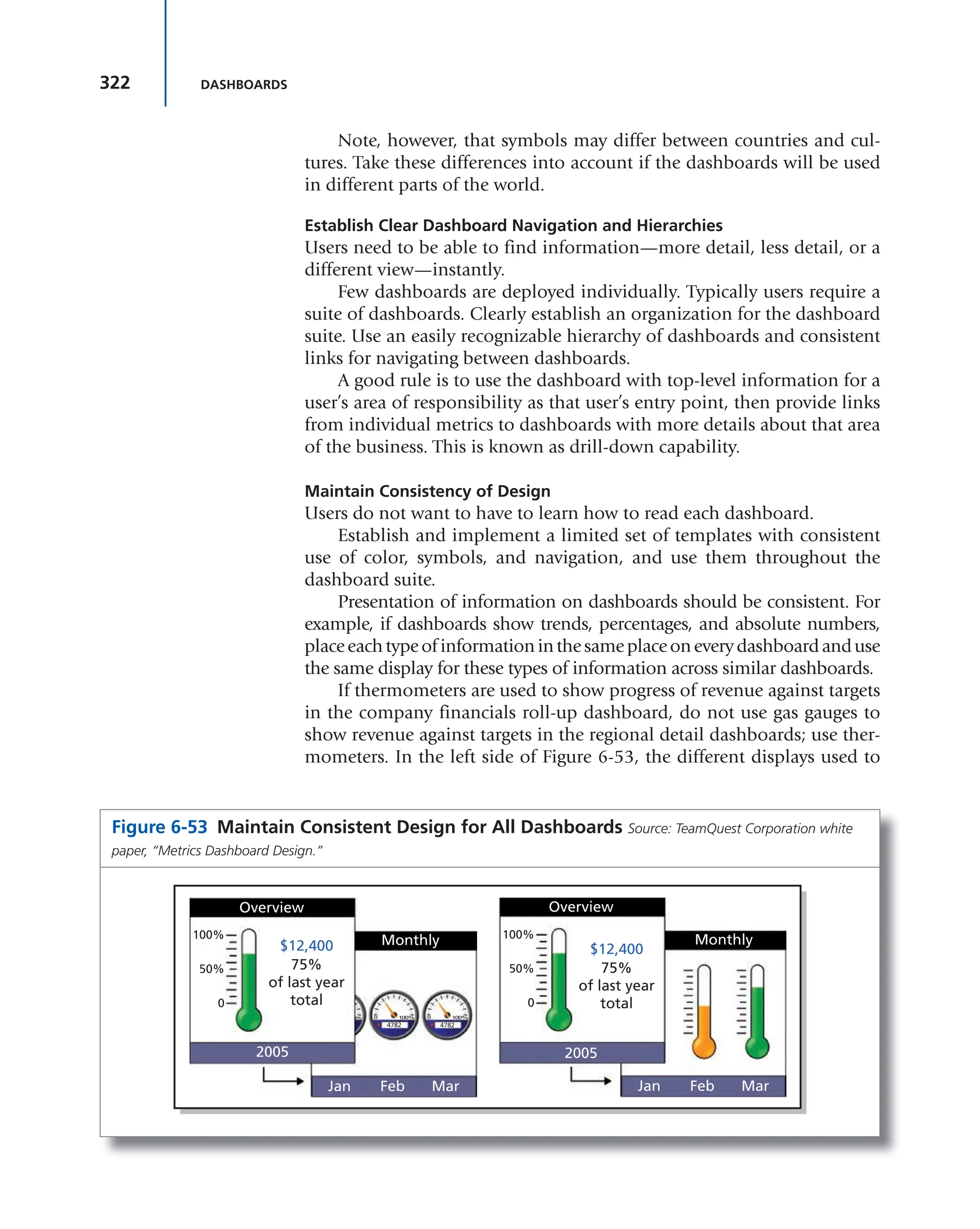

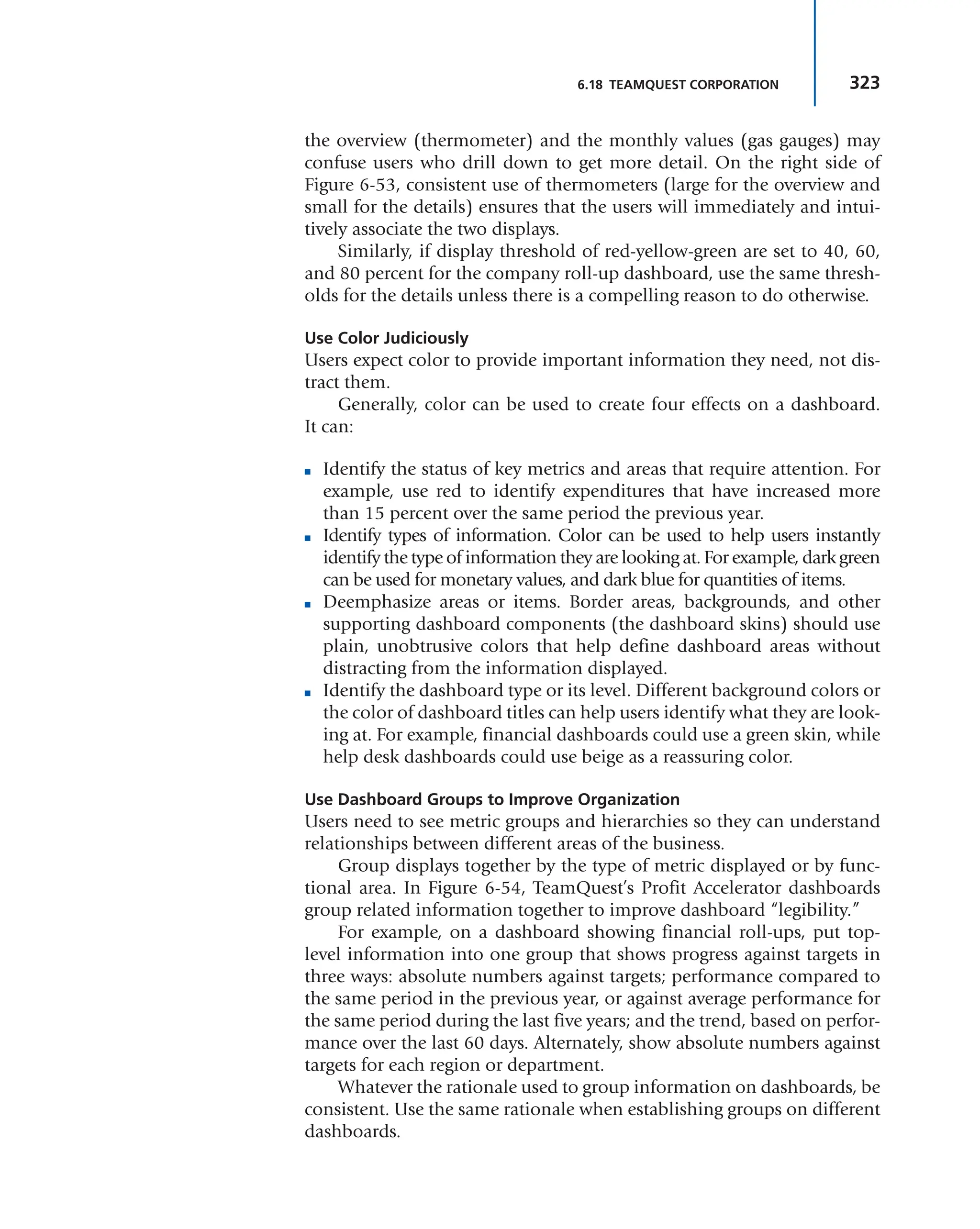

![DASHBOARDS

The accuracy of the time estimates is important when using burn-down charts. If time requirements are continuously overestimated or underestimated, these charts allow for the inclusion of an efficiency factor to remove the variability.

There are always advantages and disadvantages to each metric chosen and the way that the data is displayed. Some people prefer to use burn-up charts, arguing that burn-down charts do not necessarily show how much work was actually completed in a given time period.

There are many more metrics common to agile and Scrum projects, and they are somewhat different from traditional project management metrics. Agile metrics generally include more business-oriented measures. Typical agile metrics might include:

■ Total project duration

■ Features delivered and time to market

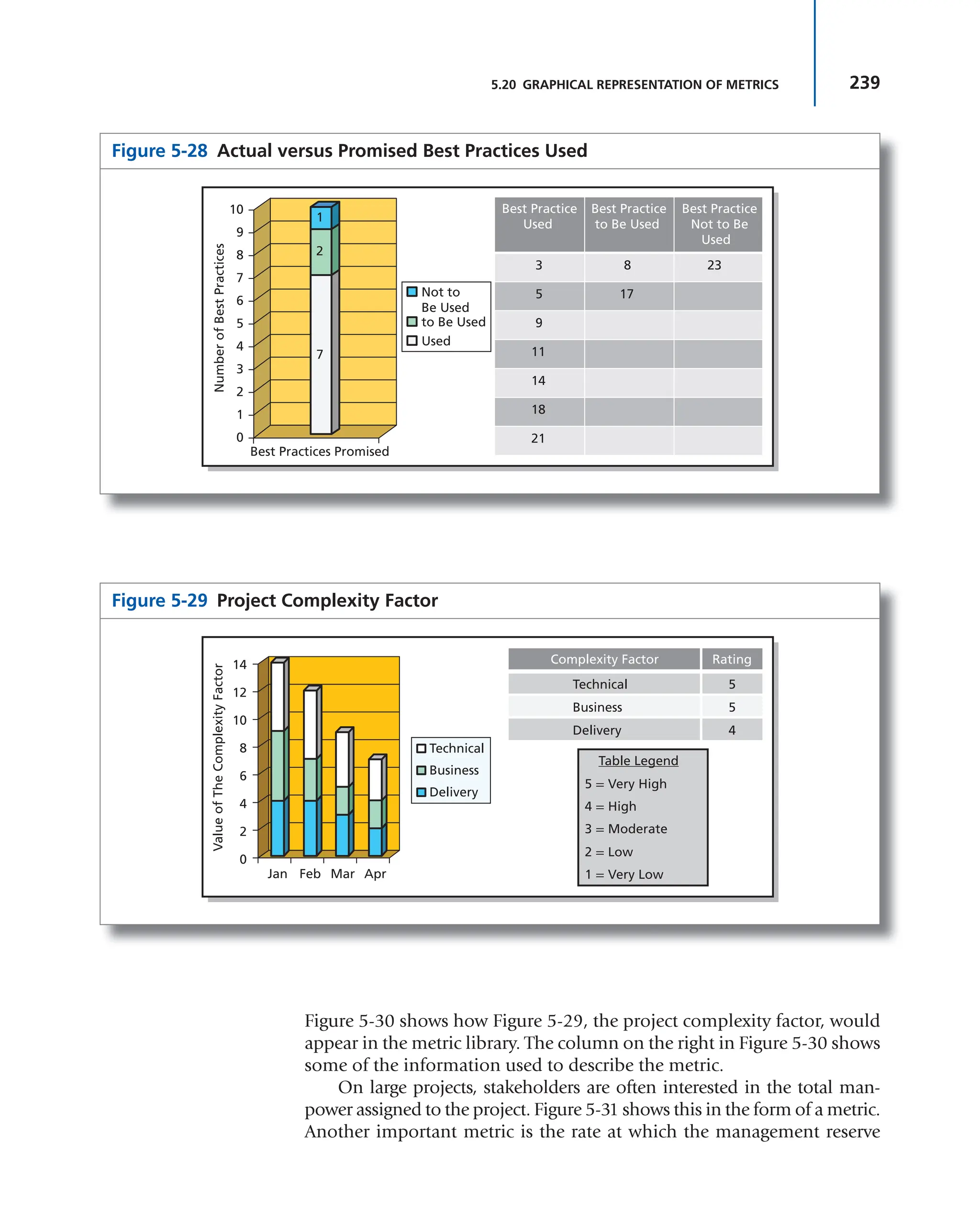

■ Total project costs

■ Number of defects detected, open or escaped

■ Number of impediments discovered, removed and escalated

■ Financial factors (e.g., return on investment [ROI], net present value, internal rate of return, payback period)

■ Customer satisfaction

■ Business value delivered

■ Sprint burn-up or burn-down charts

■ Sprint goal success rates

■ Capacity per sprint

■ Scope increase or decrease

■ Team member utilization rate

■ Team member turnover rate

■ Company turnover rate (as it might affect morale)

■ Capital redeployment (i.e., comparing the cost of further improvements against the future value received)

6.16 DATA WAREHOUSES

During the past 15 years, companies have established project management offices (PMOs), which have become the guardians of the company’s intellectual property on project management. Today, project managers are expected to make both project decisions and business decisions. It is therefore unrealistic to expect each PMO to have at its disposal all of the business intelligence (BI) that will be needed. PMOs may be replaced by information or data warehouses. On large and/or complex projects, each stakeholder may have an information warehouse, and the project team may have to tap into each warehouse to get the appropriate information for the dashboards. Warehouse creation projects generally are performed by the IT departments with little input from prospective user groups.](https://image.slidesharecdn.com/projectmanagementmetrics-kpis-anddashboardsaguidetomeasuringandmonitoringprojectperformance-231112195000-7390cb3a/75/Project-management-metrics-KPIs-and-dashboards-_-a-guide-to-measuring-and-monitoring-project-performance-pdf-321-2048.jpg)
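The efficiency factor mentioned in the burn-down chart discussion on this page is not defined in the excerpt. One plausible reading, assumed here for illustration only, is the ratio of actual to estimated hours on work already completed, applied as a multiplier to the remaining estimates. The function names `efficiency_factor` and `adjusted_burn_down` and the sample figures in this Python sketch are hypothetical.

```python
def efficiency_factor(estimated_hours: list[float],
                      actual_hours: list[float]) -> float:
    """Ratio of actual to estimated effort on completed tasks.

    A value above 1 means the team has been underestimating; a value
    below 1 means it has been overestimating. (Assumed definition.)
    """
    if len(estimated_hours) != len(actual_hours):
        raise ValueError("need one actual value per estimate")
    total_estimated = sum(estimated_hours)
    if total_estimated <= 0:
        raise ValueError("estimated hours must sum to a positive number")
    return sum(actual_hours) / total_estimated


def adjusted_burn_down(remaining_by_day: list[float],
                       factor: float) -> list[float]:
    """Scale each day's remaining-work estimate by the efficiency factor."""
    return [hours * factor for hours in remaining_by_day]


# Two finished tasks were estimated at 8 hours each but took 10 hours each.
factor = efficiency_factor([8, 8], [10, 10])
print(factor)  # 1.25

# Planned remaining hours at the end of each day, rescaled by the factor.
print(adjusted_burn_down([40, 24, 16, 0], factor))  # [50.0, 30.0, 20.0, 0.0]
```

Scaling the remaining work in this way keeps a consistent estimating bias from distorting the slope of the chart, which is the variability the text says the factor is meant to remove.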

![8.5 CRISIS DASHBOARDS

problem but not necessarily a crisis. But if the construction of a manufacturing plant is behind schedule and plant workers have already been hired to begin work on a certain date, or the delay in the plant will activate penalty clauses for late delivery of manufactured items for a client, then the situation could be a crisis. Sometimes exceeding a target favorably also triggers a crisis. As an example, a manufacturing company had a requirement to deliver 10 and only 10 units to a client each month. The company manufactured 15 units each month but could only ship 10 per month to the client. Unfortunately, the company did not have storage facilities for the extra units produced, and a crisis occurred.

How do project managers determine whether the out-of-tolerance condition is just a problem or a crisis that needs to appear on the crisis dashboard? The answer is in the potential damage that can occur. If any of the items on the next list could occur, then the situation would most likely be treated as a crisis and necessitate a dashboard display:

■ There is a significant threat to:

  ■ The outcome of the project.

  ■ The organization as a whole, its stakeholders, and possibly the general public.

  ■ The firm’s business model and strategy.

  ■ Worker health and safety.

■ There is a possibility for loss of life.

■ Redesigning existing systems is now necessary.

■ Organizational change will be necessary.

■ The firm’s image or reputation will be damaged.

■ Degradation in customer satisfaction could result in a present and future loss of significant revenue.

It is important to understand the difference between risk management and crisis management.

In contrast to risk management, which involves assessing potential threats and finding the best ways to avoid those threats, crisis management involves dealing with threats before, during, and after they have occurred. [That is, crisis management is proactive, not merely reactive.] It is a discipline within the broader context of management consisting of skills and techniques required to identify, assess, understand, and cope with a serious situation, especially from the moment it first occurs to the point that recovery procedures start.1

Crises often require that immediate decisions be made. Effective decision making requires information. If one metric appears to be in crisis

1. Wikipedia contributors, “Crisis Management,” Wikipedia, The Free Encyclopedia, https://

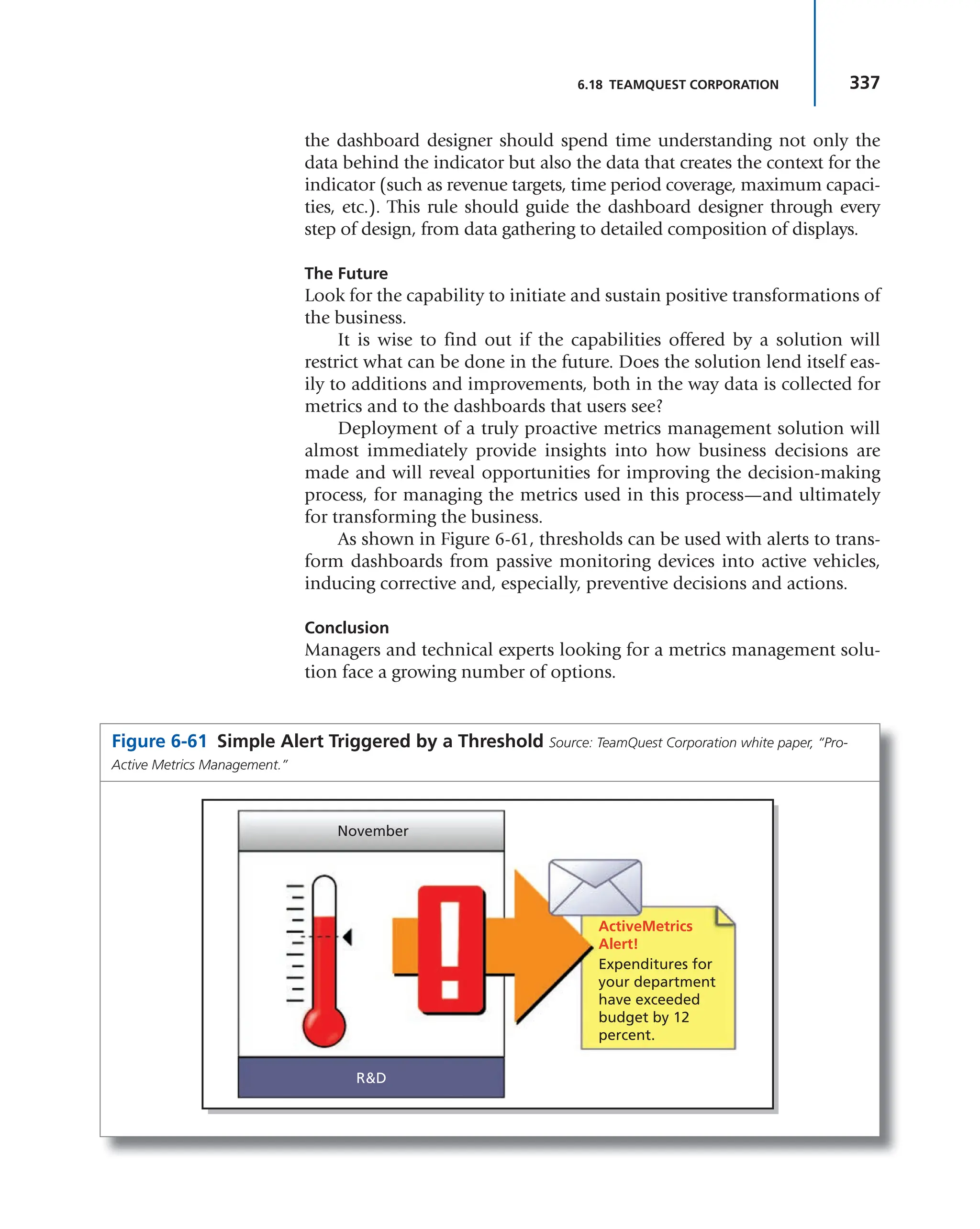

en.wikipedia.org/w/index.php?title=Crisis_management&oldid=774782017.](https://image.slidesharecdn.com/projectmanagementmetrics-kpis-anddashboardsaguidetomeasuringandmonitoringprojectperformance-231112195000-7390cb3a/75/Project-management-metrics-KPIs-and-dashboards-_-a-guide-to-measuring-and-monitoring-project-performance-pdf-426-2048.jpg)