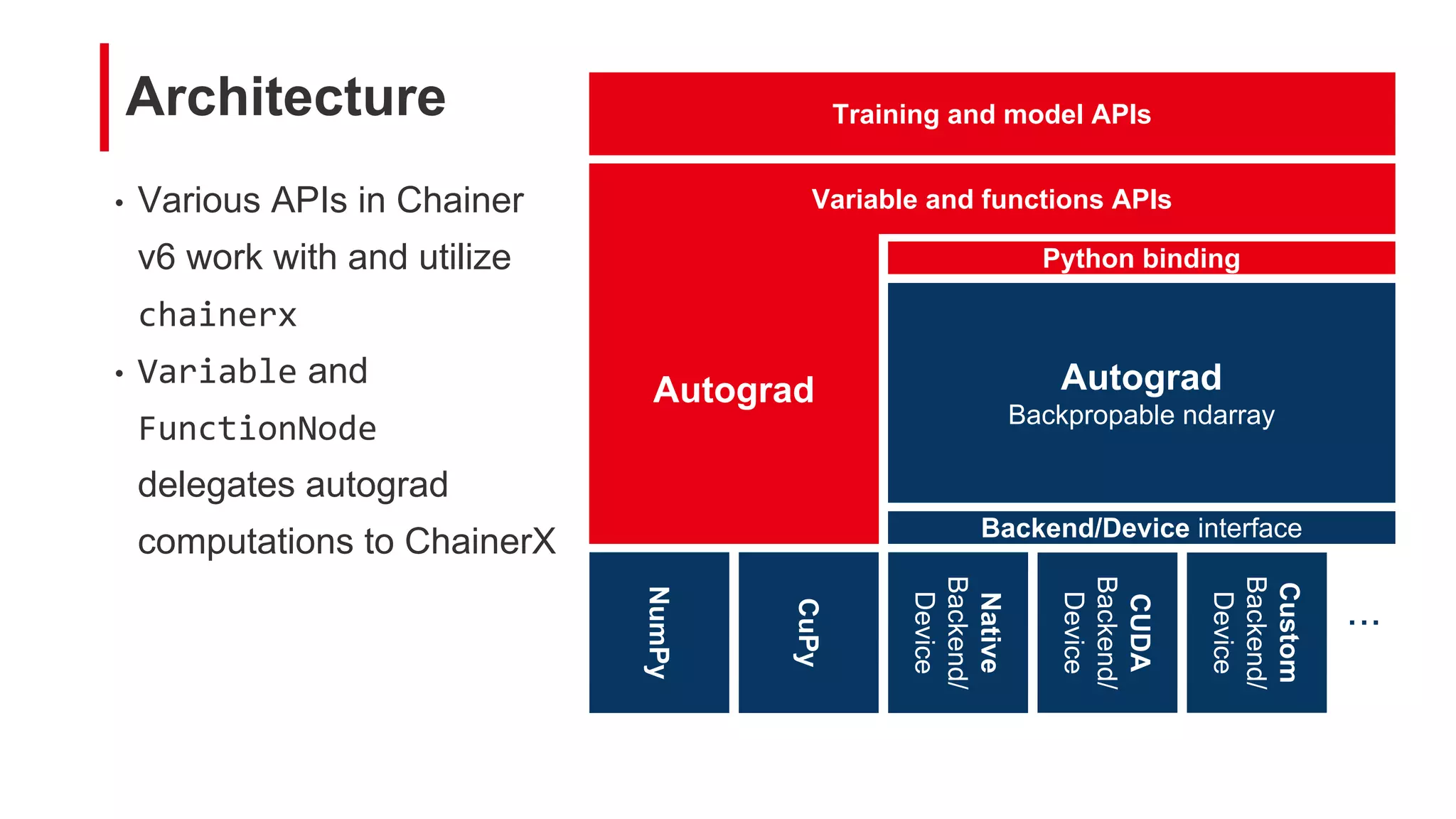

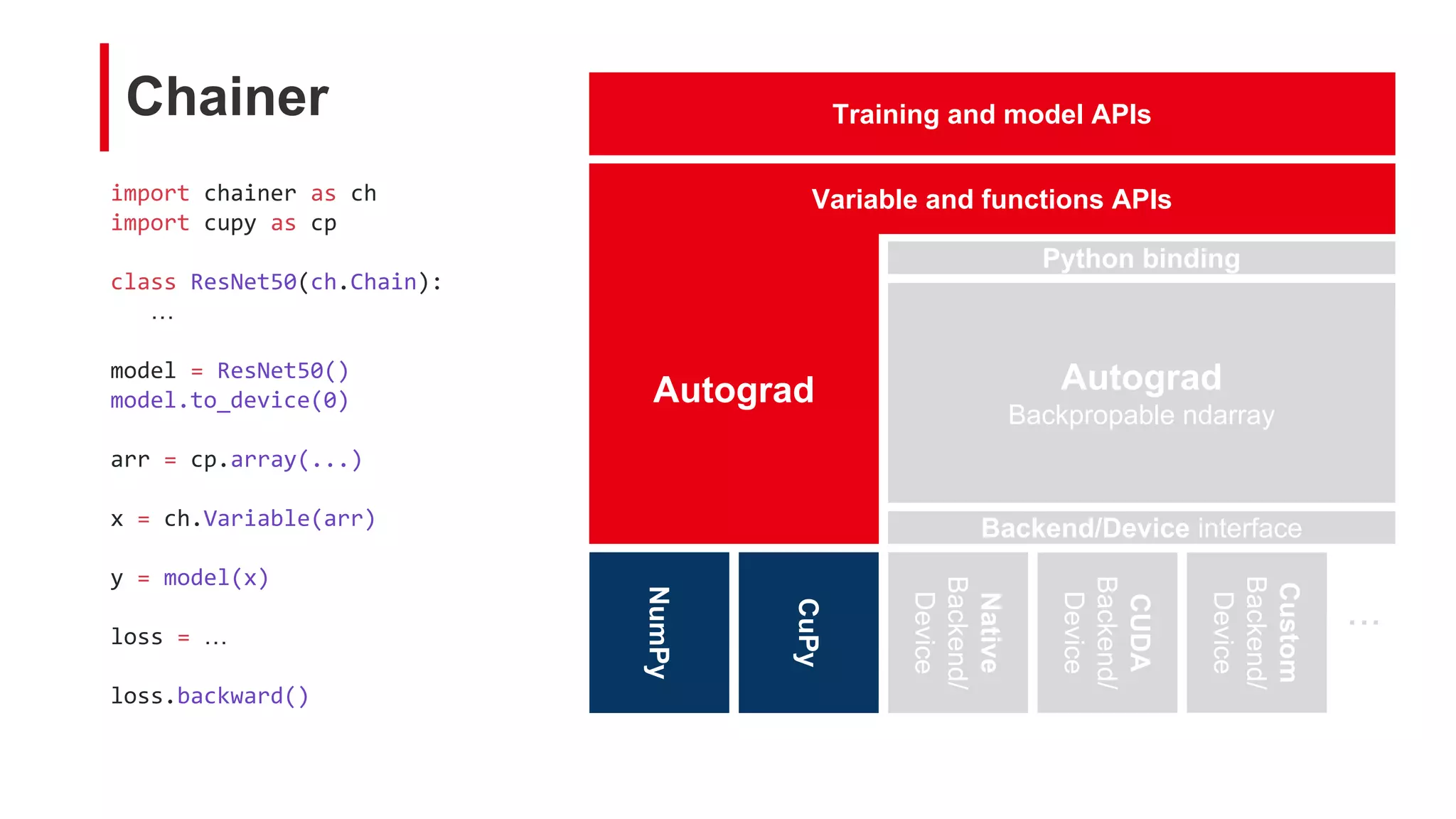

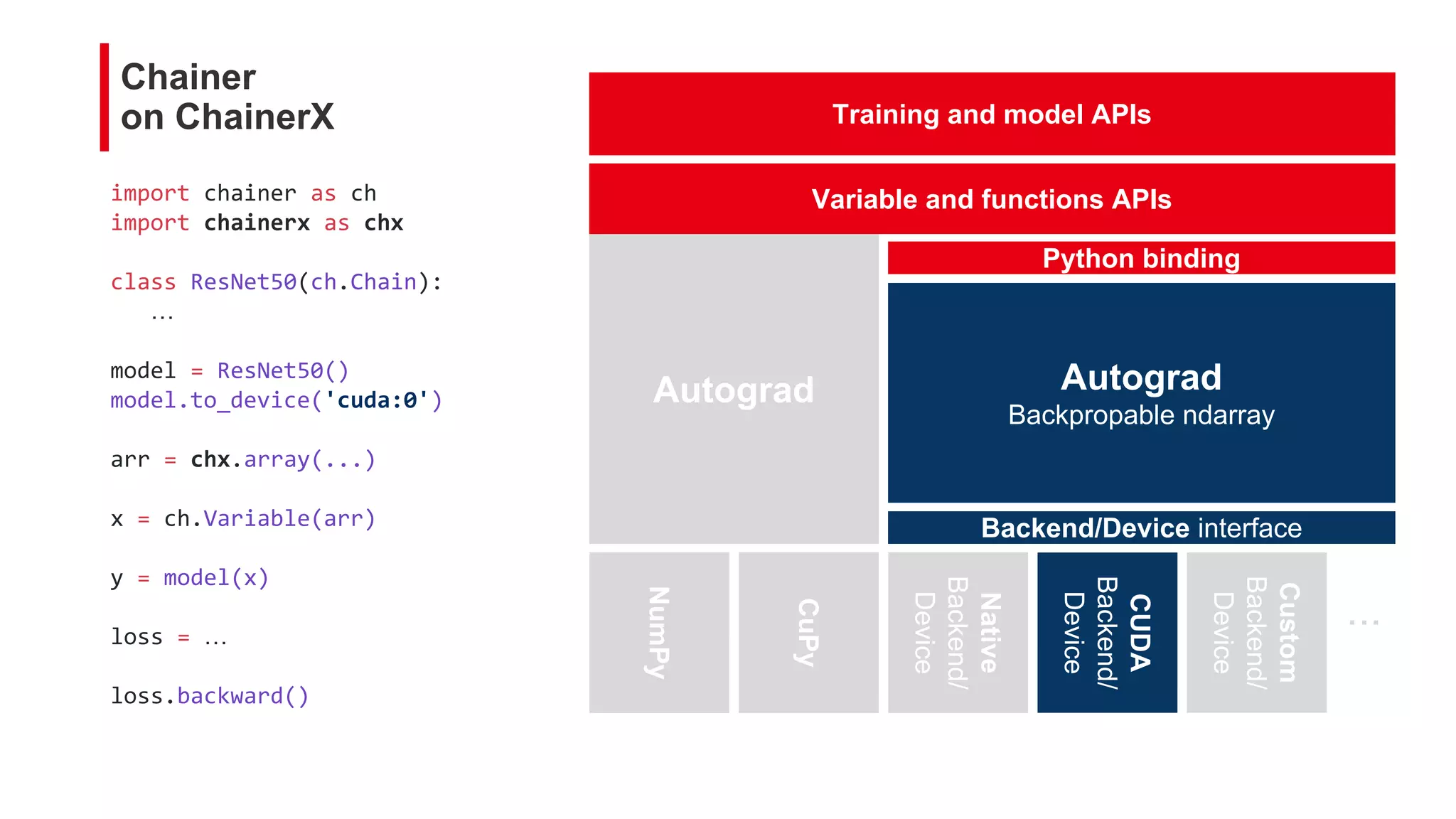

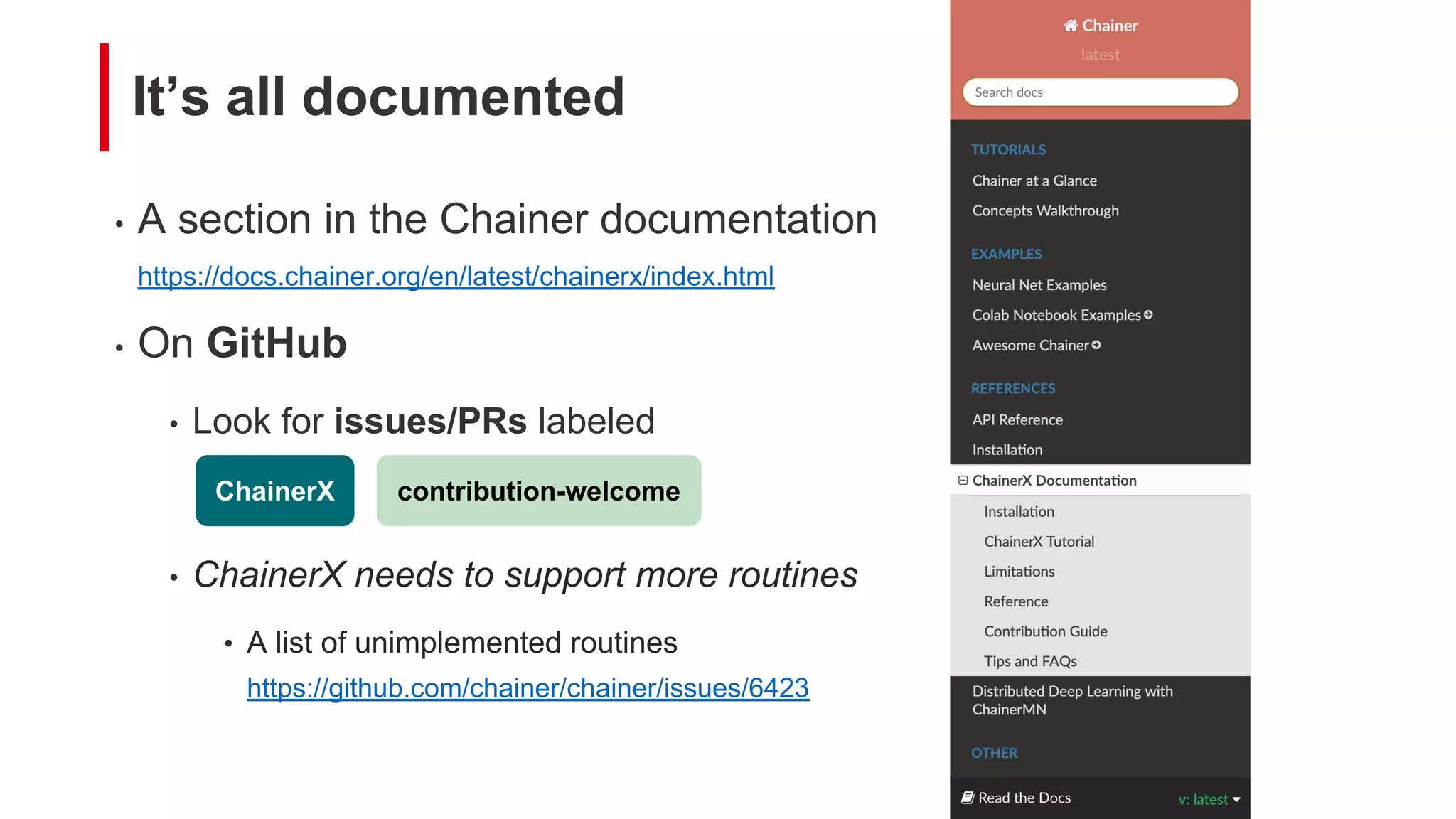

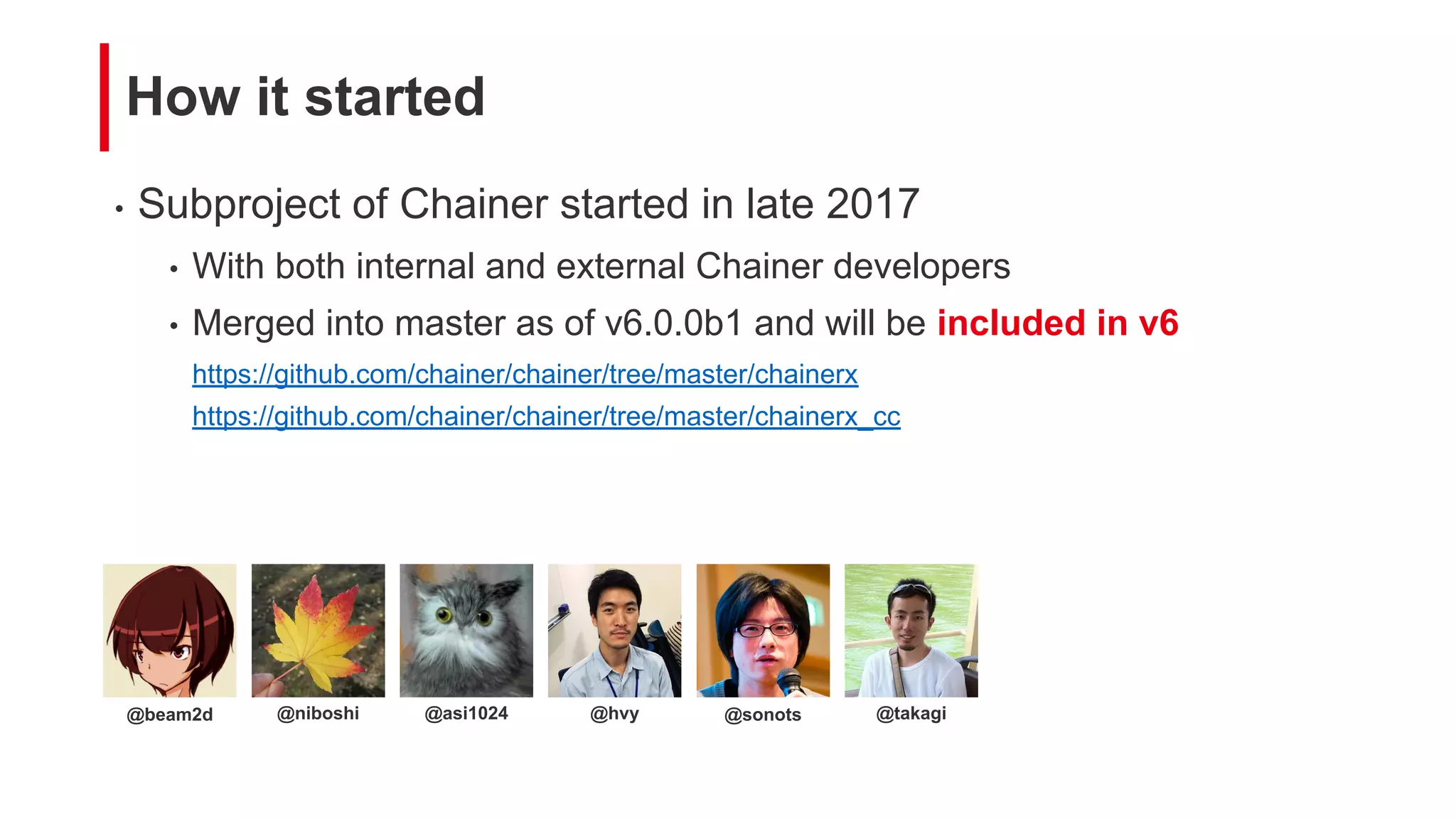

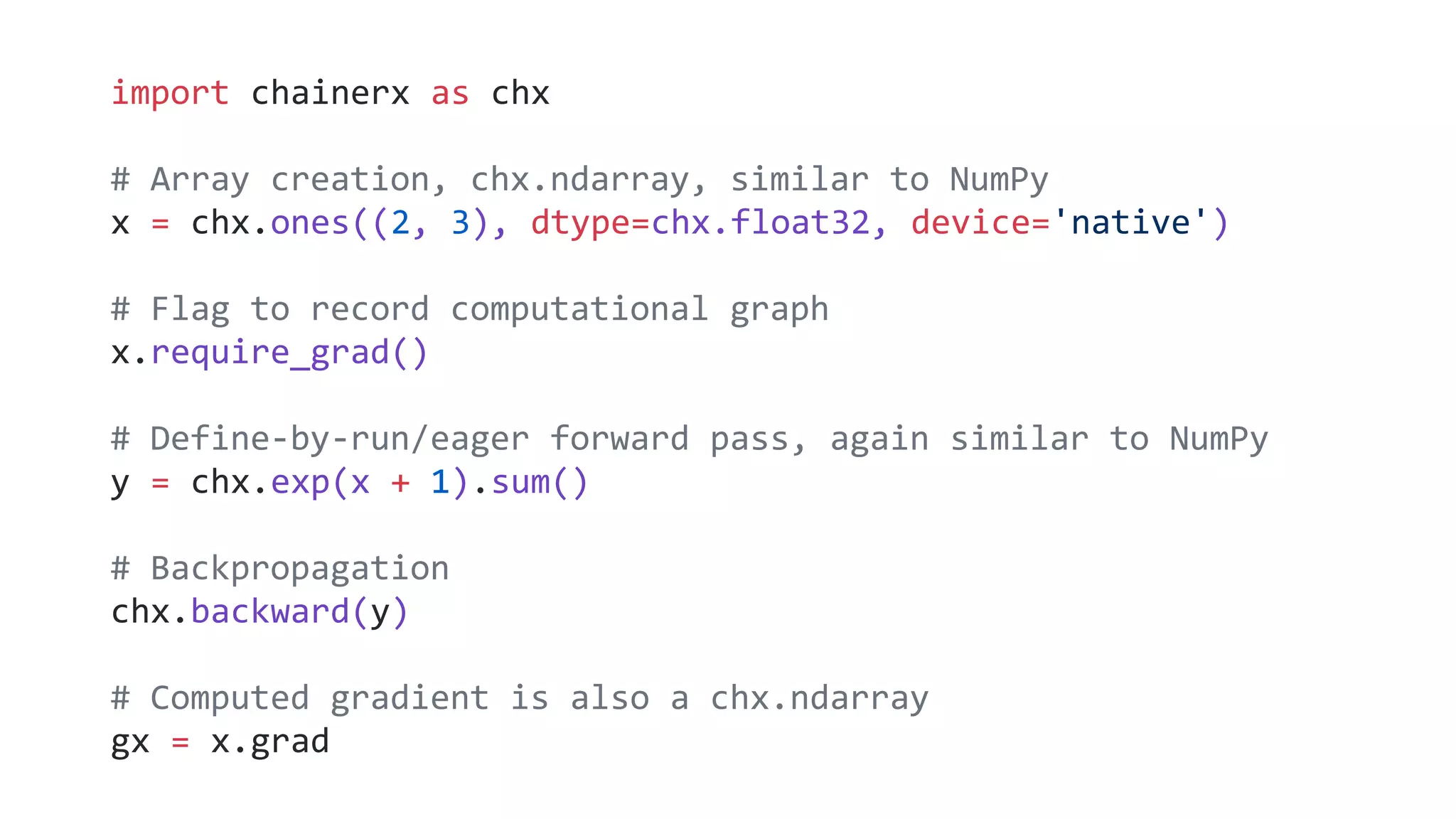

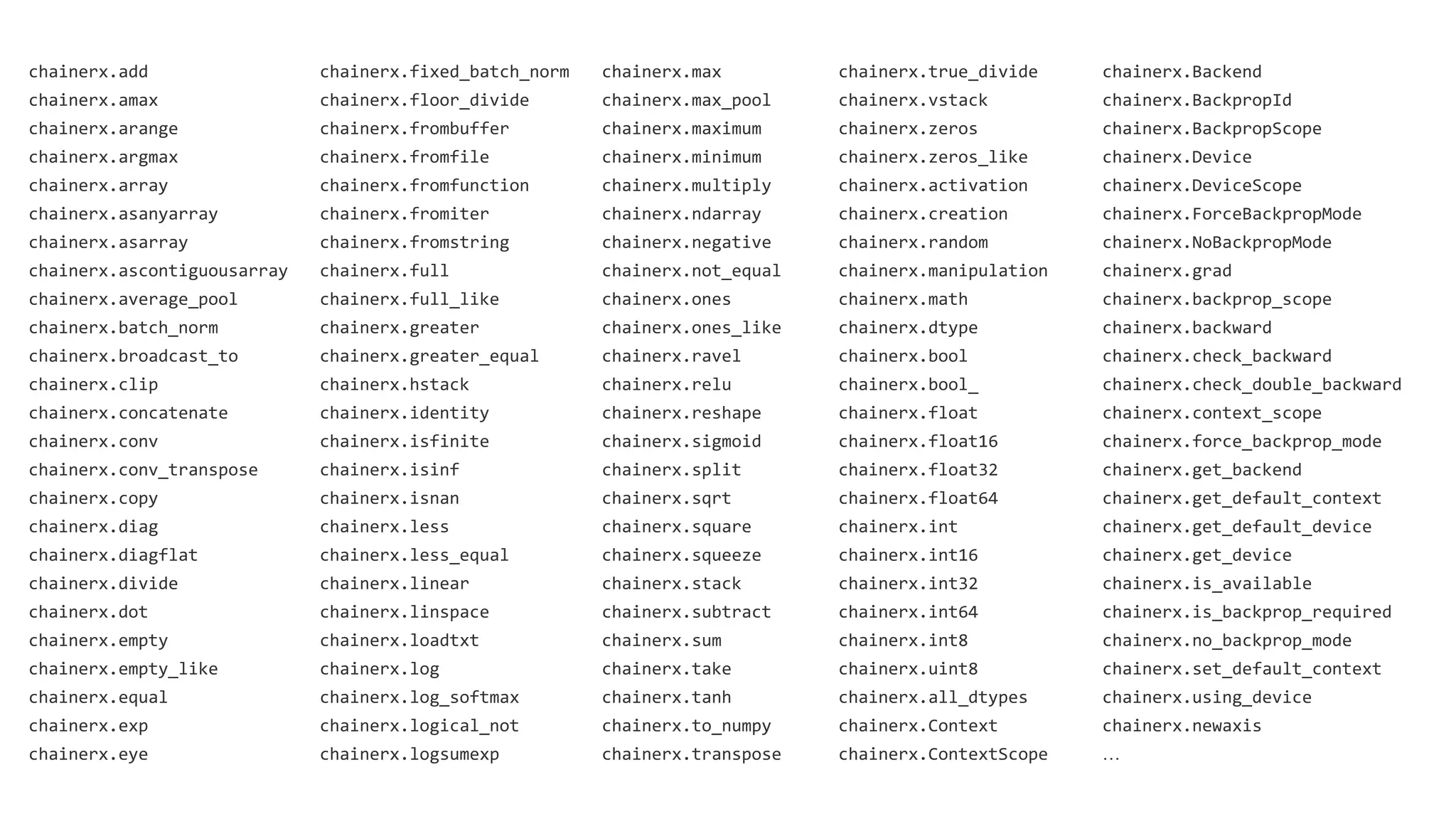

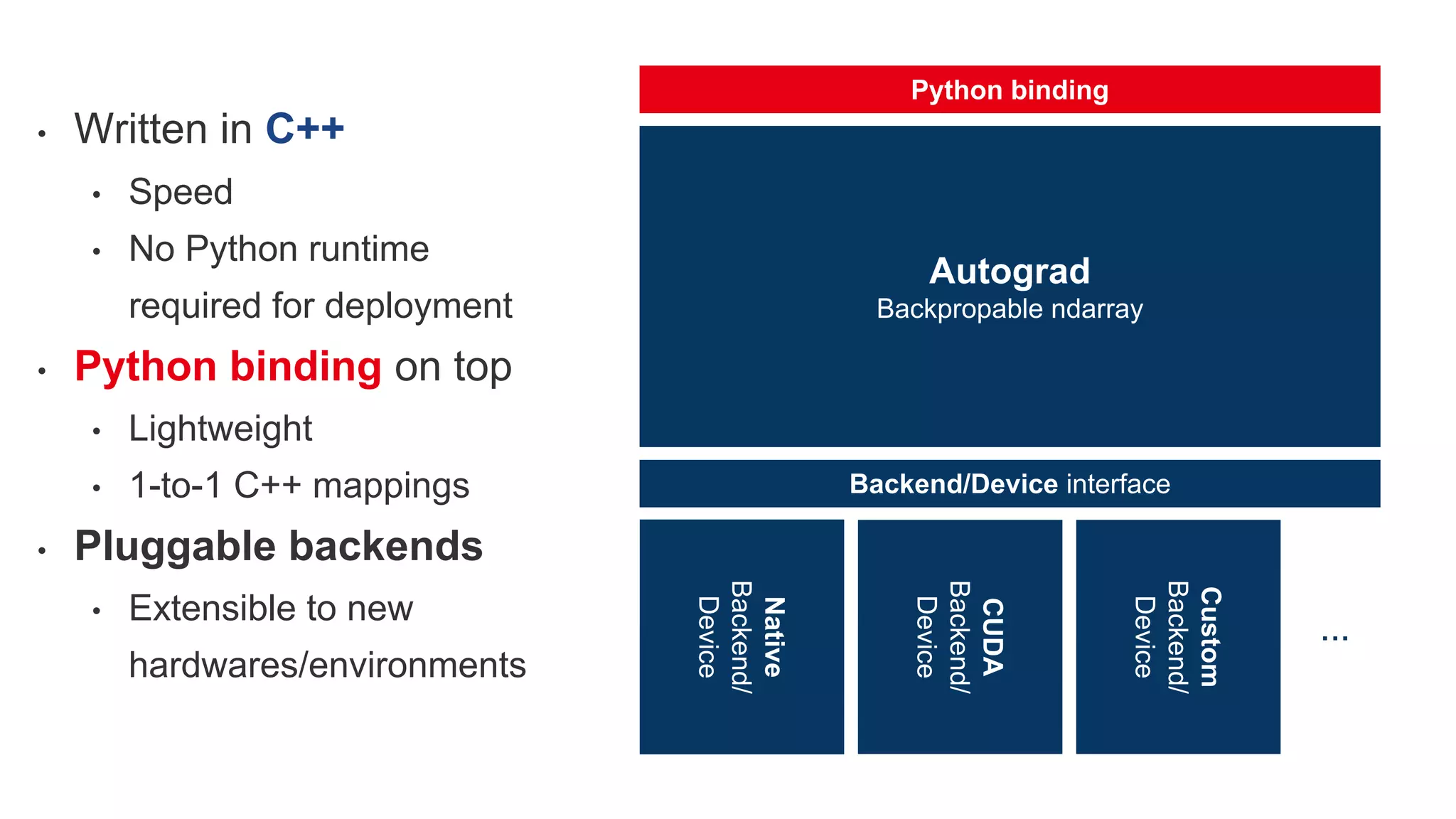

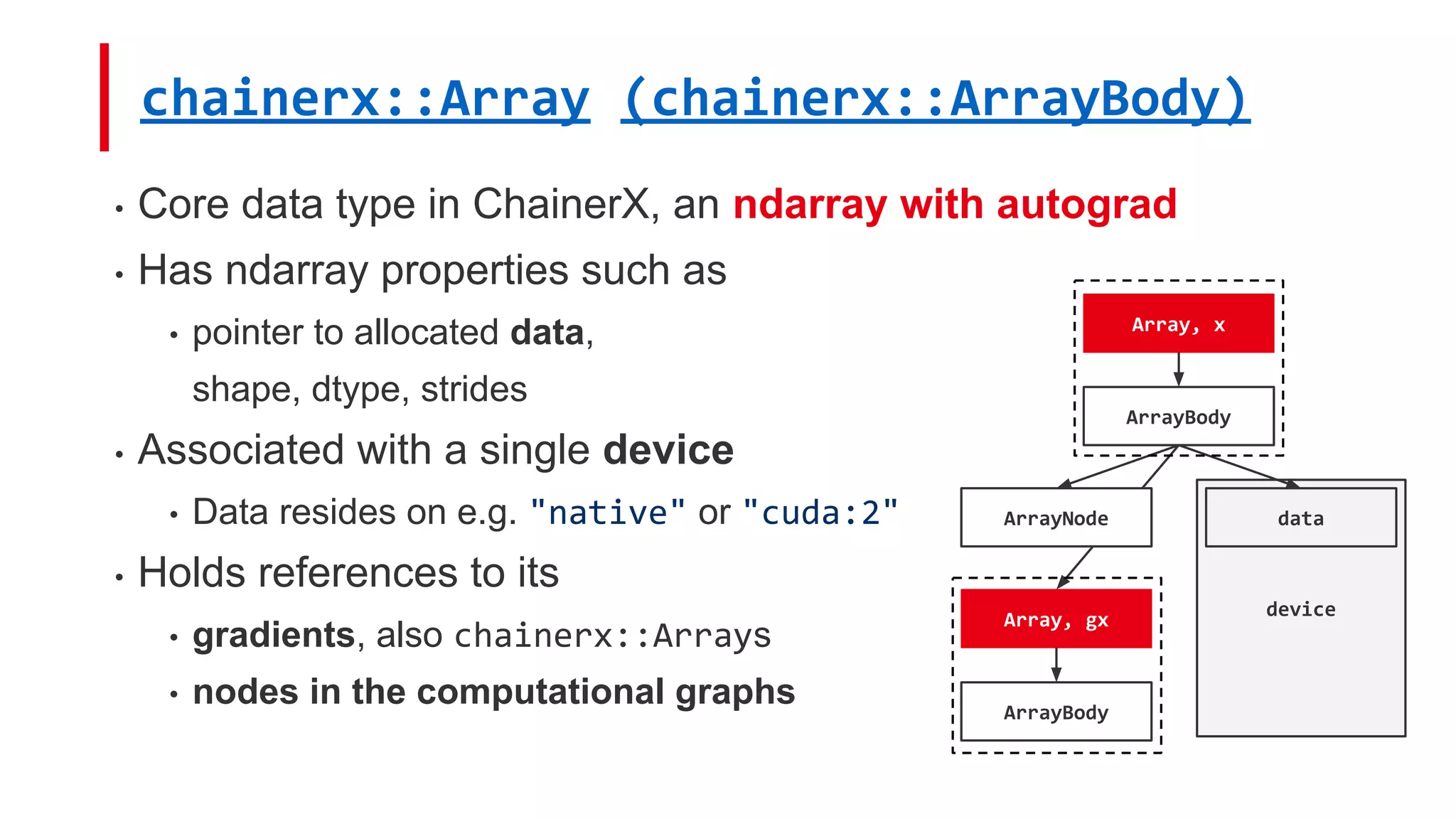

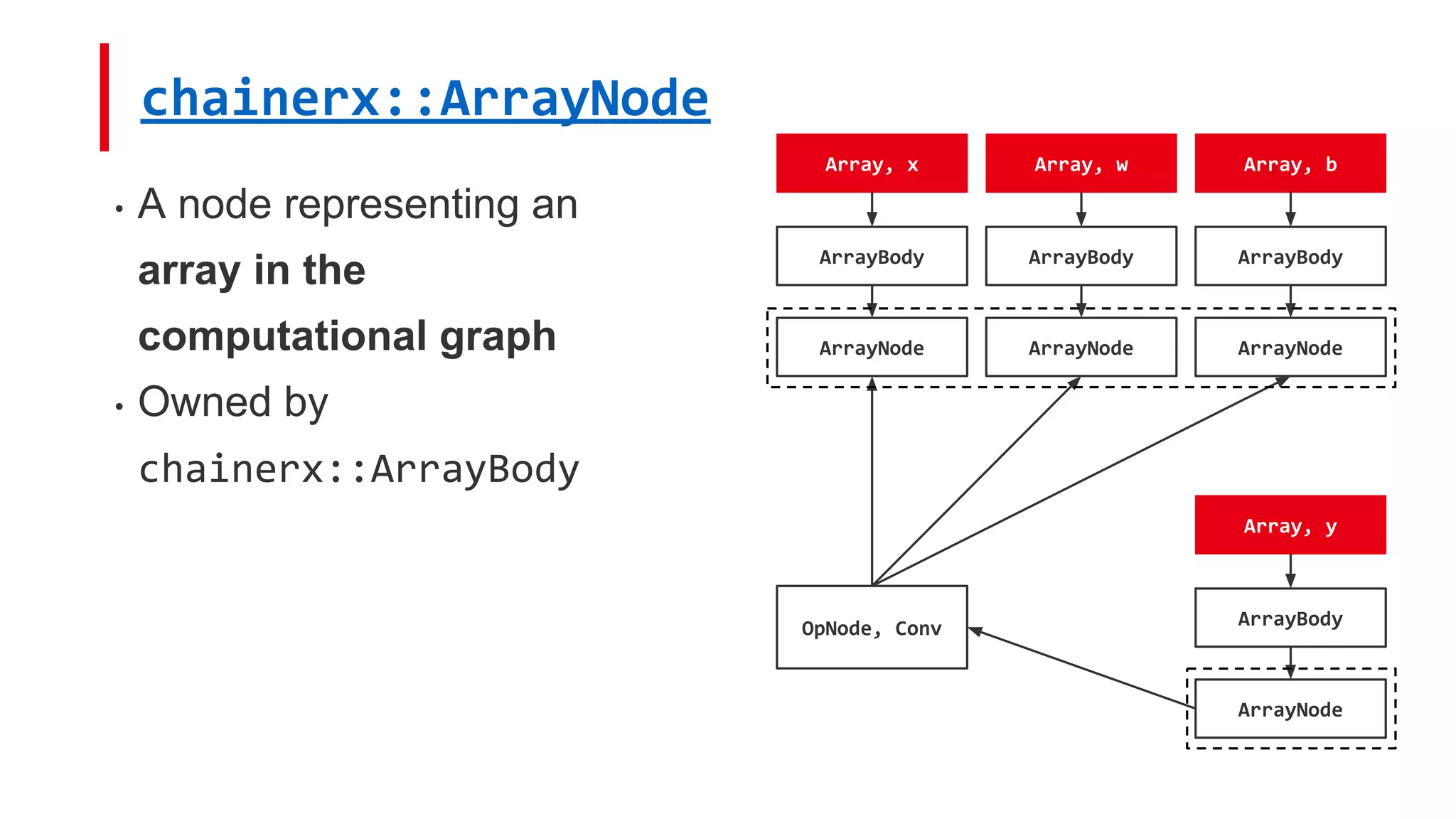

This talk introduces ChainerX, a NumPy-like array library with autograd support, built for deep learning in Chainer. ChainerX is implemented in C++ for speed and to allow Python-free deployment; it exposes a Python interface and supports pluggable backends. The talk explains ChainerX internals such as the Array and Node types and how ChainerX integrates with Chainer. It encourages contributions on GitHub to expand the set of supported operations and backends.

![Routines (2/2)](https://image.slidesharecdn.com/chainerx190330-190330051839/75/ChainerX-and-How-to-Take-Part-22-2048.jpg)

- Defines forward and backward logic using `chainerx::BackwardBuilder`
- Delegates actual computations to the device methods
- `chainerx::Dot` calls `chainerx::Device::Dot`

```cpp
Array Dot(const Array& a, const Array& b, Dtype dtype) {
    int64_t m = a.shape()[0];
    int64_t k = a.shape()[1];
    int64_t n = b.shape()[1];
    Array out = Empty({m, n}, dtype, a.device());

    // Forward: delegate to the device method with backprop mode disabled.
    {
        NoBackpropModeScope scope{};
        a.device().Dot(a, b, out);
    }

    // Backward: define one gradient function per input that requires it.
    {
        BackwardBuilder bb{"dot", {a, b}, out};
        // Gradient w.r.t. a: gout * b^T (retains input b).
        if (BackwardBuilder::Target bt = bb.CreateTarget(0)) {
            bt.Define([b_tok = bb.RetainInput(1), a_dtype = a.dtype()](BackwardContext& bctx) {
                const Array& b = bctx.GetRetainedInput(b_tok);
                bctx.input_grad() = Dot(*bctx.output_grad(), b.Transpose(), a_dtype);
            });
        }
        // Gradient w.r.t. b: a^T * gout (retains input a).
        if (BackwardBuilder::Target bt = bb.CreateTarget(1)) {
            bt.Define([a_tok = bb.RetainInput(0), b_dtype = b.dtype()](BackwardContext& bctx) {
                const Array& a = bctx.GetRetainedInput(a_tok);
                bctx.input_grad() = Dot(a.Transpose(), *bctx.output_grad(), b_dtype);
            });
        }
        bb.Finalize();
    }
    return out;
}
```
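The two backward definitions on this slide encode the usual matrix-product gradients: for `out = a · b` with upstream gradient `gout`, the gradient with respect to `a` is `gout · bᵀ` and the gradient with respect to `b` is `aᵀ · gout`. The sketch below checks those two formulas against central finite differences. It is a standalone illustration and does not use ChainerX: `Matrix`, `MakeMatrix`, `Loss`, `GradA`, `GradB`, `NumericalGrad`, `MaxAbsDiff`, and `CheckDotGradients` are hypothetical names introduced here, not part of any library.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Minimal row-major matrix; a hypothetical stand-in for chainerx::Array.
struct Matrix {
    std::size_t rows;
    std::size_t cols;
    std::vector<double> data;
    Matrix(std::size_t r, std::size_t c) : rows(r), cols(c), data(r * c, 0.0) {}
    double& at(std::size_t i, std::size_t j) { return data[i * cols + j]; }
    double at(std::size_t i, std::size_t j) const { return data[i * cols + j]; }
};

// Deterministic test data: start, start + 0.25, start + 0.5, ...
Matrix MakeMatrix(std::size_t rows, std::size_t cols, double start) {
    Matrix m(rows, cols);
    for (std::size_t i = 0; i < m.data.size(); ++i) m.data[i] = start + 0.25 * static_cast<double>(i);
    return m;
}

// Plain (m x k) * (k x n) matrix product.
Matrix Dot(const Matrix& a, const Matrix& b) {
    Matrix out(a.rows, b.cols);
    for (std::size_t i = 0; i < a.rows; ++i)
        for (std::size_t j = 0; j < b.cols; ++j)
            for (std::size_t p = 0; p < a.cols; ++p) out.at(i, j) += a.at(i, p) * b.at(p, j);
    return out;
}

Matrix Transpose(const Matrix& a) {
    Matrix t(a.cols, a.rows);
    for (std::size_t i = 0; i < a.rows; ++i)
        for (std::size_t j = 0; j < a.cols; ++j) t.at(j, i) = a.at(i, j);
    return t;
}

// Scalar loss L = sum_ij gout_ij * Dot(a, b)_ij, chosen so that dL/dout == gout.
double Loss(const Matrix& a, const Matrix& b, const Matrix& gout) {
    const Matrix out = Dot(a, b);
    double sum = 0.0;
    for (std::size_t i = 0; i < out.data.size(); ++i) sum += gout.data[i] * out.data[i];
    return sum;
}

// The same formulas as the two backward lambdas on the slide.
Matrix GradA(const Matrix& gout, const Matrix& b) { return Dot(gout, Transpose(b)); }
Matrix GradB(const Matrix& a, const Matrix& gout) { return Dot(Transpose(a), gout); }

// Central finite differences w.r.t. a (which == 0) or b (which == 1).
// a and b are taken by value so the perturbation never touches the caller's data.
Matrix NumericalGrad(Matrix a, Matrix b, const Matrix& gout, int which, double eps = 1e-6) {
    Matrix& x = (which == 0) ? a : b;
    Matrix grad(x.rows, x.cols);
    for (std::size_t i = 0; i < x.data.size(); ++i) {
        const double saved = x.data[i];
        x.data[i] = saved + eps;
        const double plus = Loss(a, b, gout);
        x.data[i] = saved - eps;
        const double minus = Loss(a, b, gout);
        x.data[i] = saved;
        grad.data[i] = (plus - minus) / (2.0 * eps);
    }
    return grad;
}

double MaxAbsDiff(const Matrix& x, const Matrix& y) {
    double worst = 0.0;
    for (std::size_t i = 0; i < x.data.size(); ++i) worst = std::max(worst, std::fabs(x.data[i] - y.data[i]));
    return worst;
}

// Asserts that the analytic gradients match the numerical ones on a small example.
void CheckDotGradients() {
    const Matrix a = MakeMatrix(2, 3, 0.1);
    const Matrix b = MakeMatrix(3, 4, -0.3);
    const Matrix gout = MakeMatrix(2, 4, 0.2);
    assert(MaxAbsDiff(GradA(gout, b), NumericalGrad(a, b, gout, 0)) < 1e-6);
    assert(MaxAbsDiff(GradB(a, gout), NumericalGrad(a, b, gout, 1)) < 1e-6);
}
```

Calling `CheckDotGradients()` from a `main` function runs the comparison. Because the loss is linear in each input separately, the central difference is exact up to rounding error, which is why a tight tolerance is reasonable here.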