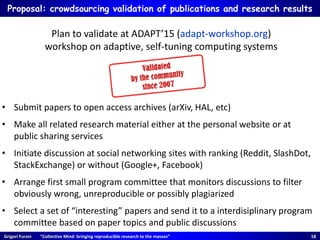

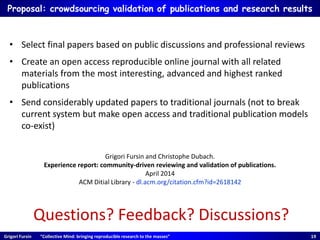

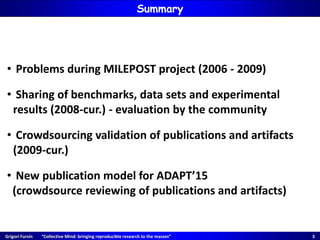

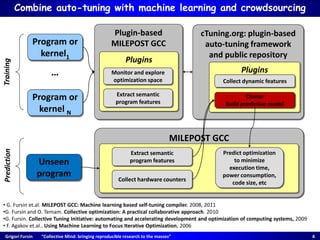

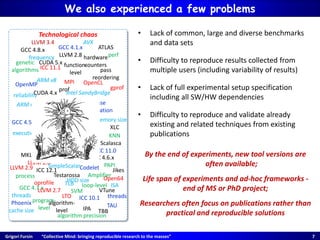

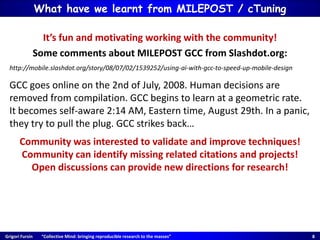

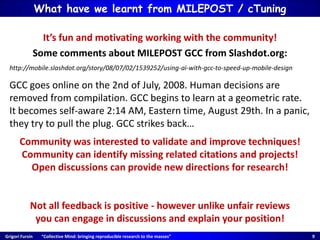

This presentation discusses the challenges of reproducible research and the validation of publications in computing and optimization, drawing on the MILEPOST project and the cTuning framework. It stresses the importance of sharing complete experimental setups and data, and proposes a crowdsourced model for validating research results. The authors advocate a collaborative approach that combines community feedback, open access, and machine learning to make research more reproducible and efficient.

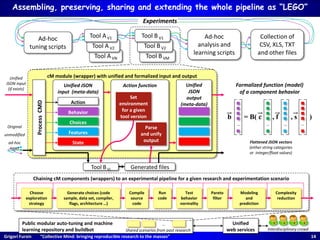

![Grigori Fursin “Collective Mind: bringing reproducible research to the masses” 11

cM module (wrapper) with unified and formalized input and output

ProcessCMD

Tool BVi Generated files

Original

unmodified

ad-hoc

input

Behavior

Choices

Features

State

Wrappers around code and data (linked together)

Tool BVM

Tool BV2

Tool AVN

Tool AV2

Tool AV1 Tool BV1 Ad-hoc

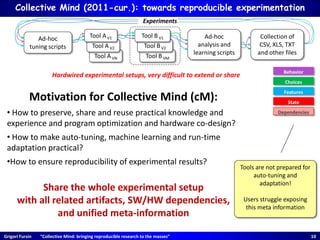

analysis and

learning scripts

Ad-hoc

tuning scripts

Collection of

CSV, XLS, TXT

and other files

Experiments

cm [module name] [action] (param1=value1 param2=value2 … -- unparsed command line)

cm compiler build -- icc -fast *.c

cm code.source build ct_compiler=icc13 ct_optimizations=-fast

cm code run os=android binary=./a.out dataset=image-crazy-scientist.pgm

Should be able to run on any OS (Windows, Linux, Android, MacOS, etc)!

Dependencies](https://image.slidesharecdn.com/panelatacmsigplantrust2014-140616050524-phpapp01/85/Panel-at-acm_sigplan_trust2014-11-320.jpg)
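The command-line format on this slide can be illustrated with a small parser. This is a minimal sketch, not the cM implementation: the function name `parse_cm_command` and the shape of the returned dictionary are assumptions made here for illustration. It only shows how a module name, an action, `key=value` parameters and an unparsed tail after `--` could be separated.

```python
def parse_cm_command(argv):
    """Split a cm-style argument list into its parts.

    Hypothetical helper: cM's real parser may behave differently.
    Expected shape: <module> <action> [key=value ...] [-- raw command ...]
    """
    if len(argv) < 2:
        raise ValueError("expected: <module> <action> [key=value ...] [-- raw command]")

    module, action, rest = argv[0], argv[1], list(argv[2:])

    # Everything after the first "--" is kept verbatim for the wrapped tool.
    if "--" in rest:
        split = rest.index("--")
        rest, unparsed = rest[:split], rest[split + 1:]
    else:
        unparsed = []

    params = {}
    for token in rest:
        # Split on the first "=" only, so values such as "-fast" or "a=b" survive.
        key, sep, value = token.partition("=")
        if not sep or not key:
            raise ValueError(f"expected key=value, got {token!r}")
        params[key] = value

    return {"module": module, "action": action, "params": params, "unparsed": unparsed}


if __name__ == "__main__":
    print(parse_cm_command("code.source build ct_compiler=icc13 ct_optimizations=-fast".split()))
    print(parse_cm_command("compiler build -- icc -fast *.c".split()))
```

Keeping the tail after `--` untouched matches the idea on the slide that the original ad-hoc tool invocation is passed through unmodified, while the `key=value` part becomes structured input.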

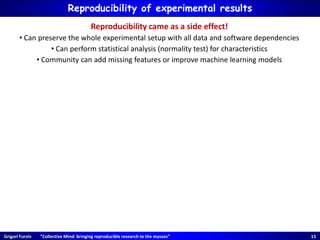

![Slide 12: cM module (wrapper) with unified and formalized input and output](https://image.slidesharecdn.com/panelatacmsigplantrust2014-140616050524-phpapp01/85/Panel-at-acm_sigplan_trust2014-12-320.jpg)

Slide 12

cM module (wrapper) with unified and formalized input and output

Exposing meta information in a unified way

Diagram labels (the surrounding tools, scripts, files and `cm` command-line examples repeat slide 11):

- Input: unified JSON input (meta-data); unified JSON input (if exists); original unmodified ad-hoc input
- cM module around Tool B Vi: action; action function; ProcessCMD; parse and unify output
- Output: generated files; unified JSON output (meta-data)
- Exposed information: behavior, choices, features, state

Formalized function (model) of a component behavior:

$$b = B(c, f, s)$$

Flattened JSON vectors (either string categories or integer/float values)

Should be able to run on any OS (Windows, Linux, Android, MacOS, etc)!
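The "flattened JSON vectors" mentioned on this slide can be illustrated as follows. This is a sketch under stated assumptions: the function name `flatten` and the key scheme (`#` before dictionary keys, `@` before list indices) are chosen here for illustration and are not taken from the slides. The point is only that nested meta-data can be turned into a flat mapping whose values are strings or numbers, which is the form a model such as b = B(c, f, s) could consume.

```python
import json


def flatten(obj, prefix=""):
    """Flatten nested dicts and lists into a single-level dict.

    Hypothetical key scheme: "#key" for dict entries, "@index" for list items.
    Leaf values (strings, integers, floats) are kept unchanged.
    """
    flat = {}
    if isinstance(obj, dict):
        for key, value in obj.items():
            flat.update(flatten(value, f"{prefix}#{key}"))
    elif isinstance(obj, list):
        for index, value in enumerate(obj):
            flat.update(flatten(value, f"{prefix}@{index}"))
    else:
        flat[prefix] = obj
    return flat


if __name__ == "__main__":
    # Illustrative meta-data only; the field names are made up for this example.
    meta = json.loads("""
    {
      "choices":  {"compiler": "icc13", "flags": ["-fast"]},
      "features": {"dataset": "image-crazy-scientist.pgm"},
      "behavior": {"time_s": 1.5}
    }
    """)
    for key, value in flatten(meta).items():
        print(key, "=", value)
```

Because every leaf ends up under a unique string key, records from different tools can be compared or fed to learning scripts without each script knowing the original nesting.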

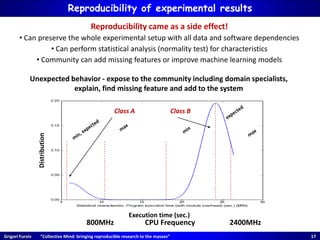

![Slide 13: cM module (wrapper) with unified and formalized input and output](https://image.slidesharecdn.com/panelatacmsigplantrust2014-140616050524-phpapp01/85/Panel-at-acm_sigplan_trust2014-13-320.jpg)

Slide 13

cM module (wrapper) with unified and formalized input and output

Adding SW/HW dependencies check

Diagram labels (the surrounding tools, scripts, files and `cm` command-line examples repeat slide 11):

- Input: unified JSON input (meta-data); unified JSON input (if exists); original unmodified ad-hoc input
- cM module around Tool B Vi: action; action function; set environment for a given tool version; ProcessCMD; parse and unify output
- Output: generated files; unified JSON output (meta-data)
- Exposed information: behavior, choices, features, state

Multiple tool versions can co-exist, while their interface is abstracted by cM module.

Formalized function (model) of a component behavior:

$$b = B(c, f, s)$$

Flattened JSON vectors (either string categories or integer/float values)

Should be able to run on any OS (Windows, Linux, Android, MacOS, etc)!
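The idea of setting the environment for a given tool version, with a dependency check before the tool runs, can be sketched as below. Everything named here is hypothetical: the registry `TOOL_ENVIRONMENTS`, the variable `CM_TOOL_VERSION`, the function `run_tool` and the keys of the returned dictionary are illustrative assumptions, not part of cM. The sketch looks up the environment registered for a (tool, version) pair, refuses to run when none is registered, and returns a unified, JSON-serializable result.

```python
import os
import subprocess
import sys

# Hypothetical registry: environment variables per (tool, version) pair.
# Real entries would point at different installations of the same tool.
TOOL_ENVIRONMENTS = {
    ("python", "current"): {"CM_TOOL_VERSION": "current"},
}


def run_tool(tool, version, command, registry=TOOL_ENVIRONMENTS):
    """Run `command` with the environment registered for one tool version.

    Returns a plain dict so the result can be serialized as JSON.
    """
    key = (tool, version)
    if key not in registry:
        # Crude dependency check: an unregistered version is reported, not run.
        return {"return": 1, "error": f"no environment registered for {tool} {version}"}

    env = dict(os.environ)      # start from the caller's environment
    env.update(registry[key])   # overlay the version-specific settings

    completed = subprocess.run(command, env=env, capture_output=True, text=True)
    return {
        "return": completed.returncode,
        "tool": tool,
        "version": version,
        "stdout": completed.stdout,
        "stderr": completed.stderr,
    }


if __name__ == "__main__":
    probe = [sys.executable, "-c", "import os; print(os.environ['CM_TOOL_VERSION'])"]
    print(run_tool("python", "current", probe))
    print(run_tool("icc", "13", ["icc", "--version"]))
```

Because callers only pass a tool name and a version, several versions can be registered side by side without changing the calling code, which is the abstraction the slide describes.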