OpenStack Super Bootcamp, Mirantis, 2012

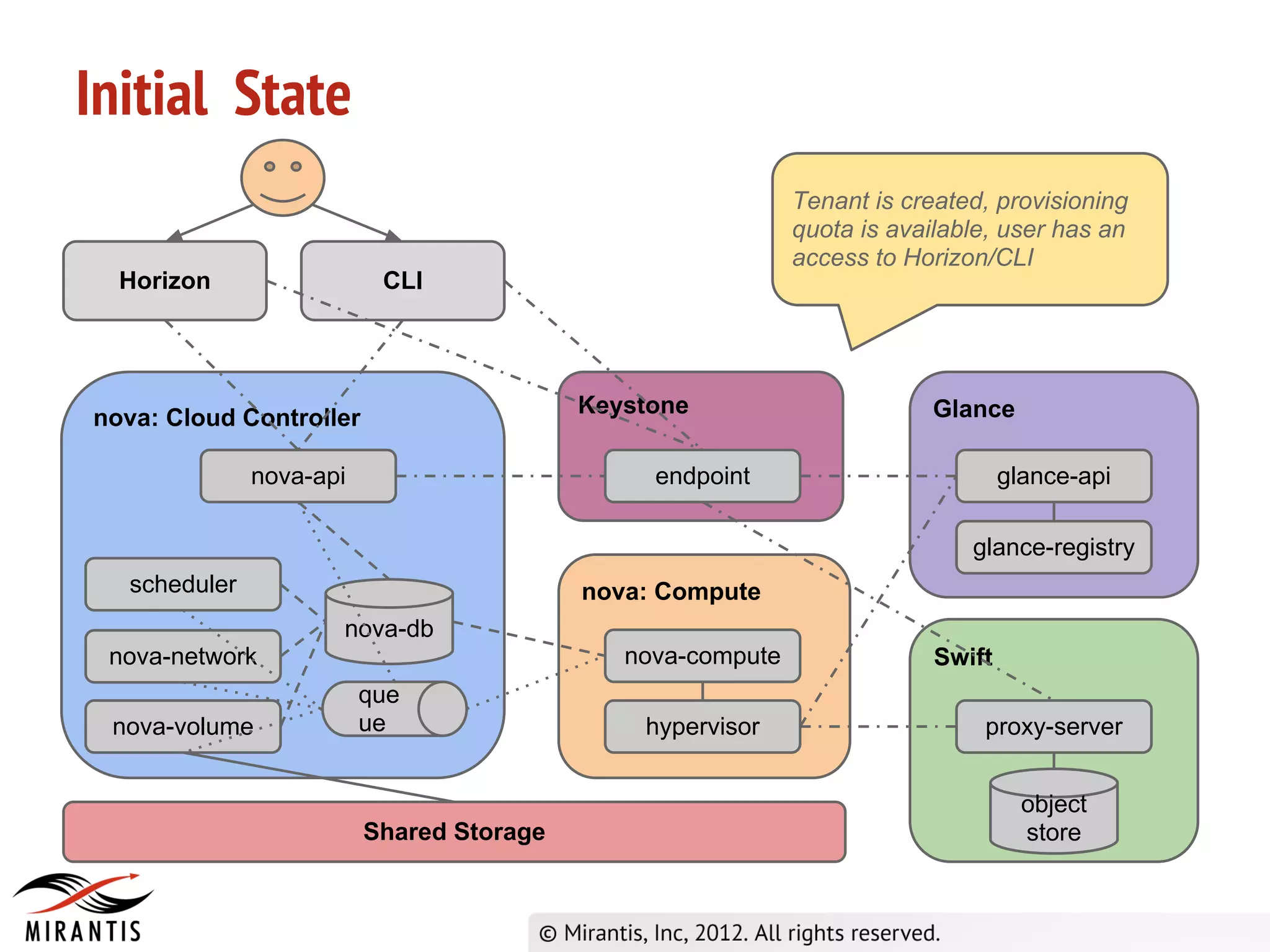

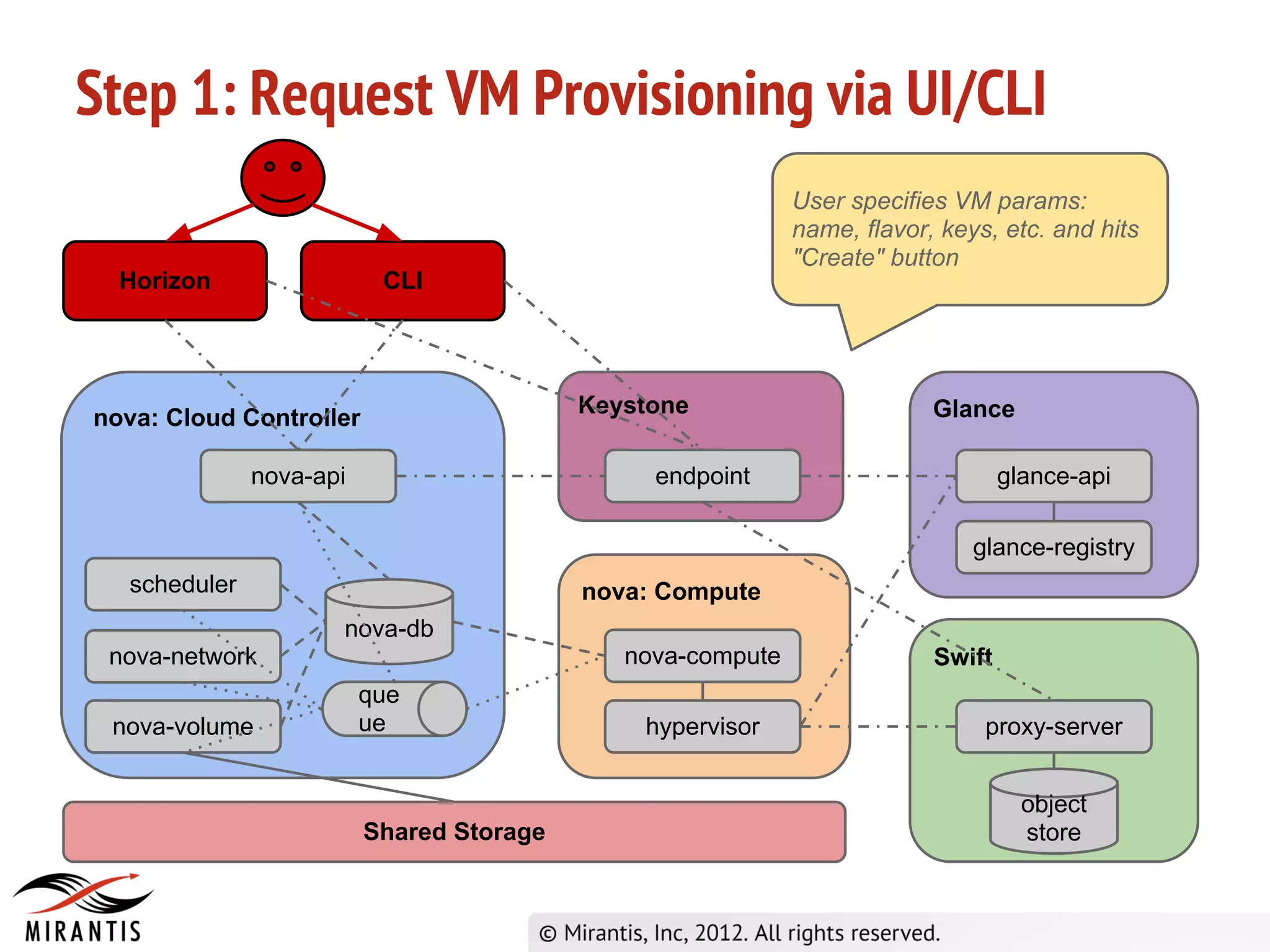

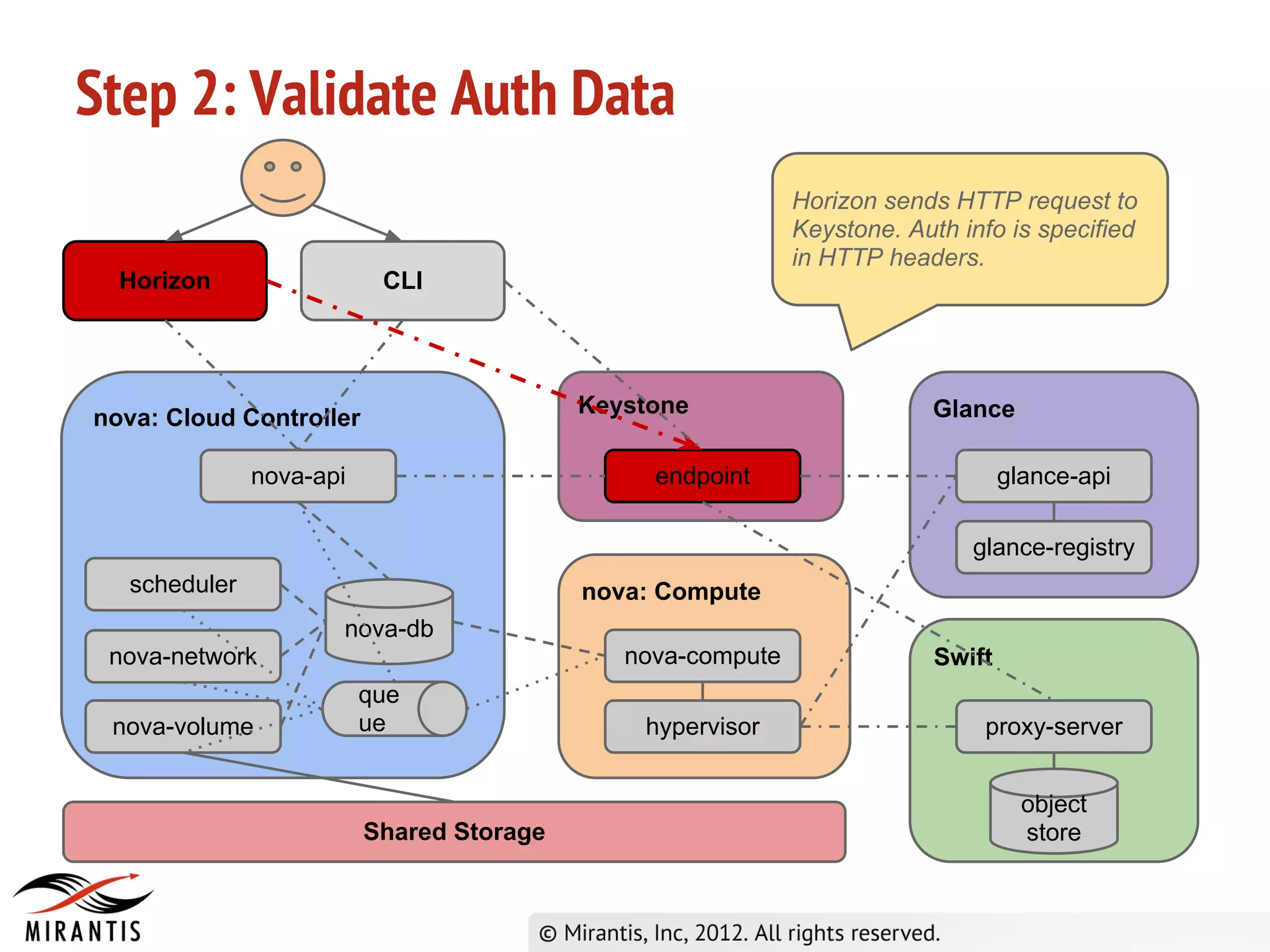

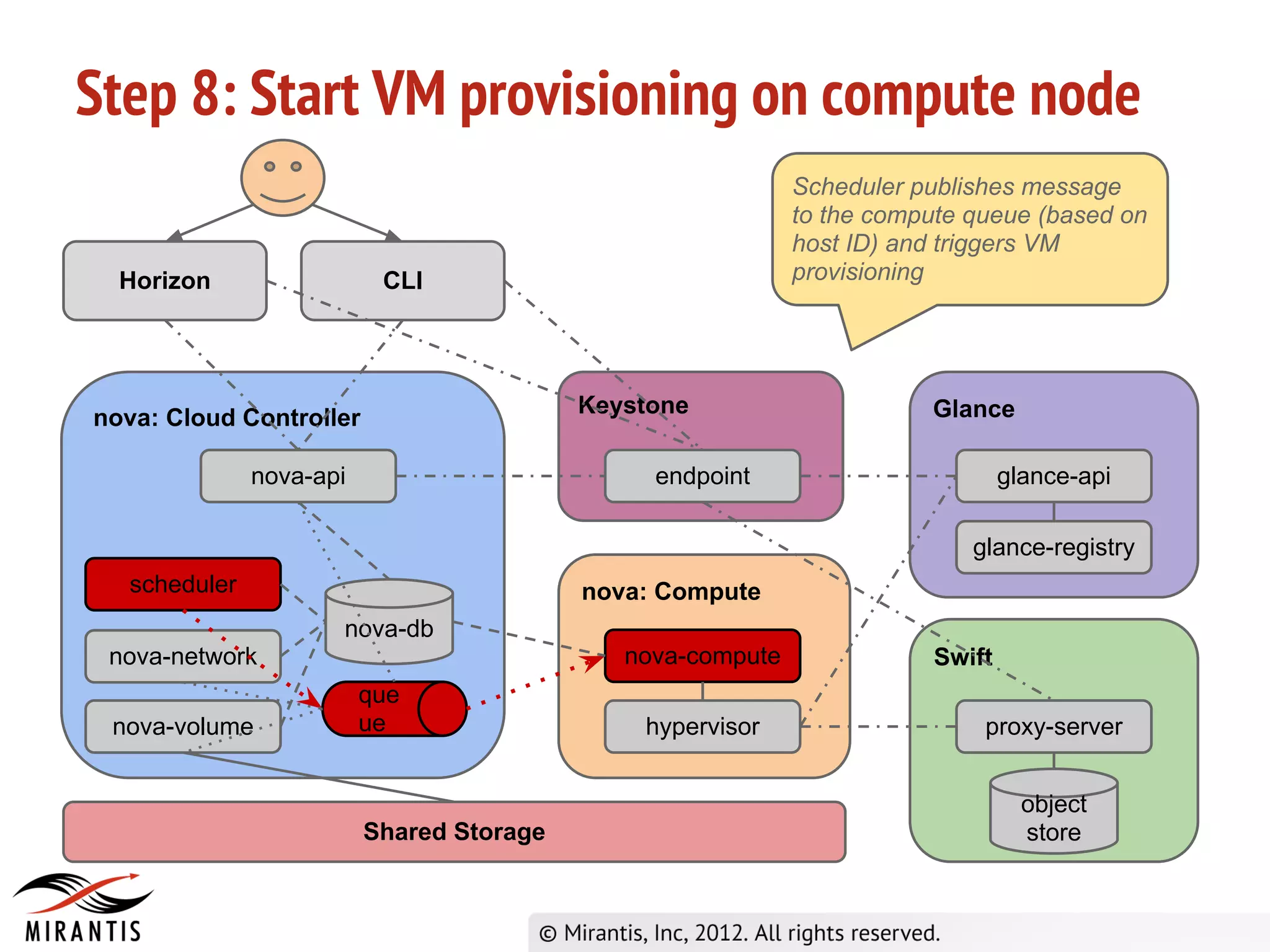

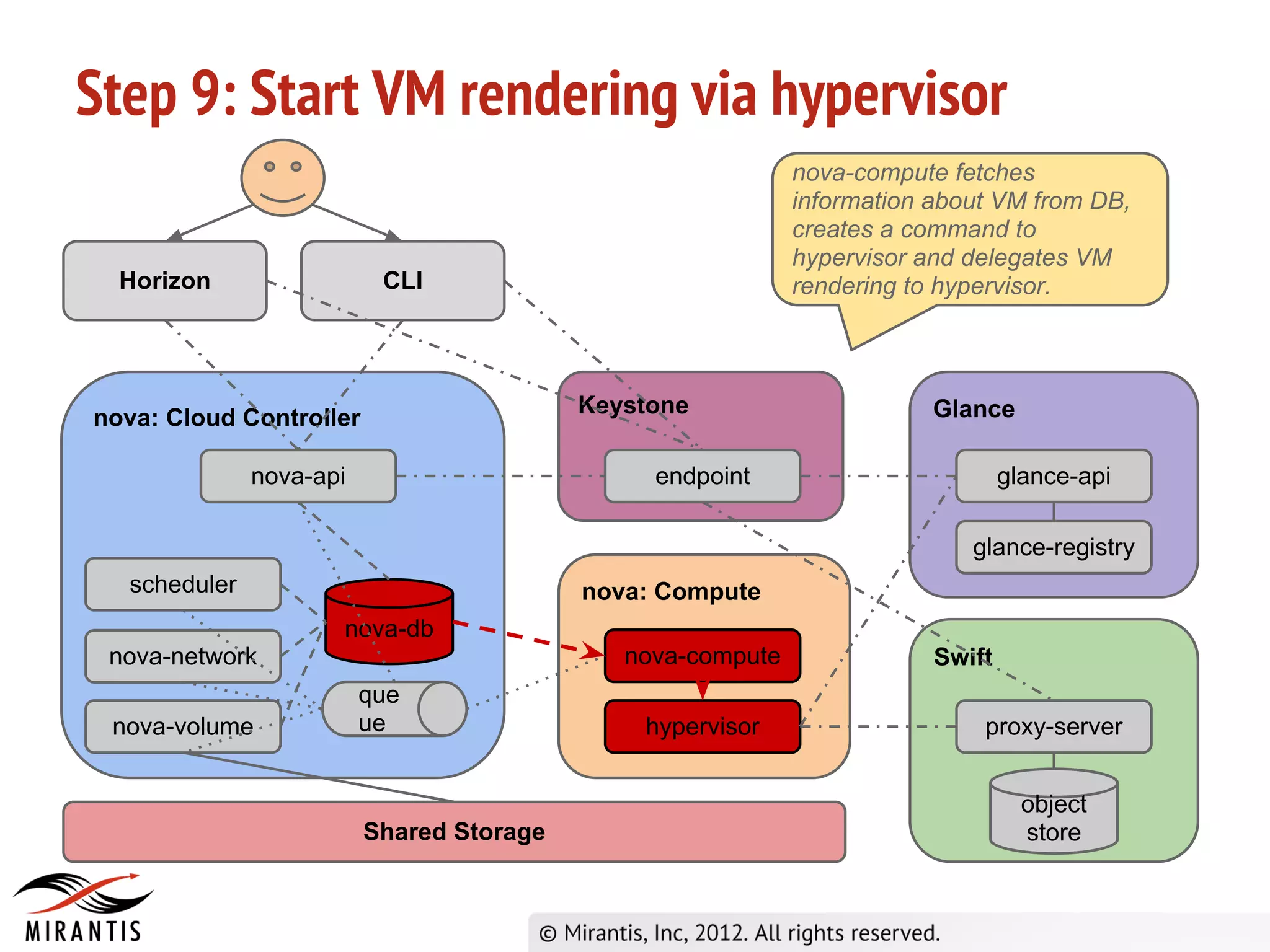

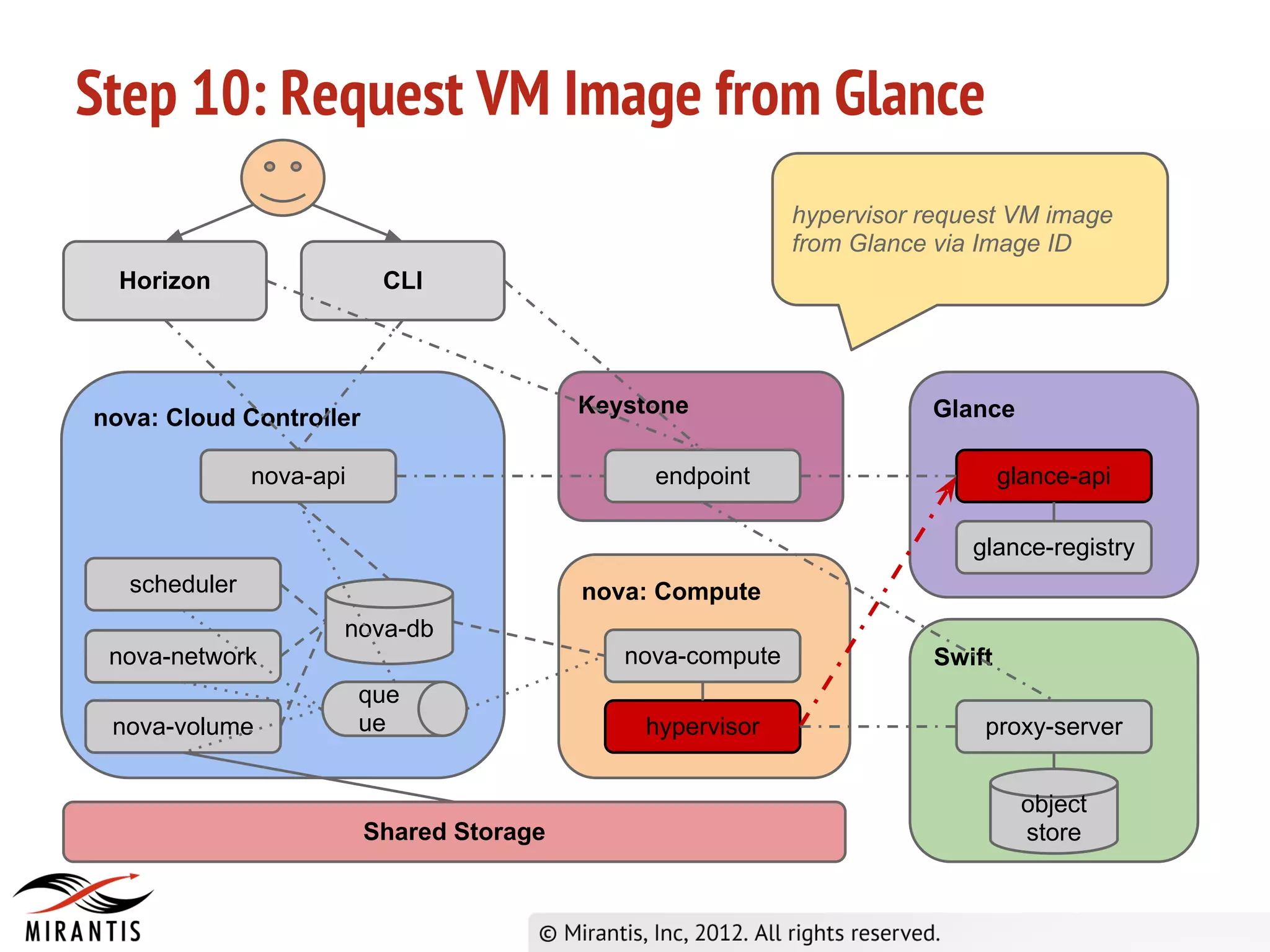

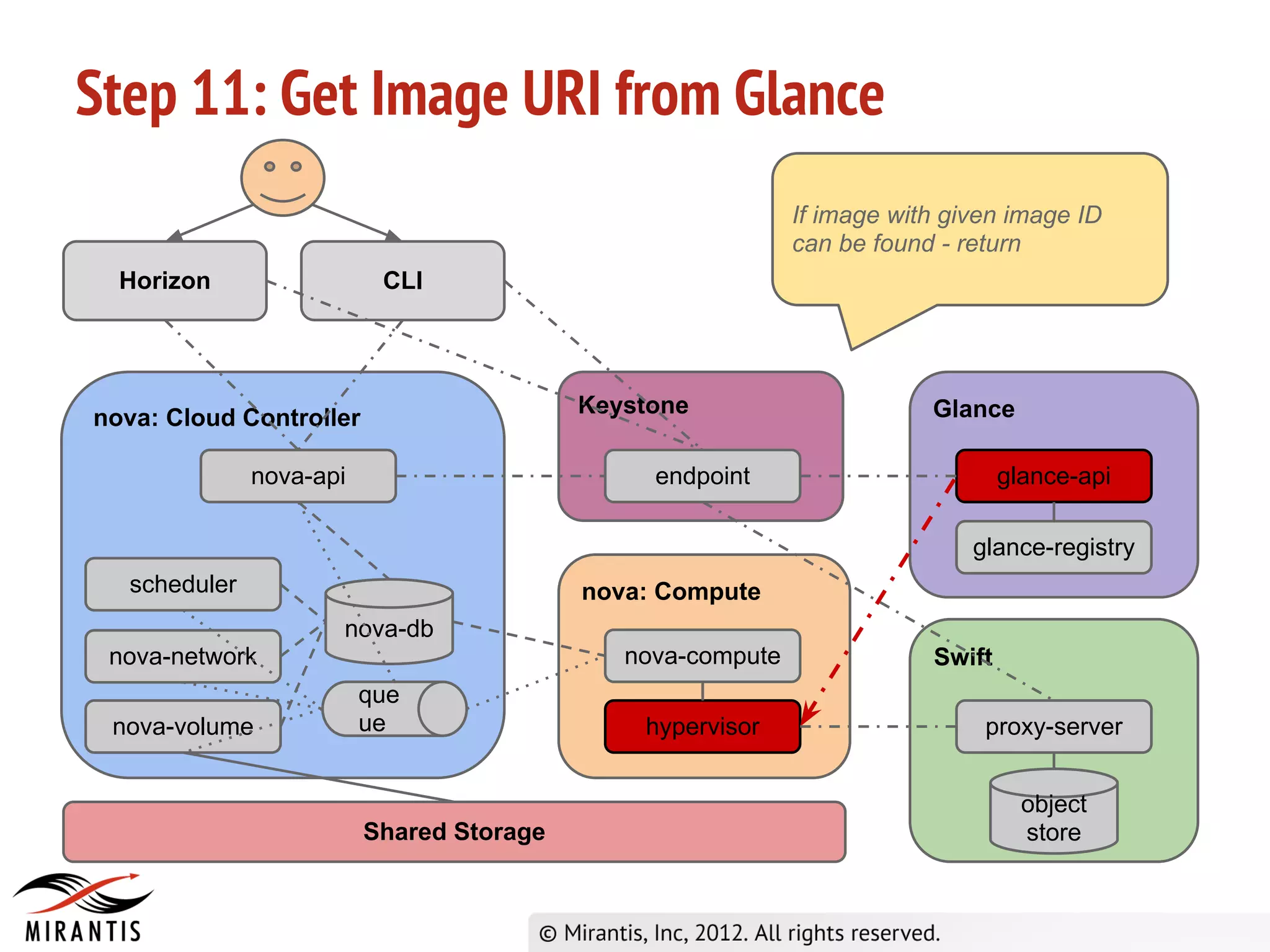

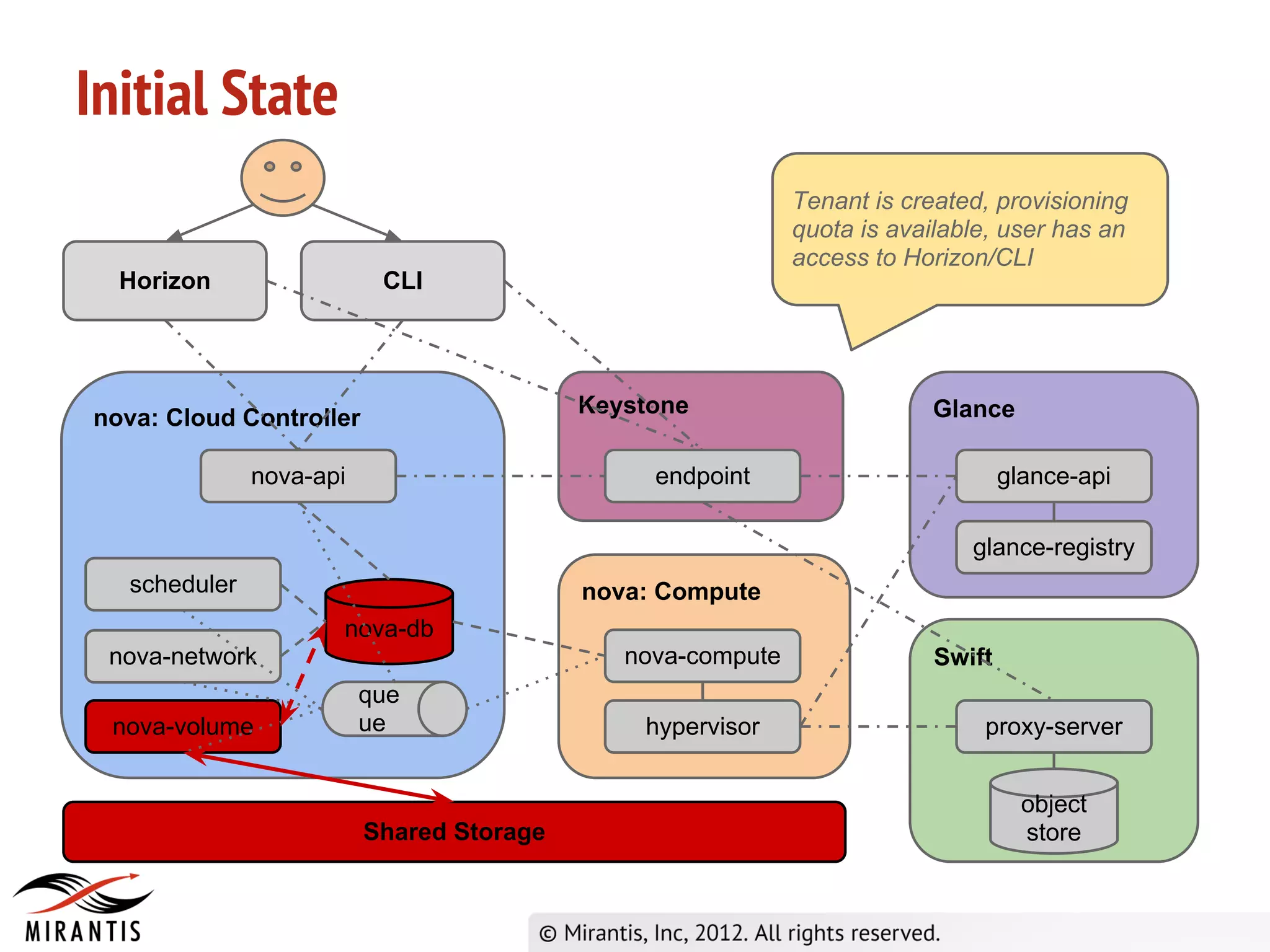

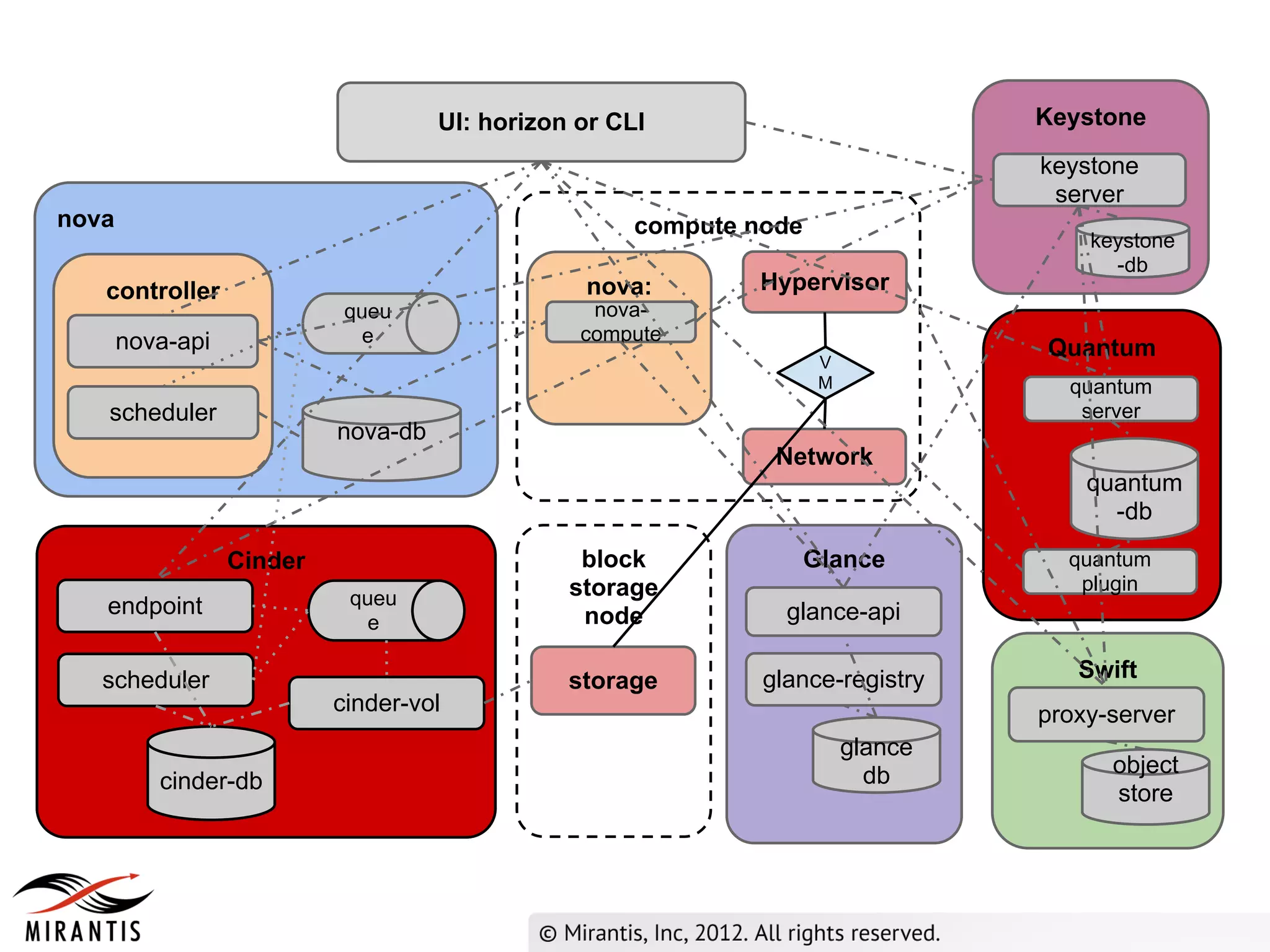

The document outlines the steps in the OpenStack provisioning process:

1) A user requests VM provisioning via the Horizon dashboard or the CLI, which sends the user's credentials to Keystone for authentication.

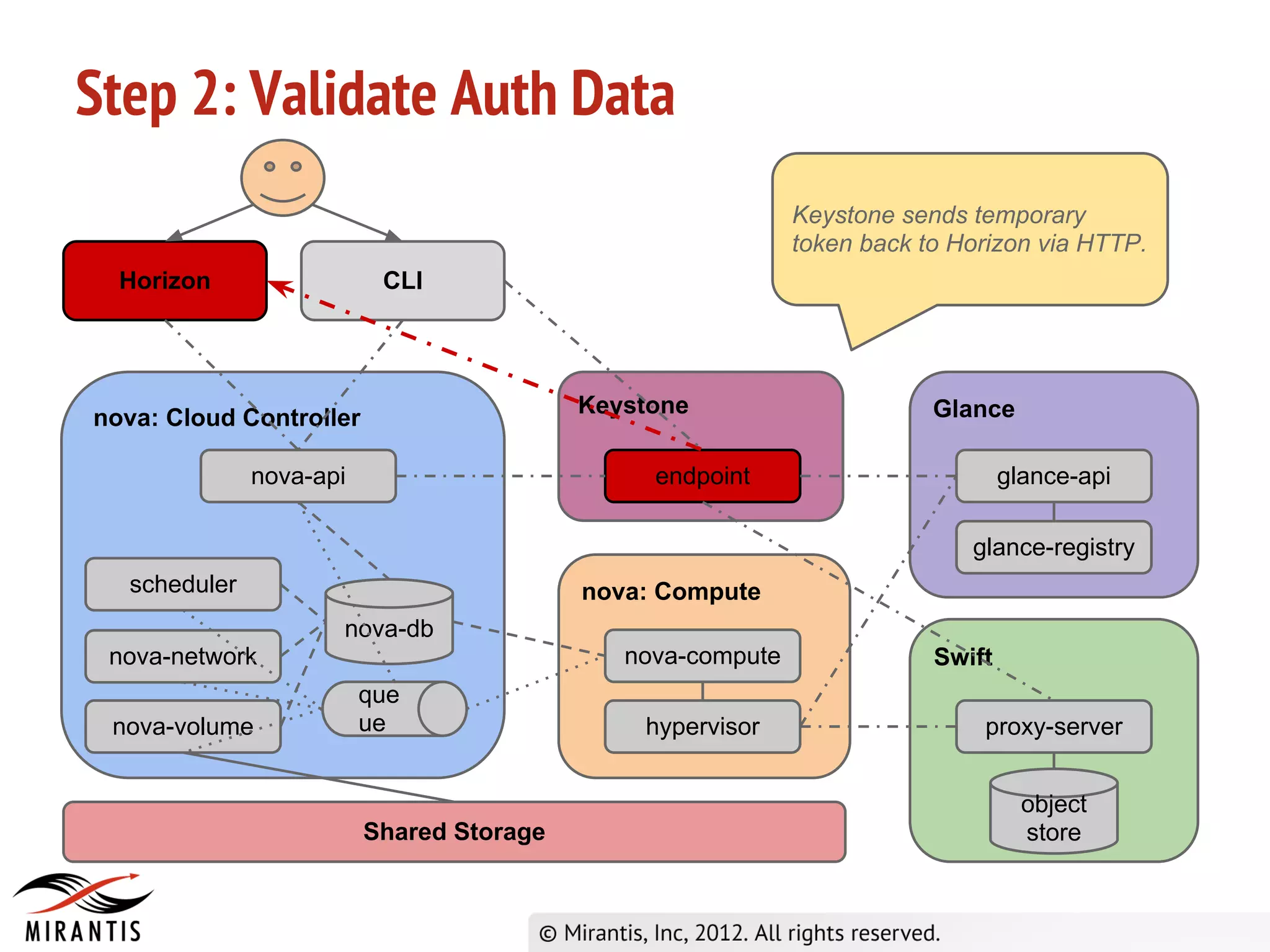

2) Keystone validates the authentication data and provides a temporary token to Horizon.

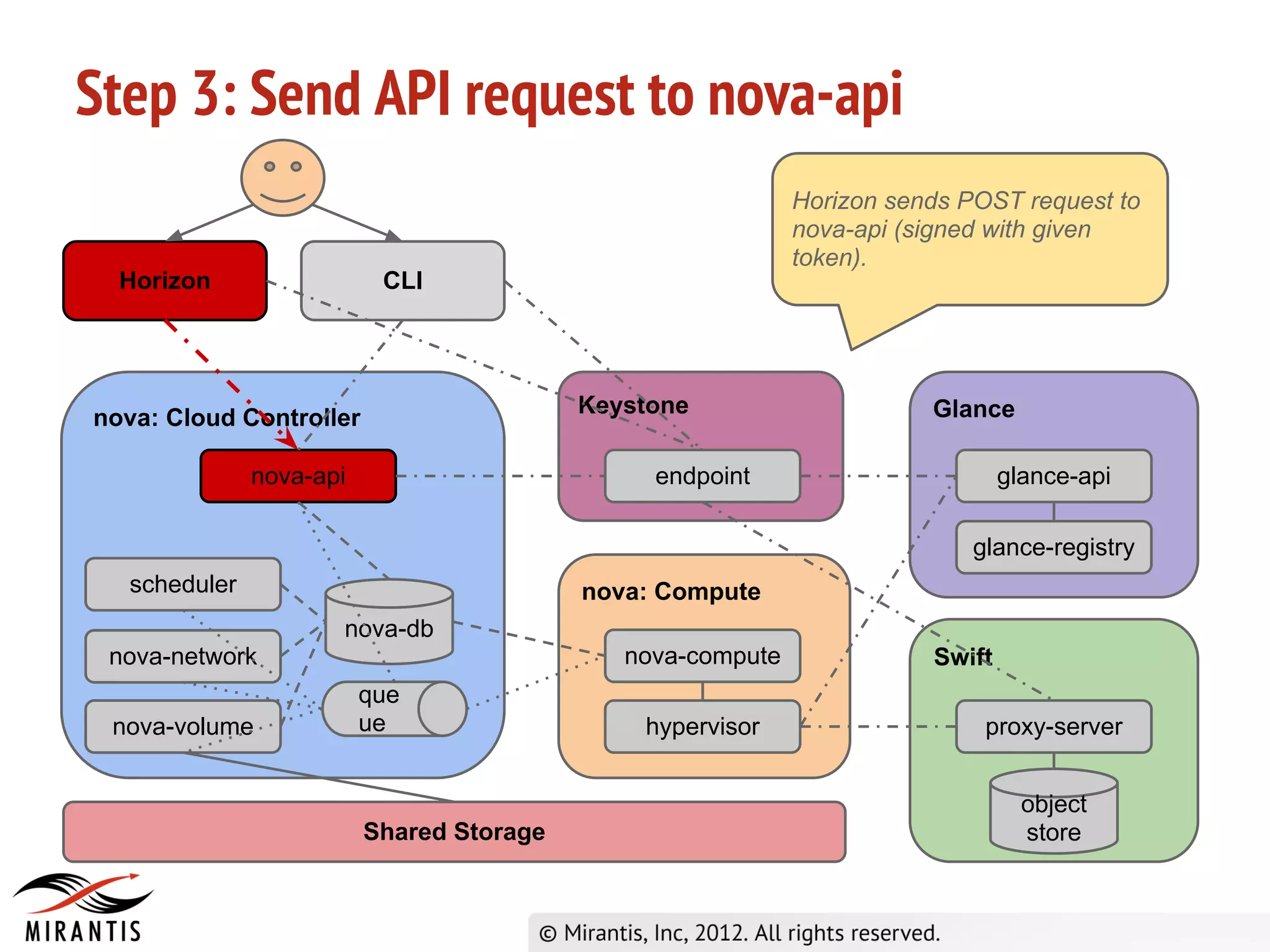

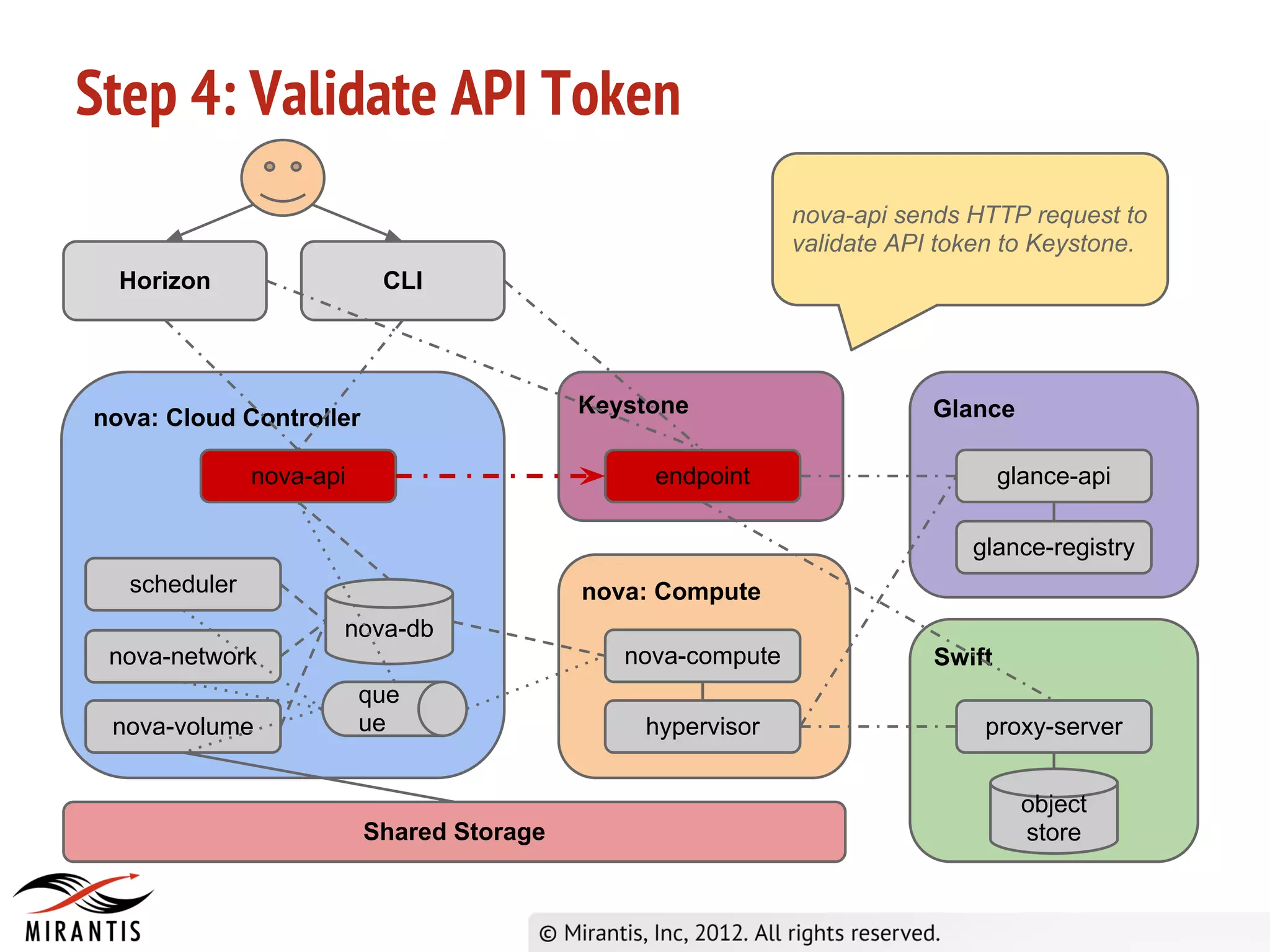

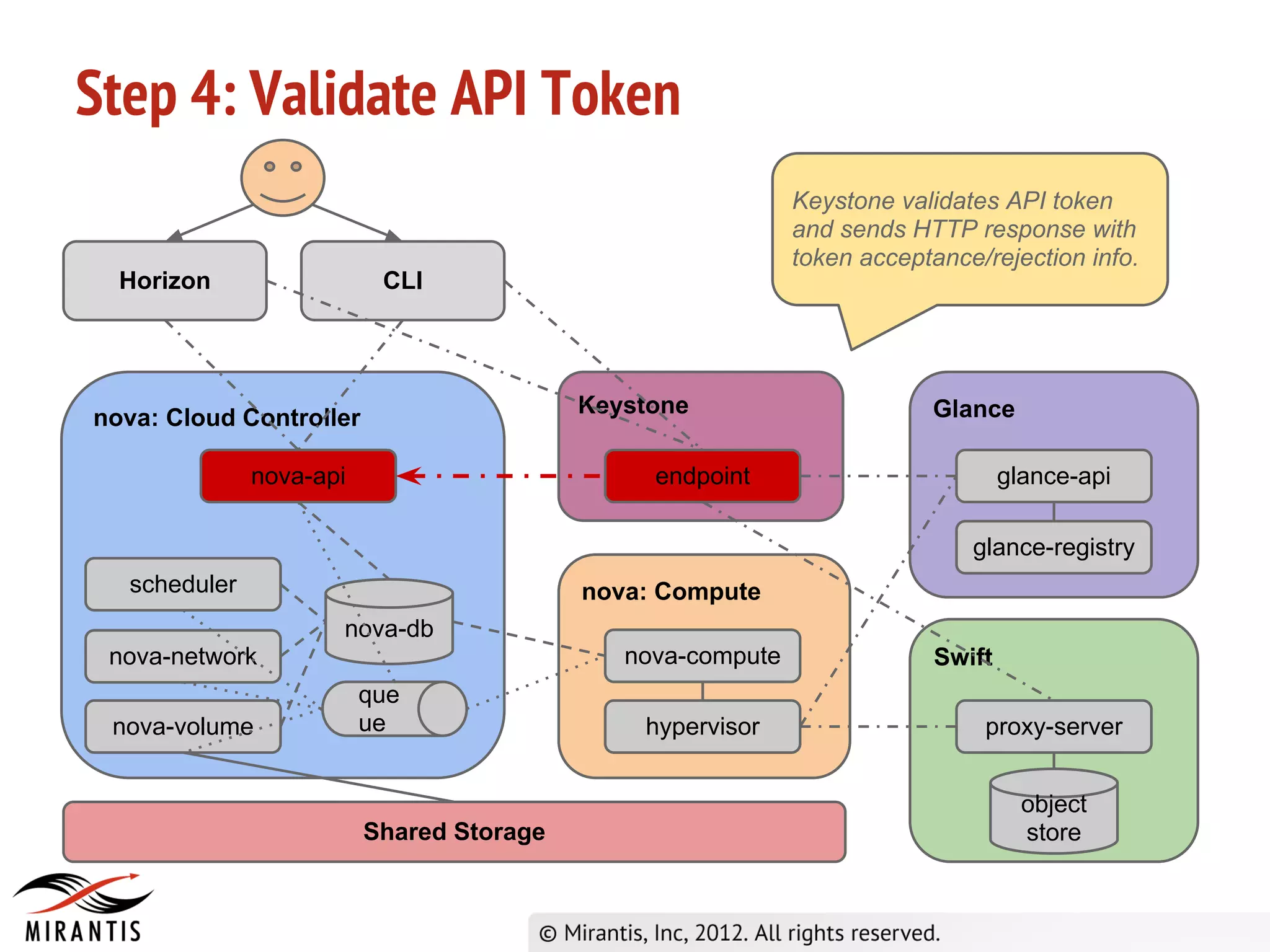

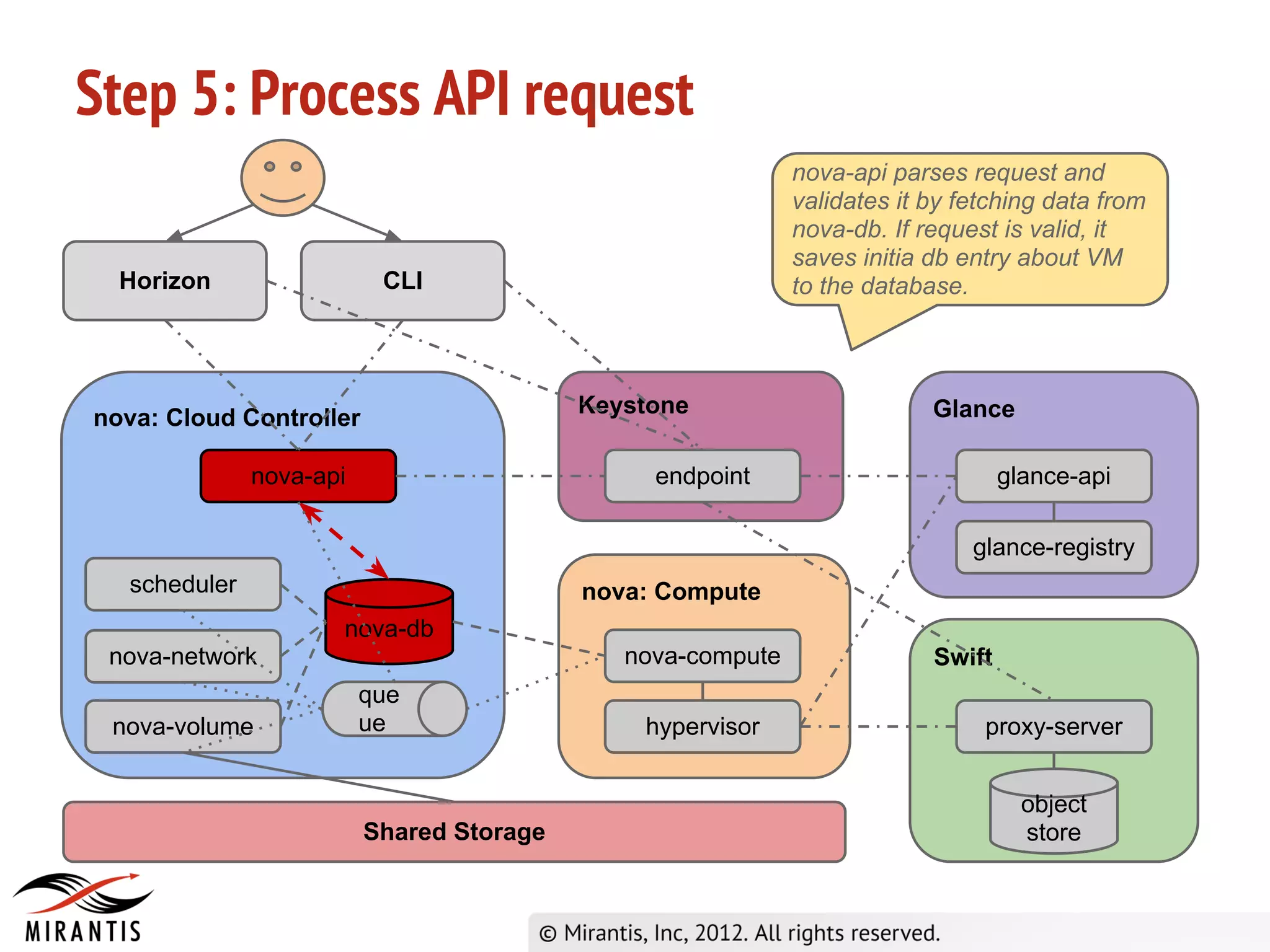

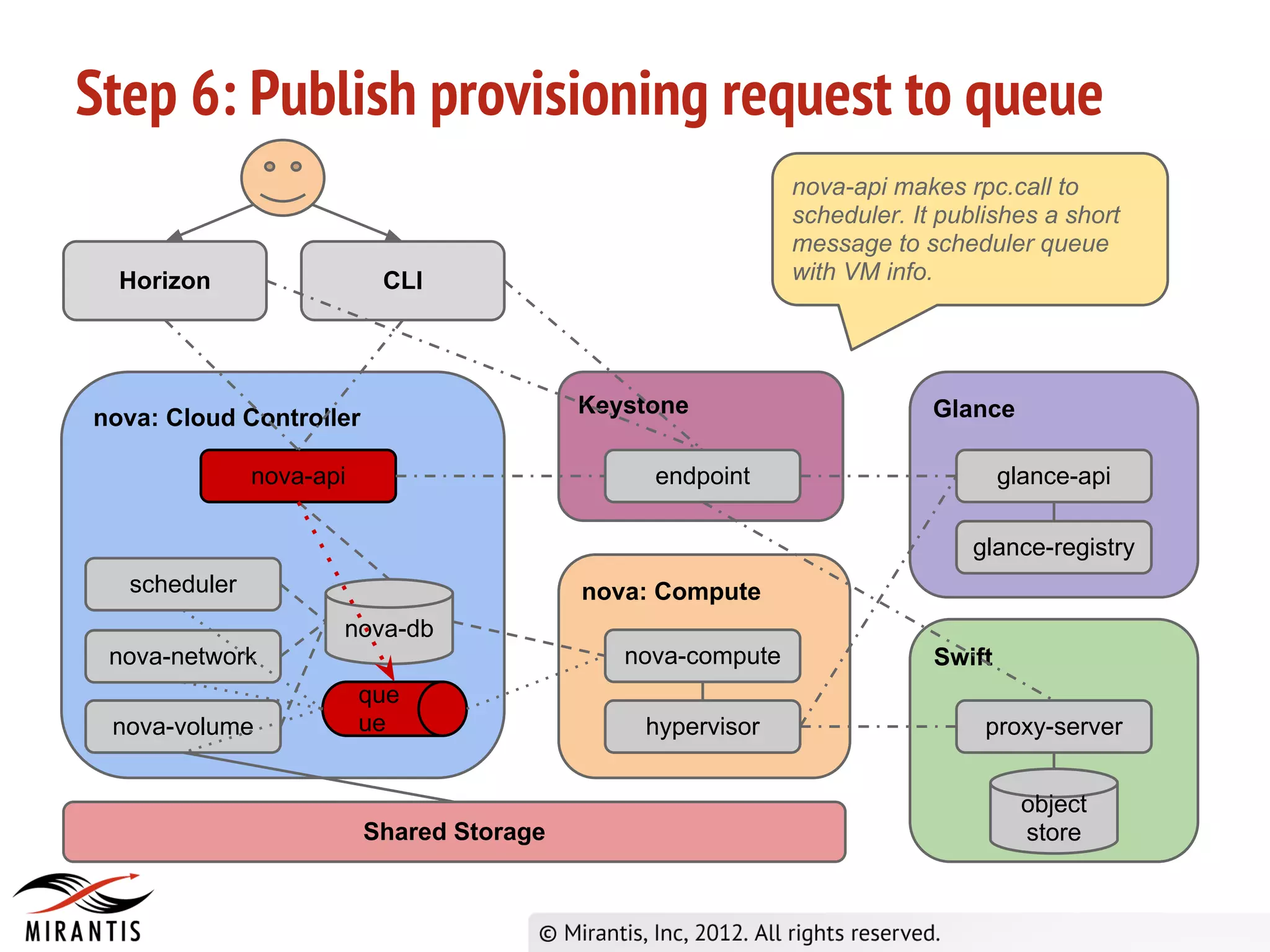

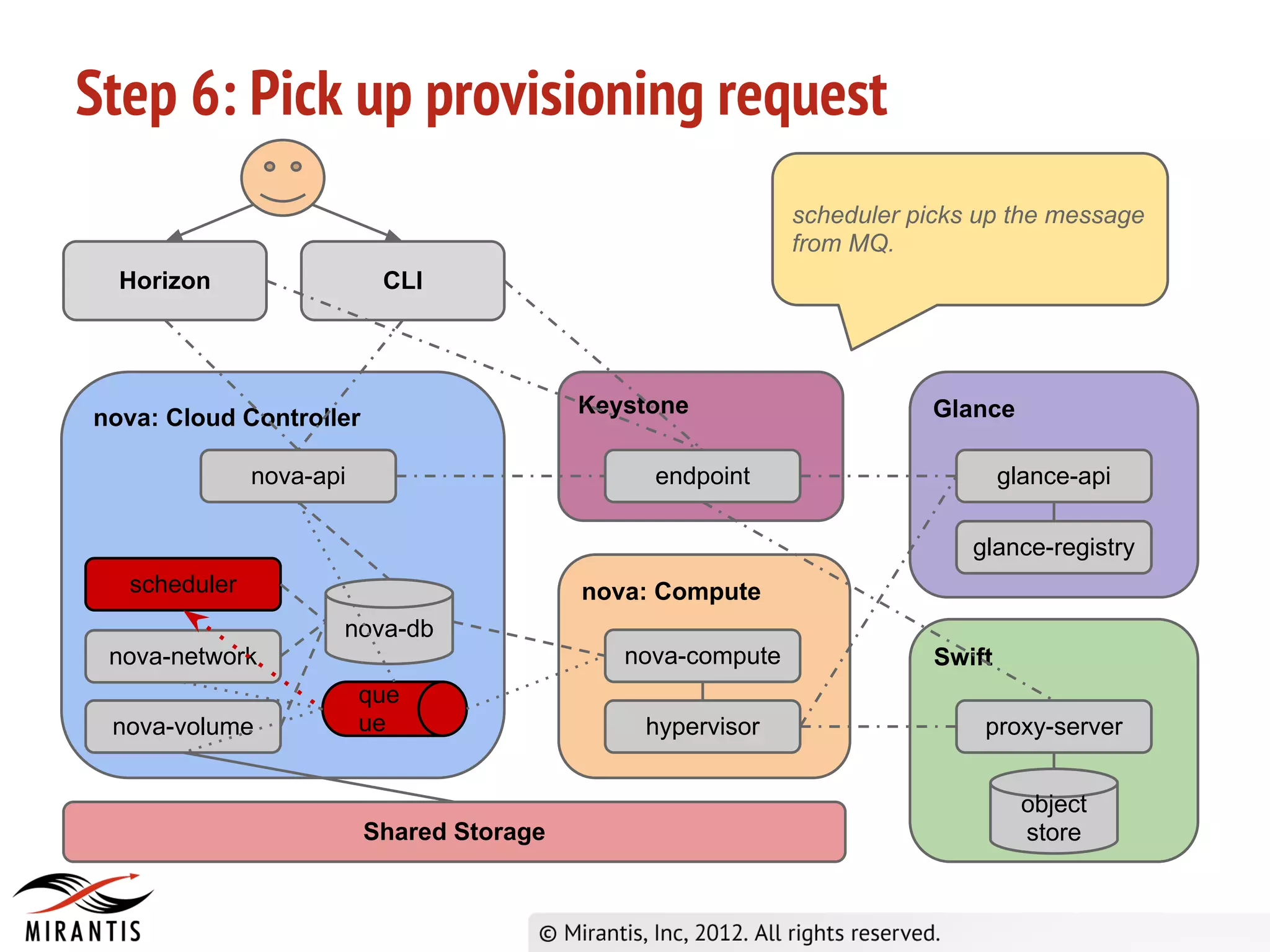

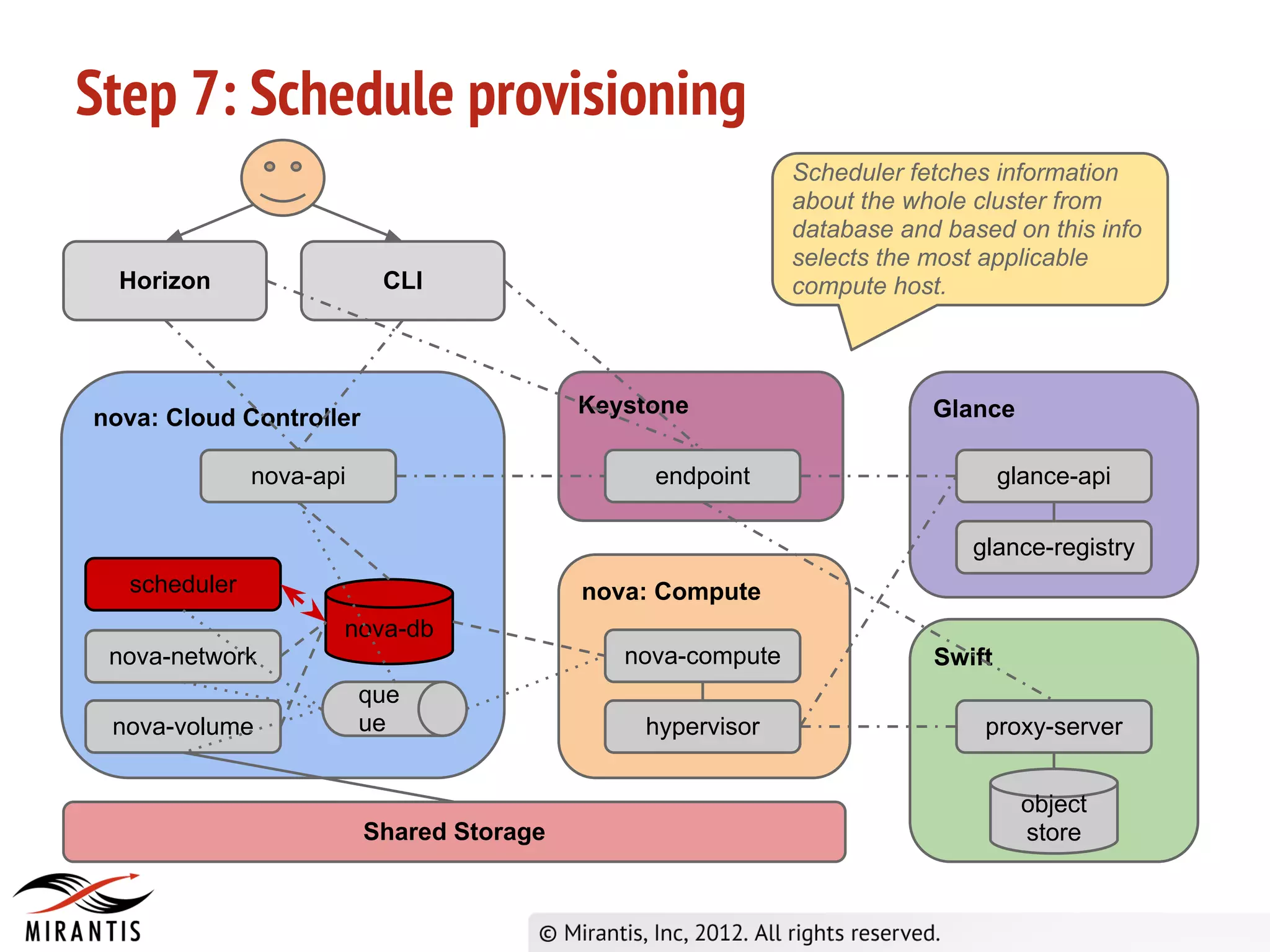

3) Horizon sends the API request to nova-api, which validates the token with Keystone before processing the request and saving the request details to the database. nova-api then publishes the request to the scheduler queue.
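
The three steps above can be sketched as a small in-memory simulation. This is only an illustrative model, not the real OpenStack code or API: the class names `Keystone` and `NovaApi`, the methods `authenticate`, `validate`, and `create_server`, the `provision` helper, the record fields, and the shape of the queued message are all assumptions made for this example. A plain dict stands in for the database and a `queue.Queue` stands in for the scheduler's message queue.

```python
import queue
import time
import uuid


class Keystone:
    """Toy identity service: issues and validates temporary tokens (hypothetical)."""

    def __init__(self, users, token_ttl=3600):
        self._users = users    # username -> password, for illustration only
        self._tokens = {}      # token -> expiry timestamp
        self._ttl = token_ttl

    def authenticate(self, username, password):
        # Step 2: check the authentication data and hand back a temporary token.
        if self._users.get(username) != password:
            raise PermissionError("invalid credentials")
        token = uuid.uuid4().hex
        self._tokens[token] = time.time() + self._ttl
        return token

    def validate(self, token):
        # A token is valid only if it was issued here and has not expired.
        expiry = self._tokens.get(token)
        return expiry is not None and expiry > time.time()


class NovaApi:
    """Toy stand-in for nova-api (hypothetical, not the real service)."""

    def __init__(self, keystone, database, scheduler_queue):
        self._keystone = keystone
        self._db = database             # dict standing in for the database
        self._queue = scheduler_queue   # queue.Queue standing in for the scheduler queue

    def create_server(self, token, name, flavor):
        # Step 3: validate the token with Keystone before doing anything else.
        if not self._keystone.validate(token):
            raise PermissionError("token rejected by Keystone")
        instance_id = uuid.uuid4().hex
        # Save the request details, then publish the request for the scheduler.
        self._db[instance_id] = {"name": name, "flavor": flavor, "state": "scheduling"}
        self._queue.put({"instance_id": instance_id, "flavor": flavor})
        return instance_id


def provision(keystone, nova_api, username, password, name, flavor):
    """Play the role of Horizon or the CLI for steps 1 to 3."""
    token = keystone.authenticate(username, password)   # steps 1-2
    return nova_api.create_server(token, name, flavor)  # step 3
```

Calling `provision(keystone, nova_api, "alice", "secret", "vm1", "m1.small")` with matching credentials leaves one record in the dict and one message on the queue, while a call to `create_server` with an unknown token raises `PermissionError` before anything is stored or queued. That ordering mirrors the point of step 3: the token is checked before the request is processed.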