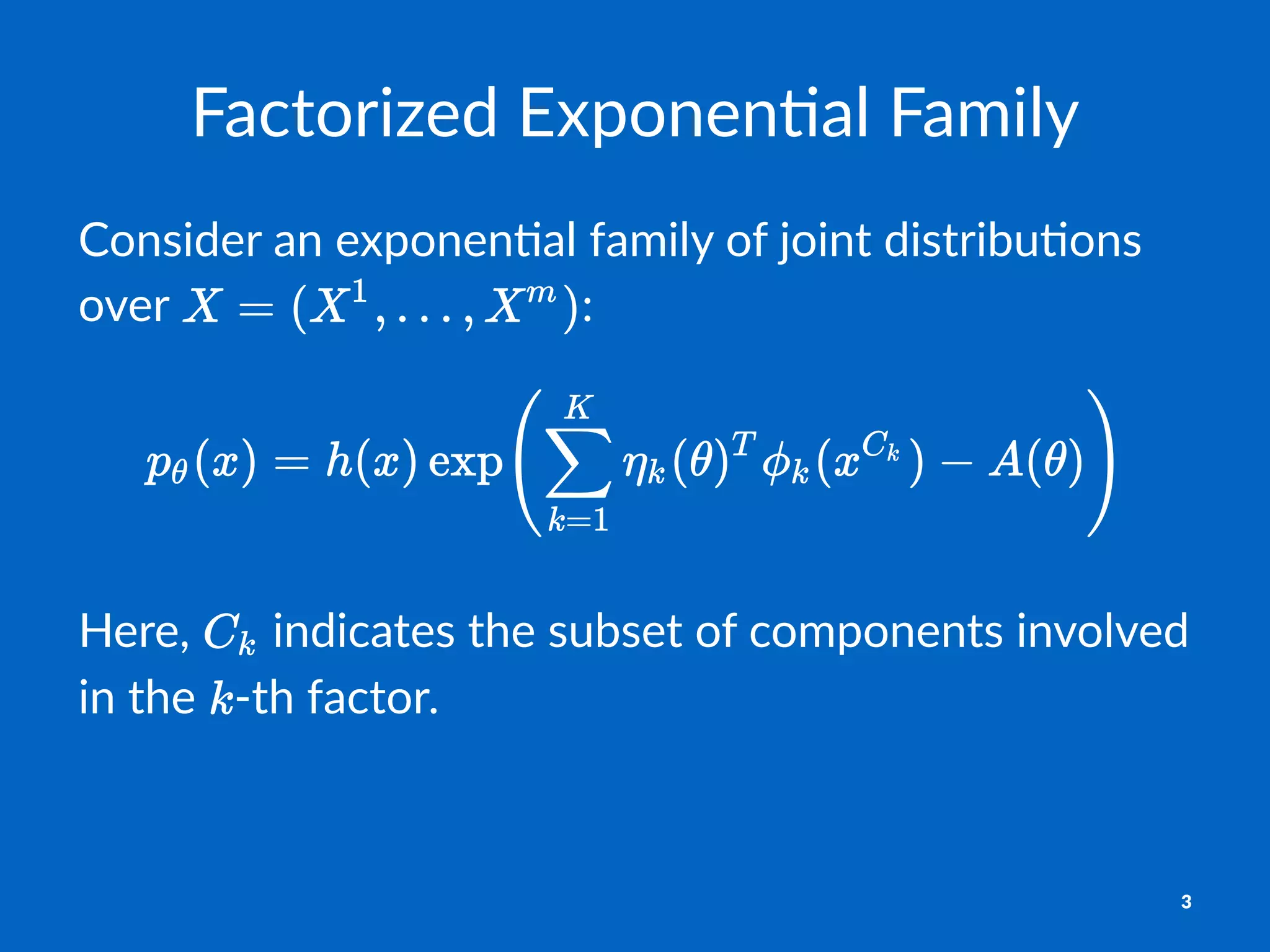

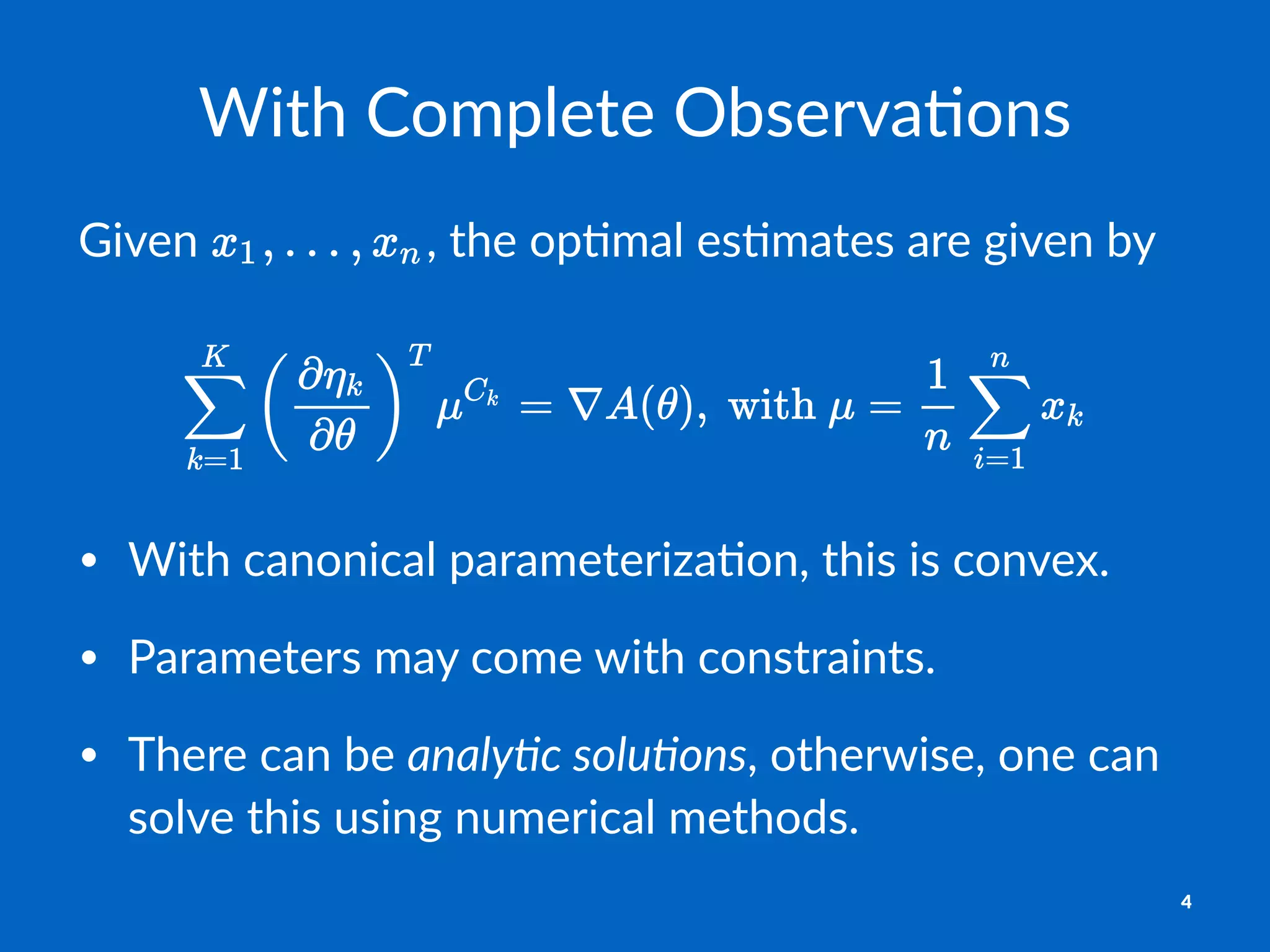

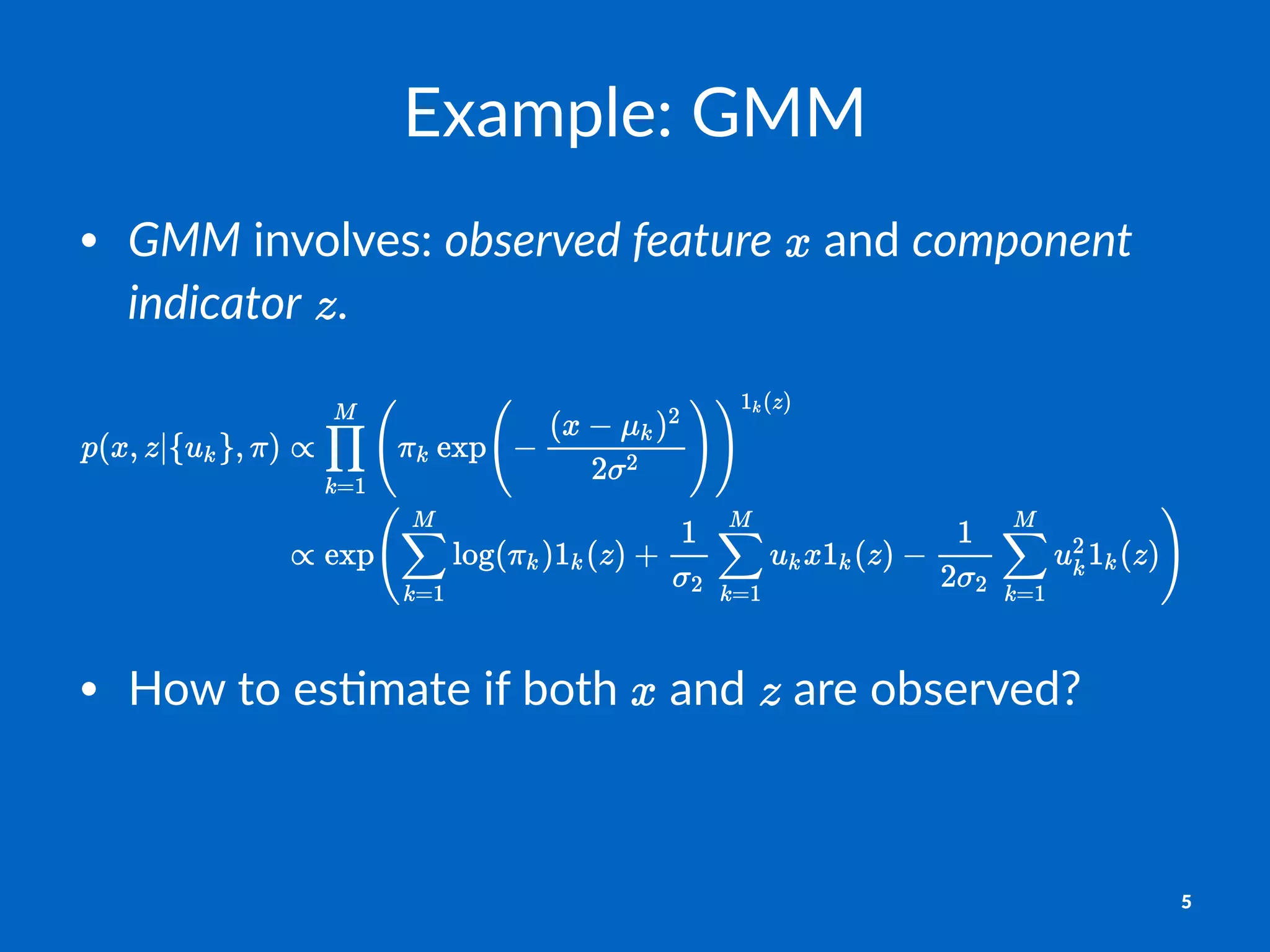

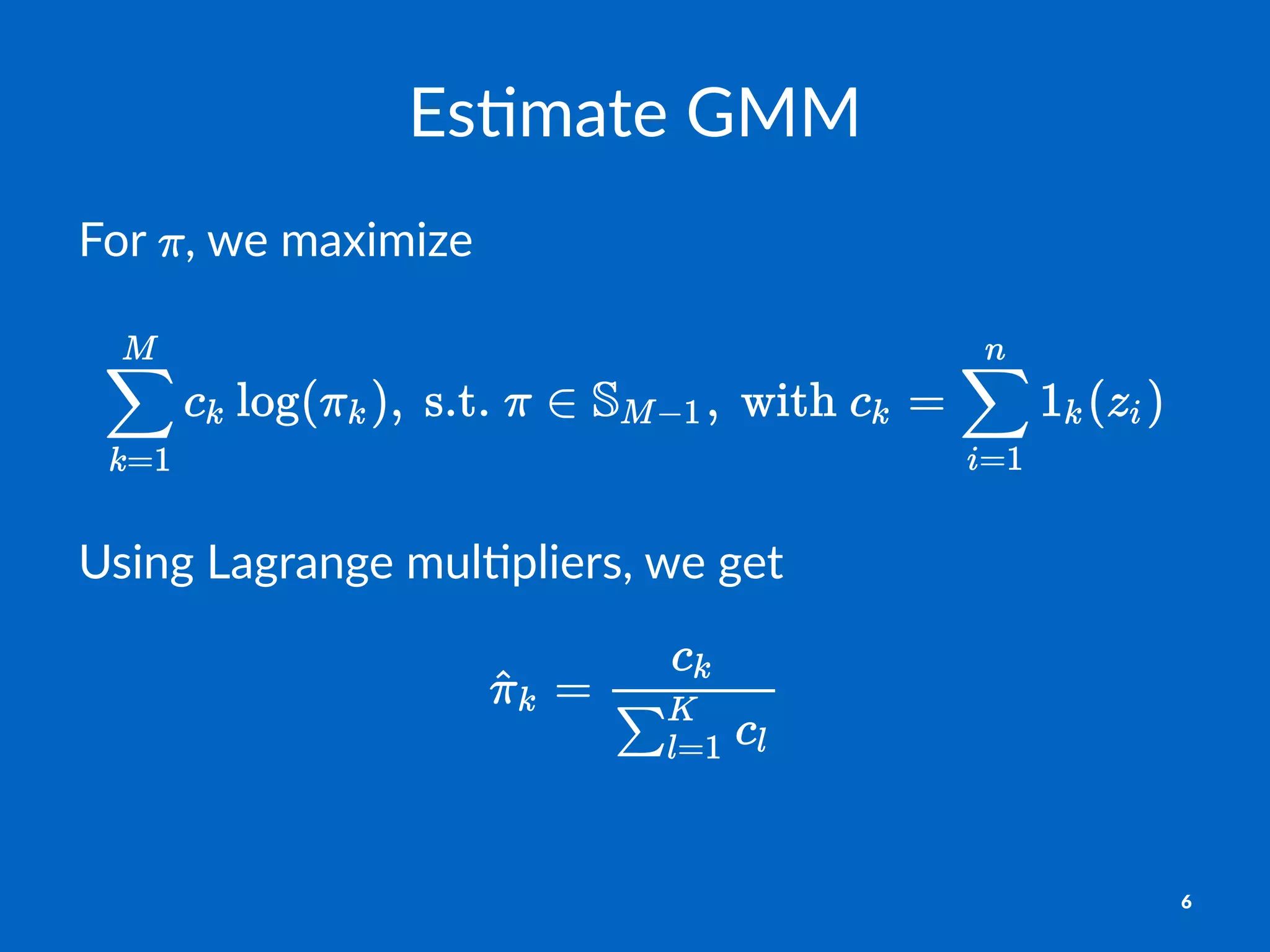

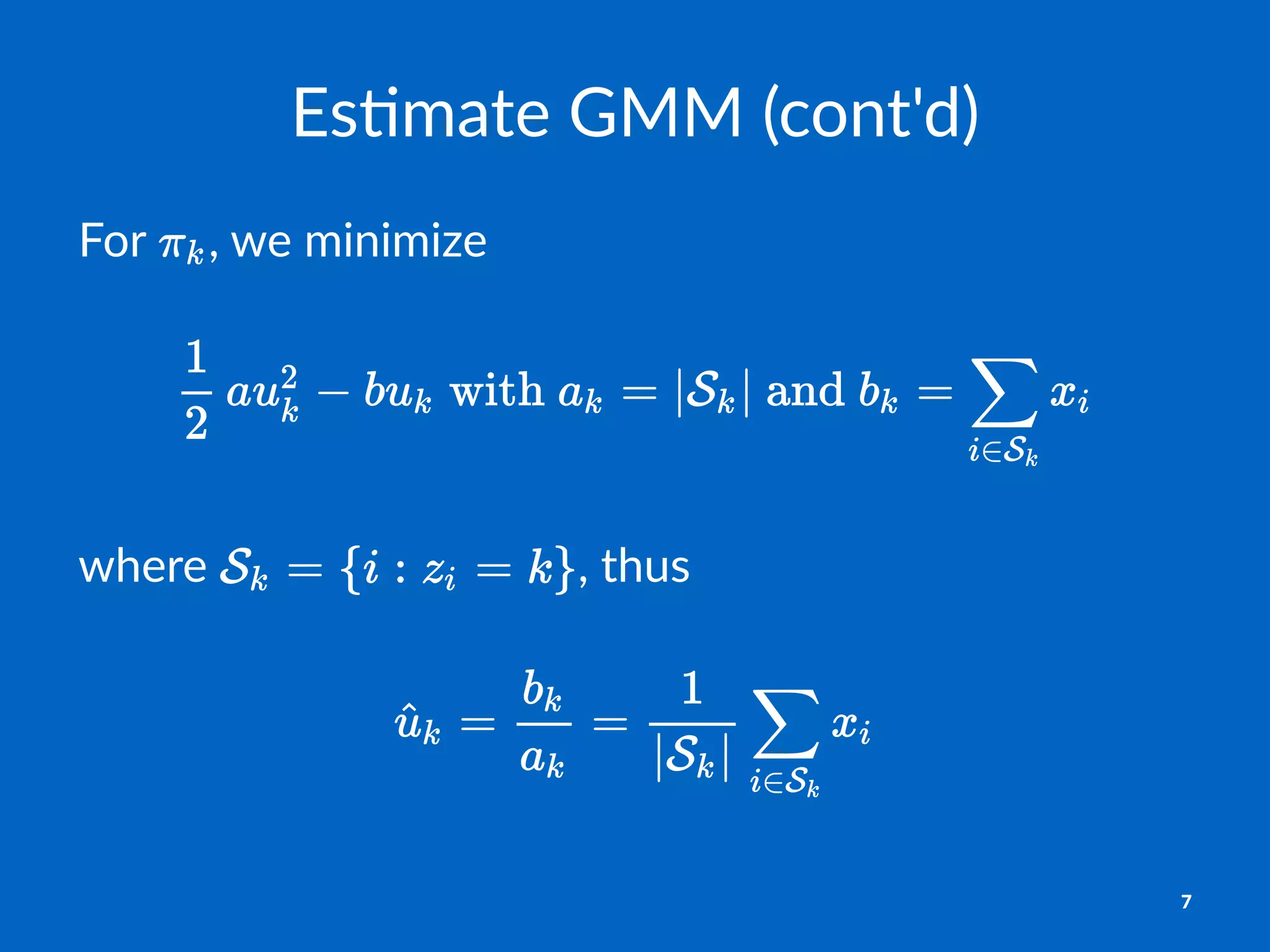

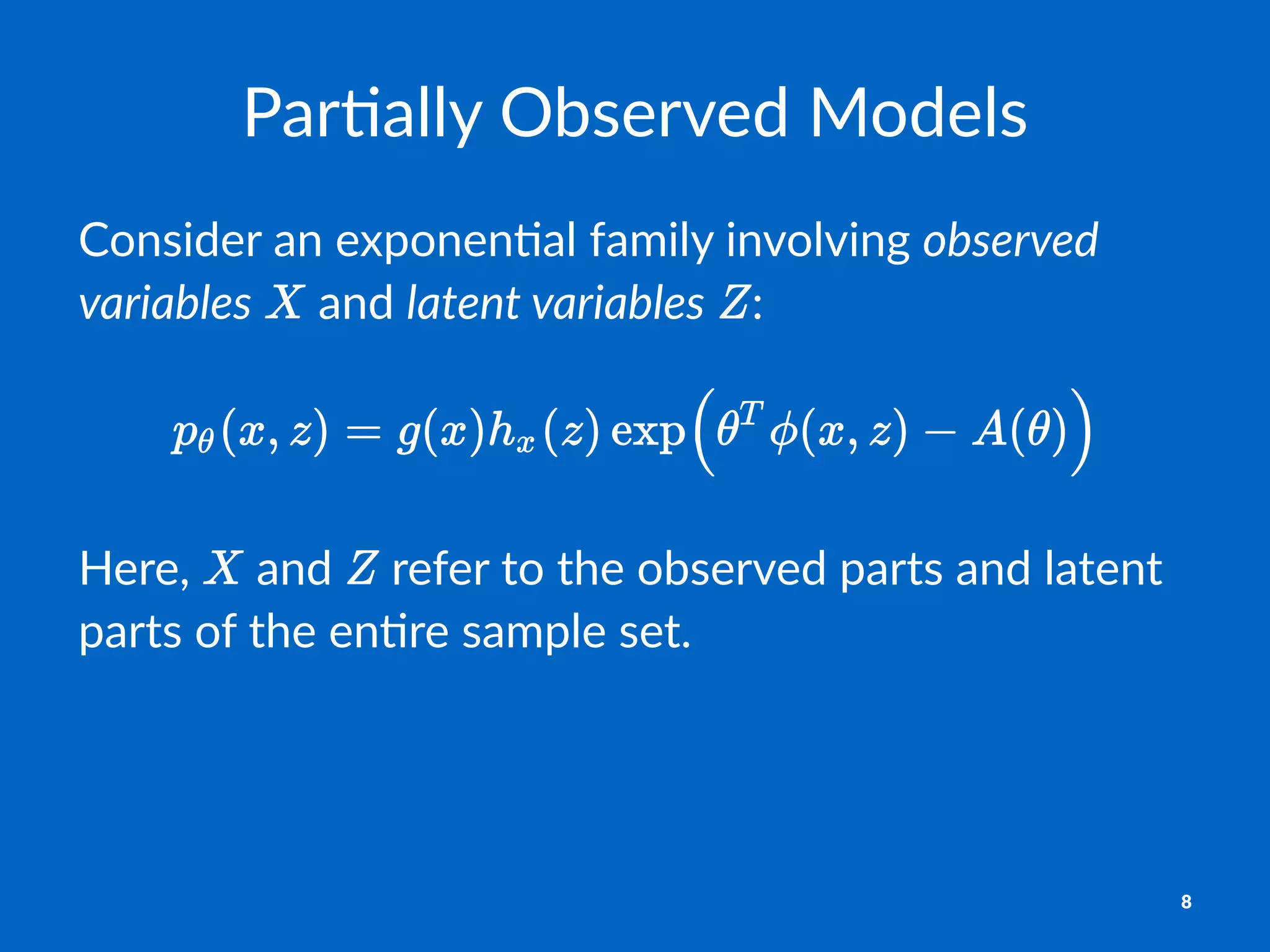

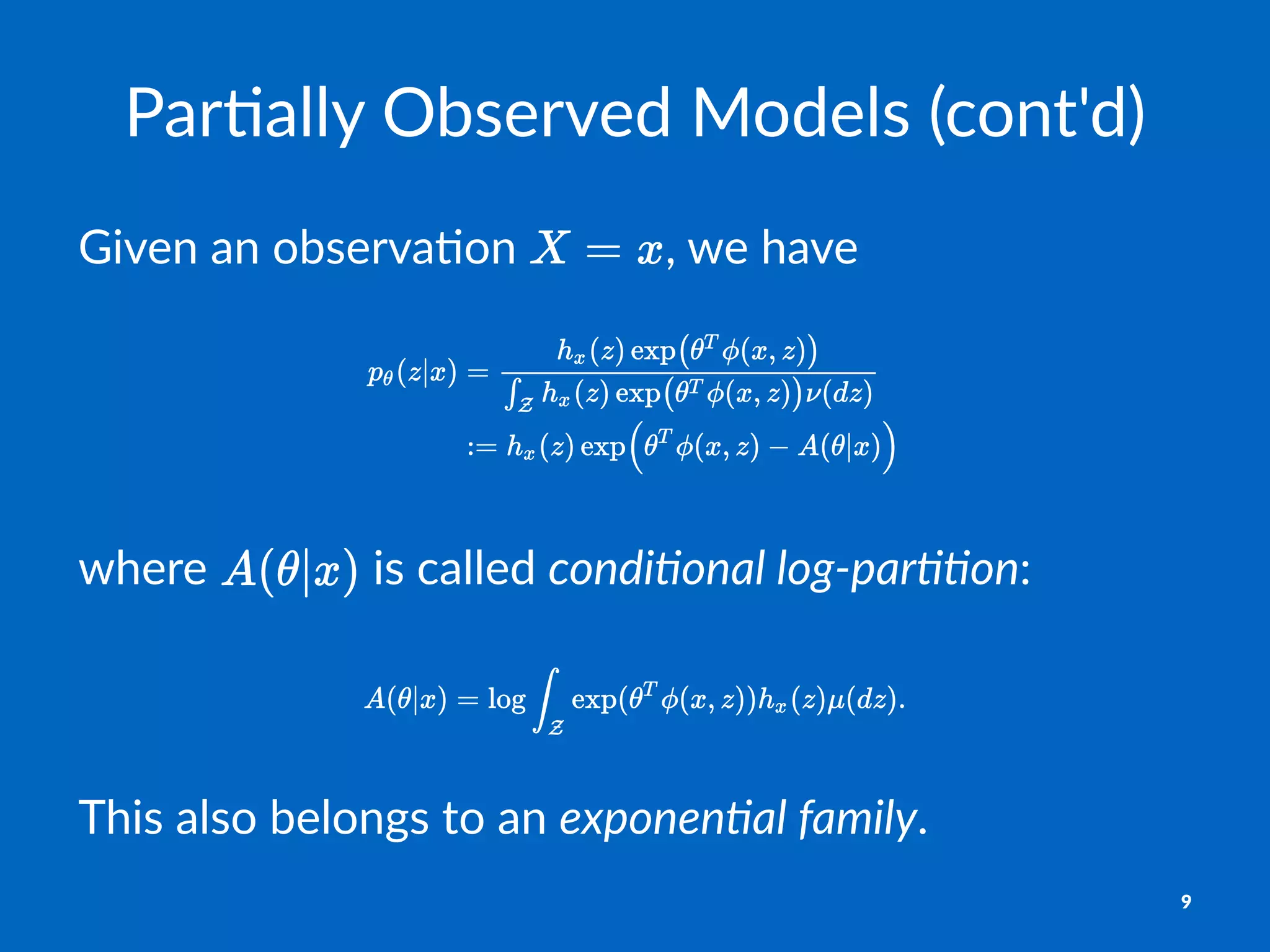

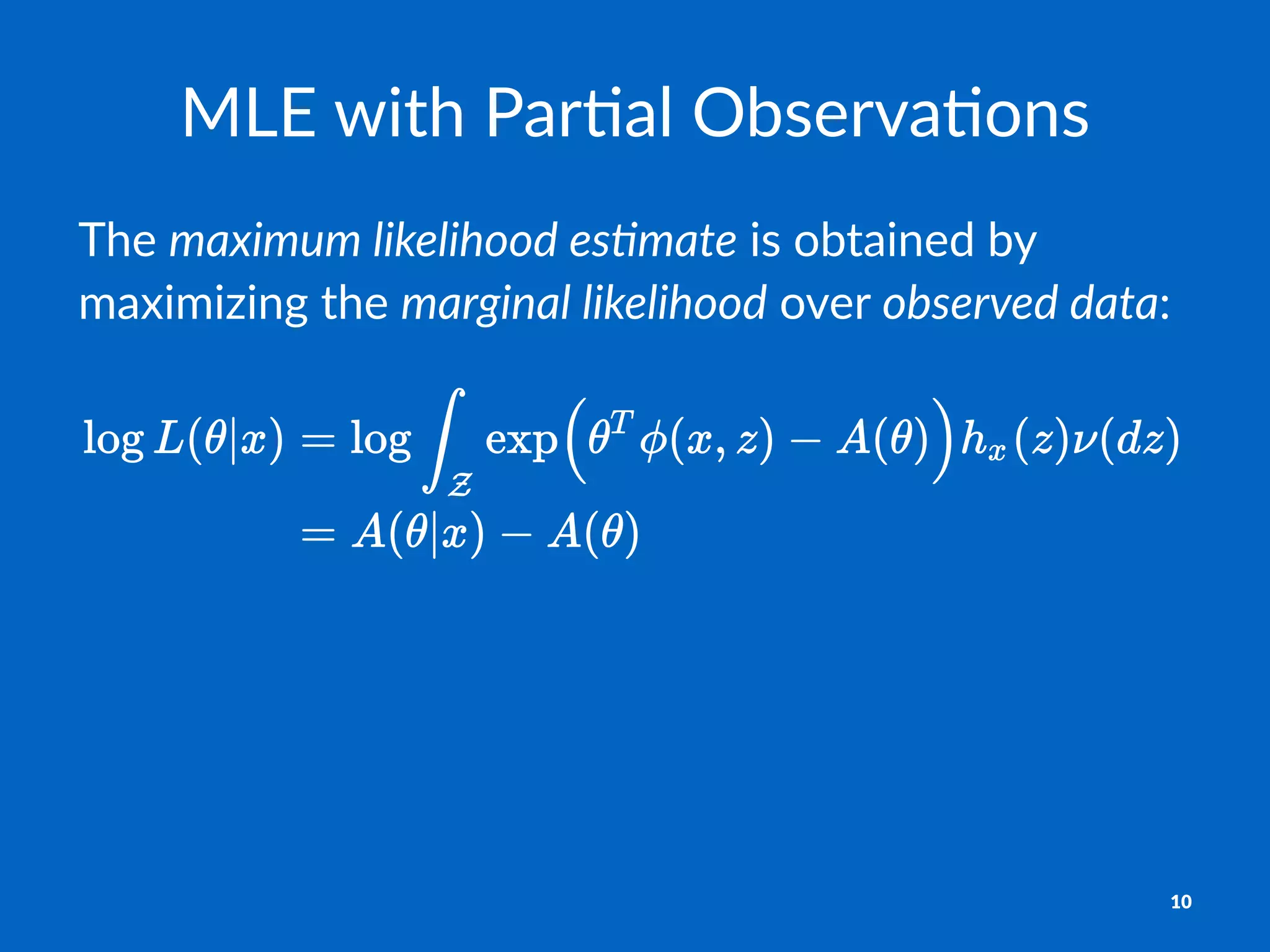

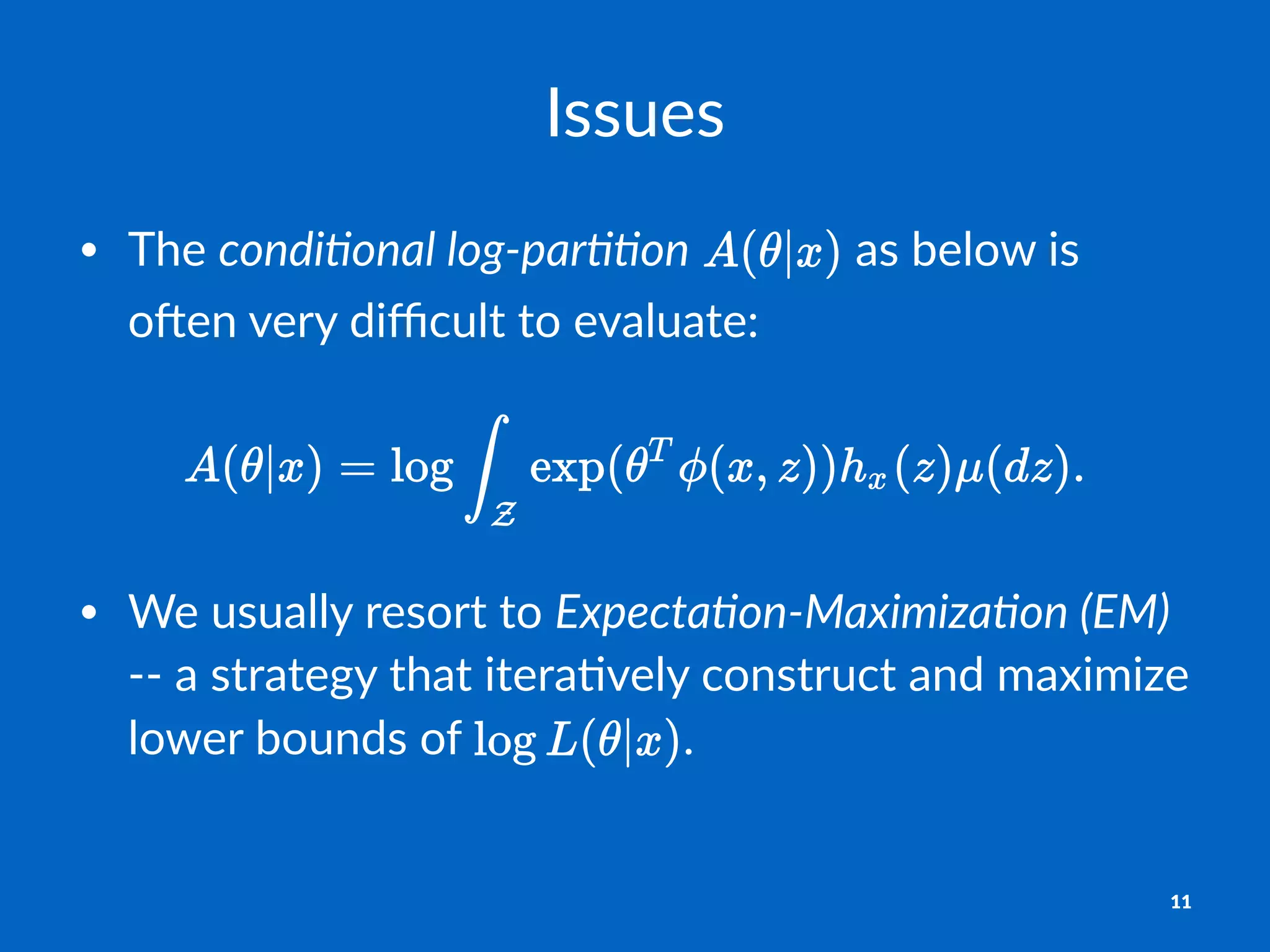

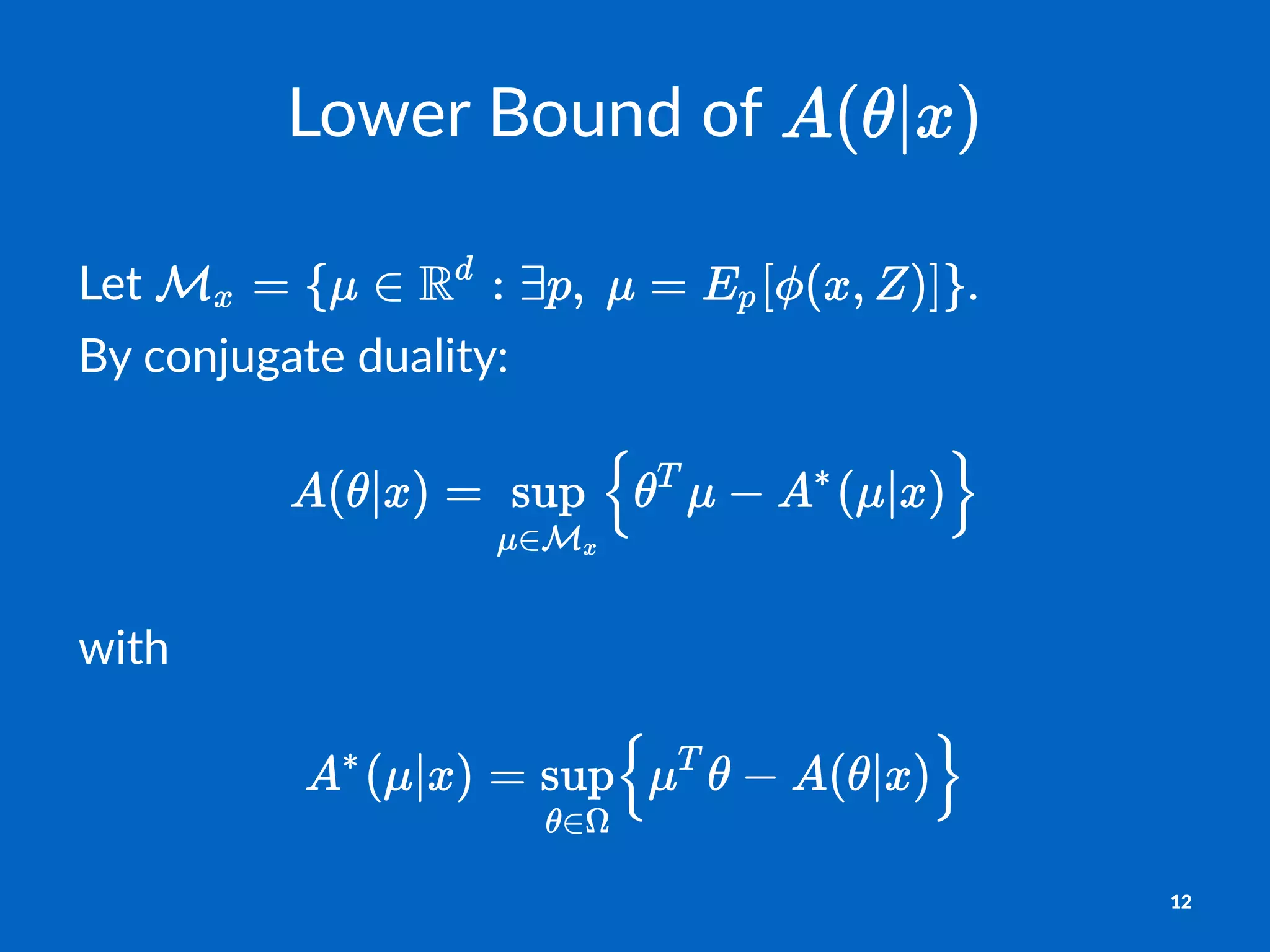

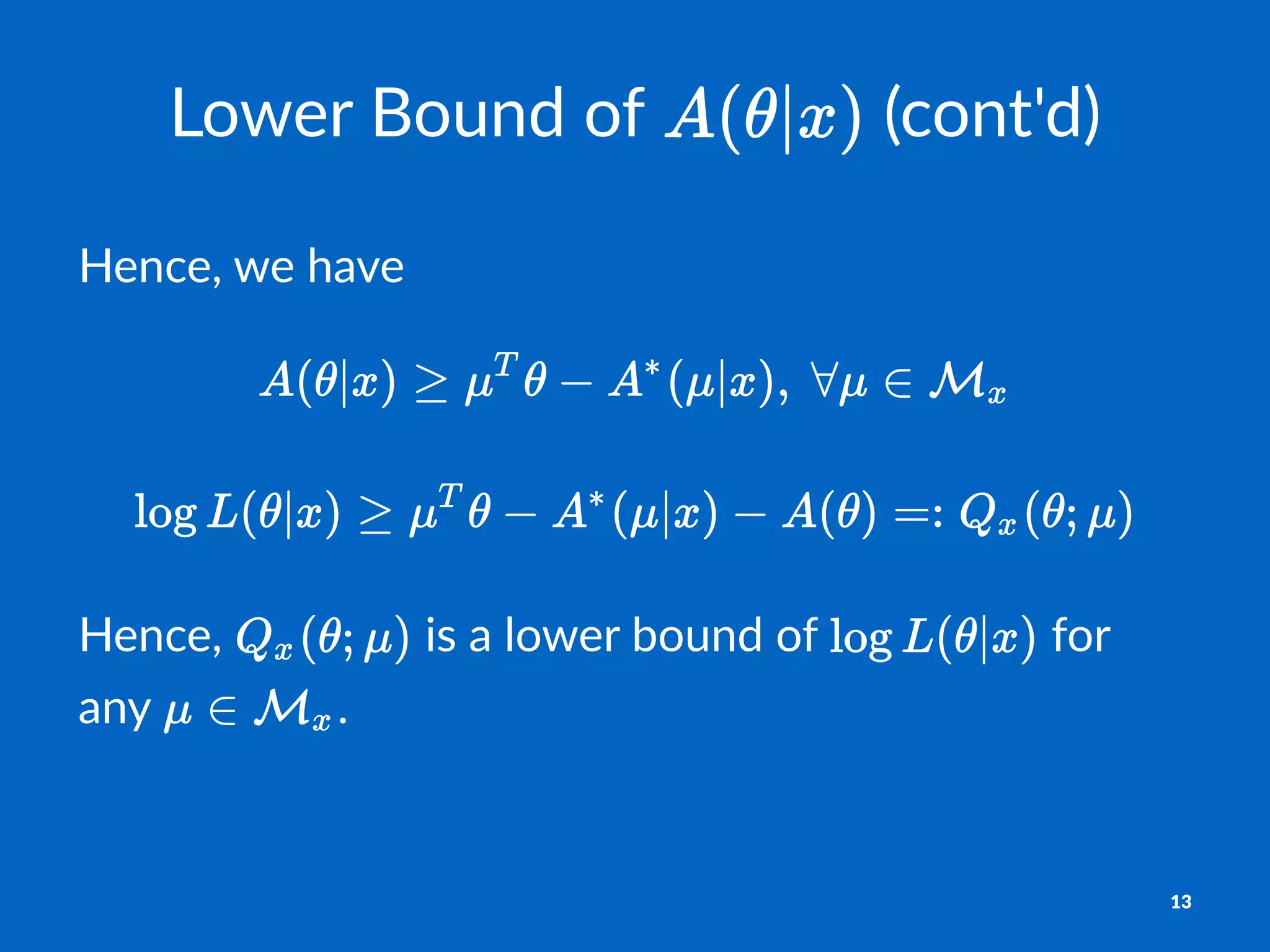

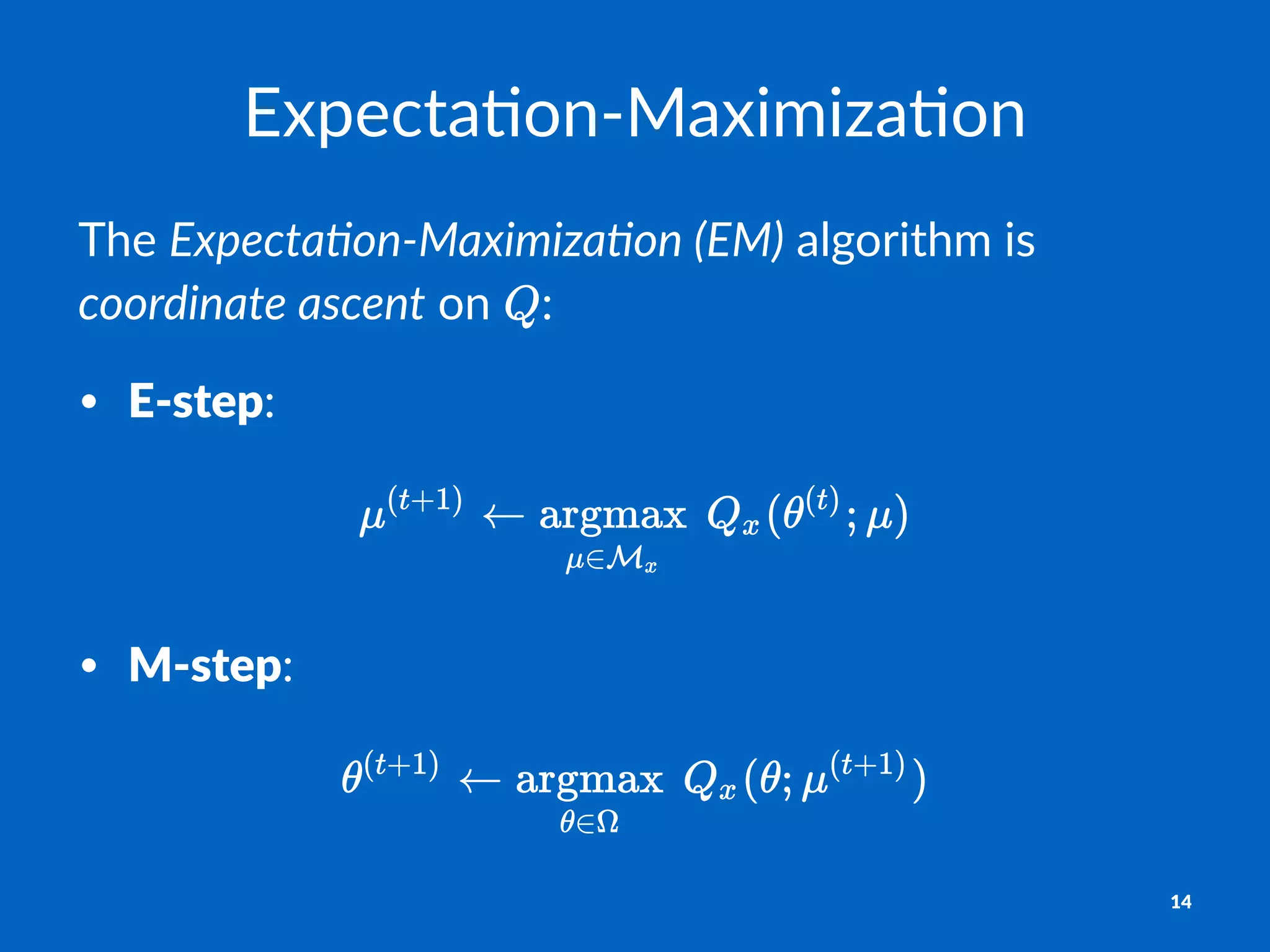

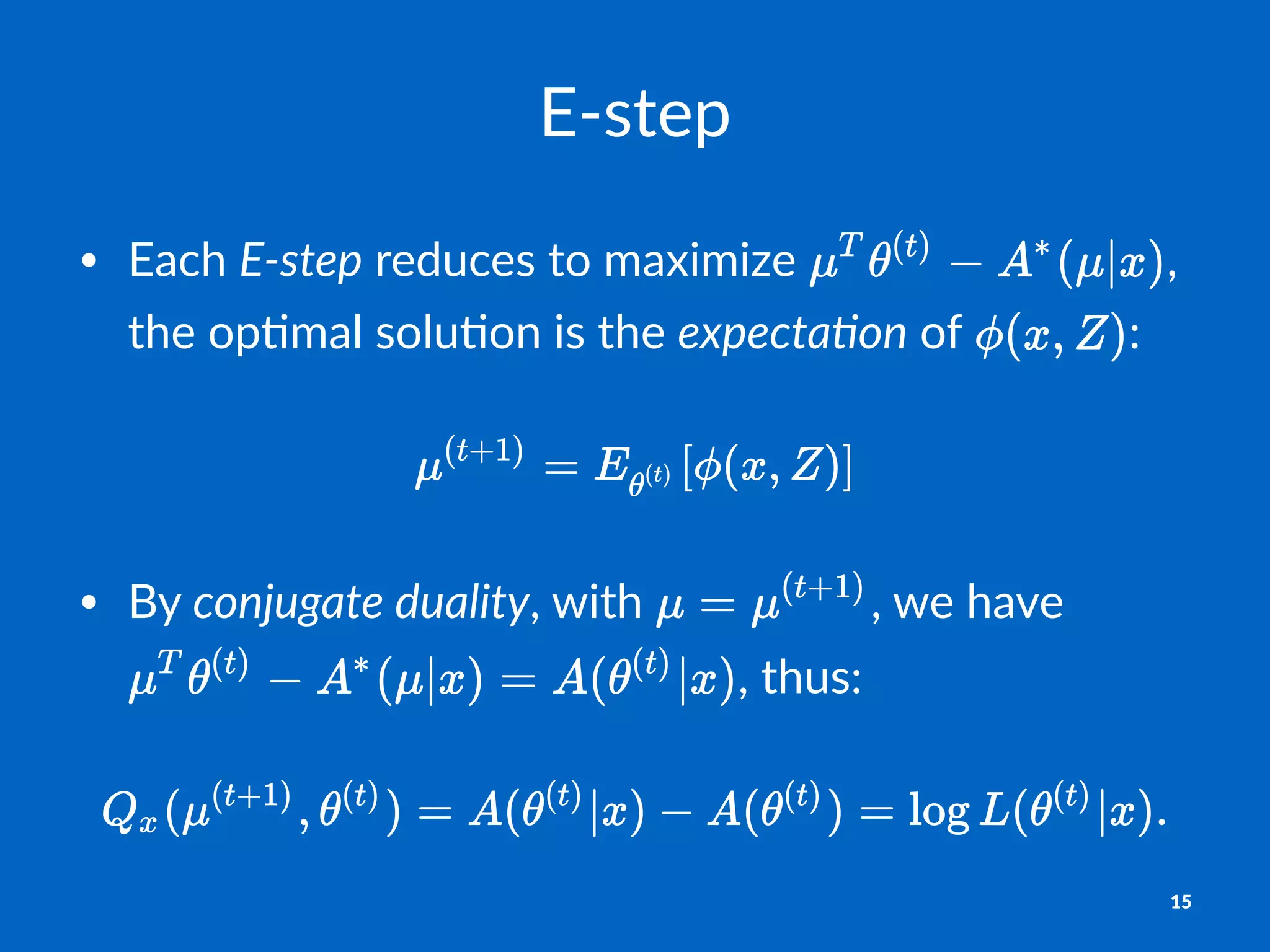

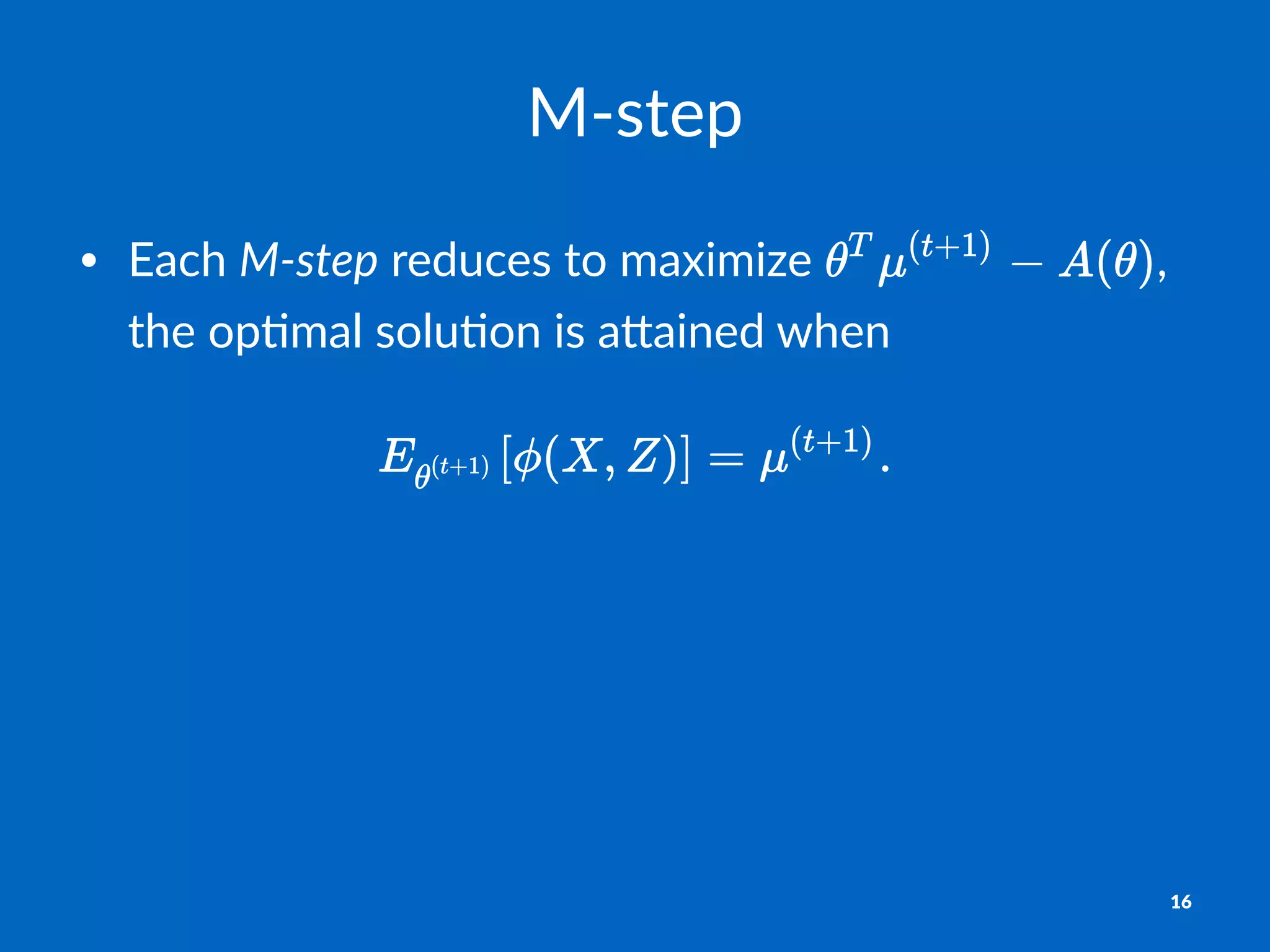

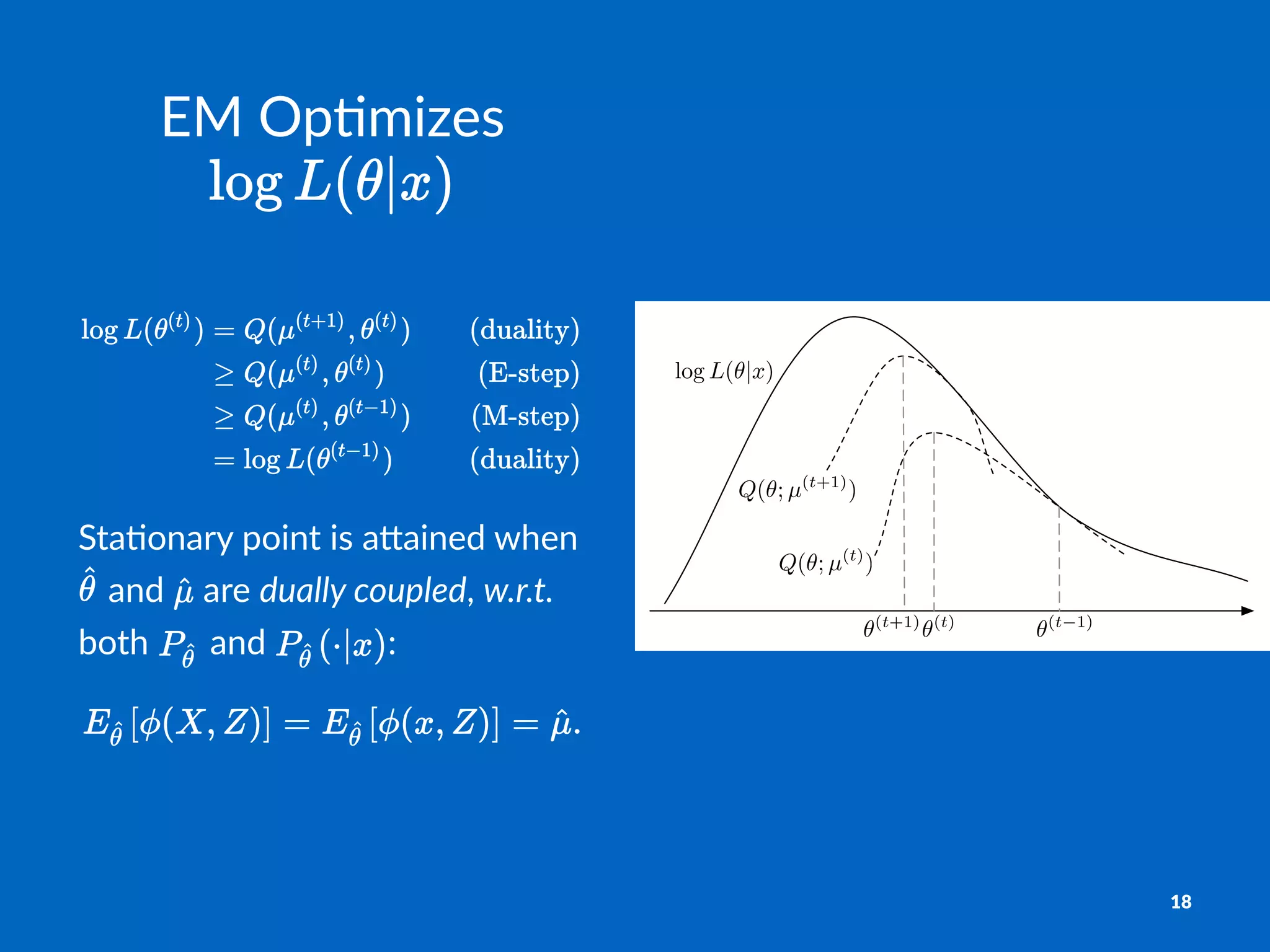

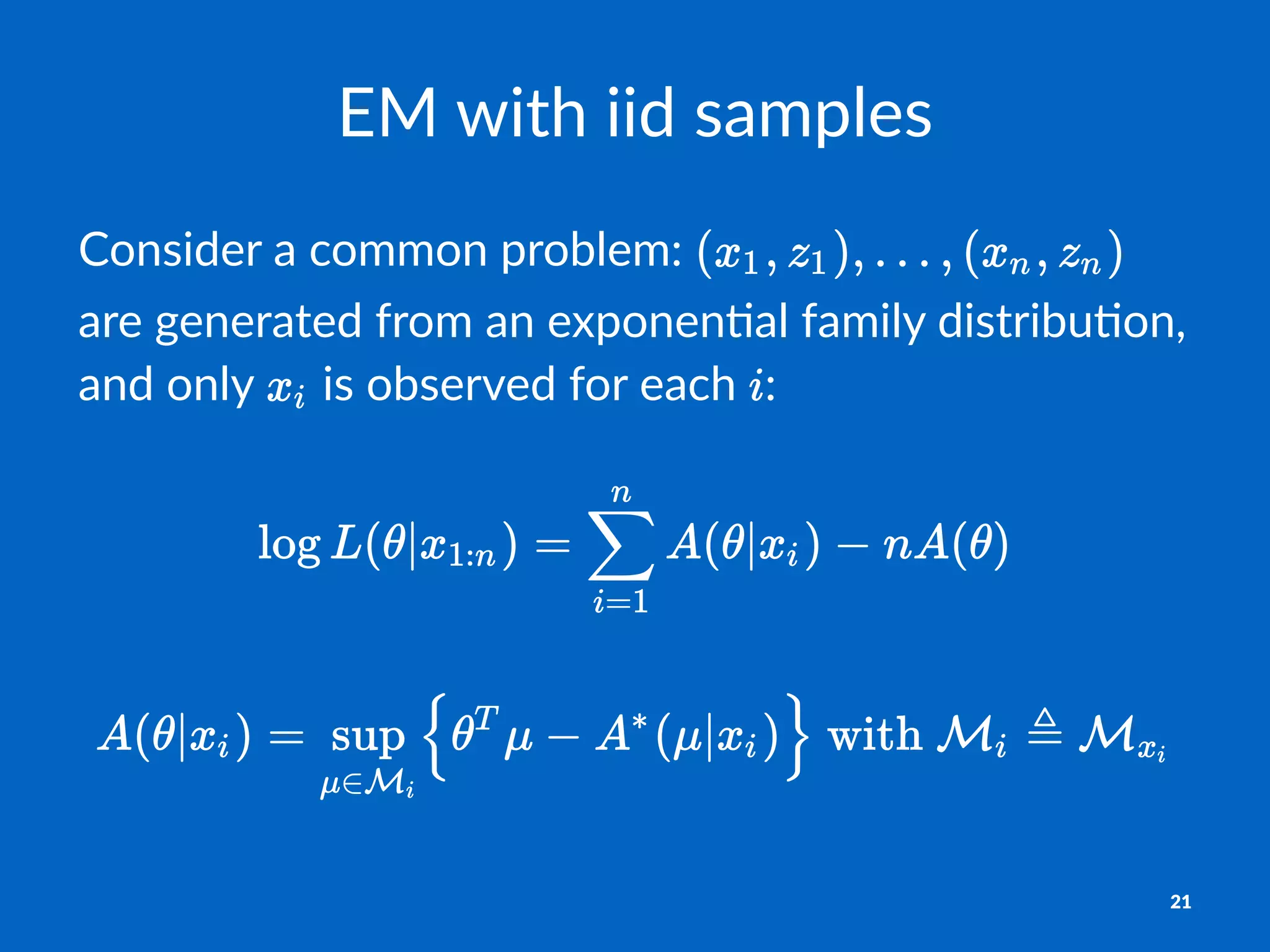

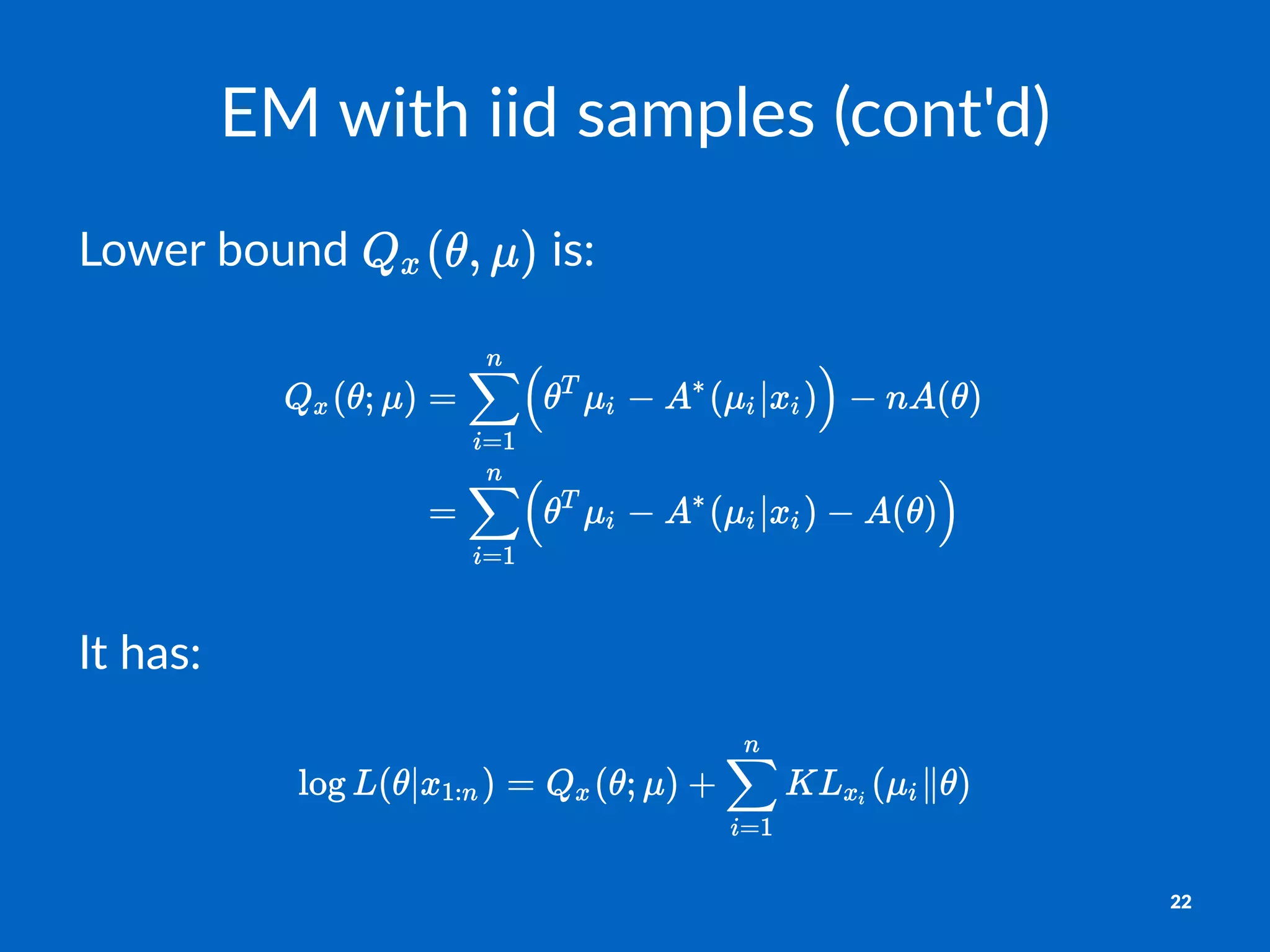

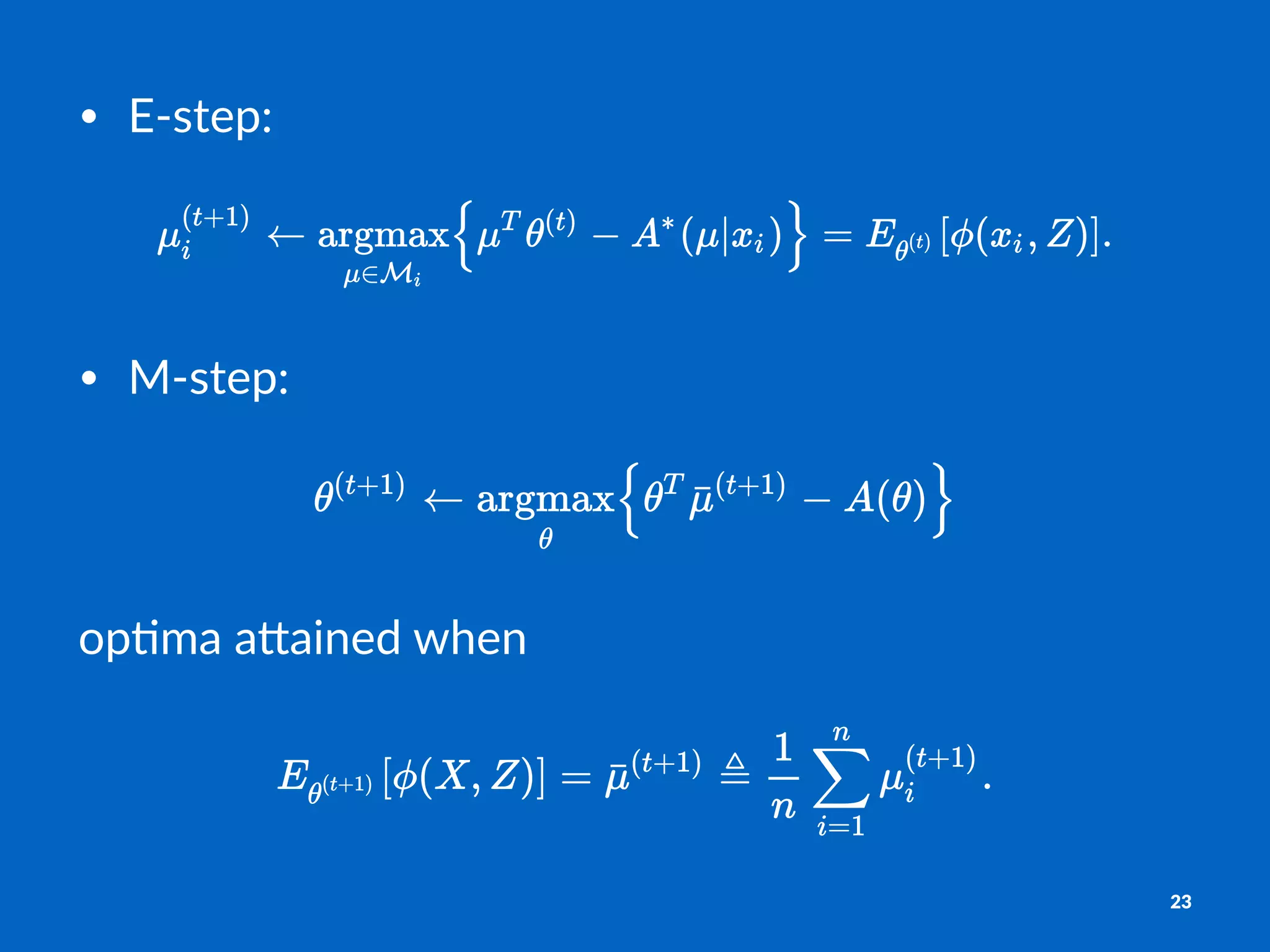

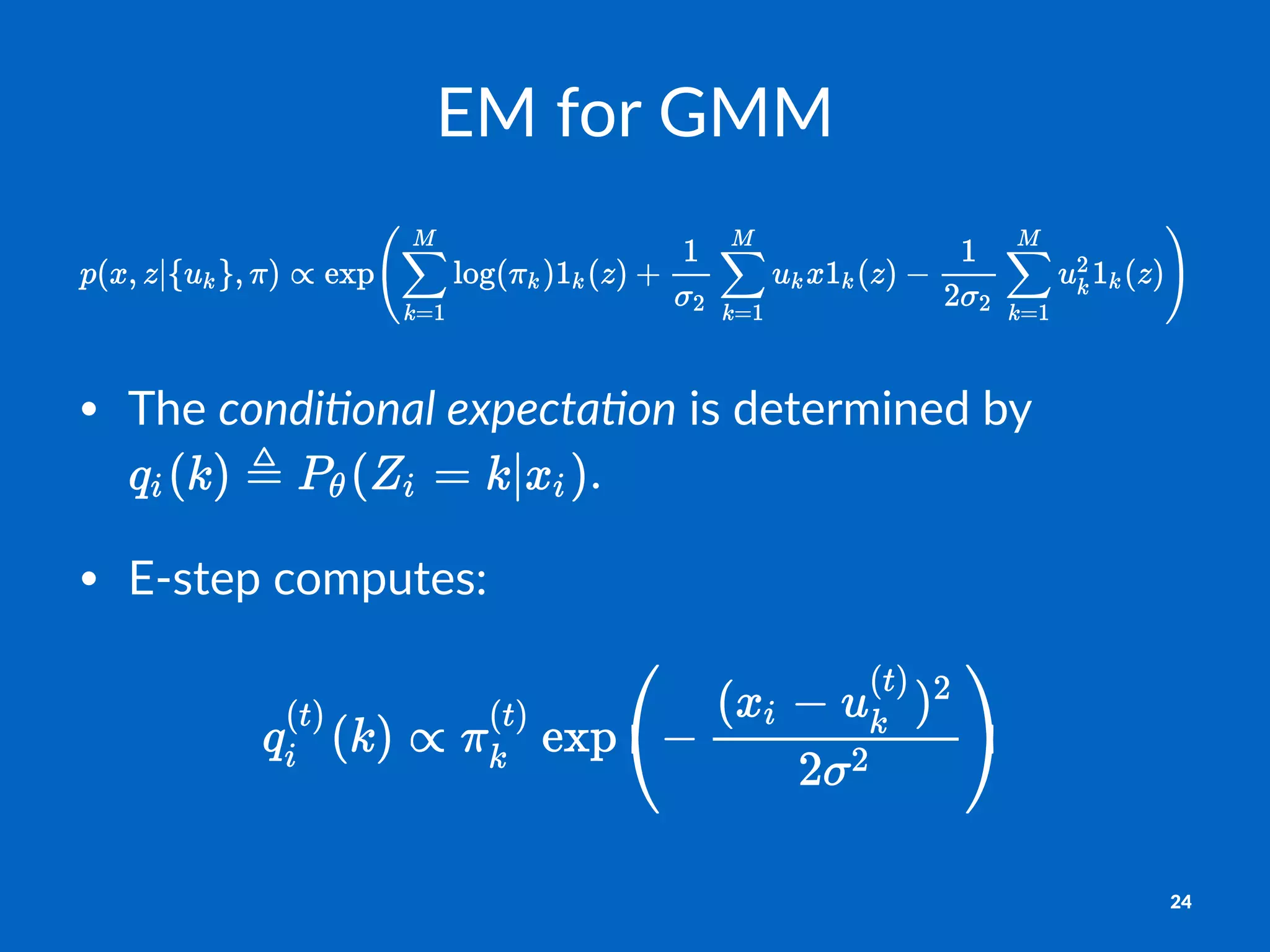

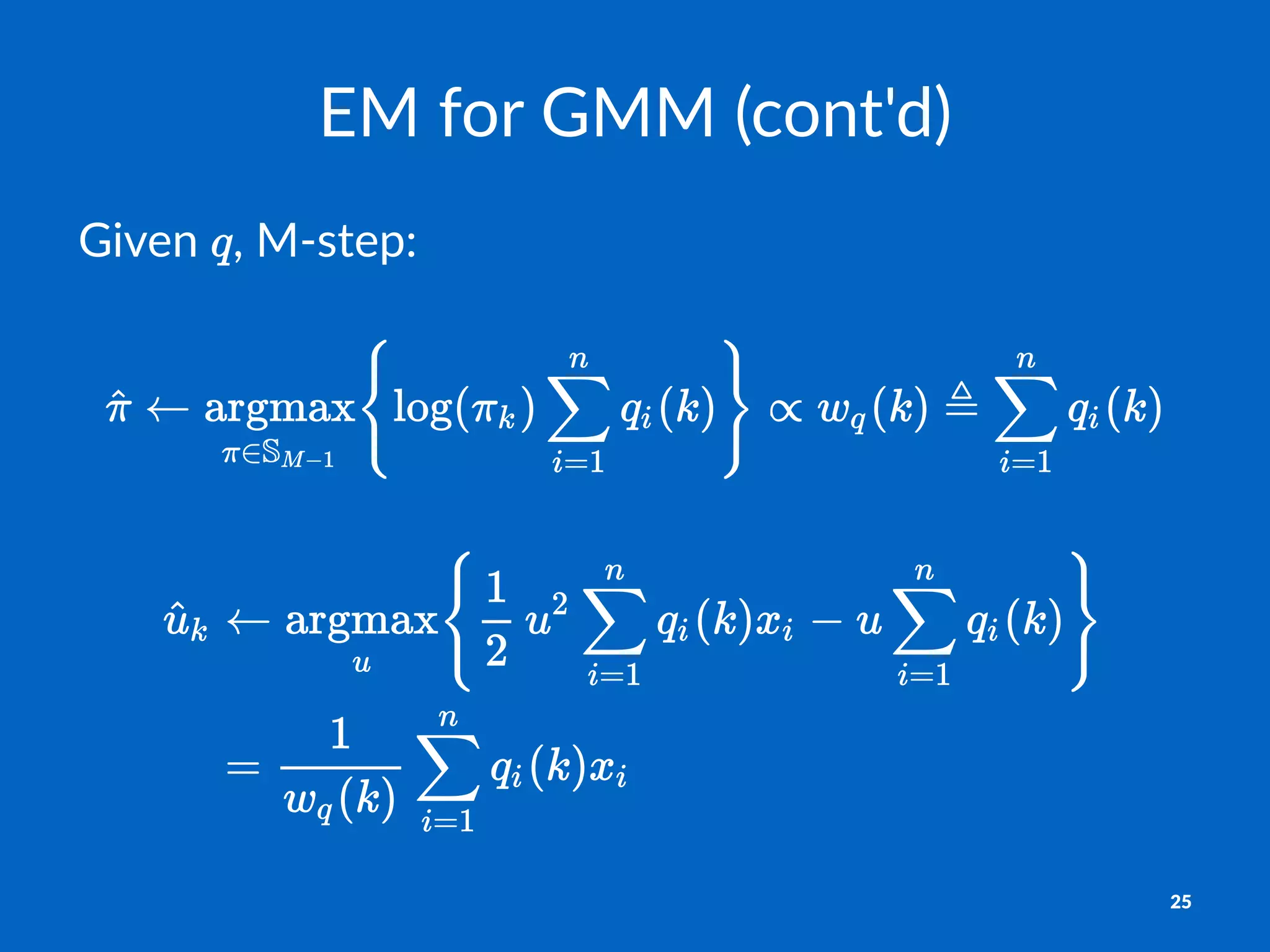

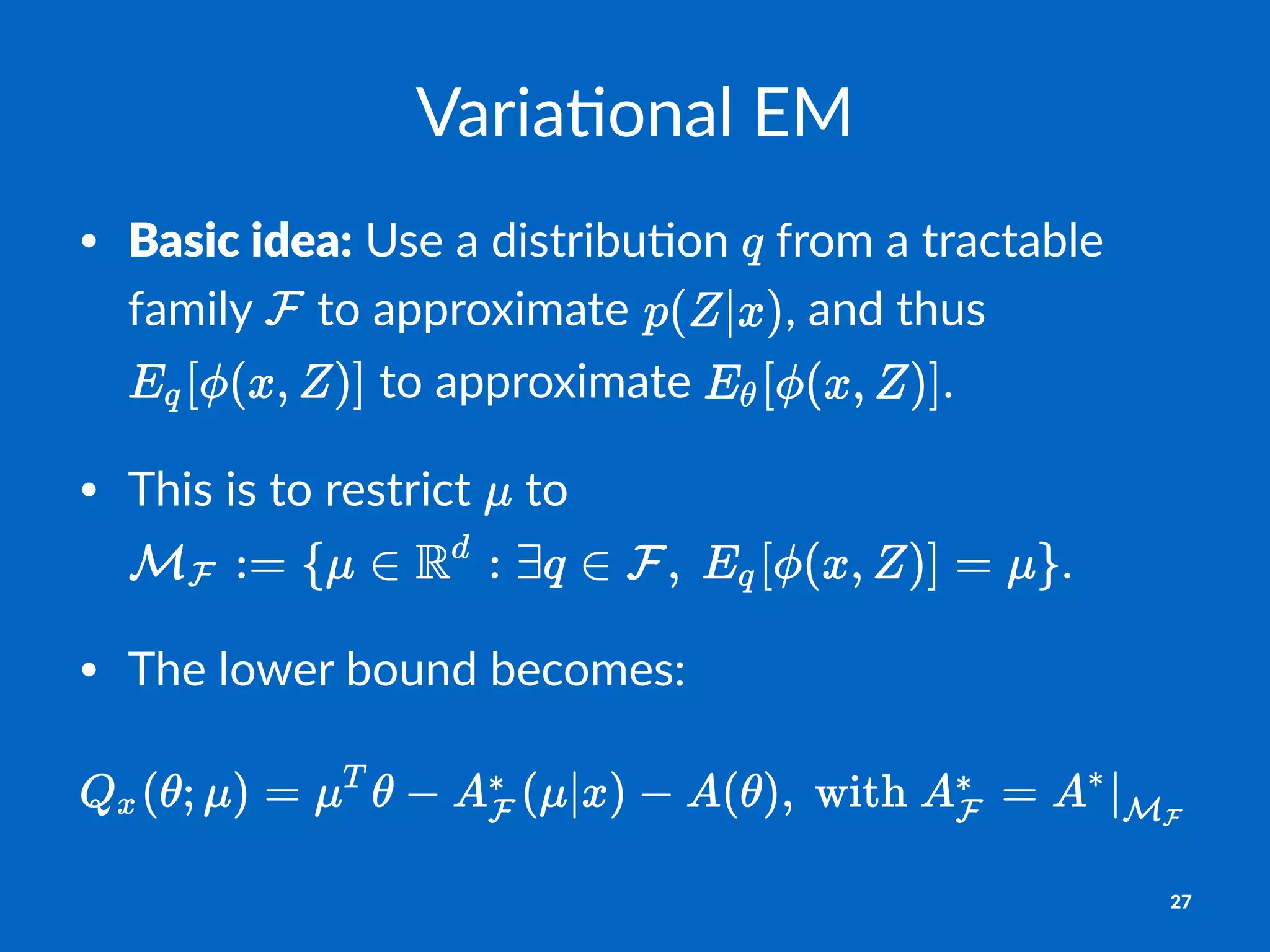

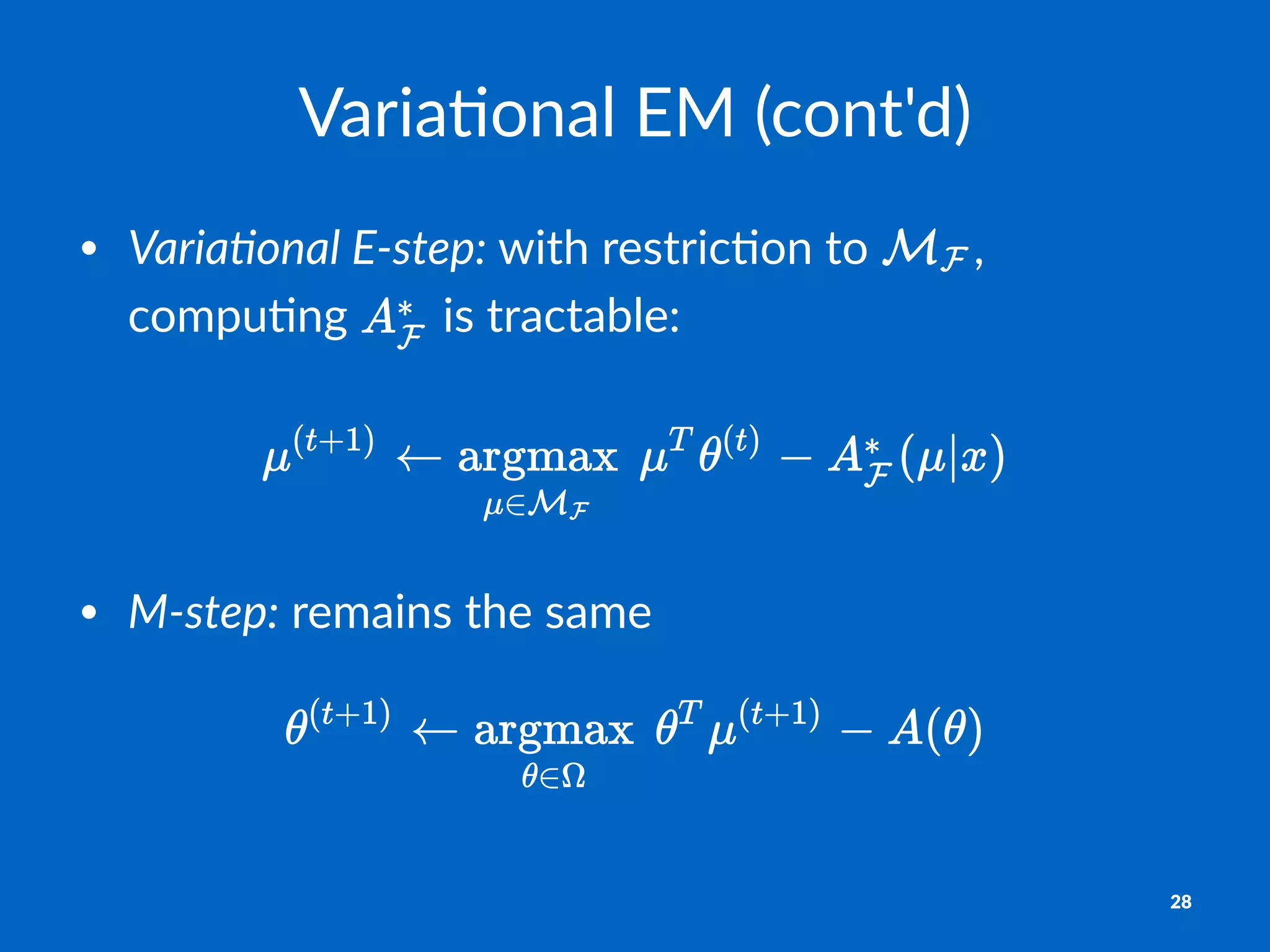

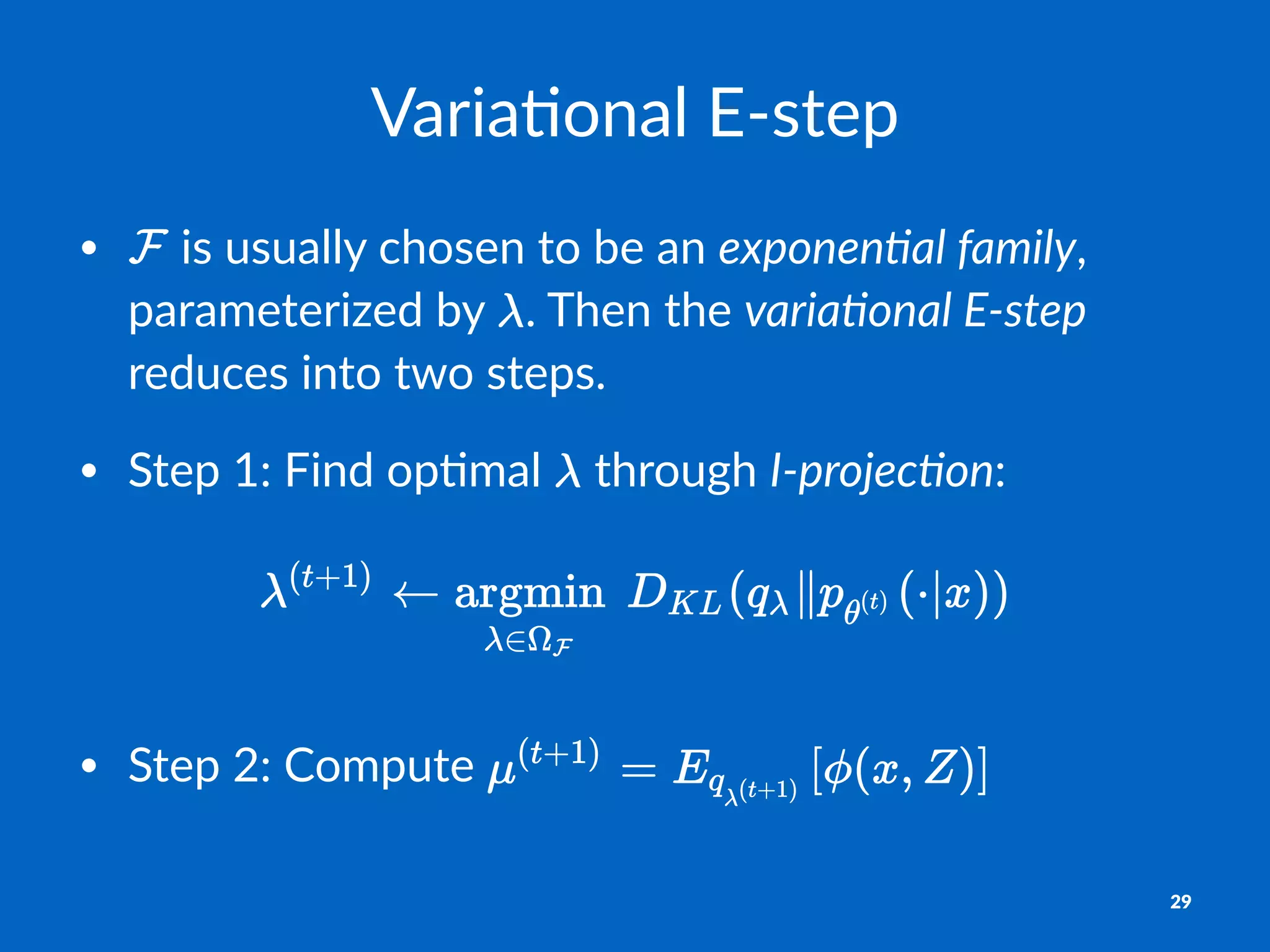

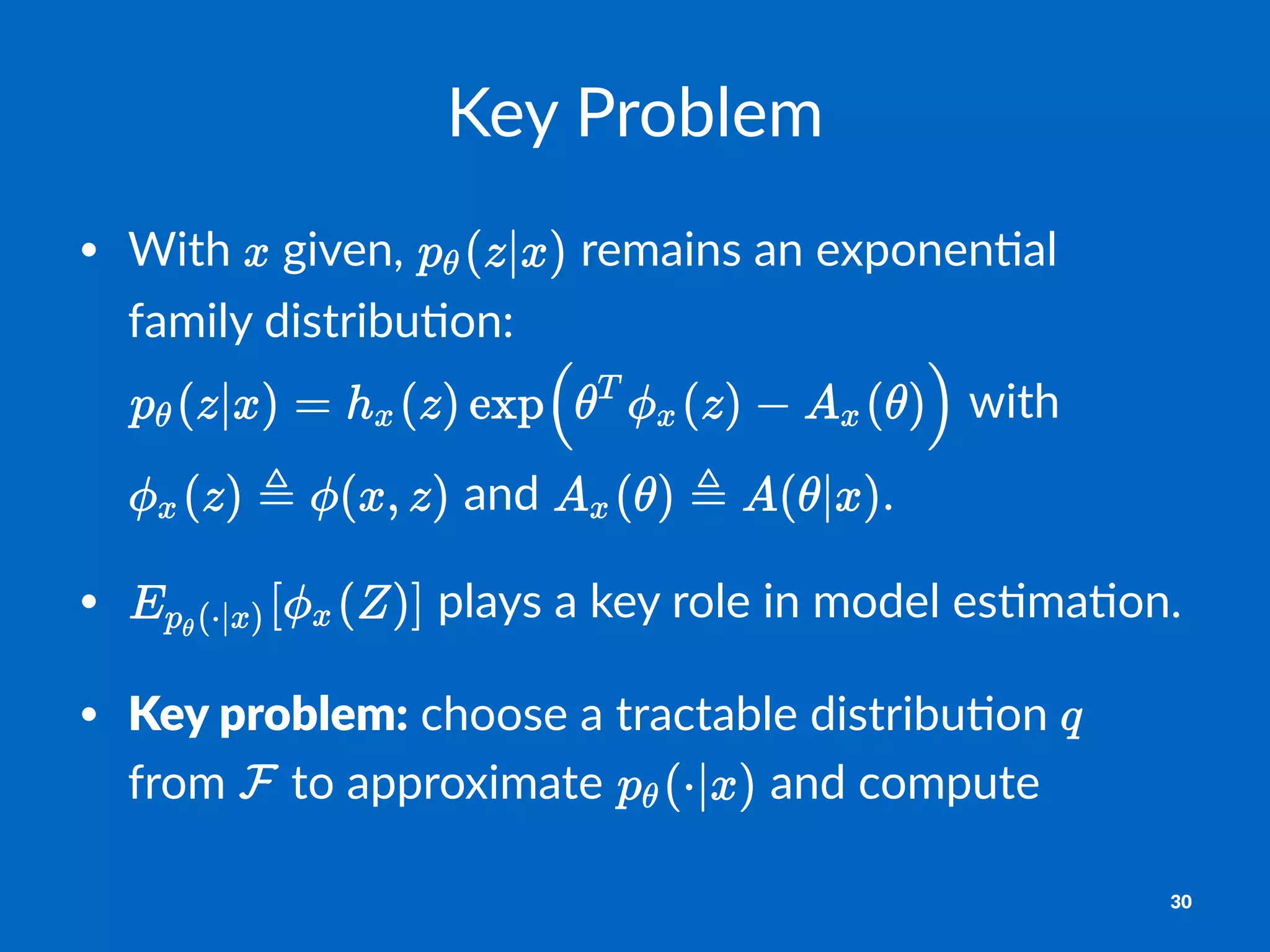

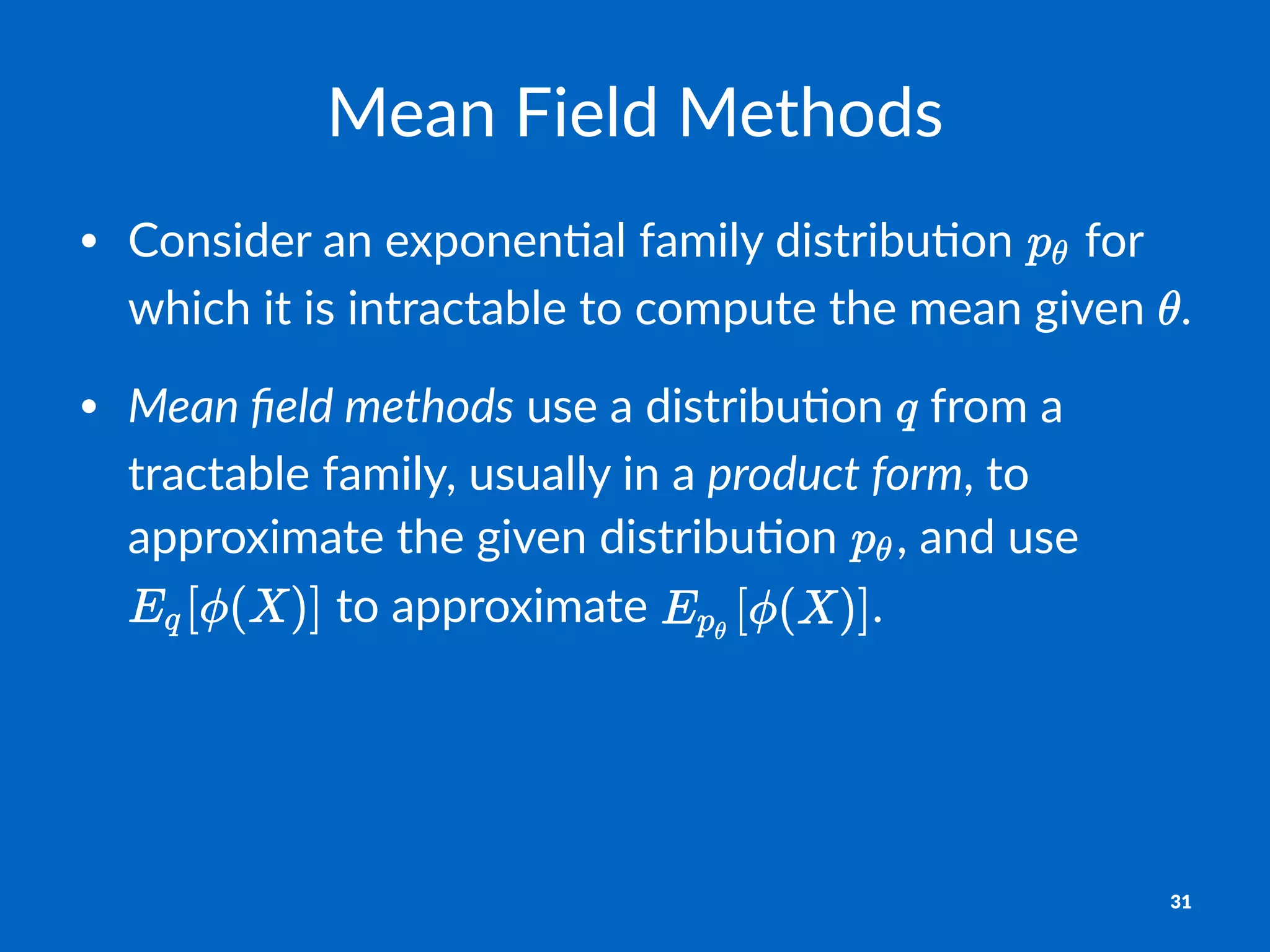

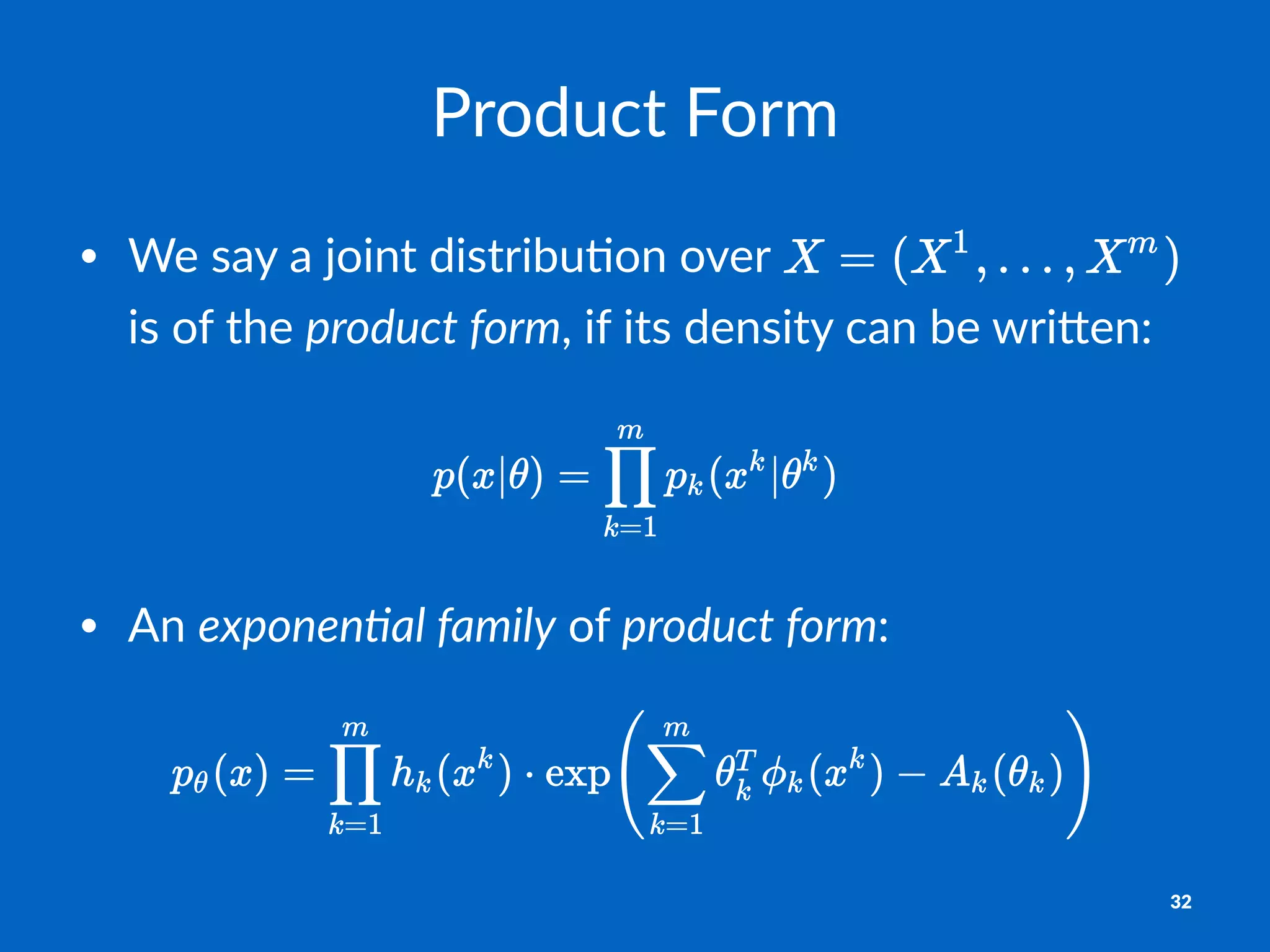

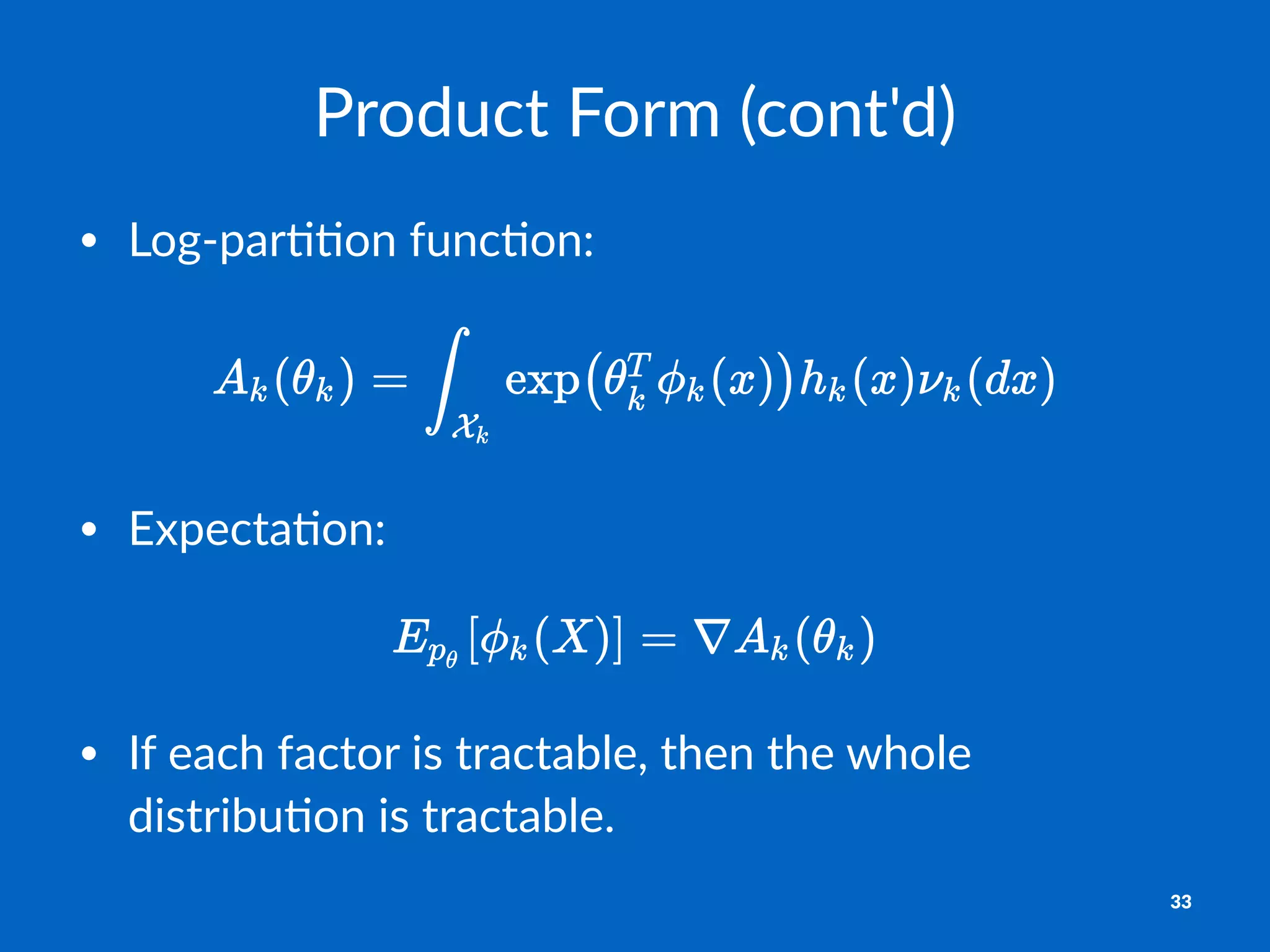

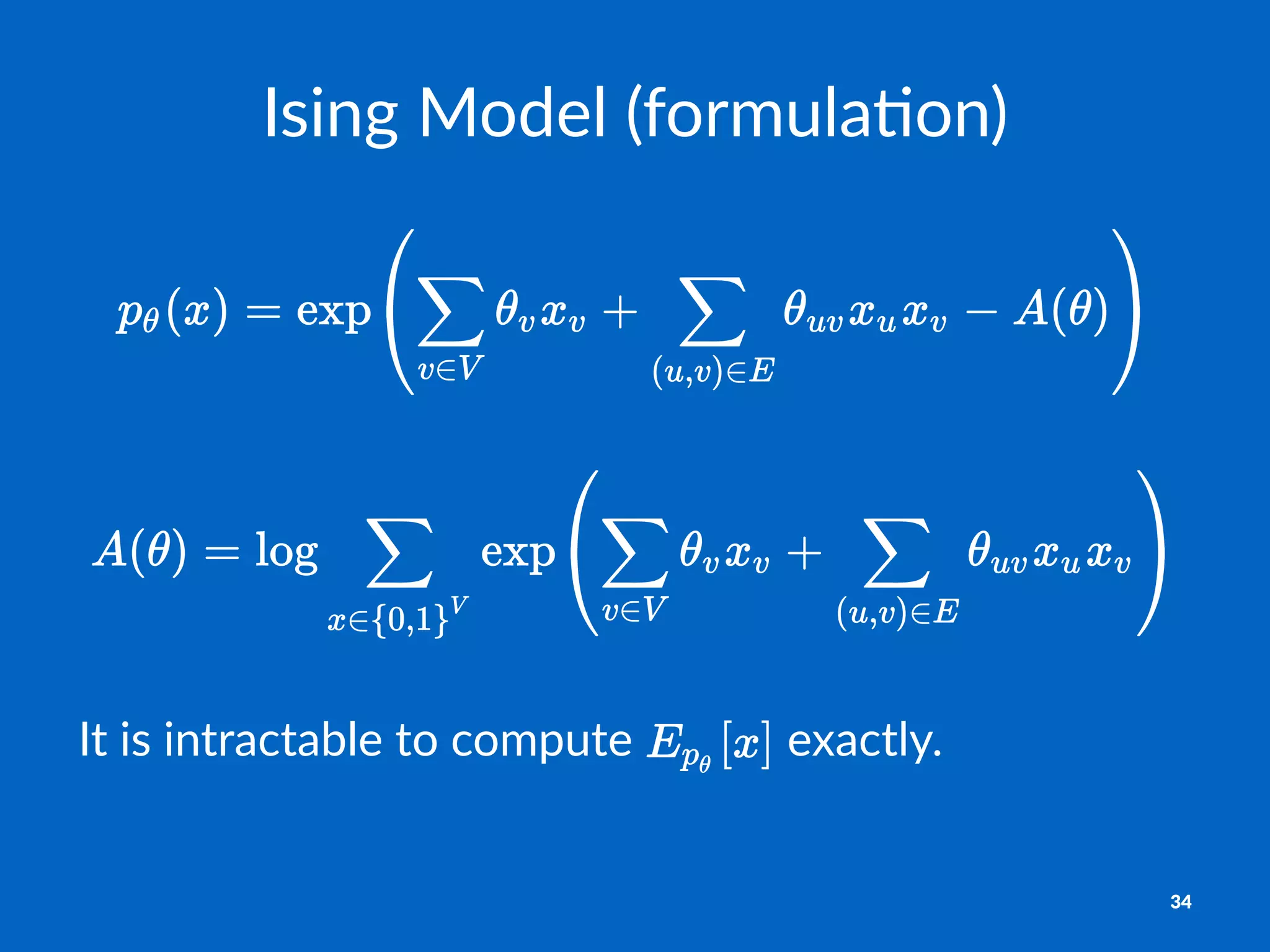

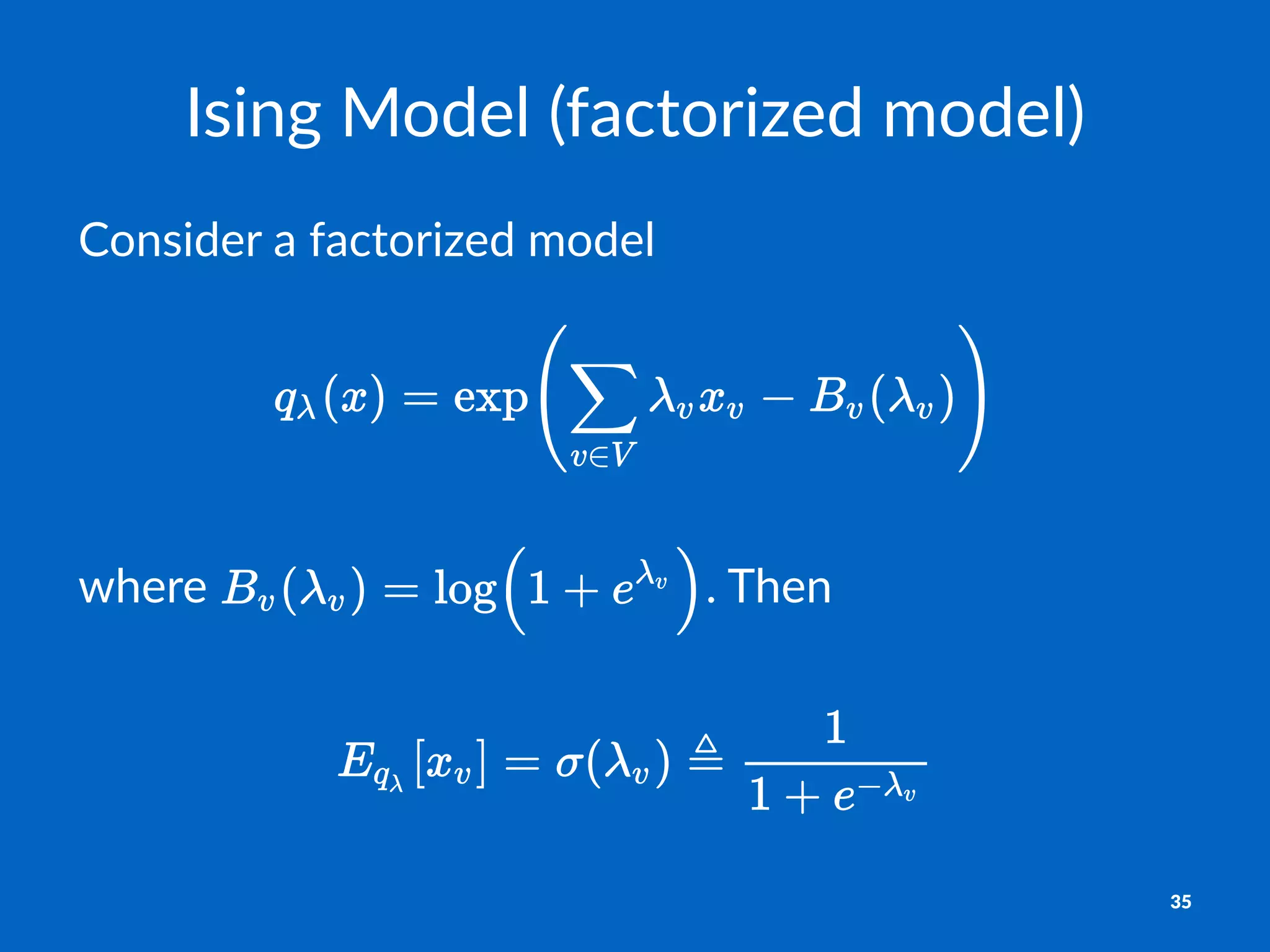

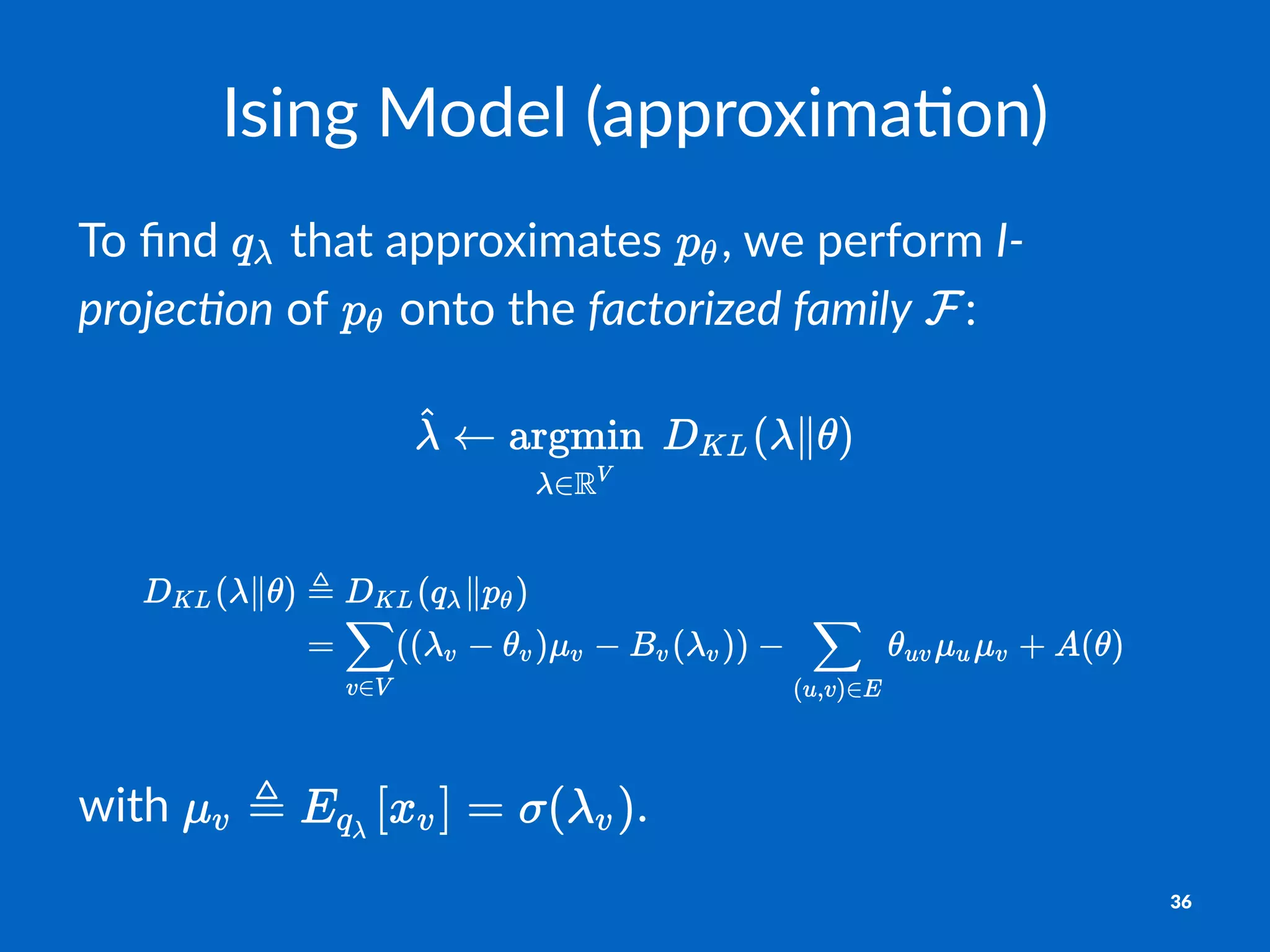

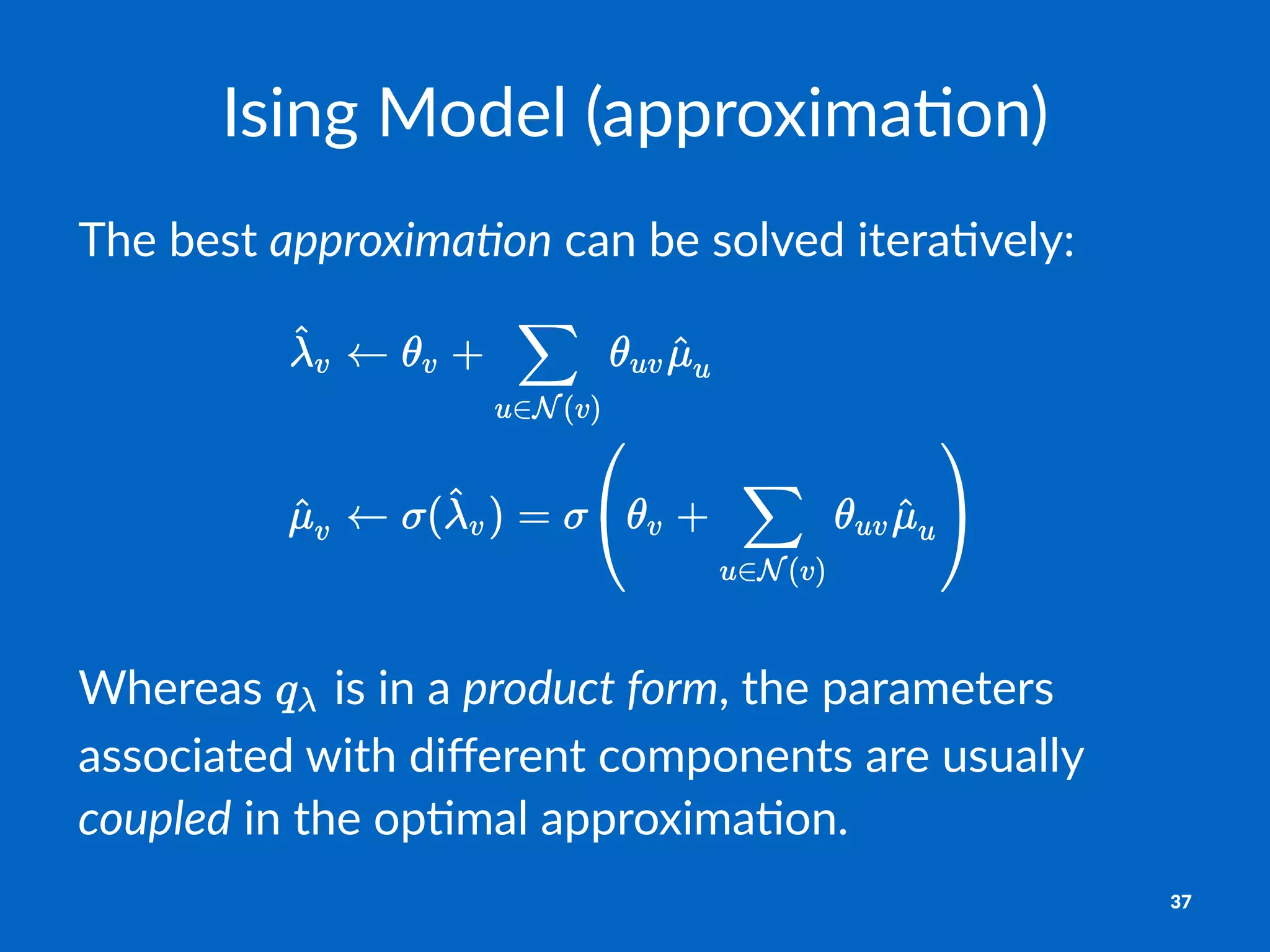

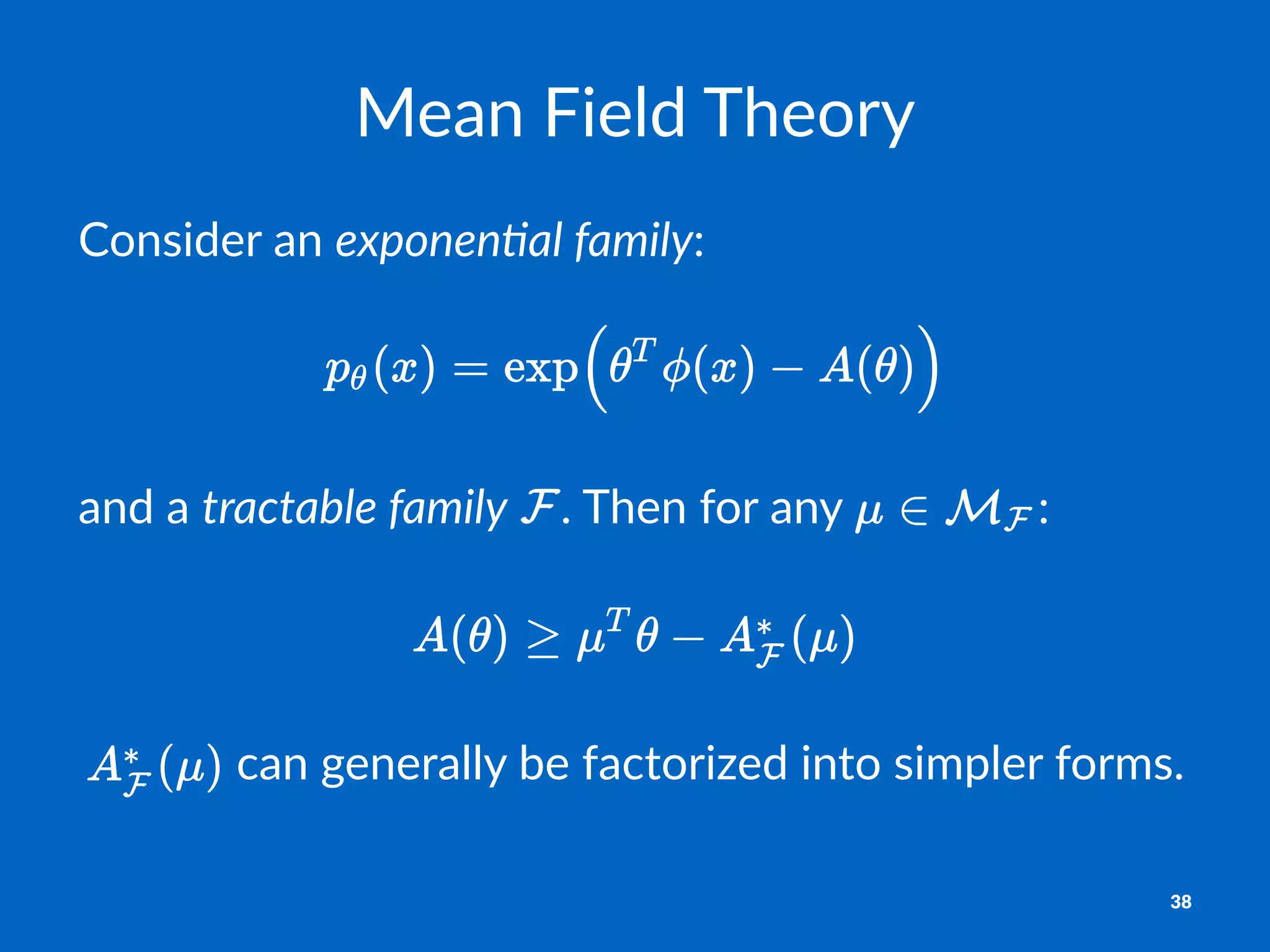

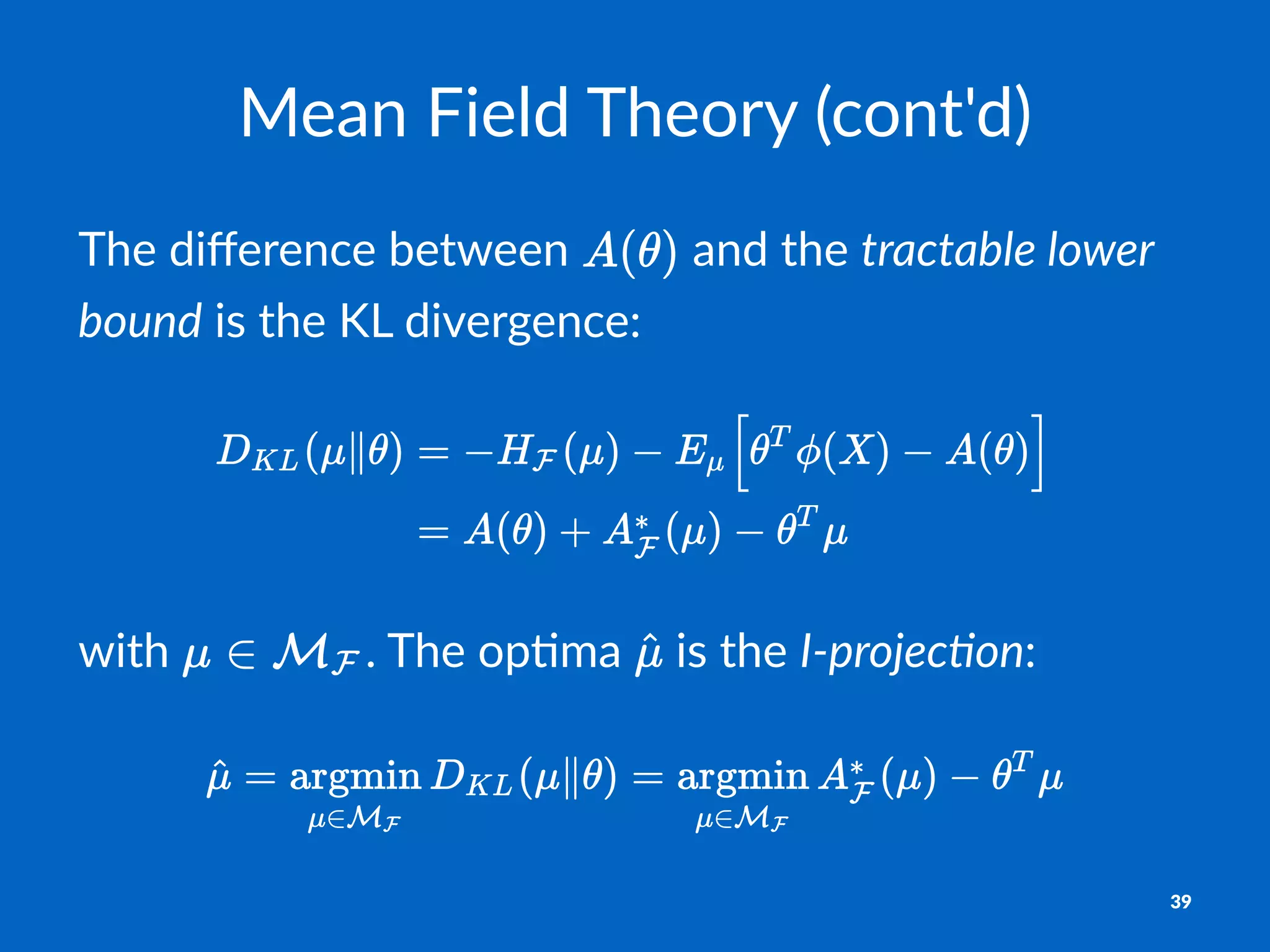

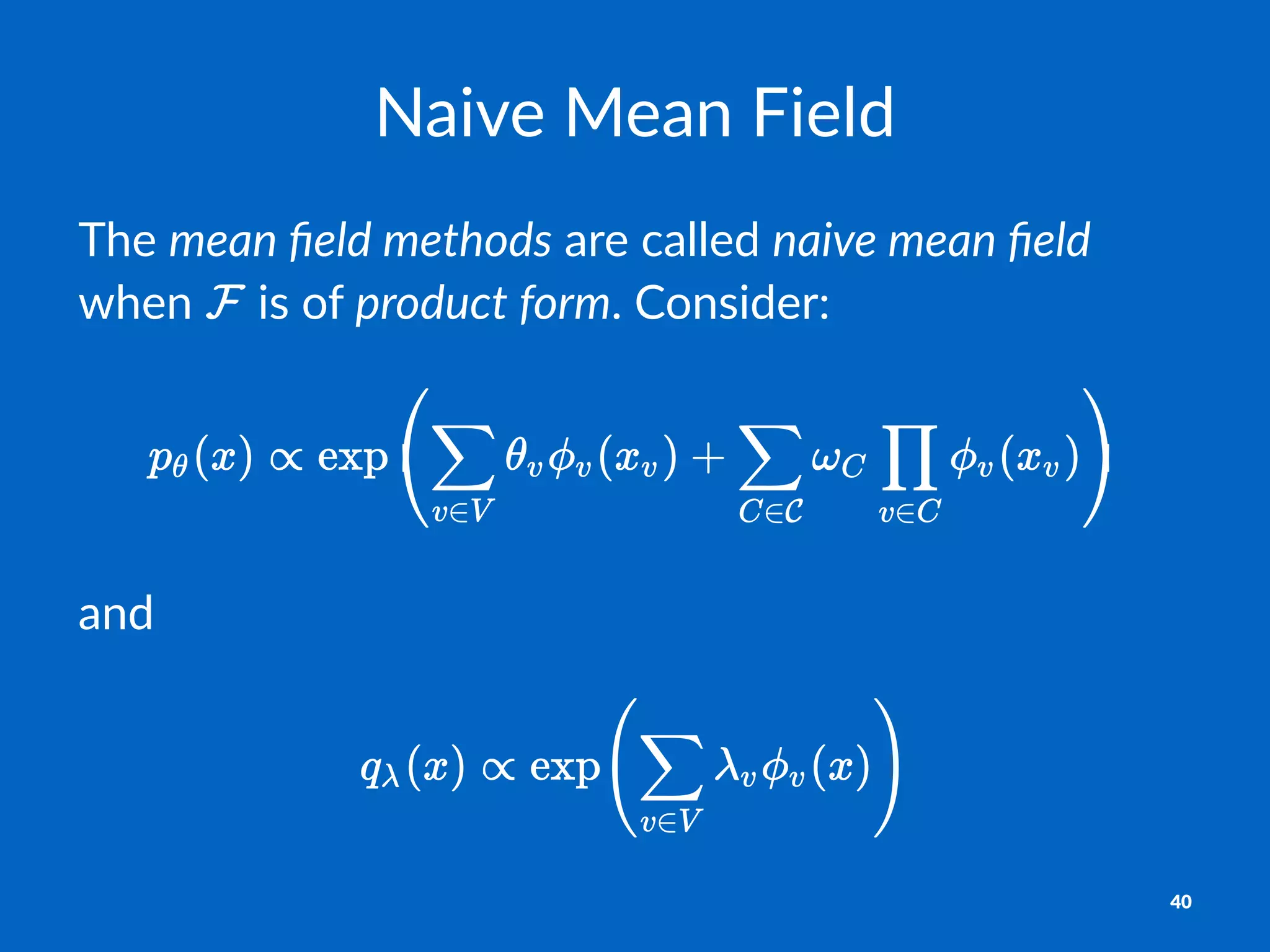

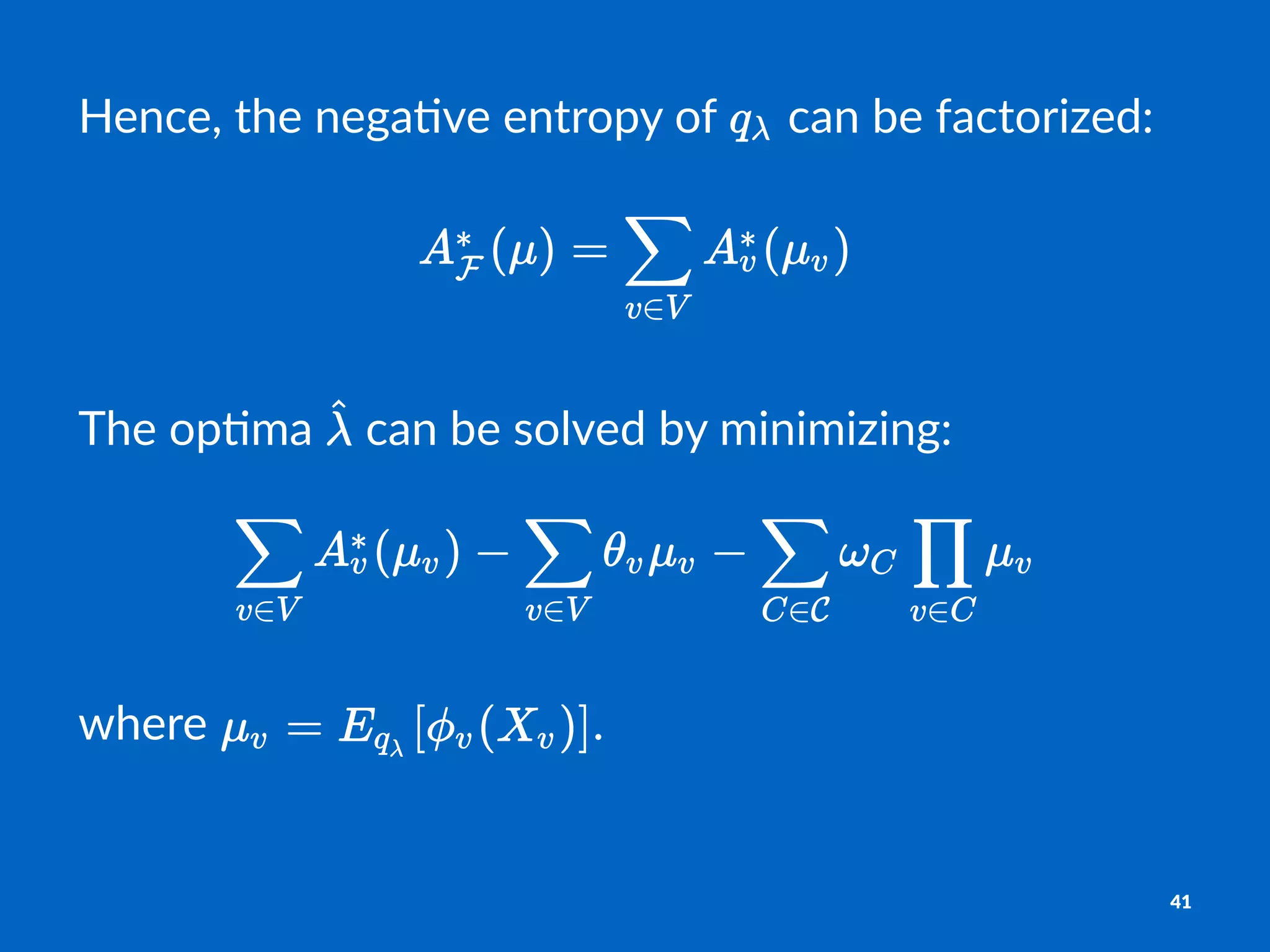

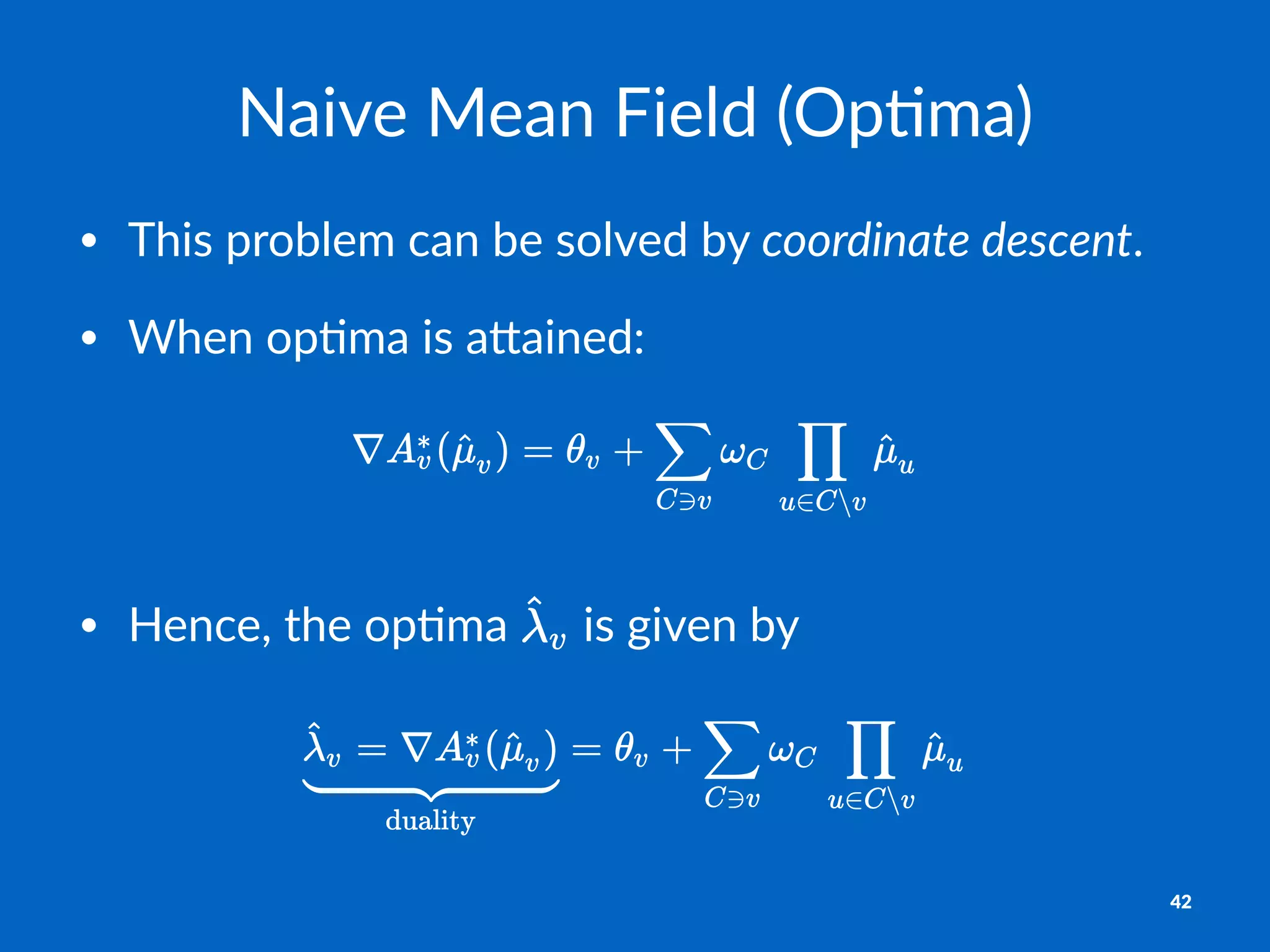

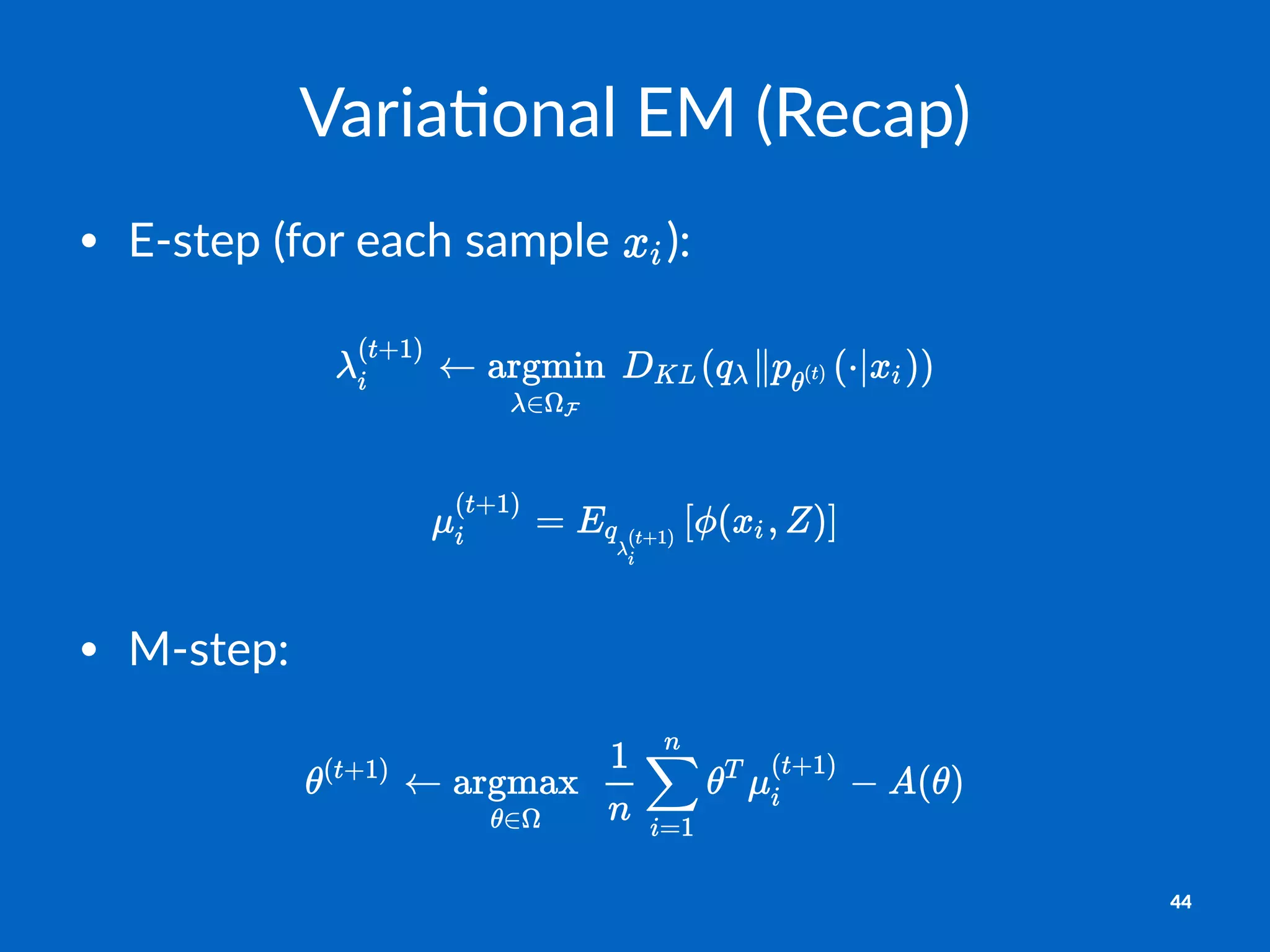

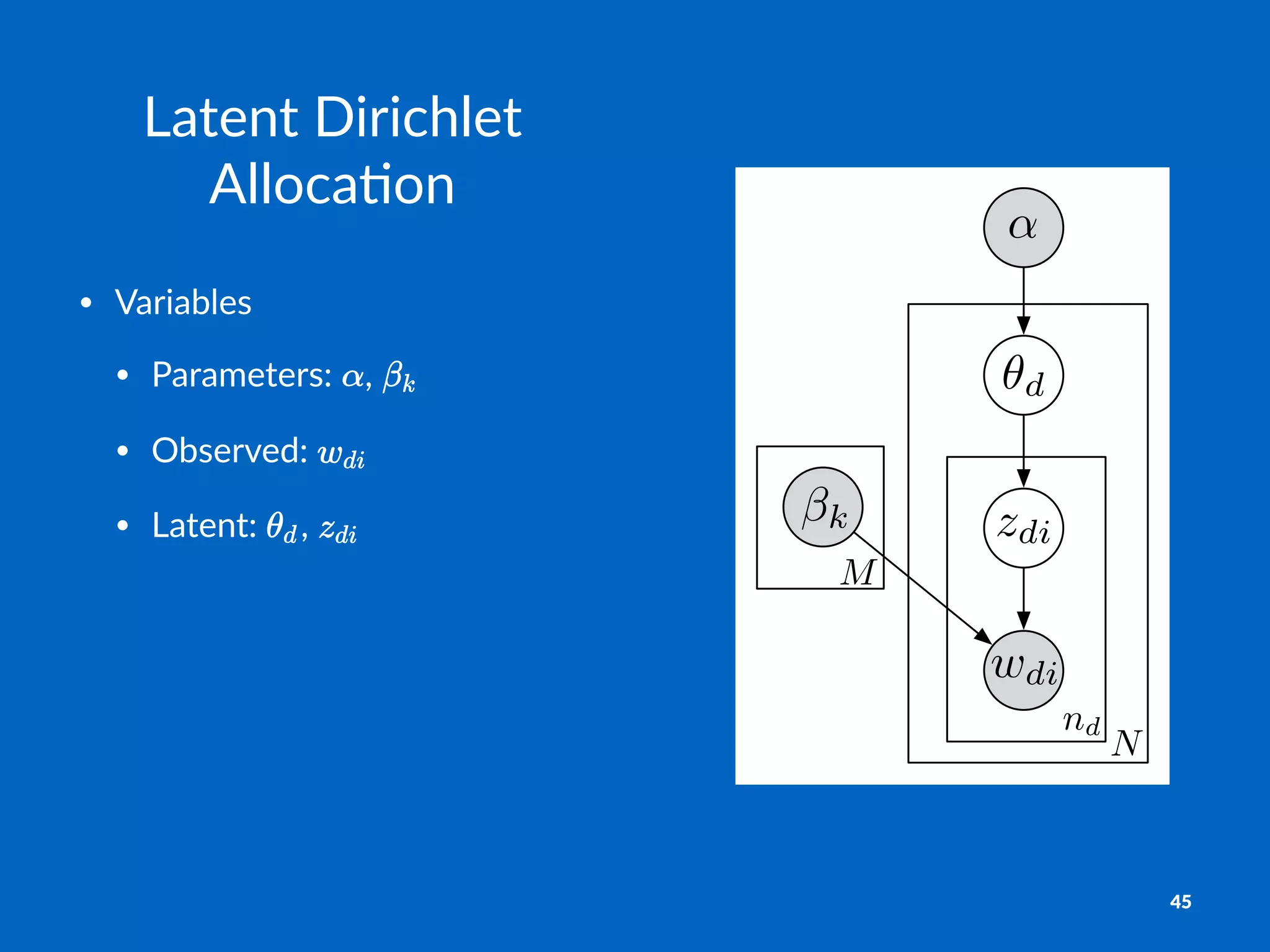

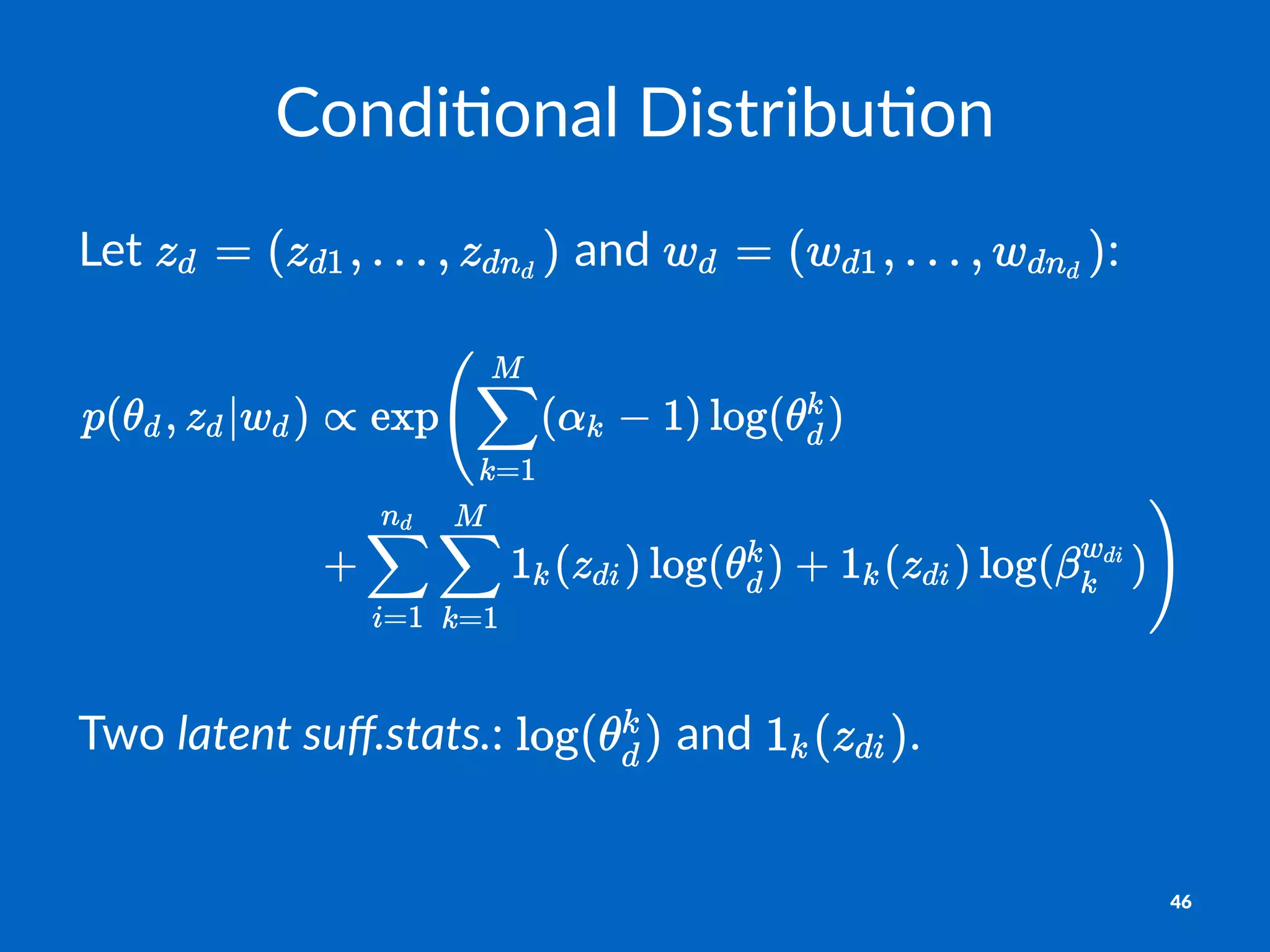

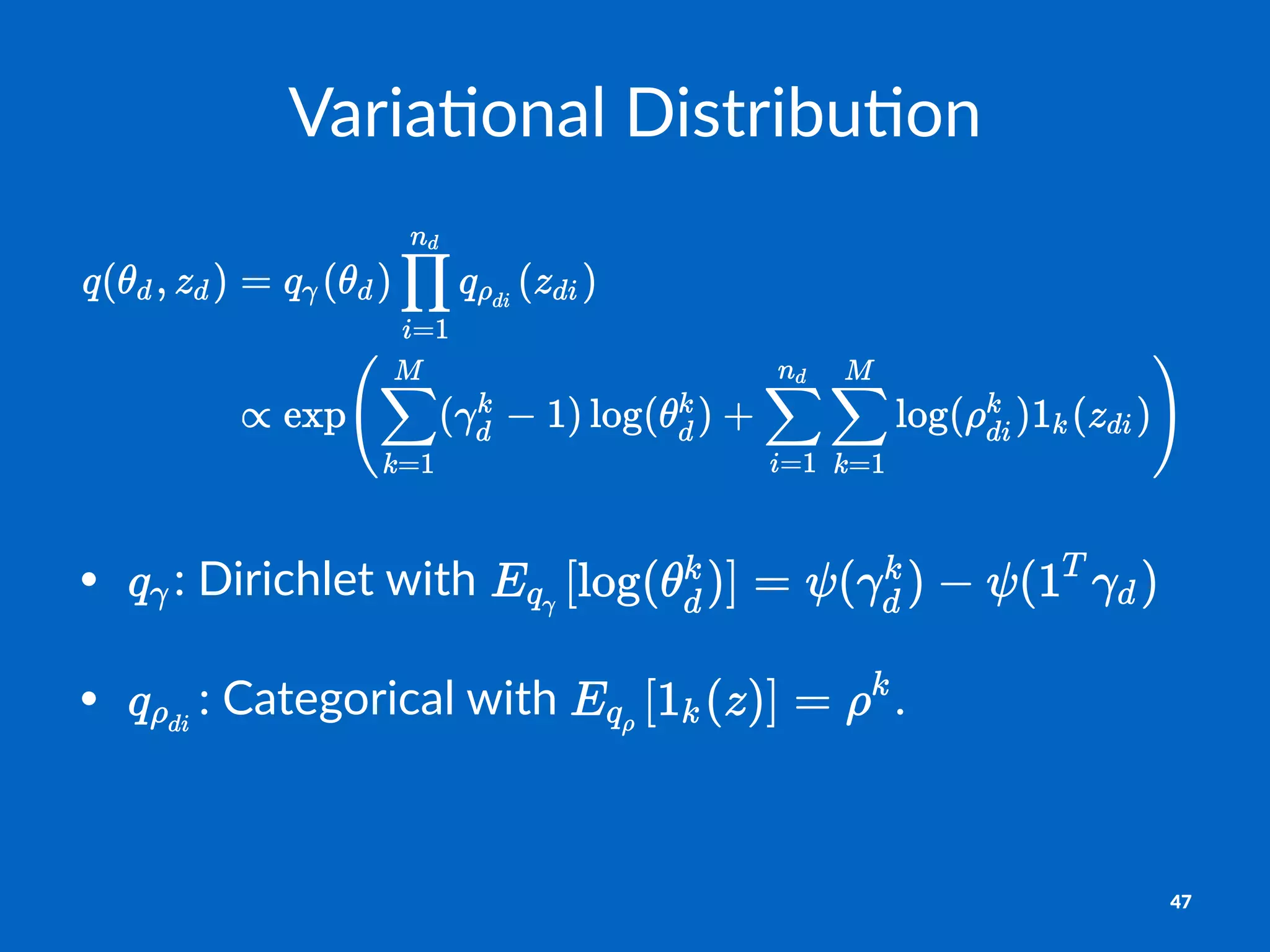

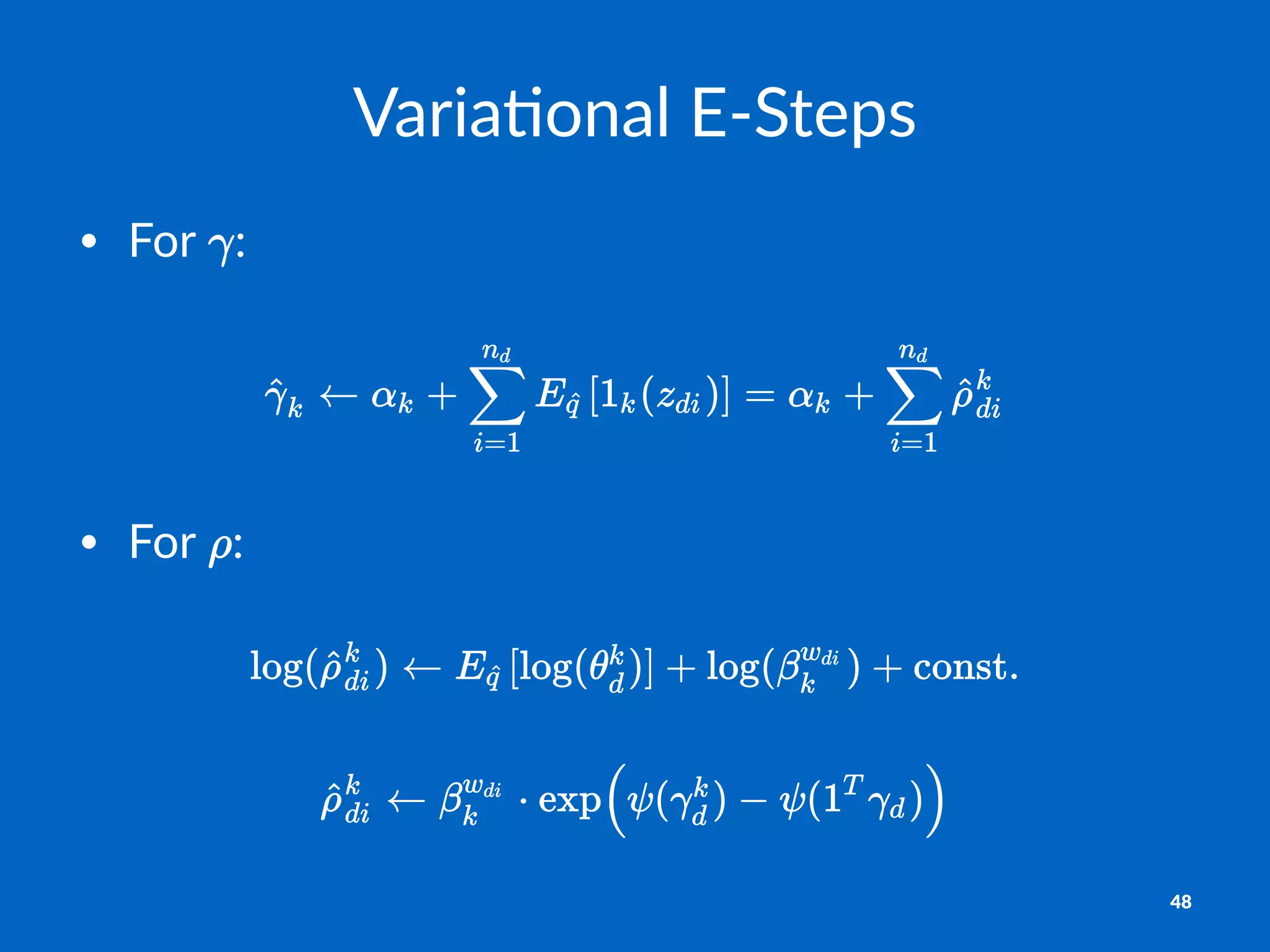

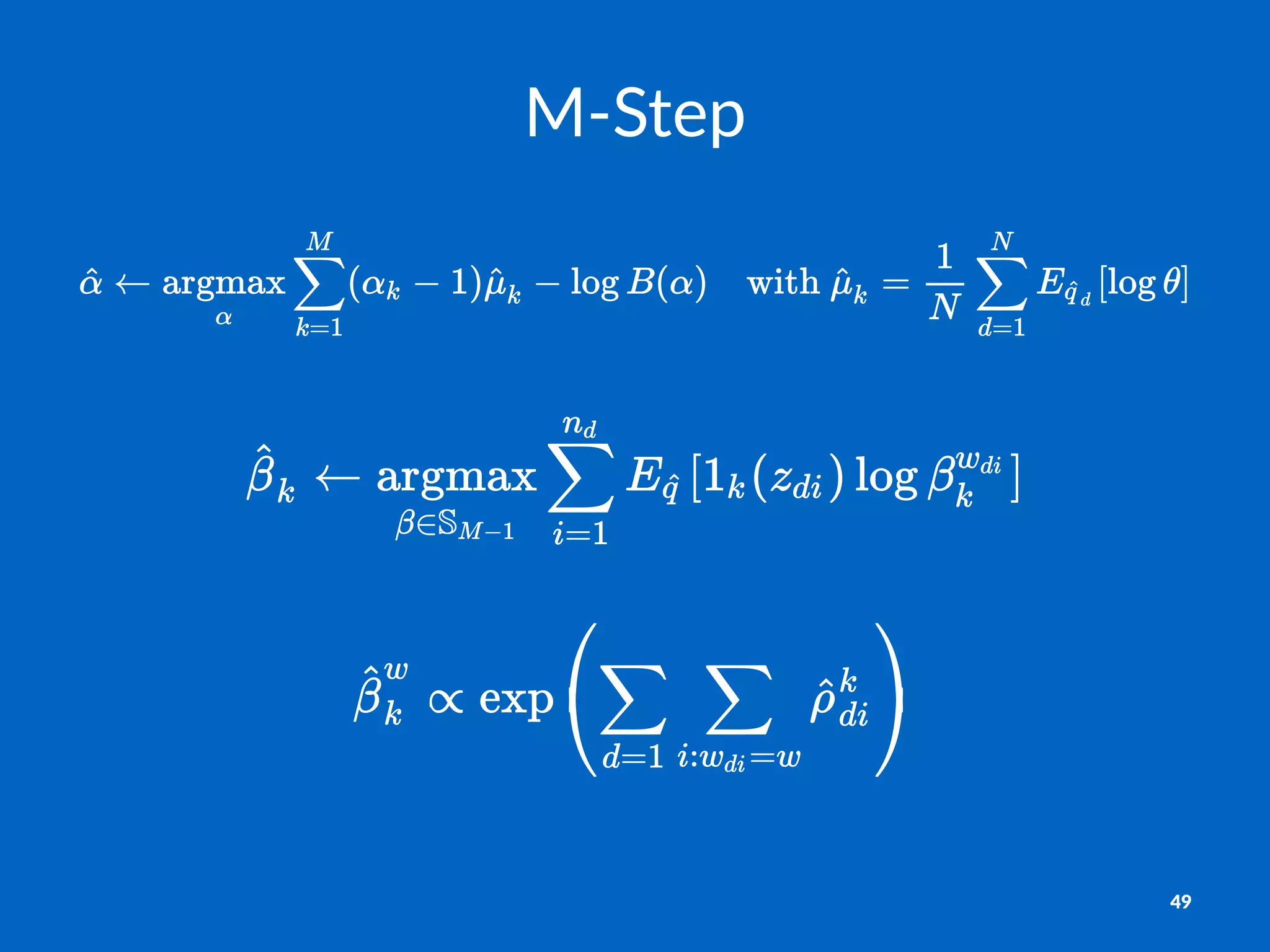

The document discusses variational estimation and inference techniques for exponential family models. Its focus is estimation from partial observations using Expectation-Maximization (EM) and variational EM. It covers conditional log-partition functions and maximum-likelihood estimation with latent variables. It also introduces mean field methods for approximating expectations that are intractable to compute exactly. Finally, it outlines strategies for handling observed and latent variables during estimation.
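Below is a minimal sketch of EM with latent variables. It is not taken from the slides: the model (a one-dimensional mixture of Gaussians), the helper name `em_gmm_1d`, and the quantile-based initialization are all illustrative assumptions. In this model the component labels are the latent variables. The E-step computes the exact posterior over those labels, which is the case where variational EM reduces to standard EM. The M-step maximizes the expected complete-data log-likelihood in closed form.

```python
import numpy as np


def log_normal(x, mu, var):
    """Elementwise log-density of N(mu, var)."""
    return -0.5 * (np.log(2.0 * np.pi * var) + (x - mu) ** 2 / var)


def em_gmm_1d(x, k=2, n_iter=100):
    """Hypothetical helper: EM for a 1-D Gaussian mixture with k components."""
    n = x.shape[0]
    # Assumed initialization: equal weights, means at spread-out quantiles,
    # every variance set to the overall sample variance.
    pi = np.full(k, 1.0 / k)
    mu = np.quantile(x, np.linspace(0.25, 0.75, k))
    var = np.full(k, x.var())
    trace = []  # observed-data log-likelihood at the start of each iteration
    for _ in range(n_iter):
        # E-step: exact posterior q(z_i = j) = p(z_i = j | x_i; theta).
        log_joint = np.log(pi) + log_normal(x[:, None], mu, var)  # shape (n, k)
        log_marginal = np.logaddexp.reduce(log_joint, axis=1)     # log p(x_i; theta)
        resp = np.exp(log_joint - log_marginal[:, None])          # responsibilities
        trace.append(log_marginal.sum())
        # M-step: closed-form maximizer of the expected complete-data log-likelihood.
        nk = resp.sum(axis=0)
        pi = nk / n
        mu = (resp * x[:, None]).sum(axis=0) / nk
        var = (resp * (x[:, None] - mu) ** 2).sum(axis=0) / nk
    return pi, mu, var, trace


# Usage on synthetic data drawn from two well-separated components.
rng = np.random.default_rng(1)
x = np.concatenate([rng.normal(-2.0, 1.0, 500), rng.normal(3.0, 0.5, 500)])
pi, mu, var, trace = em_gmm_1d(x)
print("weights:", pi, "means:", mu, "variances:", var)
```

EM never decreases the observed-data log-likelihood, and the recorded `trace` lets you check this directly. The sketch omits safeguards such as a variance floor, so it can degenerate if a component collapses onto a single point. When the posterior in the E-step is intractable, variational EM replaces it with an approximation from a restricted family, such as a fully factorized mean field distribution, and maximizes a lower bound instead.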