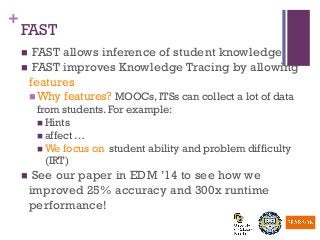

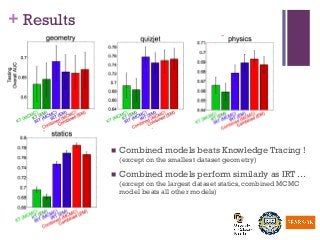

This is a follow-up to [FAST](http://educationaldatamining.org/EDM2014/uploads/procs2014/long%20papers/84_EDM-2014-Full.pdf), which was nominated for the best paper award at the Educational Data Mining 2014 (EDM 2014) conference. The code is available at http://ml-smores.github.io/fast/.