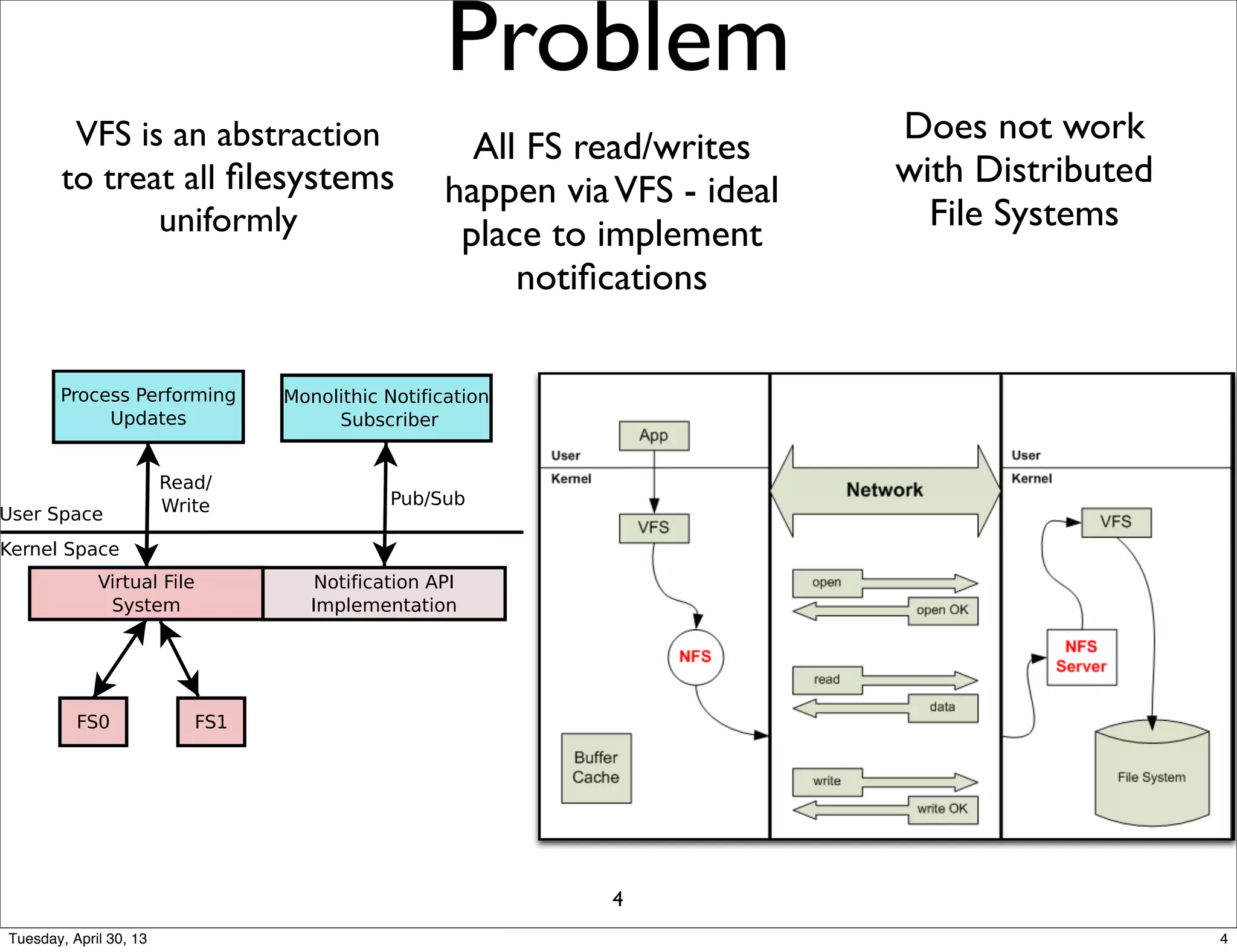

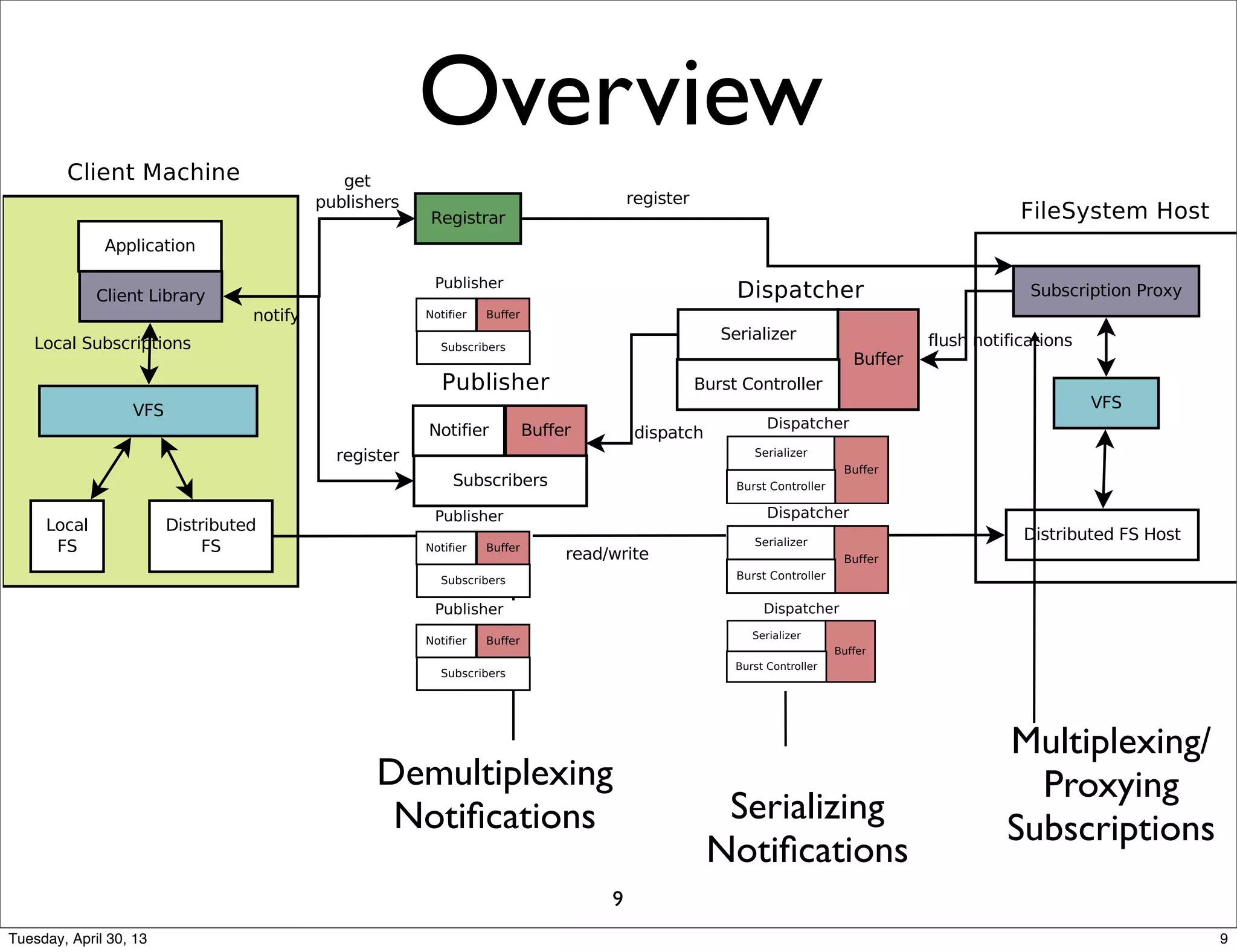

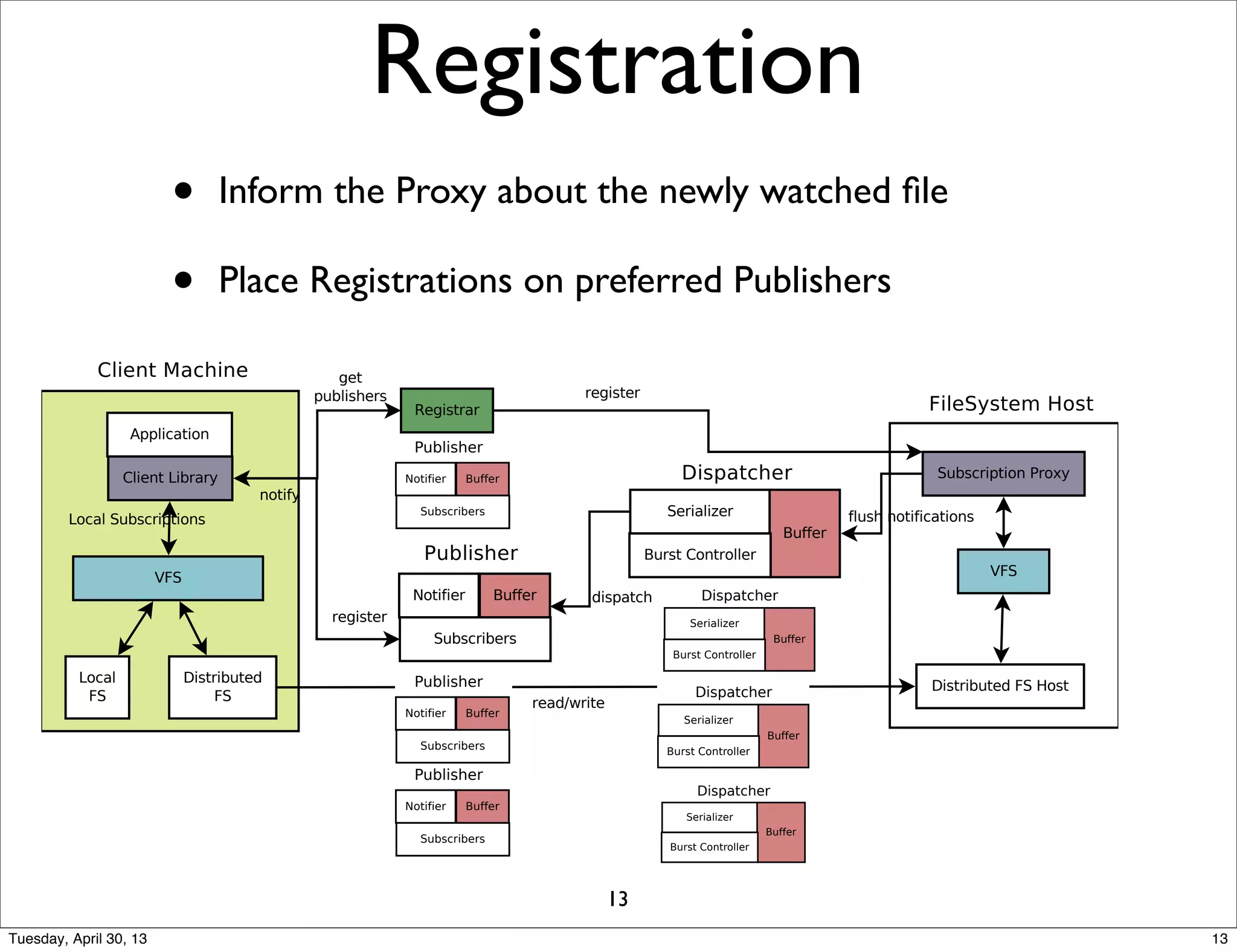

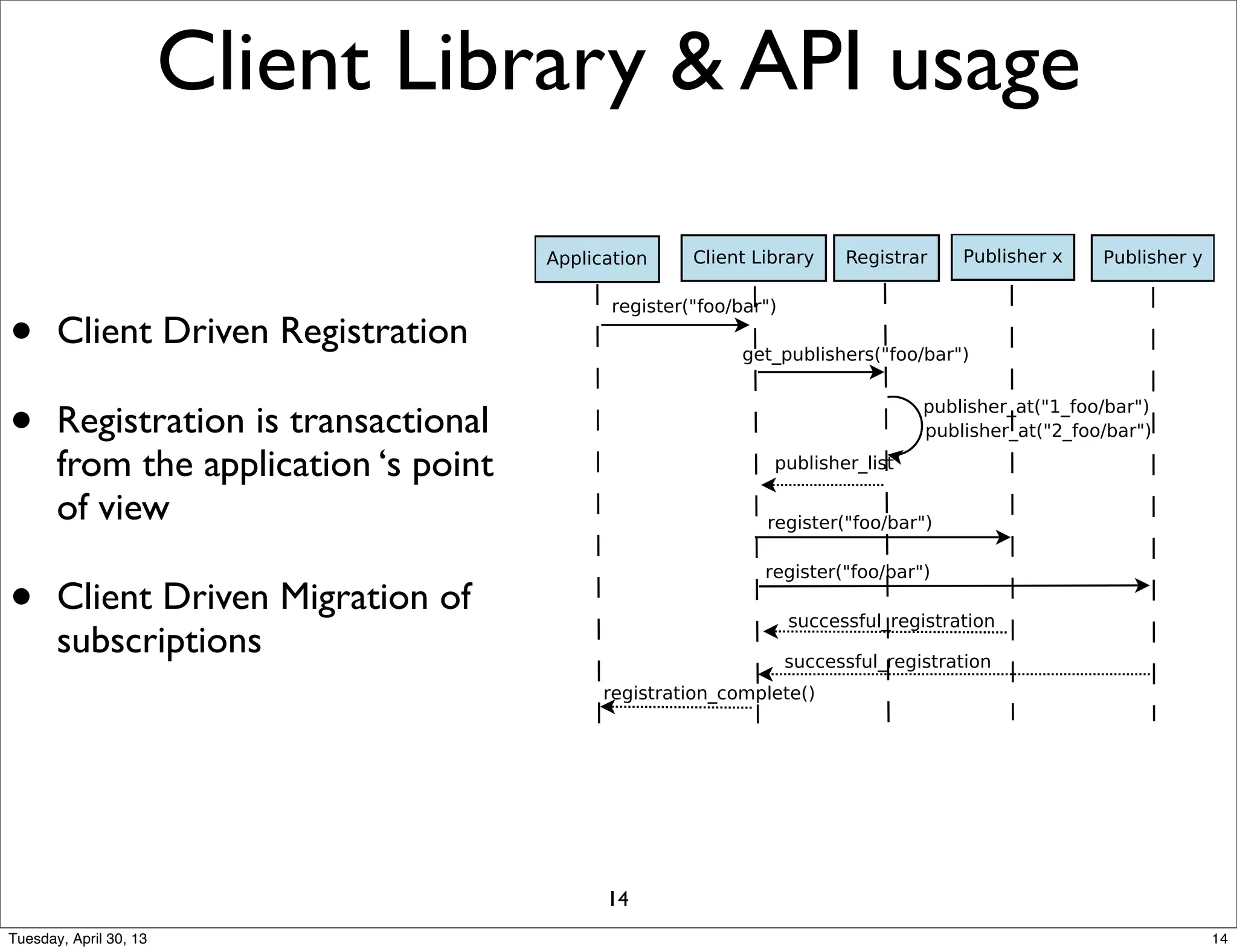

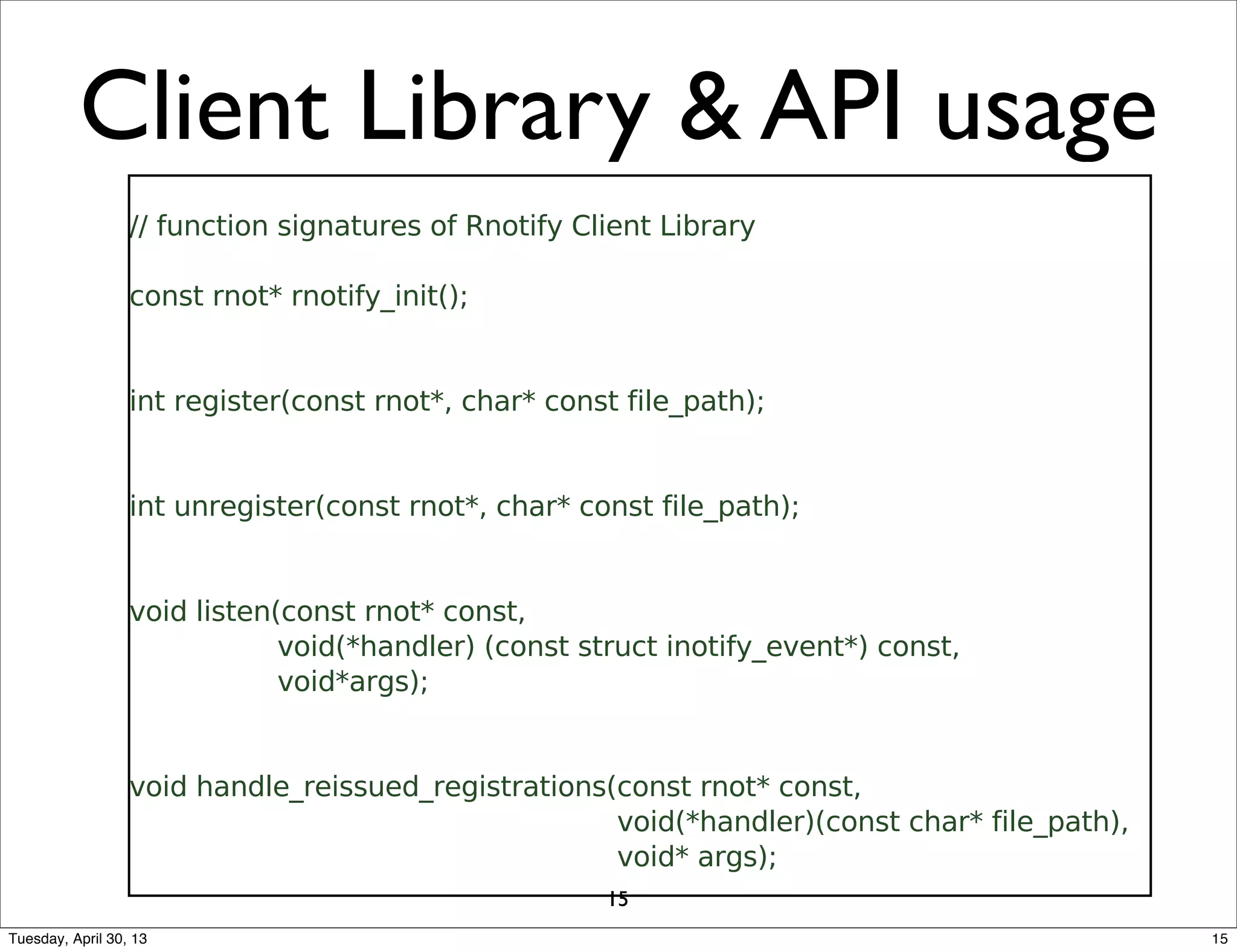

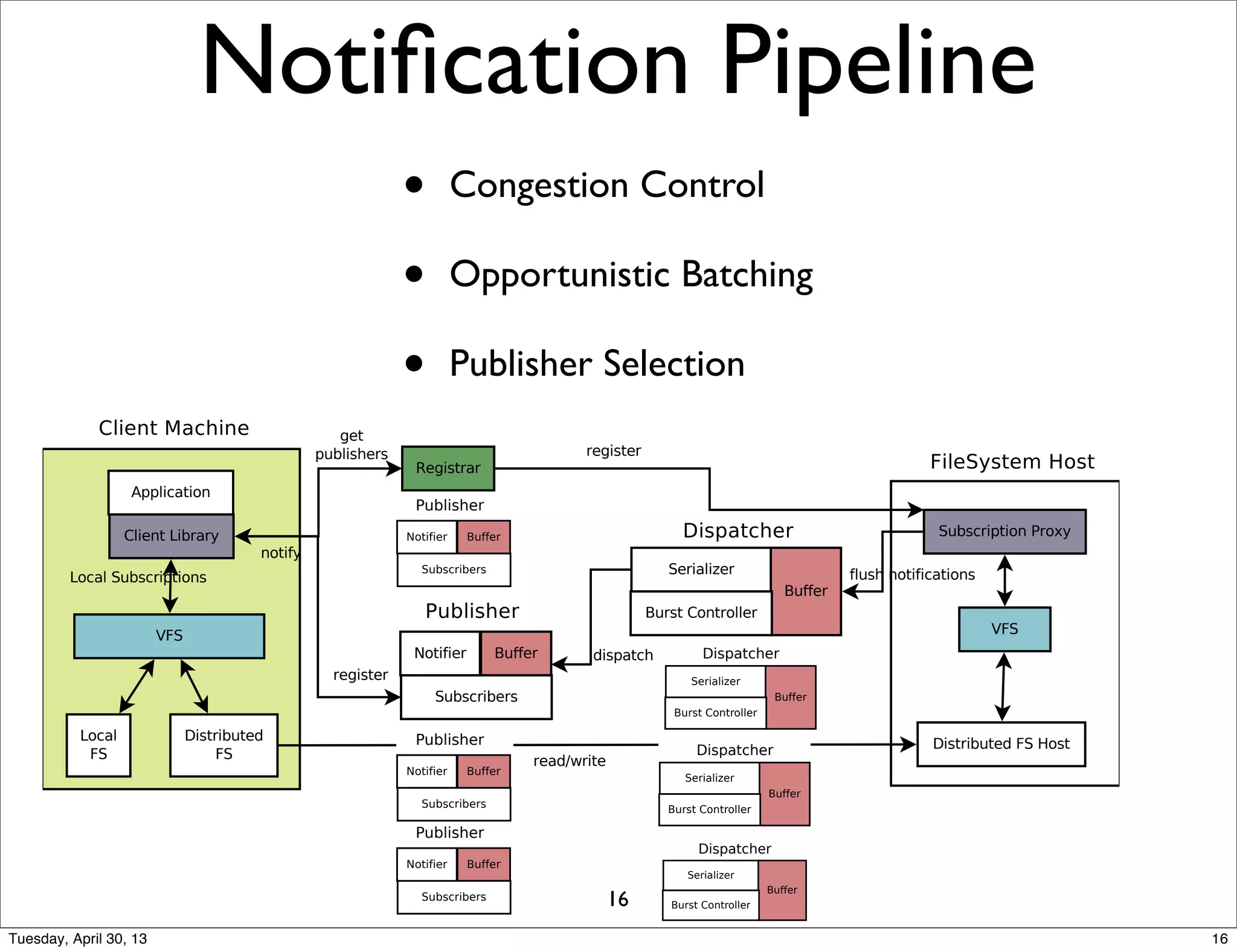

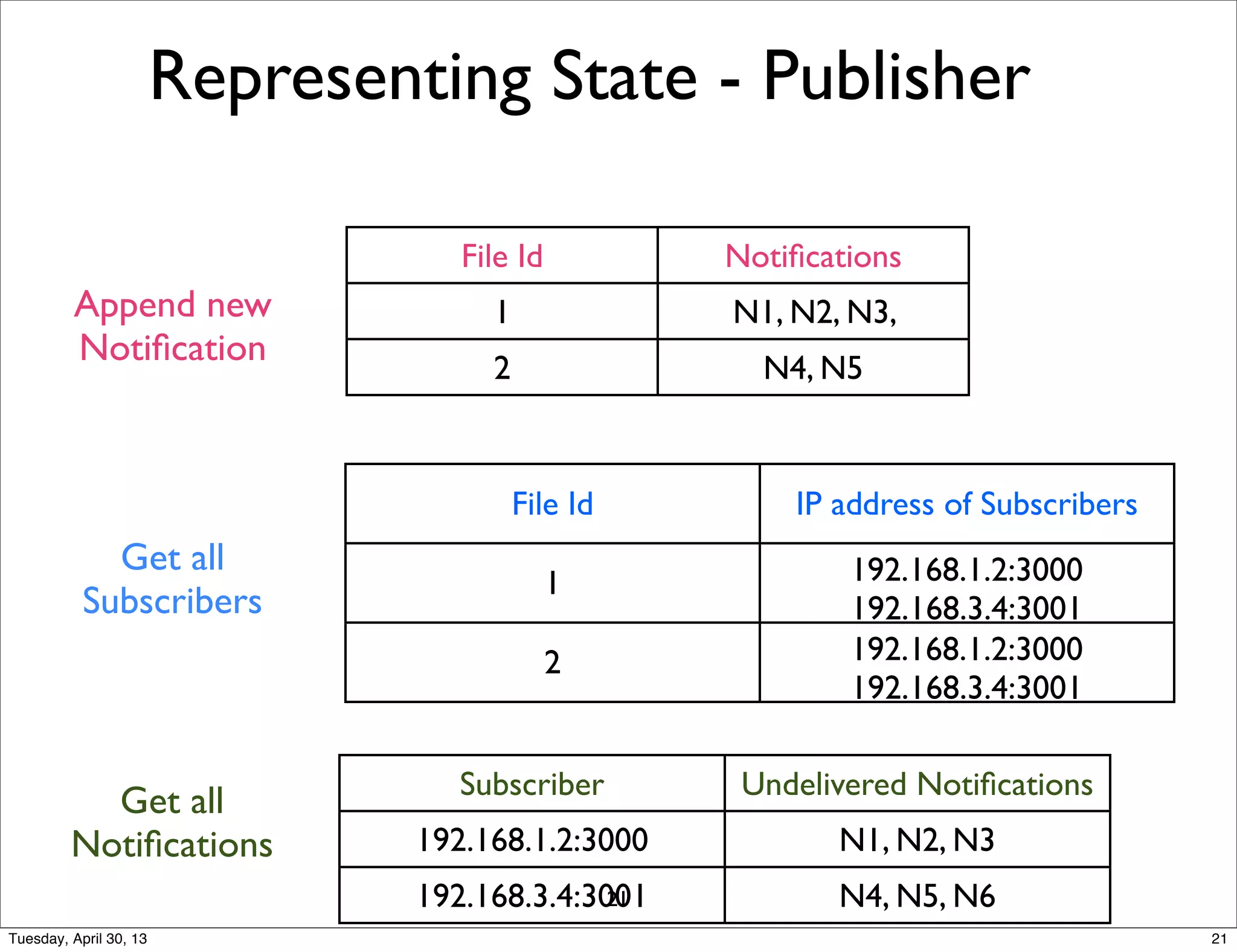

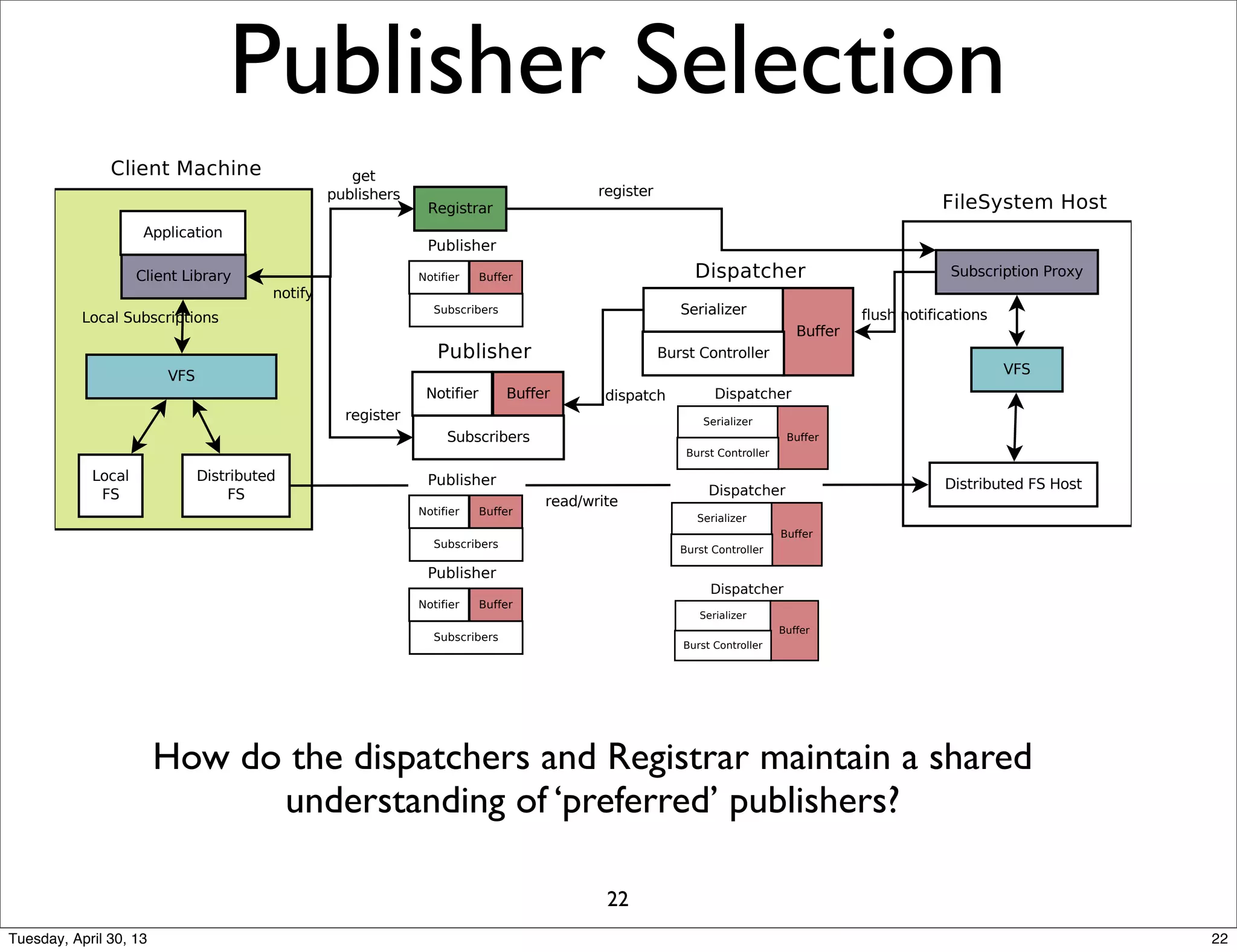

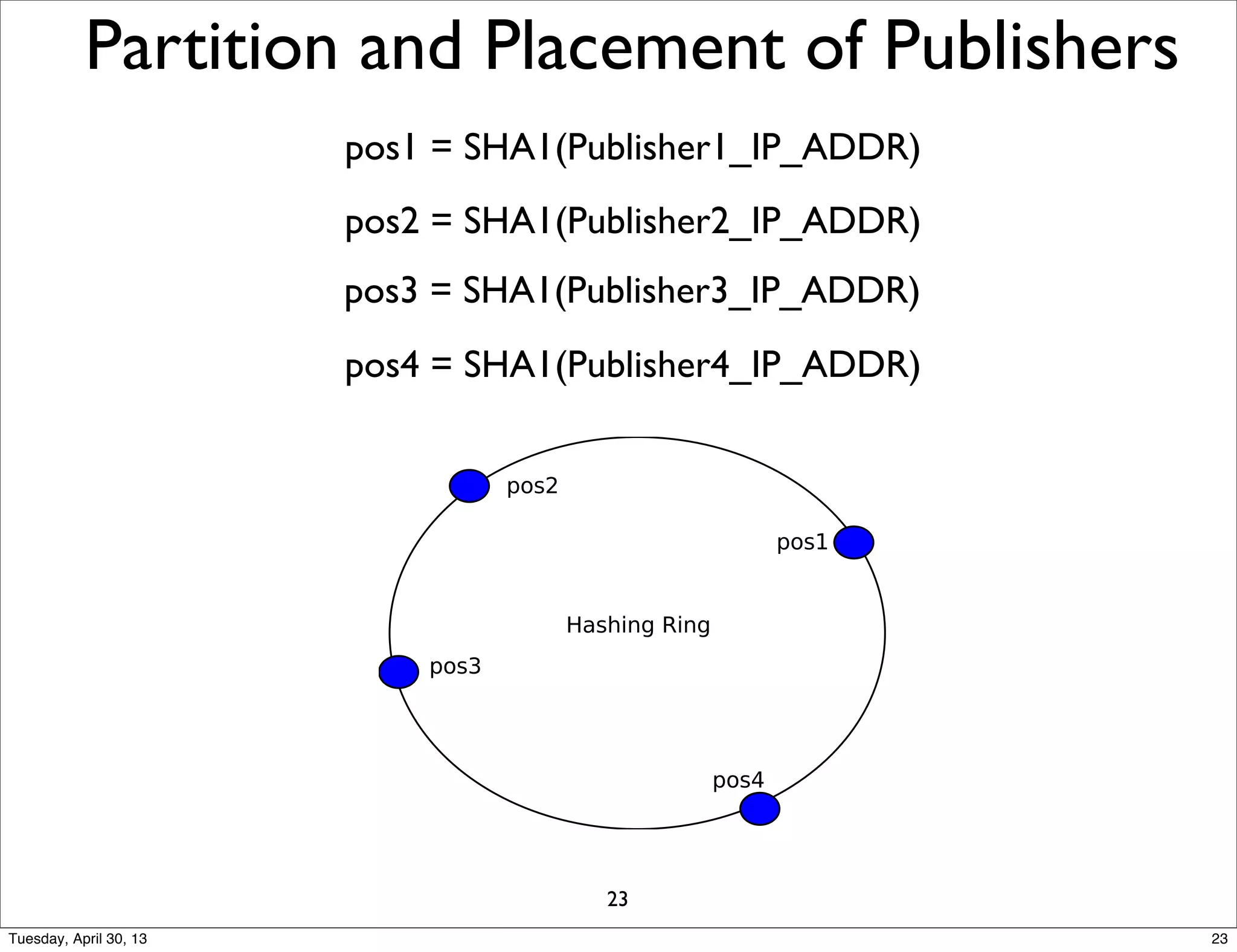

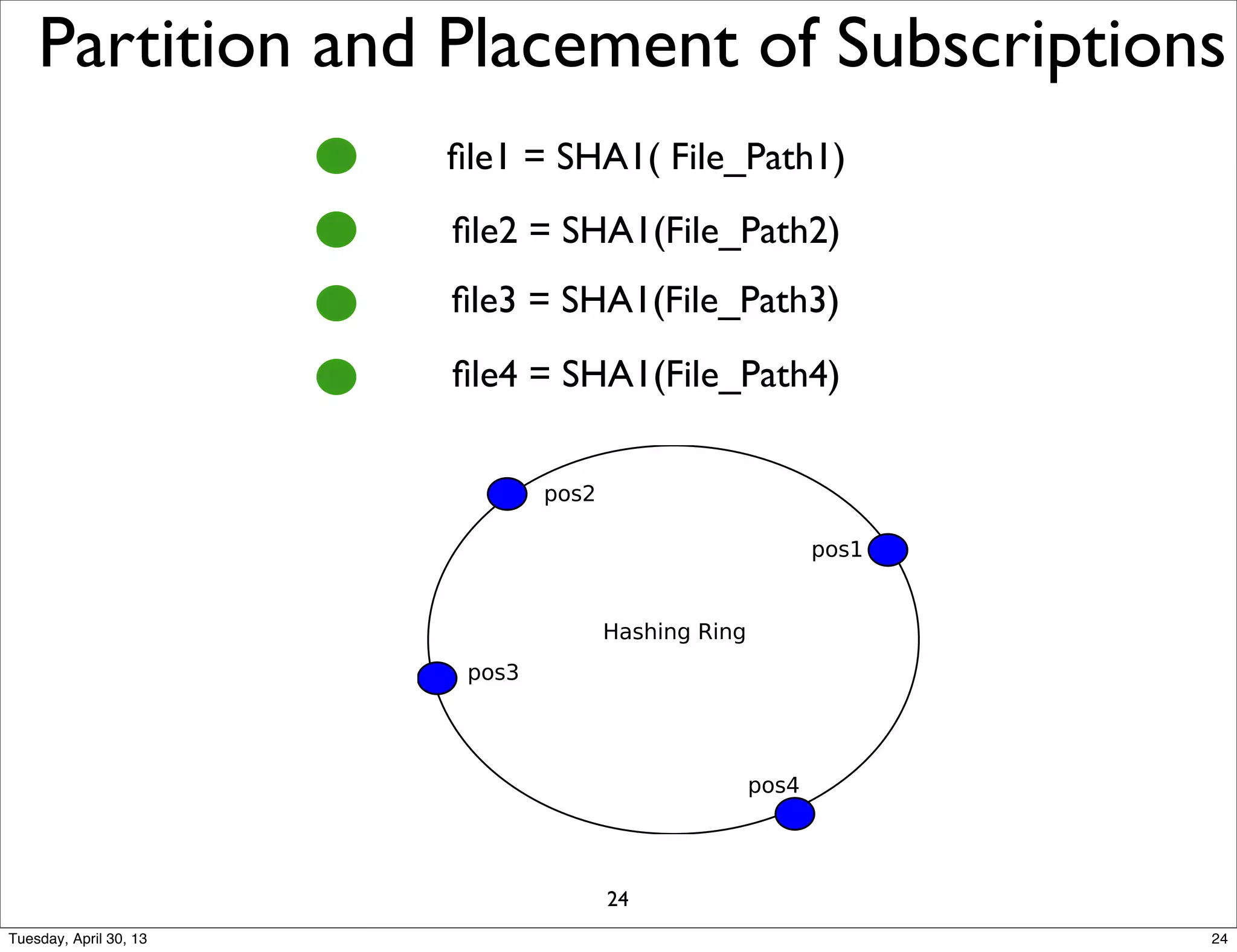

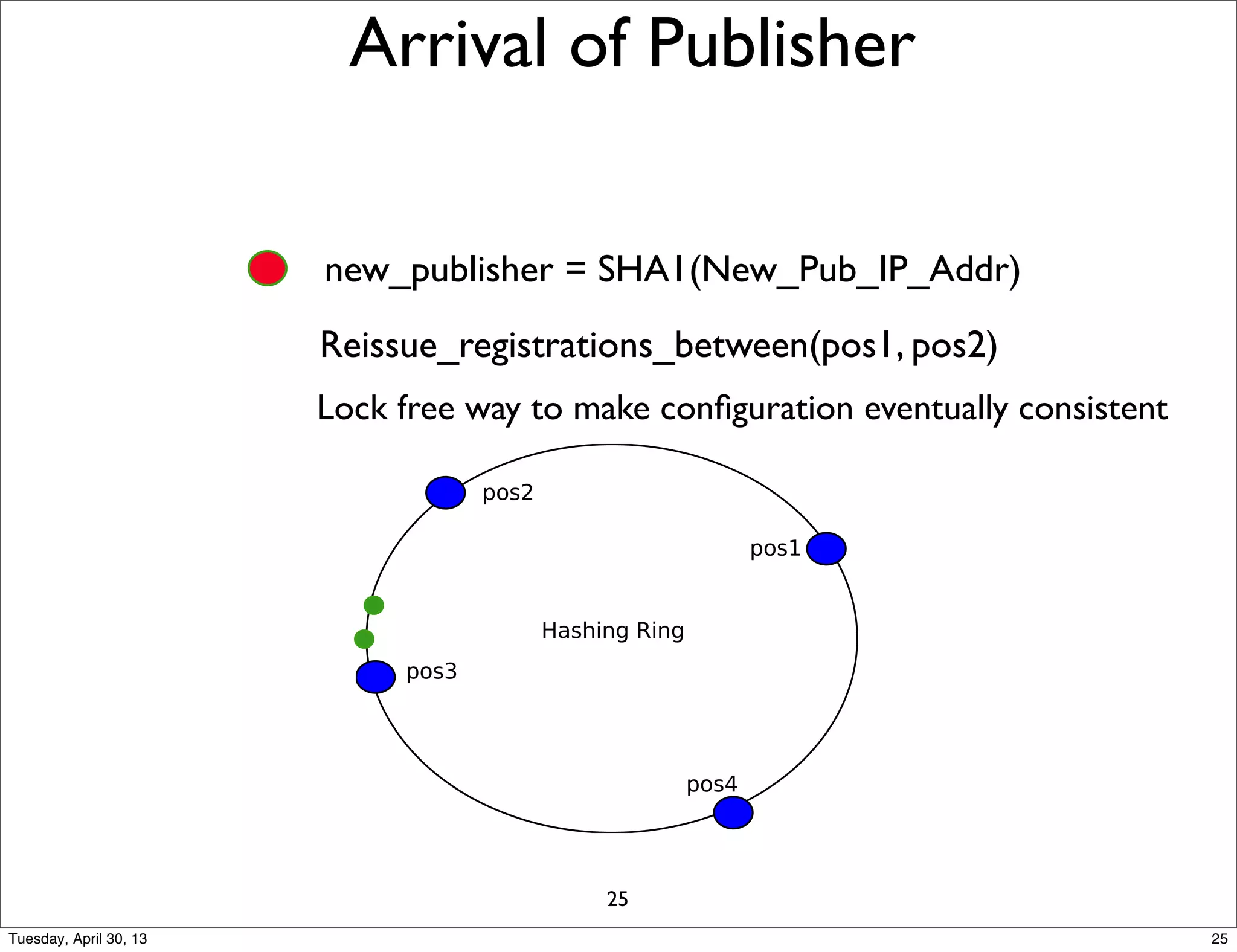

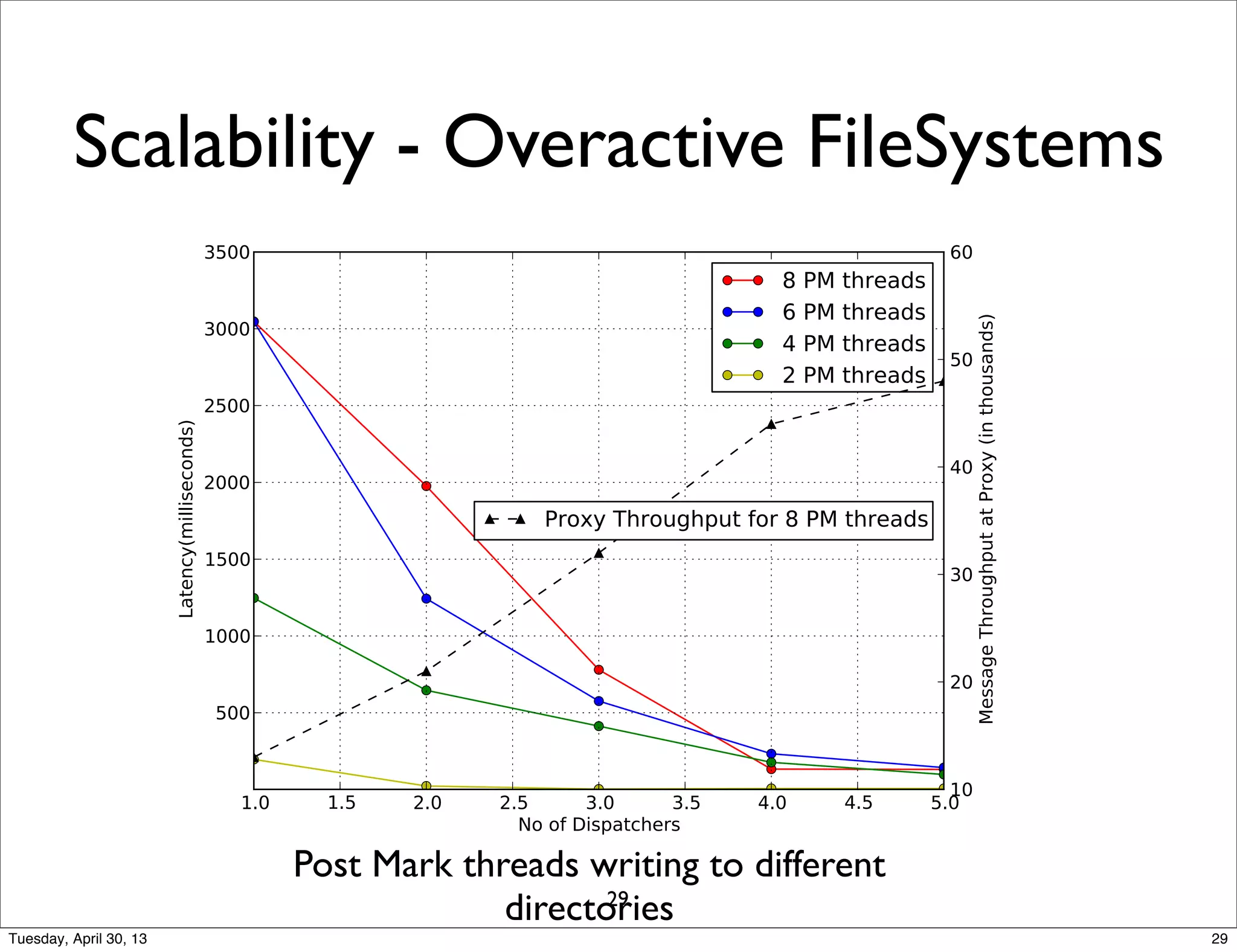

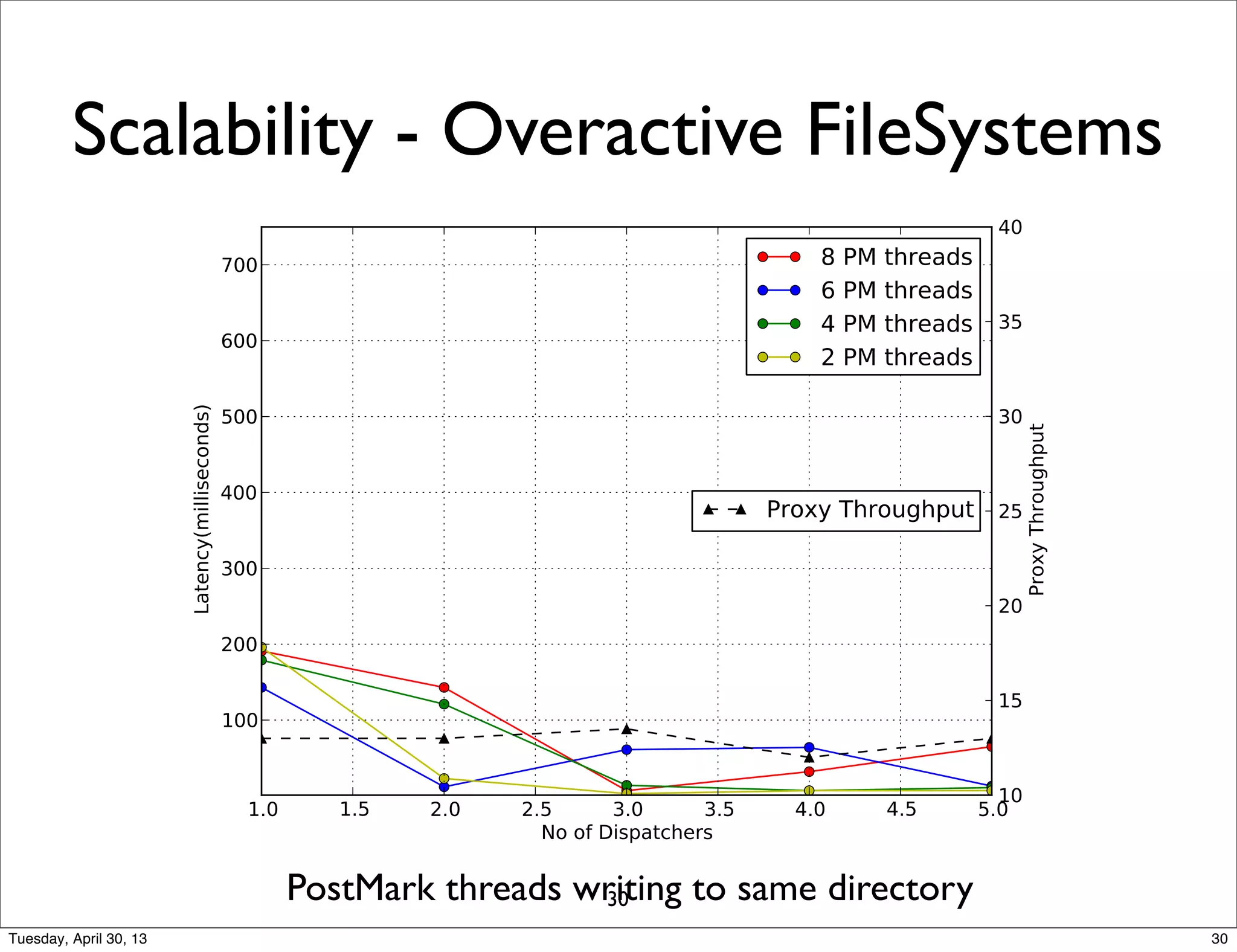

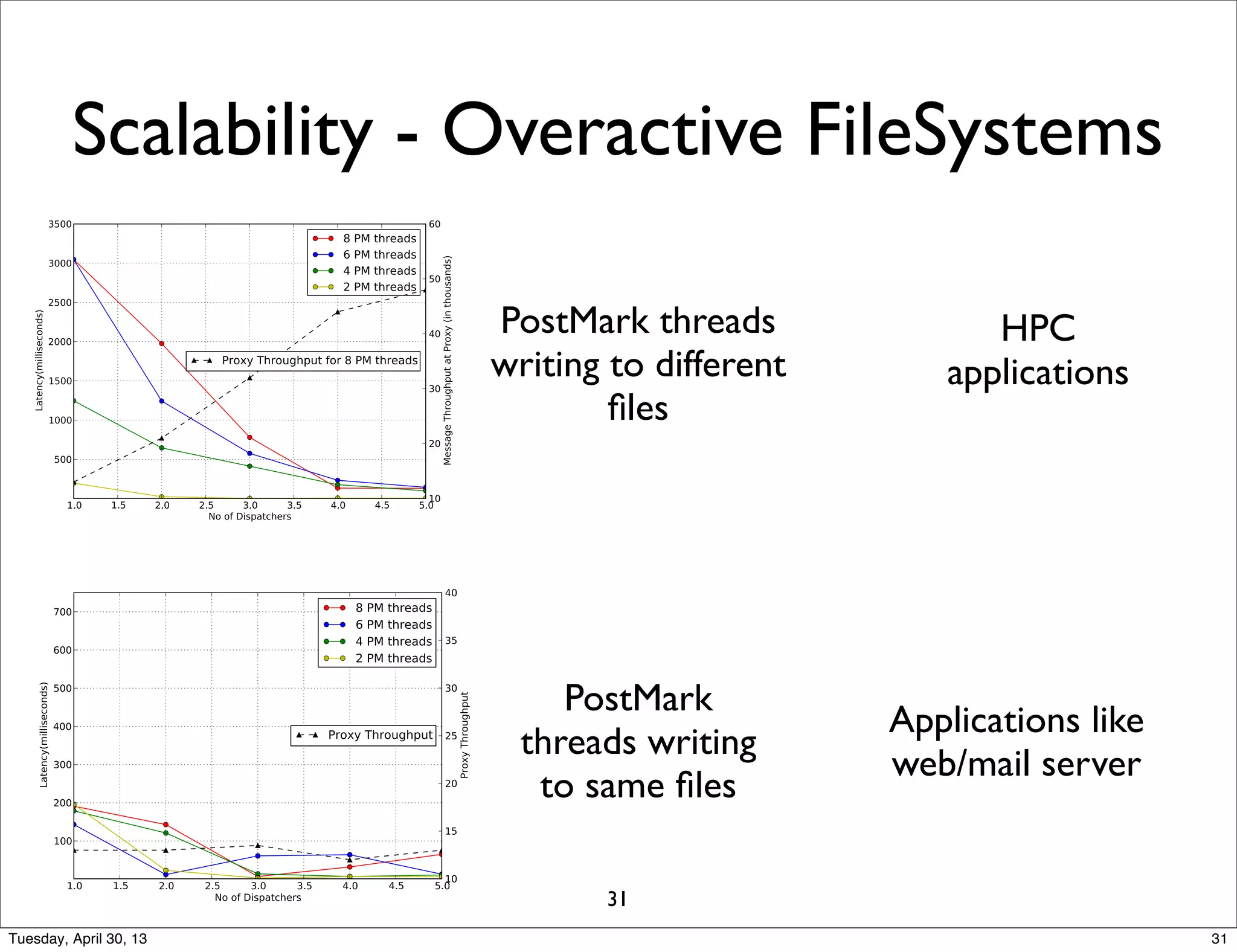

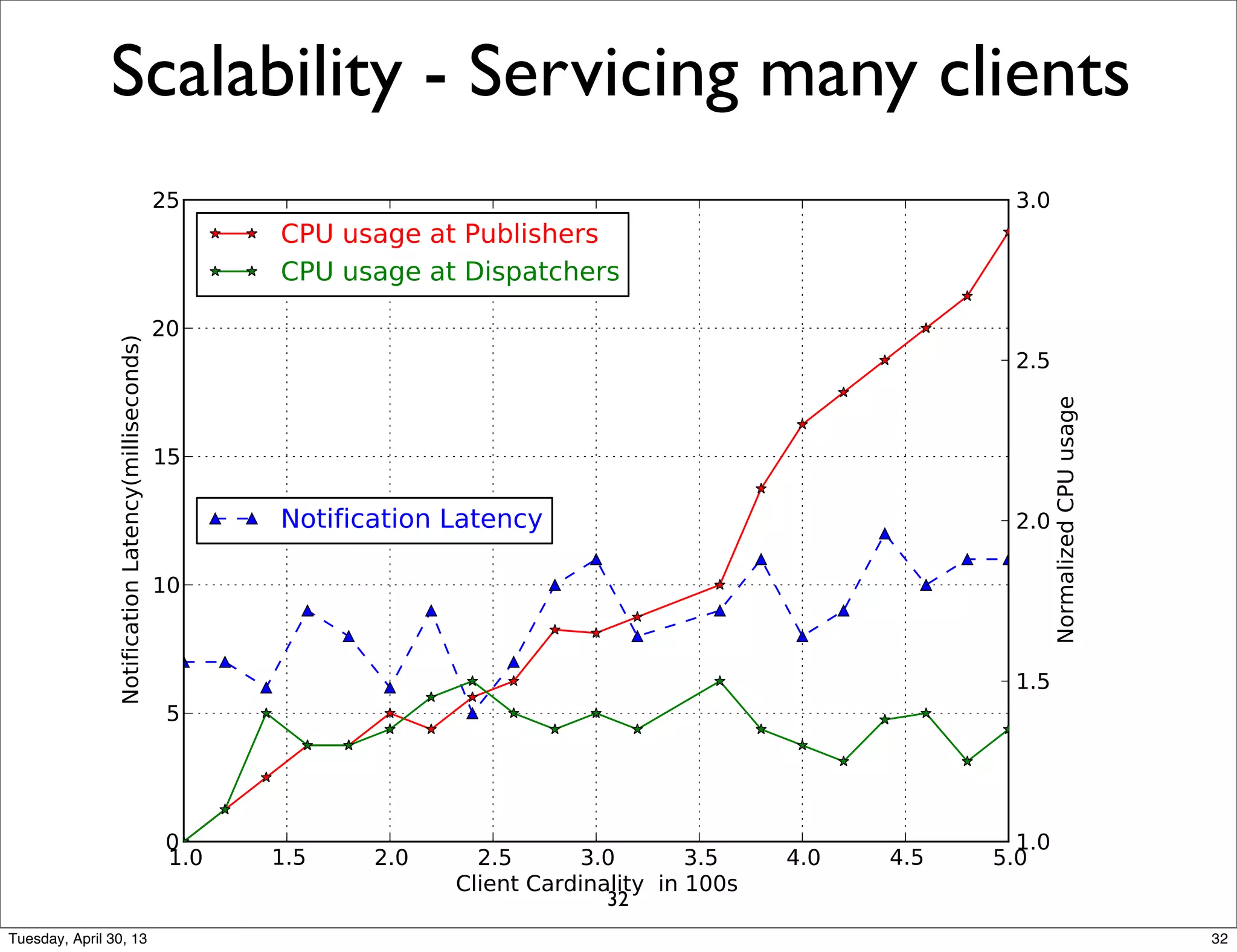

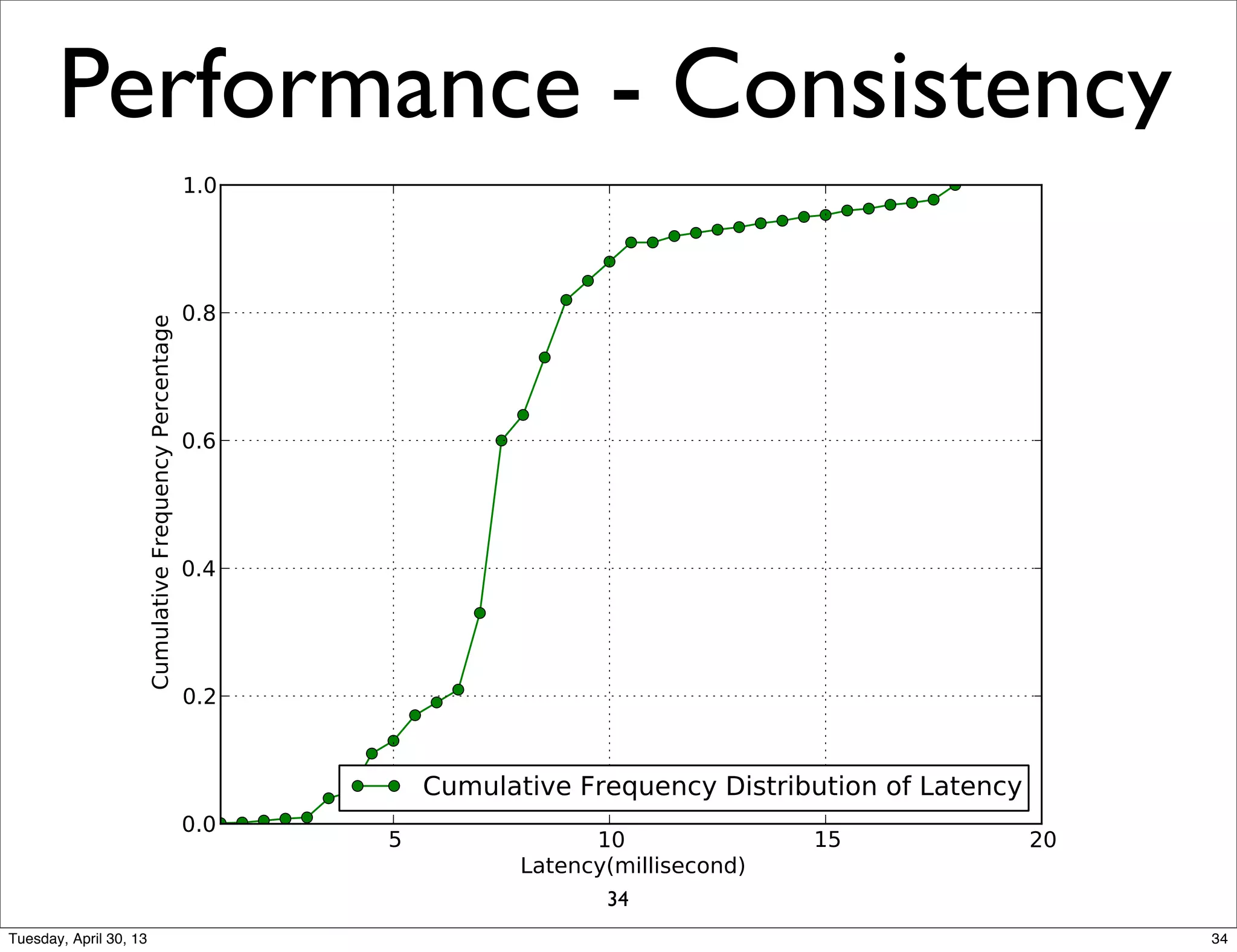

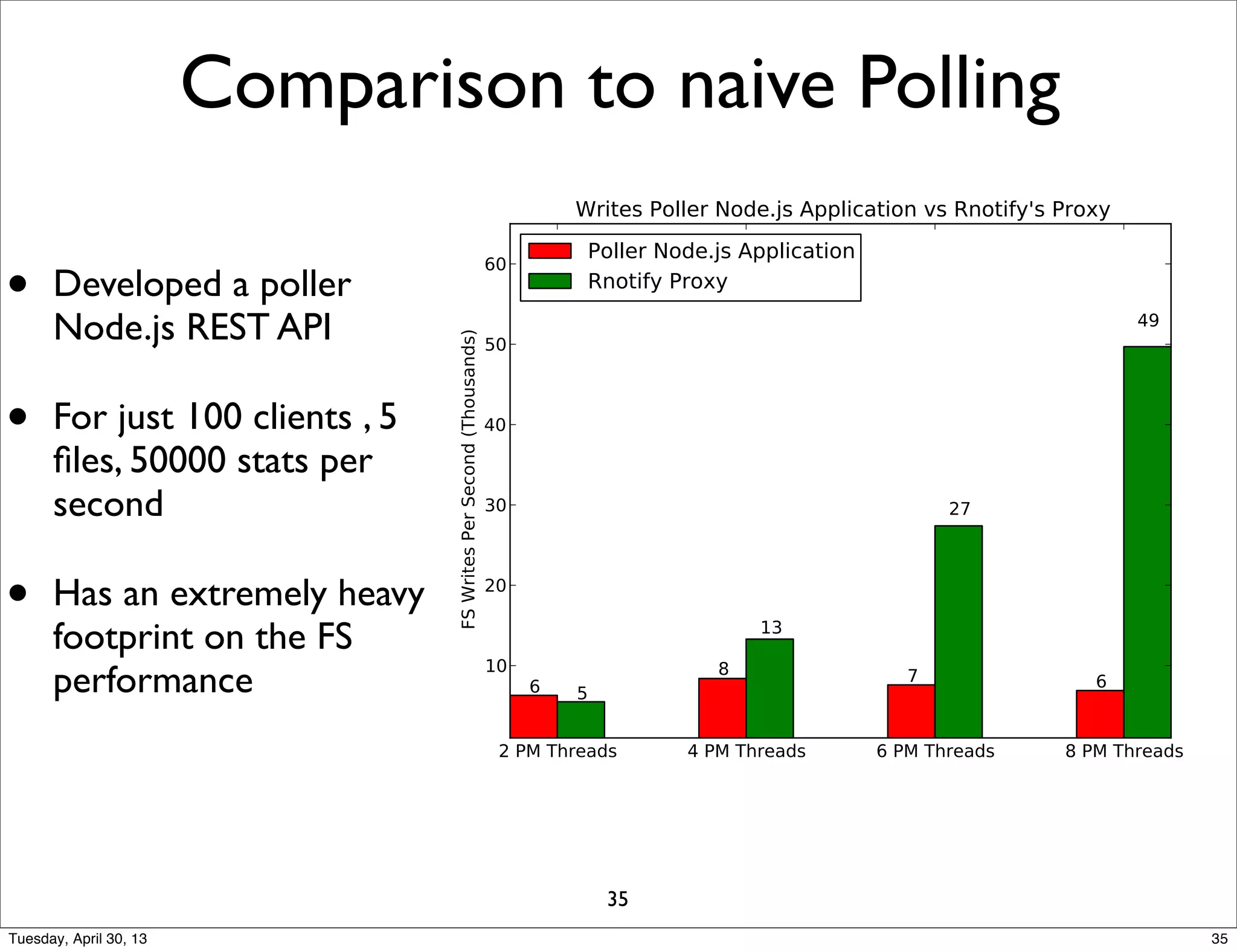

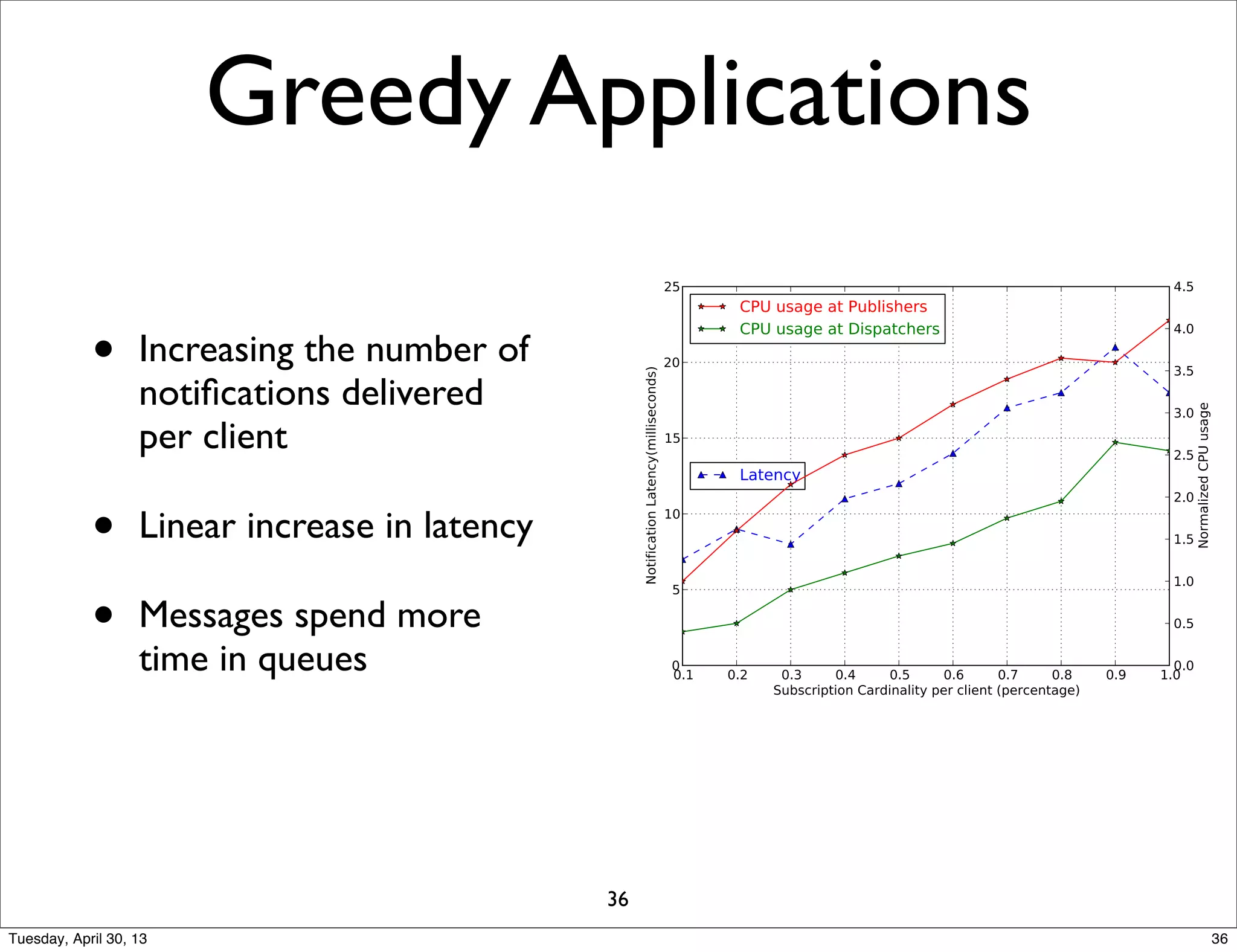

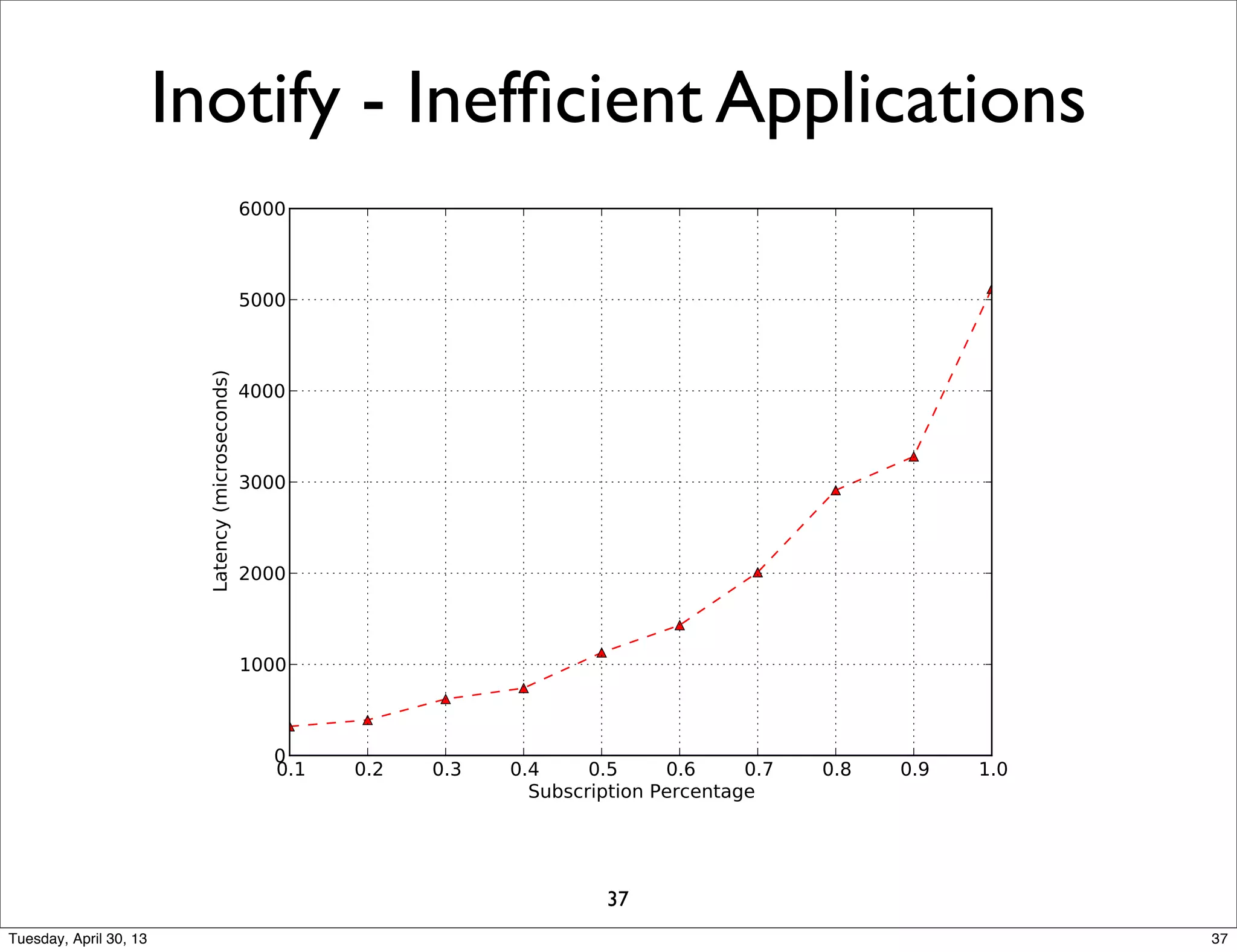

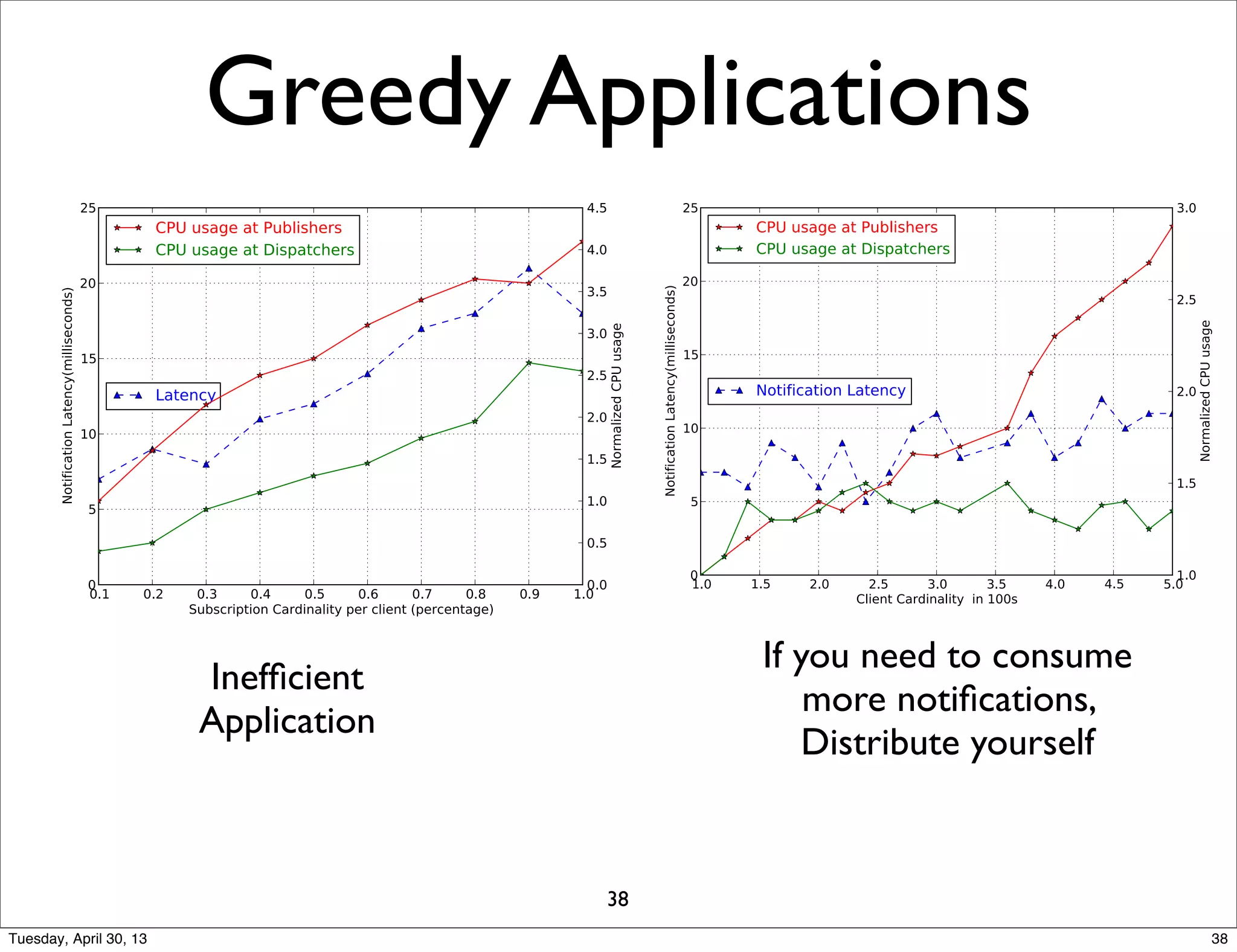

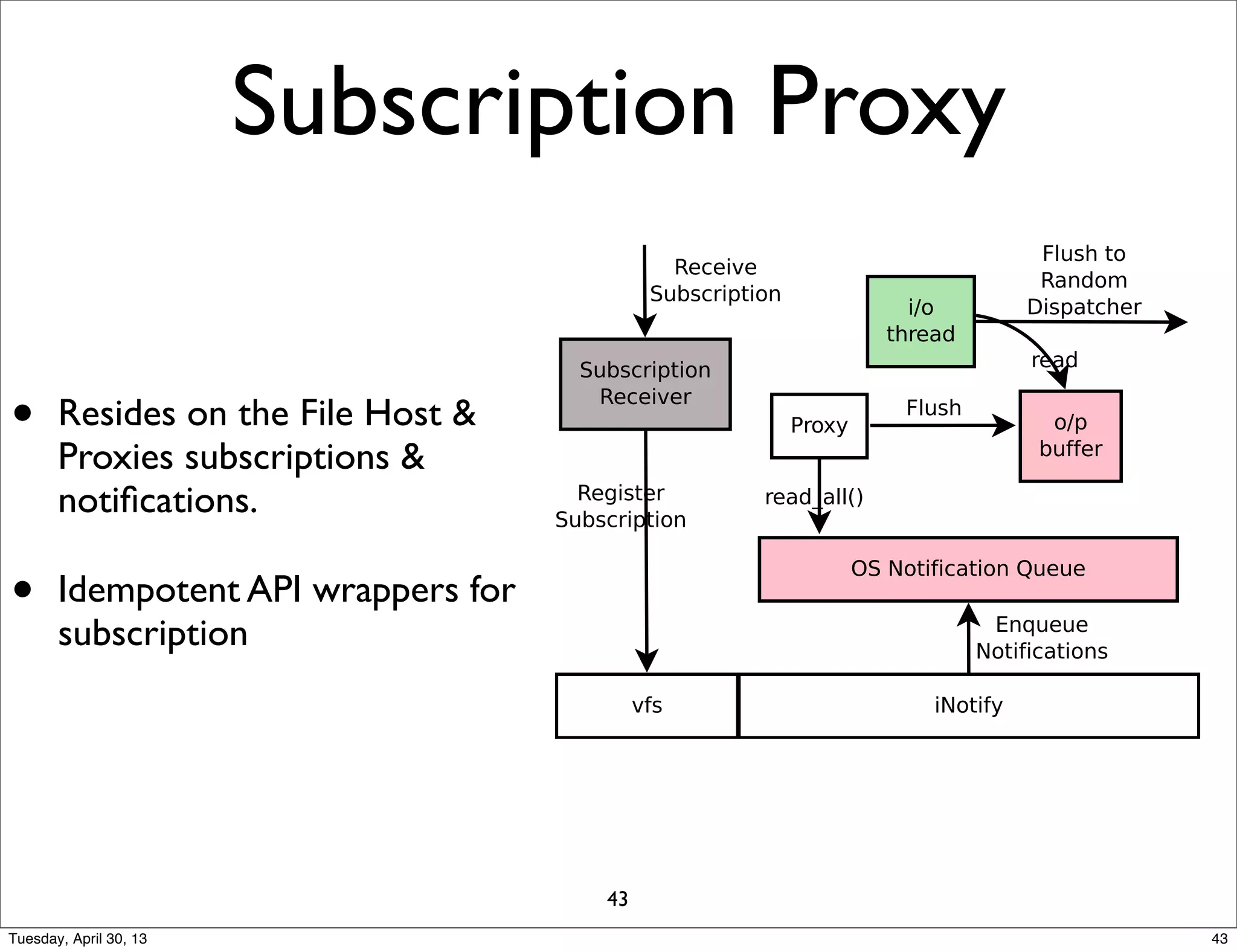

The document presents Rnotify, a scalable solution for distributed filesystem notifications. It explains why applications need filesystem notifications and where existing approaches fall short. Rnotify aims to provide location transparency, scalability, and tunability while remaining compatible with applications that use Inotify. To deliver low-latency notifications under different workloads, it proposes decomposing its functionality into replicable components.
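
To make the compatibility goal concrete, the sketch below decodes a buffer of events in the binary layout that Linux inotify hands to applications (`struct inotify_event`: watch descriptor, mask, cookie, name length, then a NUL-padded name). It says nothing about how Rnotify itself is built; the names `Event`, `parse_events`, and `make_event` are hypothetical helpers introduced only for illustration, and `make_event` fabricates sample bytes so the example runs on any platform.

```python
import struct
from typing import Iterator, NamedTuple

# Fixed-size header of struct inotify_event: int wd; uint32 mask, cookie, len.
EVENT_HEADER = struct.Struct("iIII")

# A few event mask bits from <sys/inotify.h>.
IN_MODIFY = 0x00000002
IN_CREATE = 0x00000100
IN_DELETE = 0x00000200


class Event(NamedTuple):
    """Hypothetical decoded form of one inotify event."""
    wd: int      # watch descriptor the event belongs to
    mask: int    # bitmask describing what happened
    cookie: int  # links related events such as rename pairs
    name: str    # file name relative to the watched directory, may be empty


def parse_events(buf: bytes) -> Iterator[Event]:
    """Yield every event packed back to back in buf."""
    offset = 0
    while offset + EVENT_HEADER.size <= len(buf):
        wd, mask, cookie, name_len = EVENT_HEADER.unpack_from(buf, offset)
        offset += EVENT_HEADER.size
        raw_name = buf[offset:offset + name_len]
        offset += name_len
        # The name field is padded with NUL bytes; keep the part before them.
        name = raw_name.split(b"\0", 1)[0].decode("utf-8", "surrogateescape")
        yield Event(wd, mask, cookie, name)


def make_event(wd: int, mask: int, name: str, cookie: int = 0) -> bytes:
    """Hypothetical helper: build sample event bytes for the demo below."""
    encoded = name.encode("utf-8")
    # Pad non-empty names with at least one NUL up to a multiple of 16 bytes.
    padded_len = (len(encoded) // 16 + 1) * 16 if encoded else 0
    return EVENT_HEADER.pack(wd, mask, cookie, padded_len) + encoded.ljust(padded_len, b"\0")


if __name__ == "__main__":
    sample = make_event(1, IN_CREATE, "a.txt") + make_event(2, IN_MODIFY, "")
    for event in parse_events(sample):
        print(event)
```

An application written against Inotify typically reads such buffers from a file descriptor in a loop, so a compatible notification source would need to present events in an equivalent form, regardless of which machine the underlying change occurred on.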