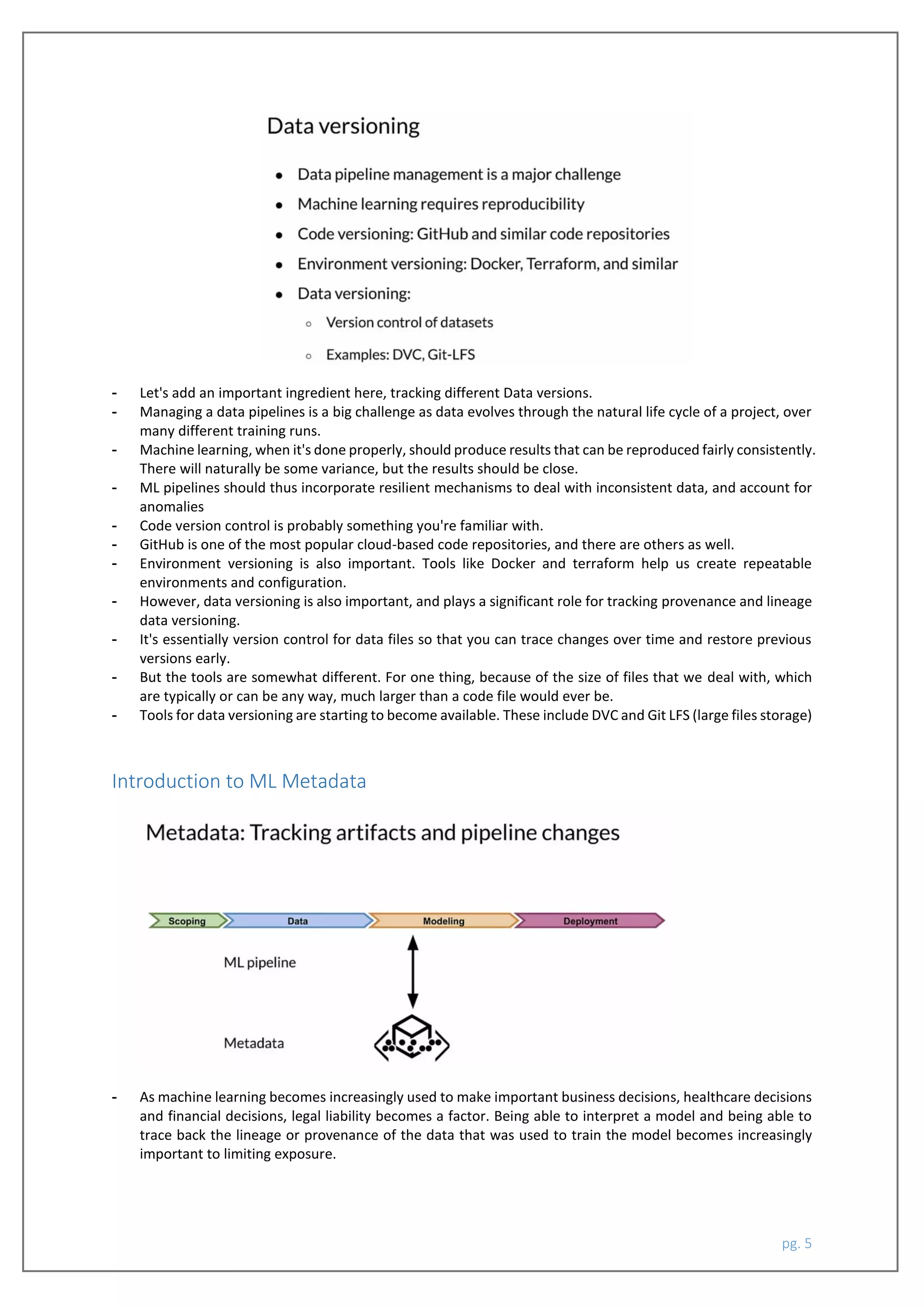

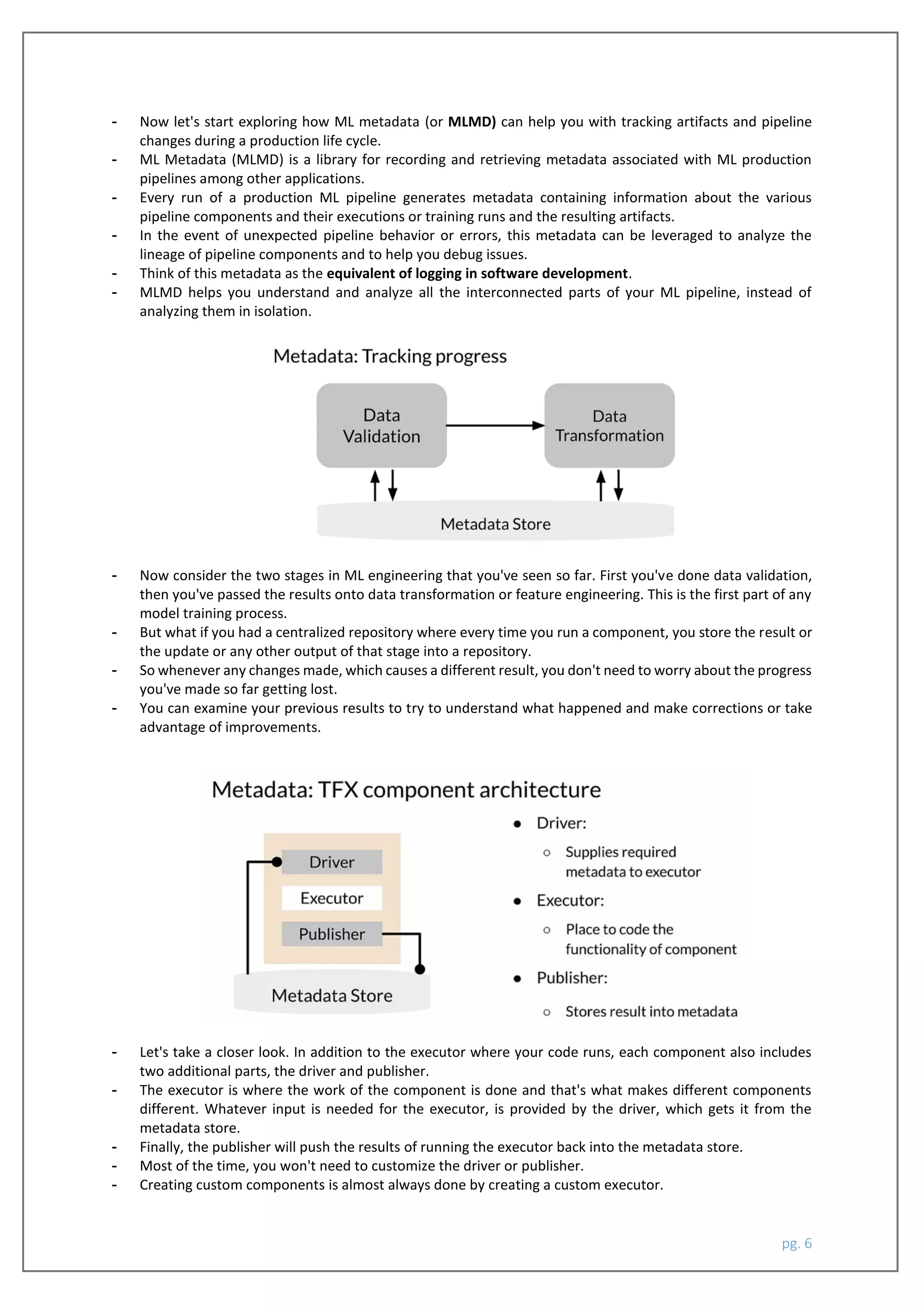

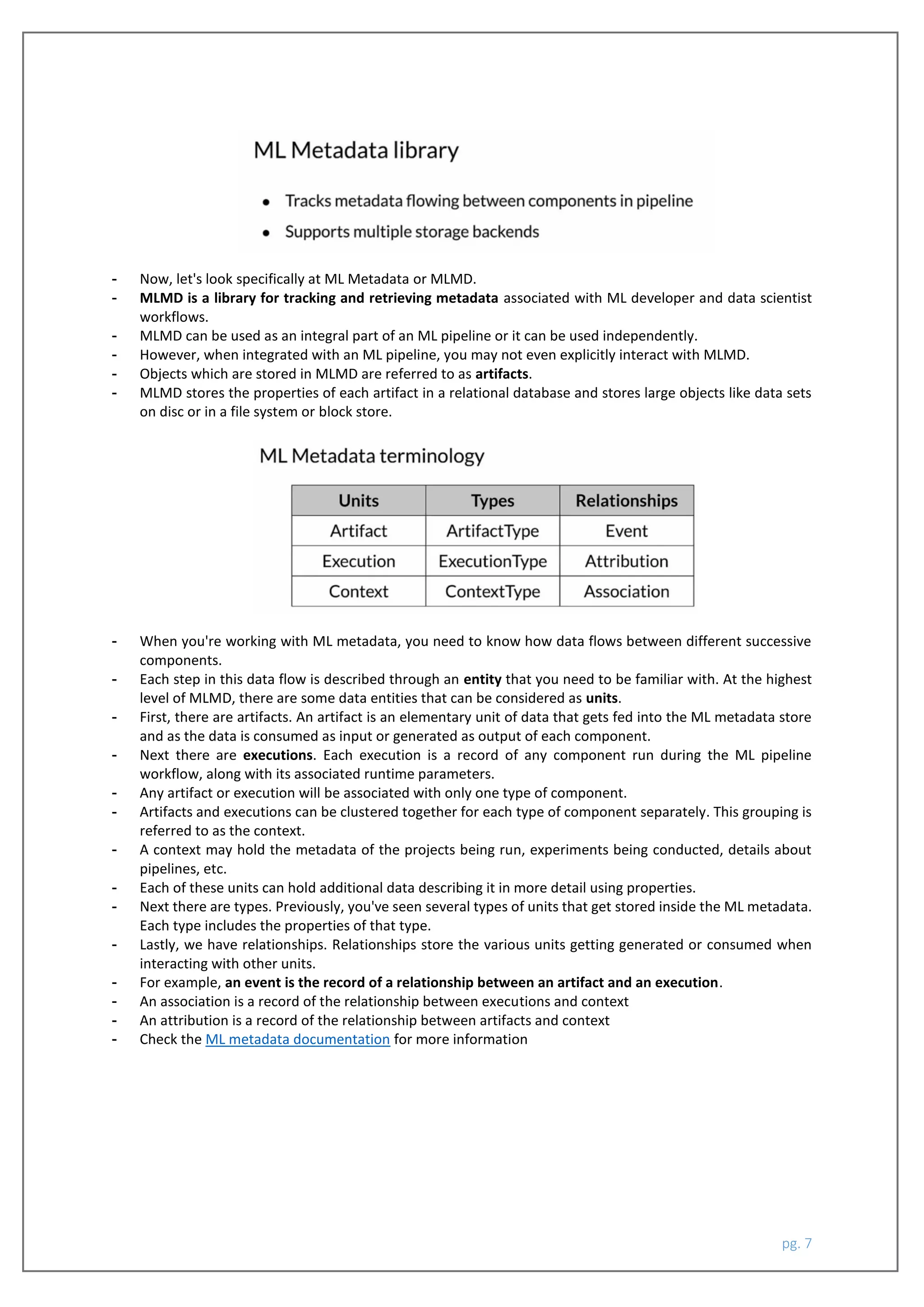

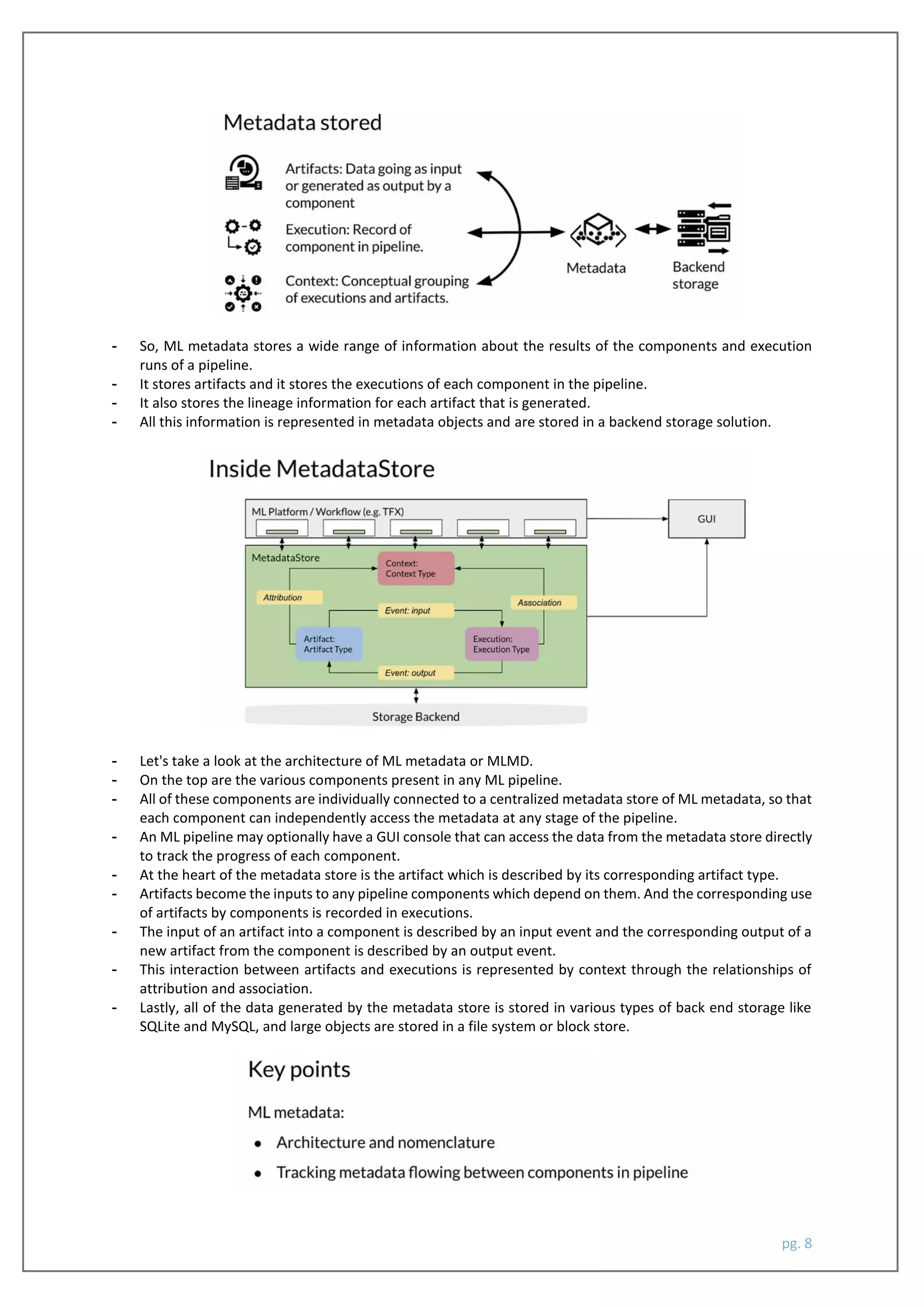

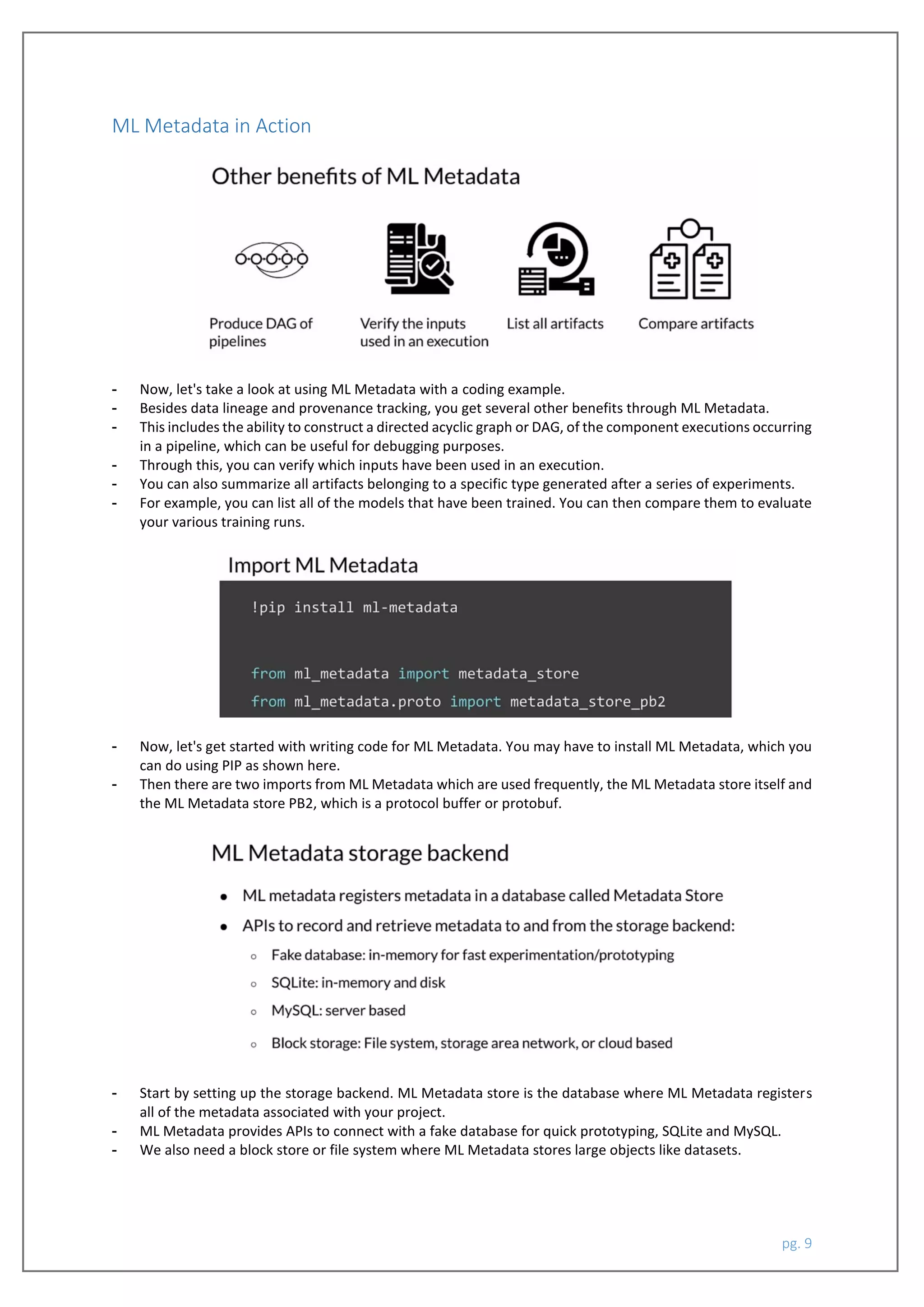

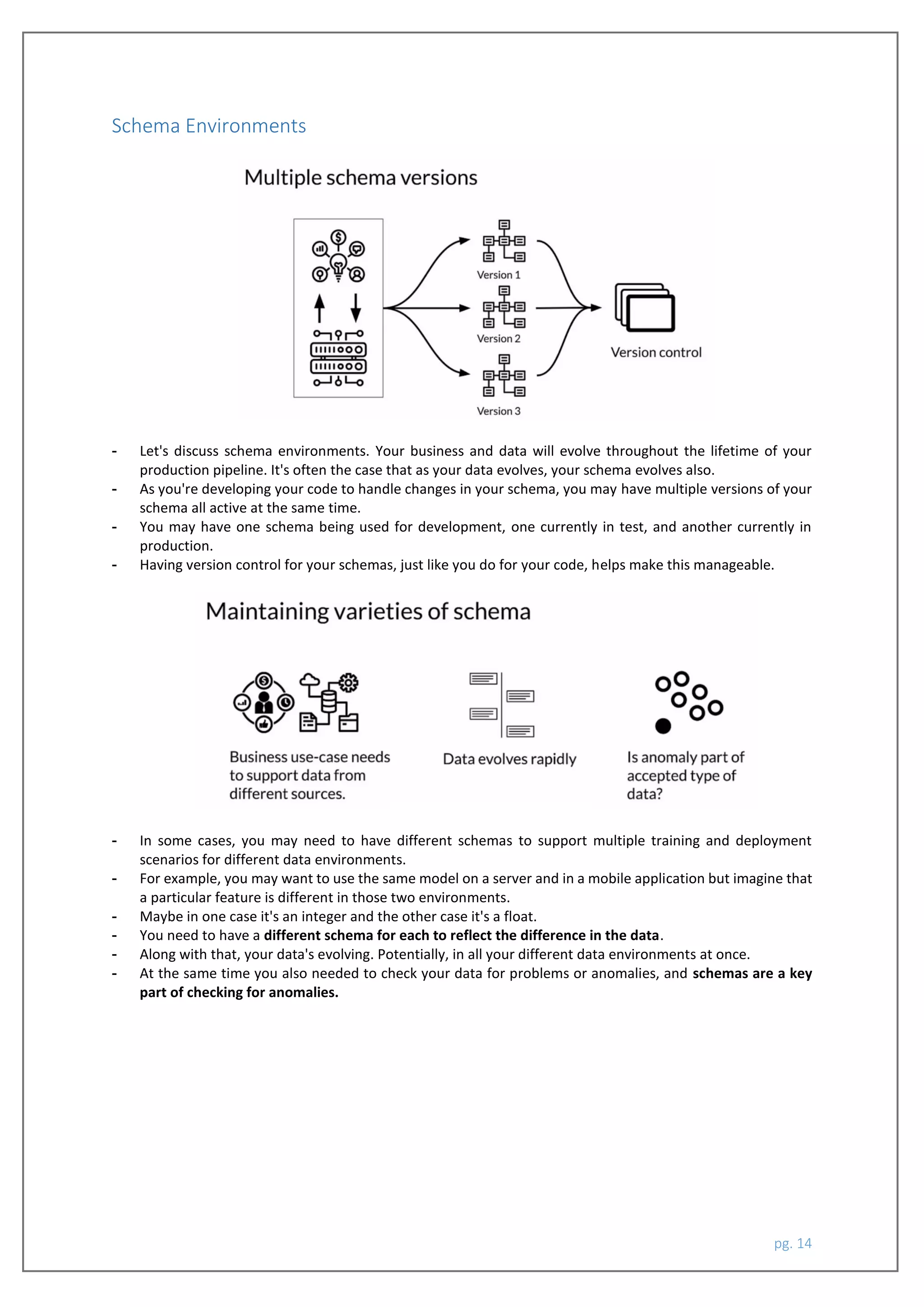

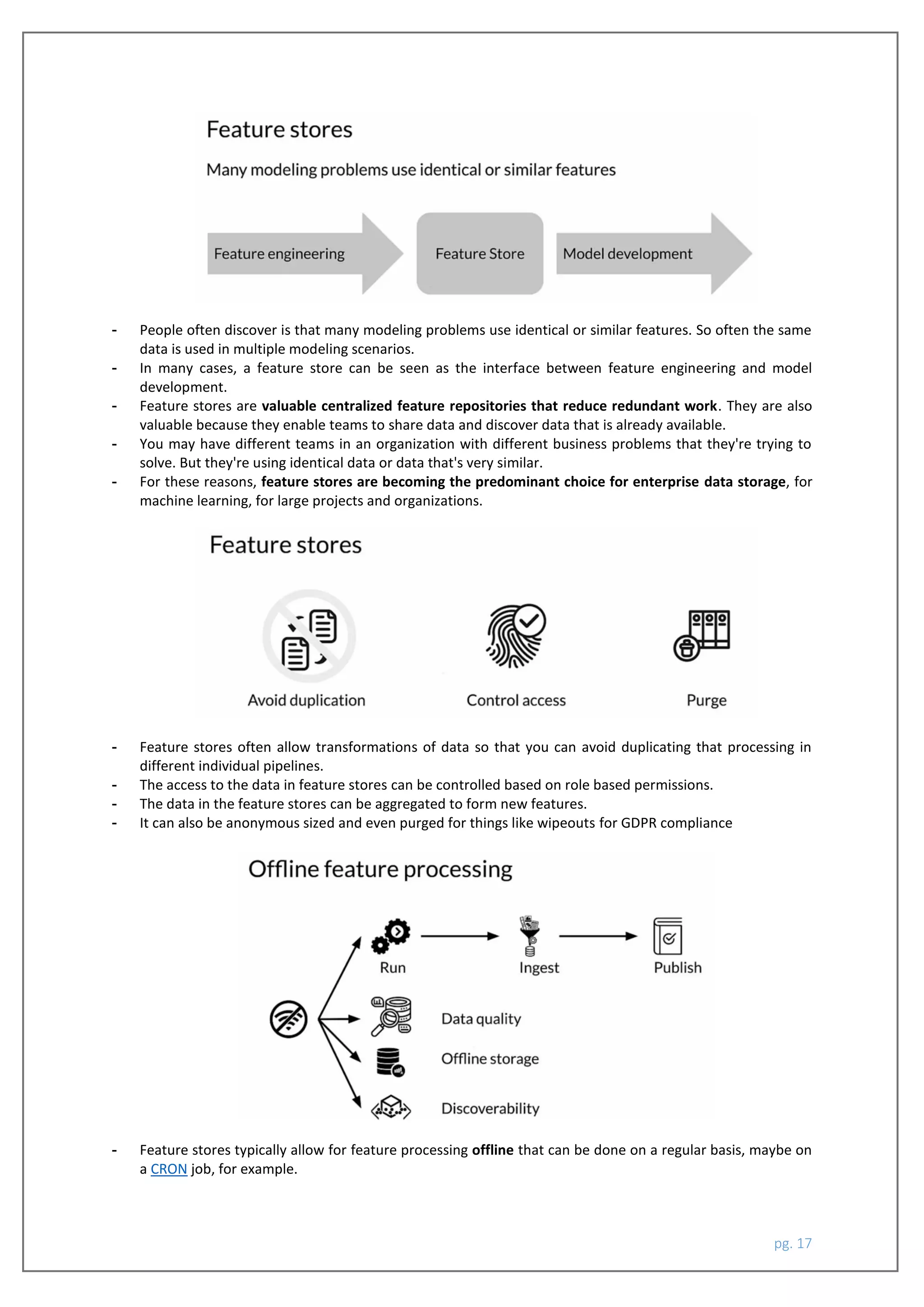

The document outlines the data lifecycle in machine learning engineering, focusing on data pipelines that gather, clean, and validate datasets and perform feature engineering. It emphasizes tracking data lineage and provenance, which supports reproducibility and debugging and helps with compliance with regulations such as GDPR. It also introduces tools such as ML Metadata (MLMD) for recording and retrieving metadata about ML pipelines, which makes these multi-step processes easier to manage and understand.
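To make the idea of lineage tracking concrete, here is a minimal sketch in plain Python. It is not the MLMD API: the `LineageStore` class, its method names, and the file names are hypothetical, chosen only to illustrate the general pattern of recording artifacts, the pipeline steps that ran, and the input/output links between them, then walking those links backwards to find where a dataset came from.

```python
from dataclasses import dataclass, field
import hashlib


@dataclass
class LineageStore:
    """Toy in-memory metadata store (hypothetical; not the MLMD API)."""
    artifacts: dict = field(default_factory=dict)   # artifact_id -> {"name", "digest"}
    executions: dict = field(default_factory=dict)  # execution_id -> step name
    events: list = field(default_factory=list)      # (execution_id, artifact_id, kind)

    def put_artifact(self, name: str, content: bytes) -> int:
        # Store a content hash so a later run can tell whether the data changed.
        artifact_id = len(self.artifacts) + 1
        digest = hashlib.sha256(content).hexdigest()
        self.artifacts[artifact_id] = {"name": name, "digest": digest}
        return artifact_id

    def put_execution(self, step: str, inputs: list, outputs: list) -> int:
        # Record one pipeline step and link it to what it read and wrote.
        execution_id = len(self.executions) + 1
        self.executions[execution_id] = step
        for artifact_id in inputs:
            self.events.append((execution_id, artifact_id, "INPUT"))
        for artifact_id in outputs:
            self.events.append((execution_id, artifact_id, "OUTPUT"))
        return execution_id

    def upstream(self, artifact_id: int) -> list:
        """Return the names of every artifact the given one was derived from."""
        seen = []
        frontier = [artifact_id]
        while frontier:
            current = frontier.pop()
            # Steps that produced the current artifact.
            producers = [e for e, a, kind in self.events
                         if a == current and kind == "OUTPUT"]
            for execution_id in producers:
                # Everything those steps consumed is an ancestor.
                for e, a, kind in self.events:
                    if e == execution_id and kind == "INPUT" and a not in seen:
                        seen.append(a)
                        frontier.append(a)
        return [self.artifacts[a]["name"] for a in seen]


store = LineageStore()
raw = store.put_artifact("raw.csv", b"id,age\n1,34\n2,\n")
clean = store.put_artifact("clean.csv", b"id,age\n1,34\n")
features = store.put_artifact("features.csv", b"id,age_bucket\n1,30s\n")
store.put_execution("clean", inputs=[raw], outputs=[clean])
store.put_execution("featurize", inputs=[clean], outputs=[features])

print(store.upstream(features))  # → ['clean.csv', 'raw.csv']
```

The same backward walk is what makes a provenance question answerable, for example which raw files fed a given feature set when a deletion request arrives. A production store such as MLMD persists these records in a database rather than in memory.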