This document provides an overview of support vector machines (SVMs), including:

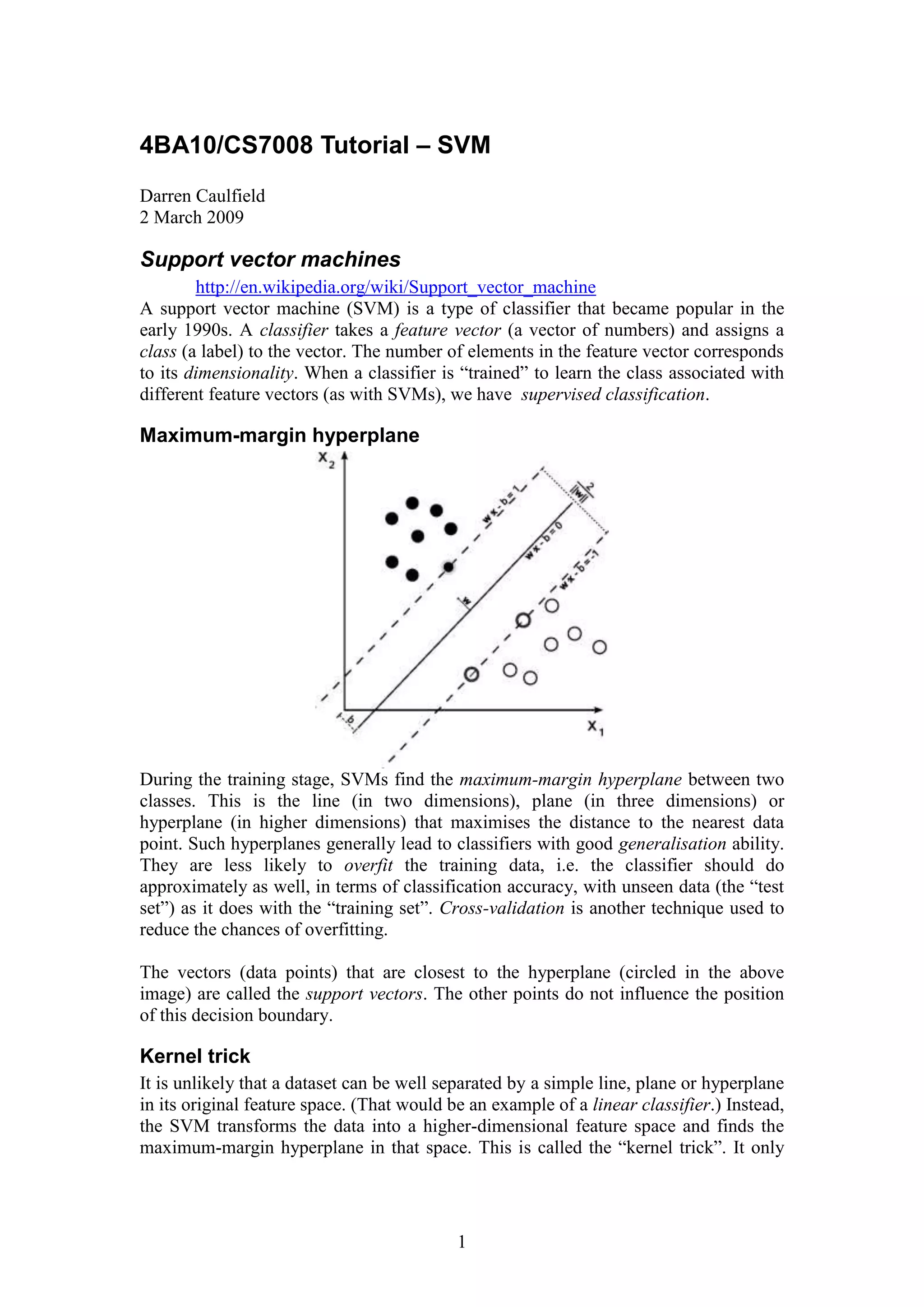

- SVMs find the maximum-margin hyperplane between two classes during training to create classifiers with good generalization.

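- The kernel trick implicitly maps the data into a higher-dimensional feature space. A kernel function computes inner products in that space directly, without transforming the data explicitly, so a linear boundary there corresponds to a nonlinear decision boundary in the original space.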

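- Soft-margin SVMs allow some training examples to fall inside the margin or be misclassified, at a cost controlled by the parameter C. This means a classifier can still be trained, and often generalizes better, when the data is not perfectly separable.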

- Tutorial tasks demonstrate using LIBSVM to classify toy datasets and evaluate SVM performance by varying parameters.
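The kernel trick can be checked numerically on a small case. The Python sketch below uses hypothetical helper names and the homogeneous degree-2 polynomial kernel k(x, z) = (x · z)^2 on 2-D points. Its value equals the inner product of the explicit 3-D feature maps phi(x) = (x1^2, sqrt(2)·x1·x2, x2^2), but it is computed without ever building phi. The sketch also includes the polynomial kernel in LIBSVM's parameterization, (gamma · u·v + coef0)^degree, and the RBF kernel exp(-gamma · |u - v|^2), following the formulas in the LIBSVM README. It is an illustration of the idea, not LIBSVM's own code.

```python
import math


def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def phi(x):
    """Explicit degree-2 feature map for a 2-D point: (x1^2, sqrt(2)*x1*x2, x2^2)."""
    x1, x2 = x
    return (x1 * x1, math.sqrt(2) * x1 * x2, x2 * x2)


def poly2_kernel(x, z):
    """Homogeneous degree-2 polynomial kernel (x . z)^2, computed in the input space."""
    return dot(x, z) ** 2


def libsvm_poly_kernel(u, v, gamma, coef0, degree):
    """Polynomial kernel in LIBSVM's parameterization: (gamma * u.v + coef0)^degree."""
    return (gamma * dot(u, v) + coef0) ** degree


def rbf_kernel(u, v, gamma):
    """RBF kernel exp(-gamma * ||u - v||^2); its implicit feature space is infinite-dimensional."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(u, v))
    return math.exp(-gamma * sq_dist)


x, z = (1.0, 2.0), (3.0, 4.0)
print(dot(phi(x), phi(z)))  # inner product after explicitly mapping to 3-D (up to rounding)
print(poly2_kernel(x, z))   # same value without the mapping: 121.0
print(libsvm_poly_kernel(x, z, gamma=1.0, coef0=0.0, degree=2))  # 121.0
print(rbf_kernel(x, z, gamma=0.5))
```

With d = 2, g = 1 and r = 0, LIBSVM's polynomial kernel (`-t 1`) reduces to the degree-2 kernel above. The RBF kernel (`-t 2`) has no finite explicit feature map, which is exactly why computing the kernel directly matters.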

![Tutorial: Support vector machines, slide 4](https://image.slidesharecdn.com/tutorial-support-vector-machines2395/85/Tutorial-Support-vector-machines-4-320.jpg)

Change the parameters (in the text box at the bottom right). In particular, try changing the t (kernel type), c (cost C), g (gamma), d (polynomial degree) and r (coef0) values. Find parameters that leave the two classes well separated.

svm-train and svm-predict

Download and unzip the “a1a” dataset (training and test sets) and put the files in the “windows” folder of LIBSVM. Open a command prompt in that folder.

Usage: `svm-train [options] training_set_file [model_file]`

Usage: `svm-predict [options] test_file model_file output_file`

Run the following commands. They train a classifier on the training set using an RBF kernel (the default), then use it to predict (classify) the test set:

`svm-train.exe -c 10 a1a.txt a1a.model`

`svm-predict.exe a1a.t a1a.model a1a.output`

- Change the `-c` parameter from 0.01 to 10000 (multiplying by 10 each time) and study the effect. C is the penalty for misclassified training examples: larger values tolerate fewer margin violations.

- Change the `-g` (gamma) parameter. Gamma sets the width of the RBF kernel: larger values give a narrower kernel and a more flexible decision boundary.

- This training set is unbalanced: there are 1210 examples from one class and 395 from the other. Try the `-w1 weight` and `-w-1 weight` options, which multiply C for class 1 or class -1 by the given weight, to adjust the penalty for misclassifying each class.

See the following page for some 3D results:

http://www.csie.ntu.edu.tw/~cjlin/libsvmtools/svmtoy3d/examples/
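The C sweep and class weighting can be scripted. The Python sketch below makes several assumptions:

- It runs from LIBSVM's “windows” folder.
- The a1a files are named `a1a.txt` and `a1a.t`, as in the commands above.
- On Linux or macOS, the executable names would change to `./svm-train` and `./svm-predict`.
- It writes one model per C value under hypothetical names such as `a1a_c10.model`.

The class-weight rule is also an assumption, a common heuristic rather than part of the tutorial: each class gets majority_count / class_count. For 1210 versus 395 examples, the smaller class would get a weight of about 3.06 and the larger class 1.

```python
import subprocess
from collections import Counter

# Assumed names: the executables and a1a files used in the commands above.
SVM_TRAIN = "svm-train.exe"      # use "./svm-train" on Linux/macOS
SVM_PREDICT = "svm-predict.exe"  # use "./svm-predict" on Linux/macOS
TRAIN_FILE = "a1a.txt"
TEST_FILE = "a1a.t"


def c_grid(n_steps=7):
    """C = 0.01, 0.1, ..., 10000: start at 0.01 and multiply by 10 each step."""
    return [10 ** k / 100 for k in range(n_steps)]


def count_labels(lines):
    """Count class labels (the first token of each line) in LIBSVM-format data."""
    counts = Counter()
    for line in lines:
        tokens = line.split()
        if tokens:
            counts[int(float(tokens[0]))] += 1  # "+1" -> 1, "-1" -> -1
    return counts


def class_weights(counts):
    """Assumed heuristic: weight = majority_count / class_count (majority gets 1)."""
    majority = max(counts.values())
    return {label: majority / n for label, n in counts.items()}


def model_name(c):
    """Hypothetical per-C file stem, e.g. 'a1a_c0.01'."""
    return f"a1a_c{c:g}"


def train_cmd(c, gamma=None, weights=None):
    """svm-train command: -c sets C, -g sets gamma, -wi scales C for class i."""
    cmd = [SVM_TRAIN, "-c", f"{c:g}"]
    if gamma is not None:
        cmd += ["-g", f"{gamma:g}"]
    for label, w in sorted((weights or {}).items()):
        cmd += [f"-w{label}", f"{w:.4g}"]
    return cmd + [TRAIN_FILE, model_name(c) + ".model"]


def predict_cmd(c):
    """svm-predict command for the model trained with this C."""
    stem = model_name(c)
    return [SVM_PREDICT, TEST_FILE, stem + ".model", stem + ".output"]


def run_sweep(gamma=None, balance=False):
    """Train and test once per C value and print svm-predict's report."""
    weights = None
    if balance:
        with open(TRAIN_FILE) as f:
            weights = class_weights(count_labels(f))
    for c in c_grid():
        subprocess.run(train_cmd(c, gamma, weights), check=True, capture_output=True)
        result = subprocess.run(predict_cmd(c), check=True,
                                capture_output=True, text=True)
        print(f"C={c:g}: {result.stdout.strip()}")
```

You can call the sweep three ways:

- `run_sweep()` runs the plain C sweep.
- `run_sweep(gamma=0.5)` fixes a gamma value of your choice.
- `run_sweep(balance=True)` adds the `-w` options.

`run_sweep` needs the LIBSVM executables and data files. The other helpers only build lists and command lines, so you can inspect them without LIBSVM.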