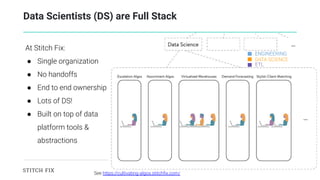

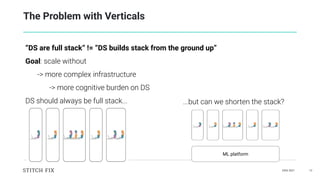

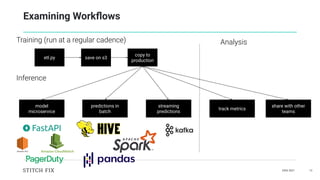

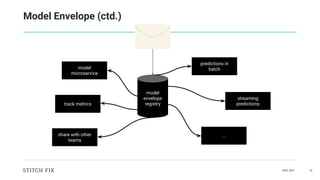

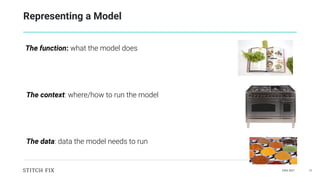

Elijah Ben Izzy, a Data Platform Engineer at Stitch Fix, discusses building abstractions for machine learning operations to optimize workflows and enhance the separation of concerns between data science and platform engineering. The presentation highlights the importance of a custom-built model envelope for seamless integration and management of ML models, as well as advancements in deployment and inference processes. Future directions include enhanced production monitoring and sophisticated feature integration to further streamline data science workflows.

![DAIS 2021 26

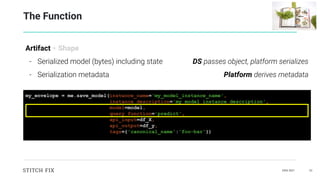

The Context

Environment + Index

- Installed packages

- Custom code

- Language + version

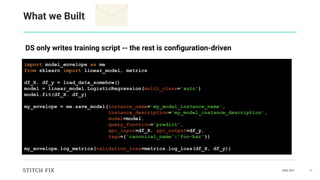

import my_custom_fancy_ml_module

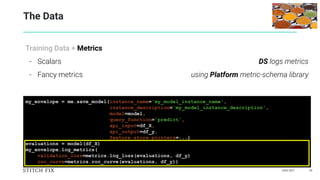

my_envelope = me.save_model(instance_name='my_model_instance_name',

instance_description='my_model_instance_description',

model=model,

query_function='predict',

api_input=df_X,

api_output=df_y,

tags={'canonical_name':'foo-bar'},

# pip_env=['scikit-learn', pandas'], edge case if needed

custom_modules=[my_custom_fancy_ml_module])

Platform automagically derived, or DS passes pointers

DS passes in as needed

Platform automagically derived](https://image.slidesharecdn.com/323elijahbenizzy-210616155358/85/The-Function-the-Context-and-the-Data-Enabling-ML-Ops-at-Stitch-Fix-26-320.jpg)
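According to the slide, the platform automagically derives the language version and the installed packages, and the data scientist passes in custom code. The sketch below shows one way that derivation could work using only the Python standard library. `capture_environment` and the layout of the dictionary it returns are hypothetical. They illustrate the idea and are not Stitch Fix's model envelope API.

```python
import importlib.metadata
import platform
import types


def capture_environment(custom_modules: list[types.ModuleType]) -> dict:
    """Hypothetical helper: snapshot the context a model needs to run elsewhere."""
    # Language + version: derived from the running interpreter.
    language = {
        "name": platform.python_implementation(),
        "version": platform.python_version(),
    }

    # Installed packages: derived from every distribution visible to this interpreter.
    packages = {}
    for dist in importlib.metadata.distributions():
        name = dist.metadata["Name"]
        if name:  # skip entries with broken metadata
            packages[name.lower()] = dist.version

    # Custom code: the data scientist passes module objects; record where each lives.
    modules = {m.__name__: getattr(m, "__file__", None) for m in custom_modules}

    return {"language": language, "packages": packages, "custom_modules": modules}


if __name__ == "__main__":
    import json  # a stdlib module standing in for a "custom module" in this demo

    env = capture_environment([json])
    print(env["language"])
    print(len(env["packages"]), "packages captured")
```

The only thing the data scientist supplies here is the list of custom modules. This mirrors the split on the slide, where the platform handles everything it can discover on its own.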

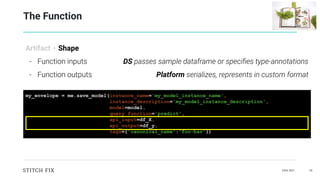

![DAIS 2021, slide 27: The Context, Environment + Index](https://image.slidesharecdn.com/323elijahbenizzy-210616155358/85/The-Function-the-Context-and-the-Data-Enabling-ML-Ops-at-Stitch-Fix-27-320.jpg)

The Context

Environment + Index

- Key-value tags
- Spine/index of envelope registry

```python
import my_custom_fancy_ml_module

my_envelope = me.save_model(
    instance_name='my_model_instance_name',
    instance_description='my_model_instance_description',
    model=model,
    query_function='predict',
    api_input=df_X,
    api_output=df_y,
    tags={'canonical_name': 'foo-bar'},
    custom_modules=[my_custom_fancy_ml_module],
)
```

- Platform derives base tags
- DS passes custom tags as desired
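This slide describes tags as the spine of the envelope registry. The platform derives base tags, the data scientist adds custom tags, and lookups go through those tags. The in-memory sketch below illustrates that idea. `EnvelopeRegistry`, `register`, `find`, and the base-tag names (`instance_name`, `python_version`) are hypothetical and are not the real registry's interface.

```python
from __future__ import annotations

import platform
from dataclasses import dataclass, field


@dataclass
class EnvelopeRecord:
    instance_name: str
    tags: dict[str, str] = field(default_factory=dict)


class EnvelopeRegistry:
    """Hypothetical in-memory registry indexed by key-value tags."""

    def __init__(self) -> None:
        self._records: list[EnvelopeRecord] = []

    def register(self, instance_name: str,
                 custom_tags: dict[str, str] | None = None) -> EnvelopeRecord:
        # Base tags are derived by the platform, not typed in by the data scientist.
        base_tags = {
            "instance_name": instance_name,
            "python_version": platform.python_version(),
        }
        # Custom tags come from the data scientist. Base tags win on key
        # collisions, so the platform-derived part of the index stays reliable.
        tags = {**(custom_tags or {}), **base_tags}
        record = EnvelopeRecord(instance_name, tags)
        self._records.append(record)
        return record

    def find(self, **query: str) -> list[EnvelopeRecord]:
        # Return every envelope whose tags contain all requested key-value pairs.
        return [
            r for r in self._records
            if all(r.tags.get(k) == v for k, v in query.items())
        ]


registry = EnvelopeRegistry()
registry.register("my_model_instance_name", {"canonical_name": "foo-bar"})
registry.register("other_instance", {"canonical_name": "baz"})
print([r.instance_name for r in registry.find(canonical_name="foo-bar")])
# ['my_model_instance_name']
```

Letting platform tags override custom ones is a design choice made for this sketch. A real registry might instead reject colliding keys.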

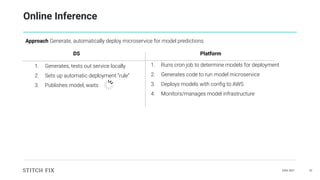

![DAIS 2021, slide 45: Value Added by Separating Concerns](https://image.slidesharecdn.com/323elijahbenizzy-210616155358/85/The-Function-the-Context-and-the-Data-Enabling-ML-Ops-at-Stitch-Fix-45-320.jpg)

Value Added by Separating Concerns

DS concerned with...

- Creating the best model
- Choosing the best libraries
- Determining the right metrics to log

Platform concerned with...

- Making deployment easy
- Ensuring environment in prod == environment in training
- Providing easy metrics analysis
- Wrapping up complex systems
- Behind-the-scenes best practices

DS focuses on creating the best model [writing the recipe].

Platform focuses on optimal infrastructure [cooking it].
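One platform concern on this slide is ensuring that the environment in prod matches the environment in training. The sketch below shows the kind of check a platform might run before deploying. It assumes each environment is summarized as a `{package: version}` map, for example a snapshot taken at training time. `diff_environments` is a hypothetical name, and the version numbers are made-up illustrative values.

```python
from __future__ import annotations


def diff_environments(training: dict[str, str],
                      production: dict[str, str]) -> dict[str, tuple]:
    """Hypothetical check: report packages whose versions differ between environments.

    A value of None in the result means the package is absent on that side.
    """
    drift = {}
    for name in sorted(training.keys() | production.keys()):
        train_version = training.get(name)
        prod_version = production.get(name)
        if train_version != prod_version:
            drift[name] = (train_version, prod_version)
    return drift


# Illustrative environments, not real Stitch Fix data.
training_env = {"pandas": "1.2.4", "scikit-learn": "0.24.2"}
production_env = {"pandas": "1.2.4", "scikit-learn": "0.23.1", "numpy": "1.20.3"}
print(diff_environments(training_env, production_env))
# {'numpy': (None, '1.20.3'), 'scikit-learn': ('0.24.2', '0.23.1')}
```

An empty result means the two environments agree package for package. Because the platform runs this check behind the scenes, the data scientist never has to write it.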