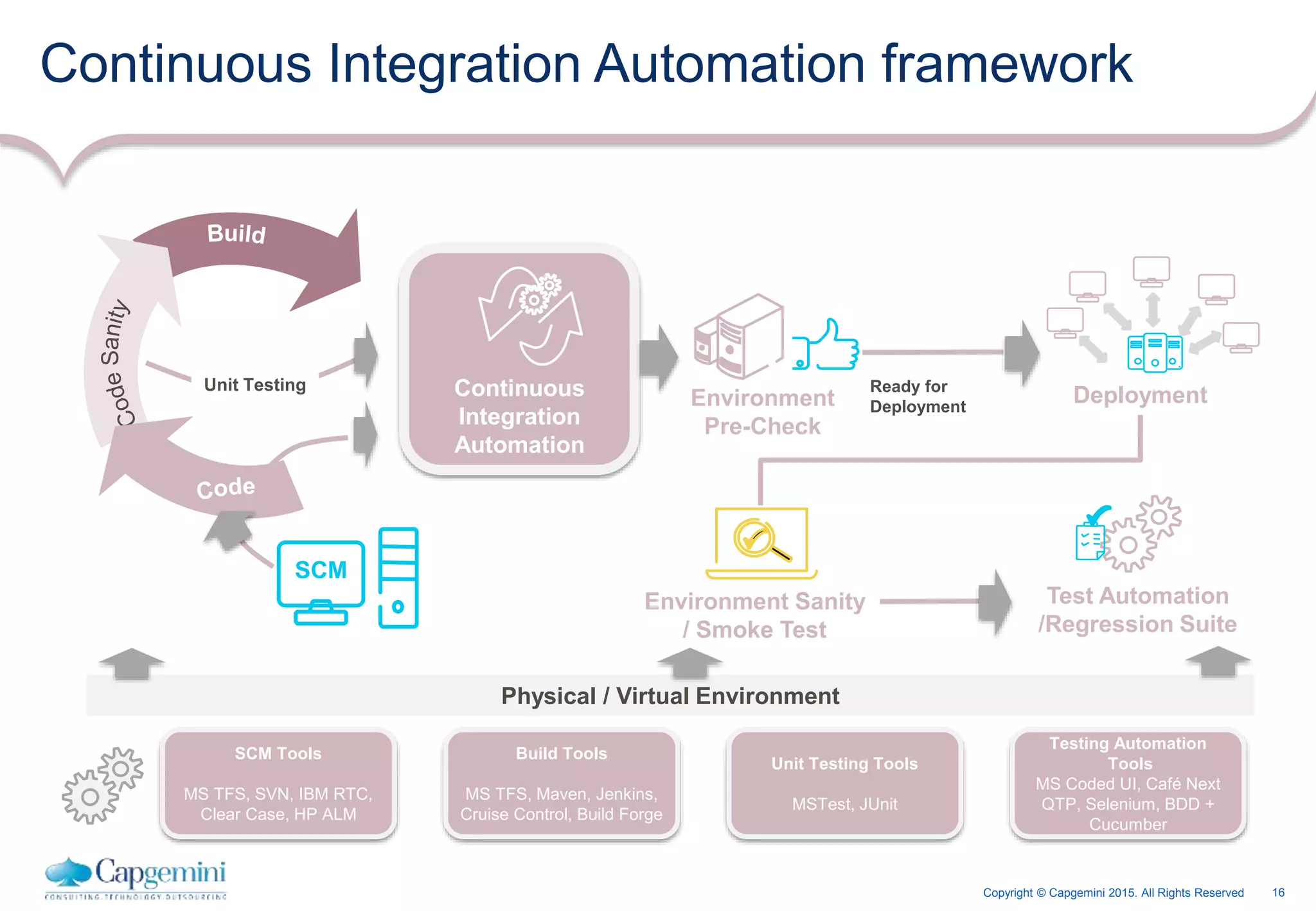

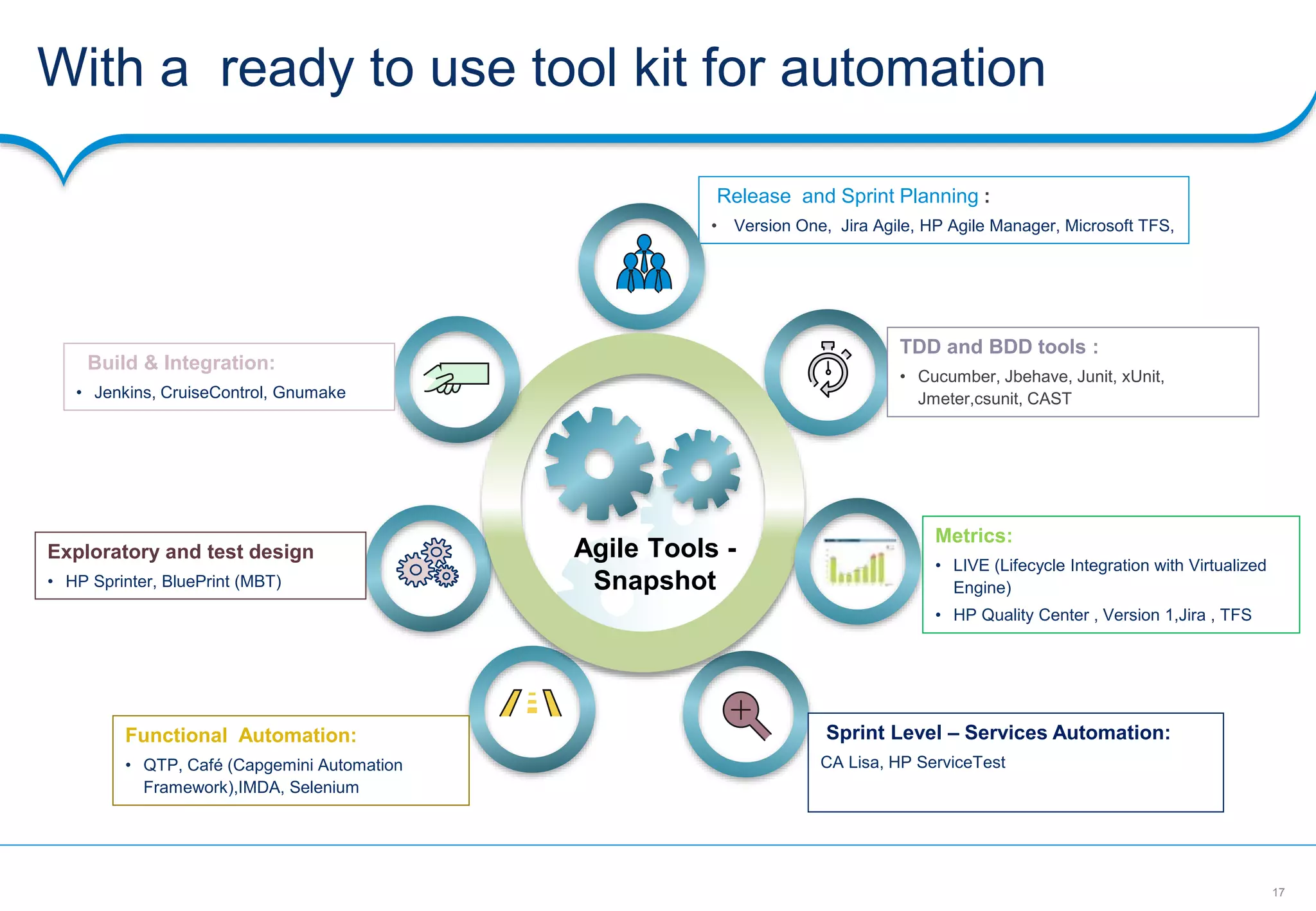

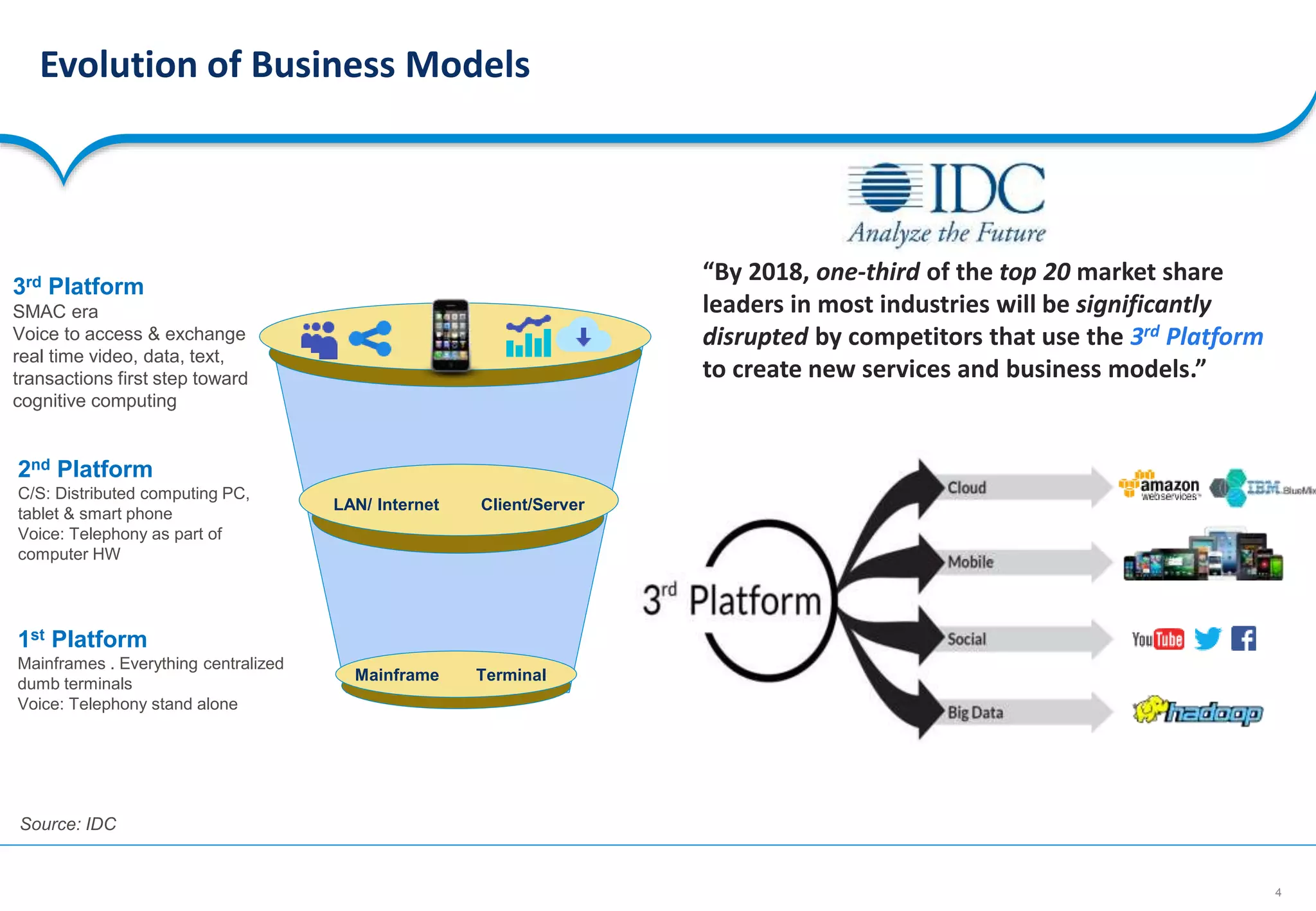

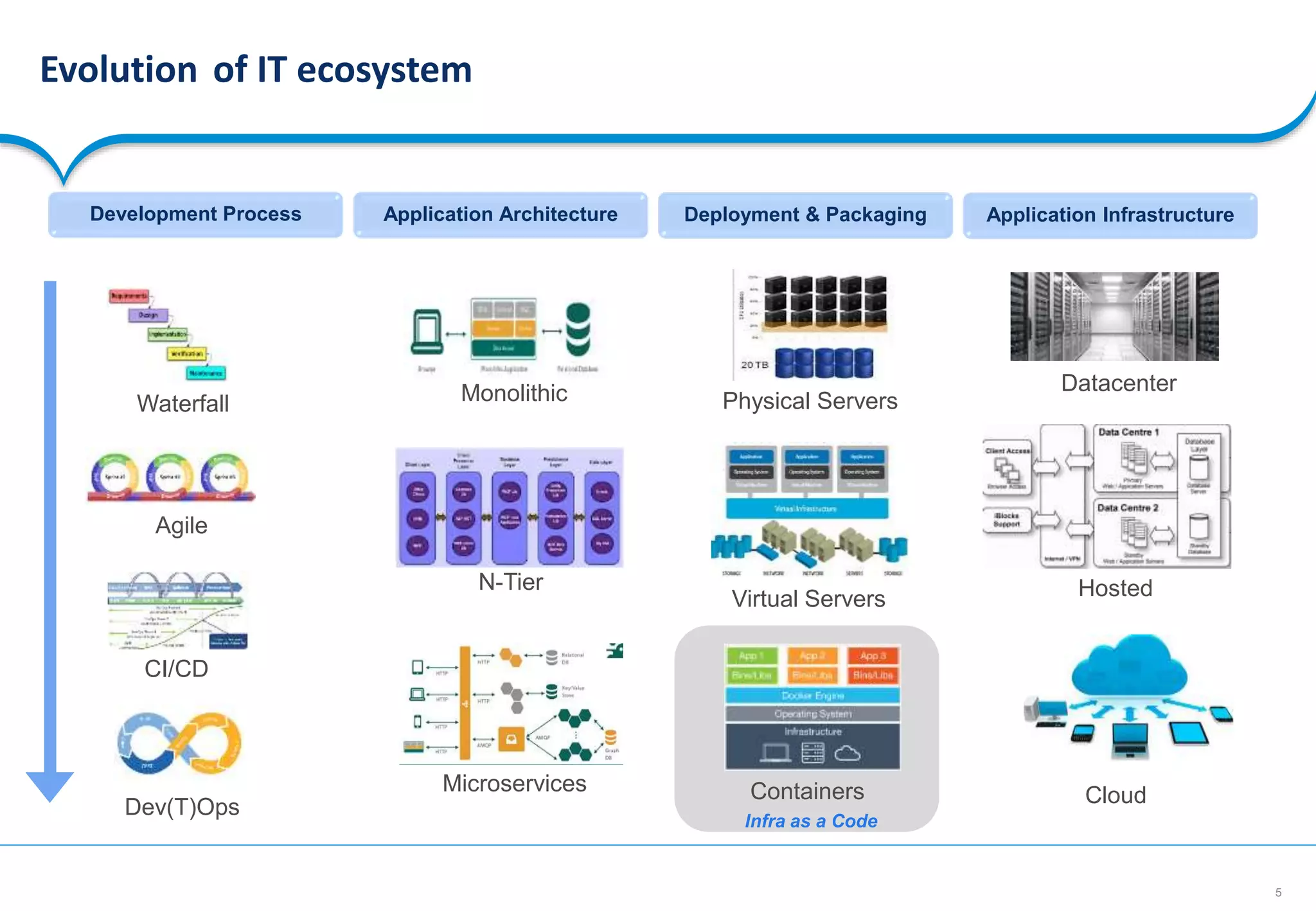

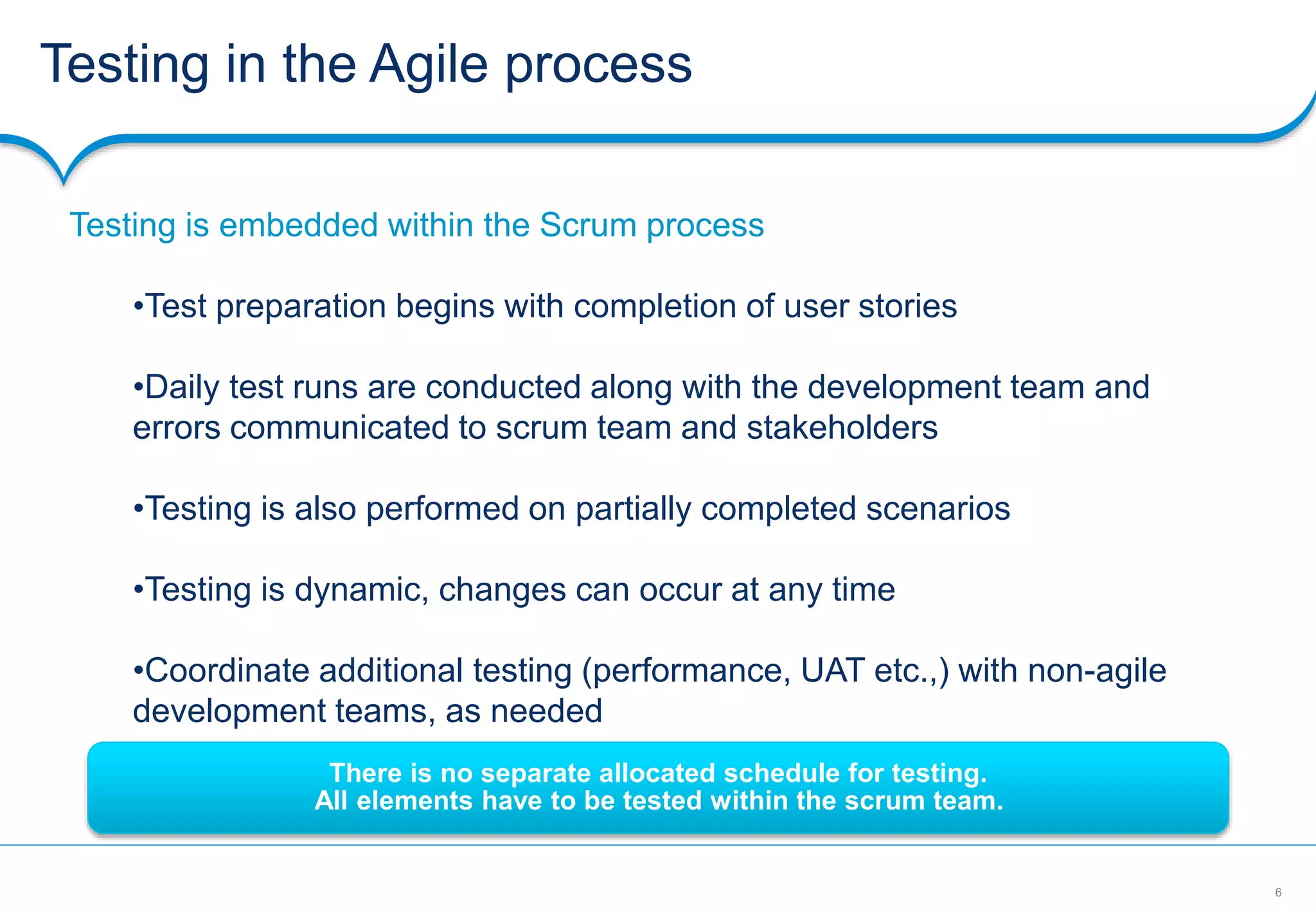

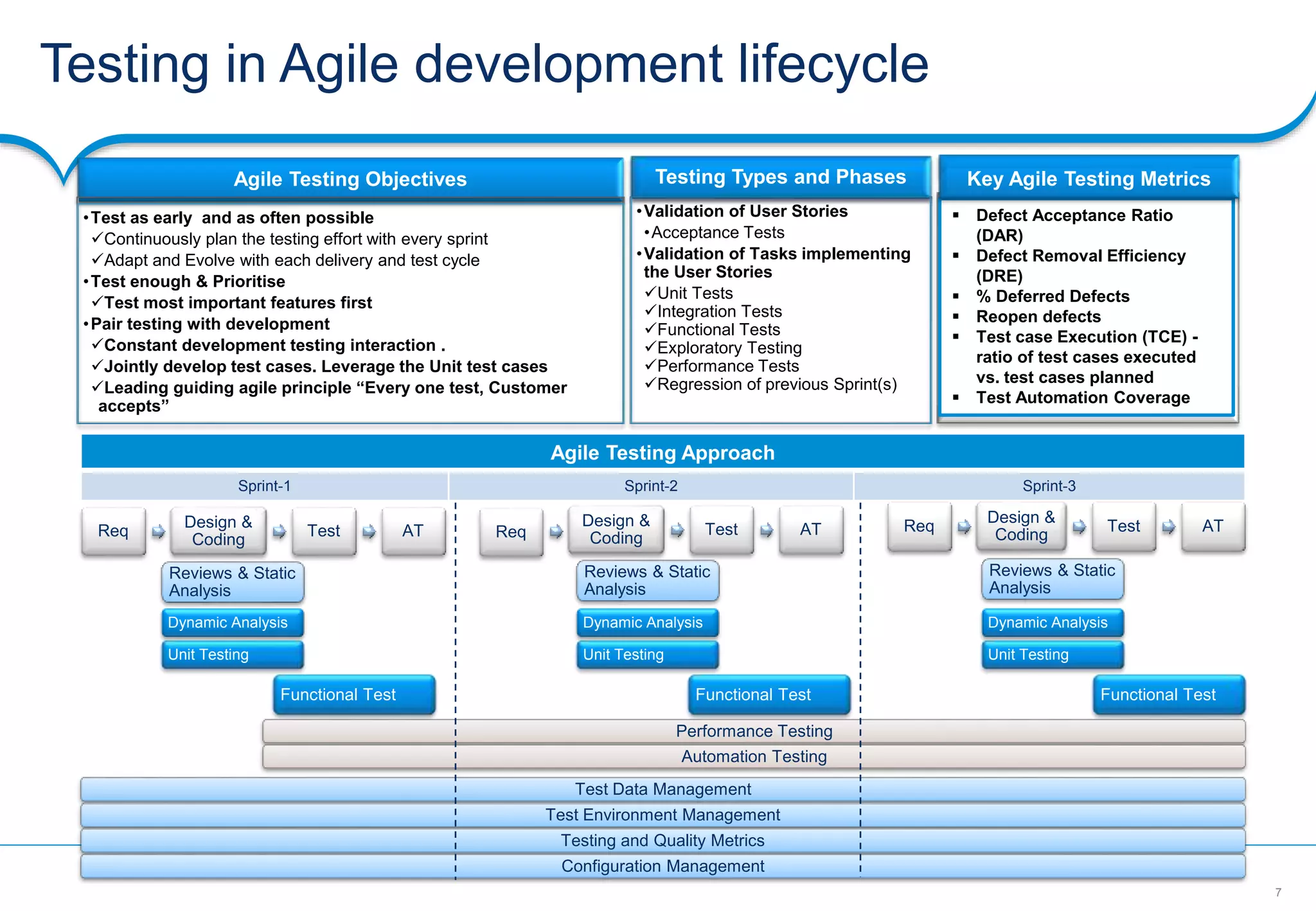

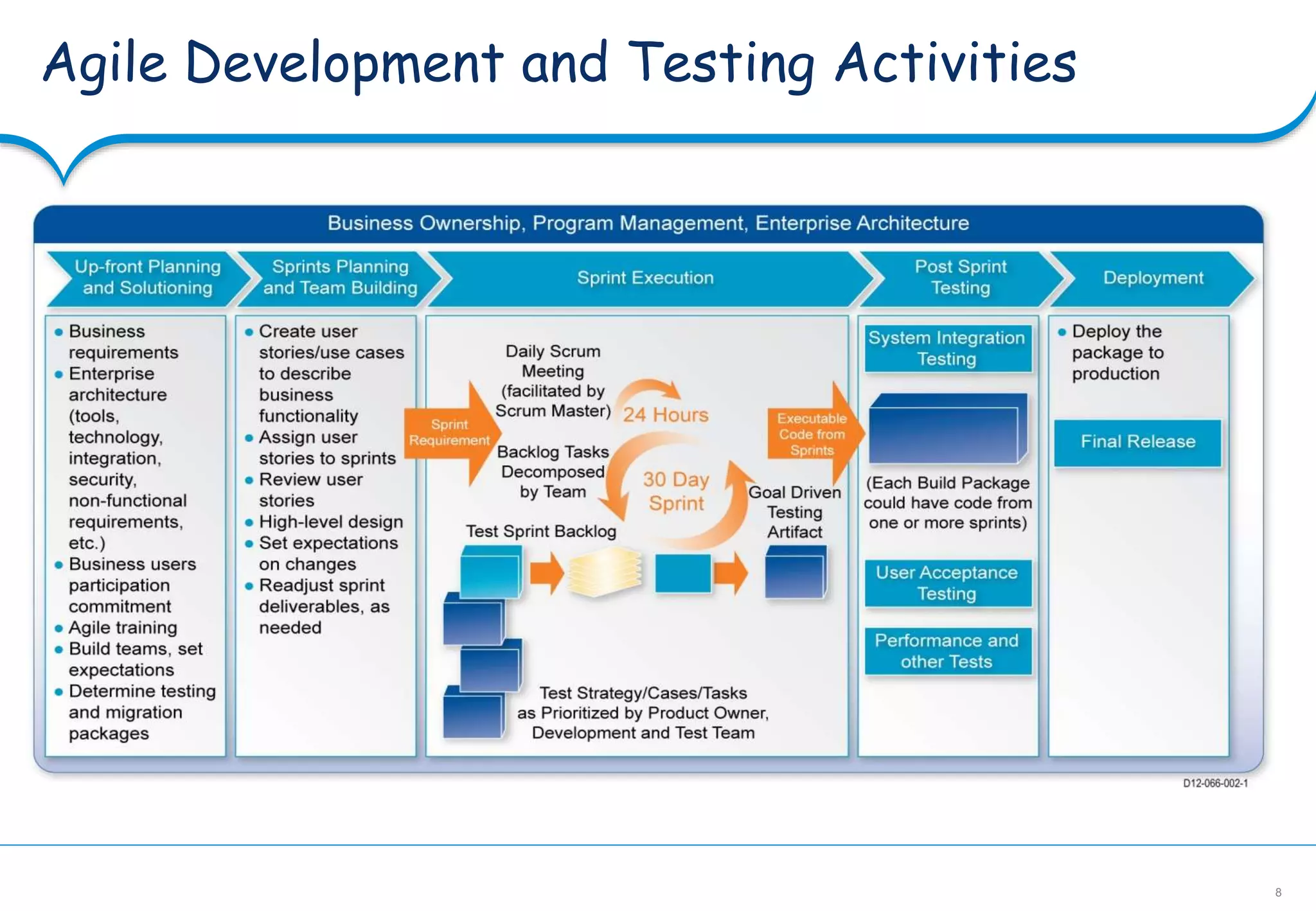

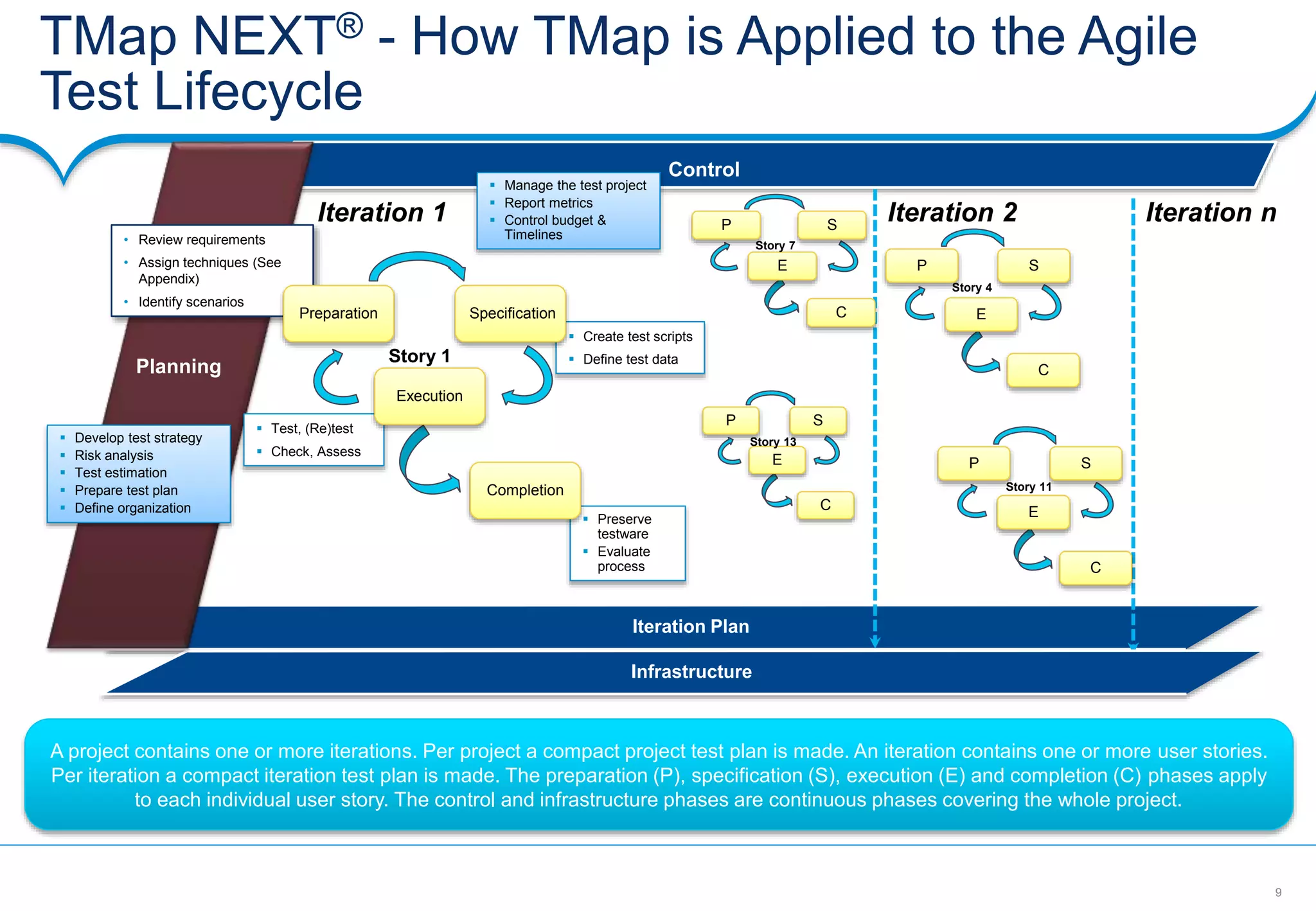

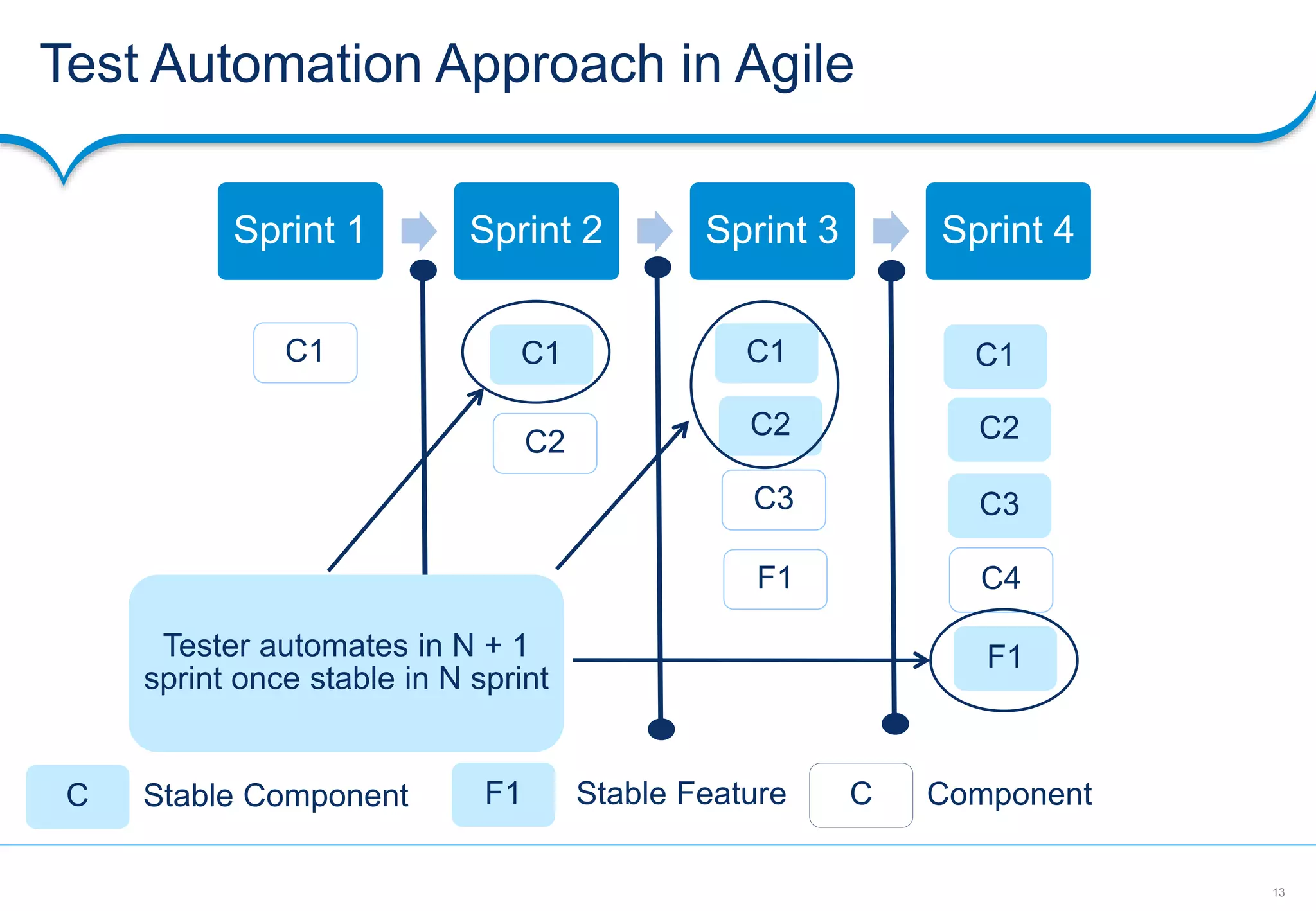

The document discusses test automation in agile environments. It covers the automation findings of Capgemini's World Quality Report, the evolution of business models and IT ecosystems, and the challenges of automation in agile. Key topics include testing embedded within the Scrum process with no separate testing schedule, the importance of test-driven development (TDD) and behavior-driven development (BDD), achieving high automation coverage, and using tools such as Cucumber, JUnit, and Selenium to support test automation. The document emphasizes that automation is necessary to achieve faster time to market and higher productivity in agile.

![14

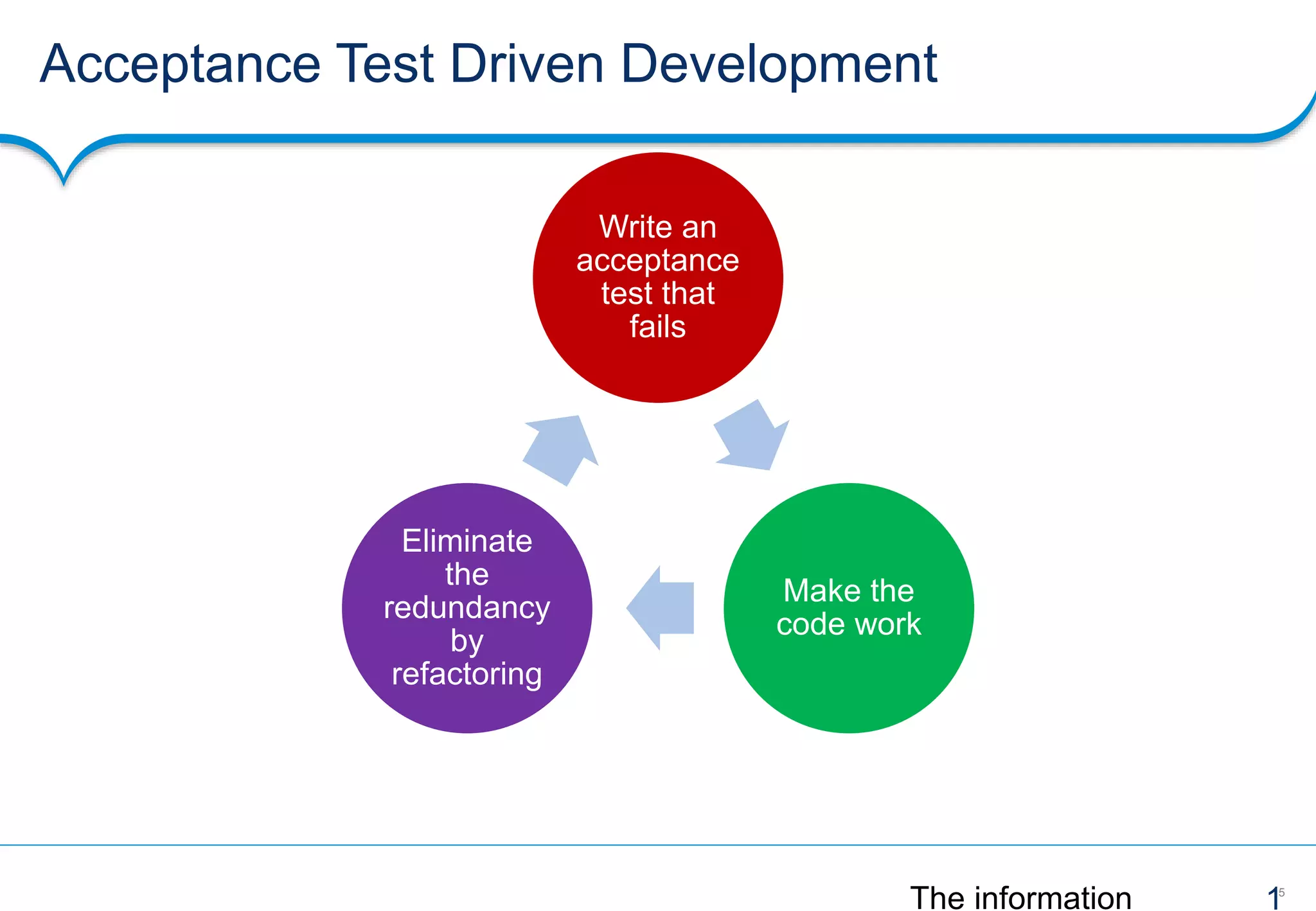

Test Driven Development [TDD]

Write a test

that fails

Make the

code work

Eliminate

the

redundancy

by

refactoring

The mantra of Test-Driven Development (TDD) is red, green, refactor.

JUnit

NUnit

HttpUnit](https://image.slidesharecdn.com/vaishalijayadeatagtragileautomationv0-160502130441/75/Test-Automation-in-Agile-14-2048.jpg)
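The red, green, refactor cycle can be sketched in a few lines. The example below is a hypothetical illustration, not taken from the slides. It uses Python's standard-library `unittest` module, which follows the same xUnit style as JUnit and NUnit. The leap-year rule and the names `is_leap_year_v1` and `is_leap_year` are assumptions chosen only to show the three steps.

```python
import unittest


# Red: the tests are written first. Until is_leap_year_v1 and is_leap_year
# exist, every test errors out with a NameError, i.e. the suite is "red".
class LeapYearTest(unittest.TestCase):
    def assert_all(self, year: int, expected: bool) -> None:
        # Check the first passing version and the refactored version against
        # the same expectation, so refactoring cannot silently change behaviour.
        for impl in (is_leap_year_v1, is_leap_year):
            with self.subTest(impl=impl.__name__, year=year):
                self.assertEqual(impl(year), expected)

    def test_divisible_by_4_is_leap(self) -> None:
        self.assert_all(2024, True)

    def test_not_divisible_by_4_is_not_leap(self) -> None:
        self.assert_all(2023, False)

    def test_century_not_divisible_by_400_is_not_leap(self) -> None:
        self.assert_all(1900, False)

    def test_century_divisible_by_400_is_leap(self) -> None:
        self.assert_all(2000, True)


# Green: the simplest code that makes every test pass, even if it is clumsy.
def is_leap_year_v1(year: int) -> bool:
    if year % 4 == 0:
        if year % 100 == 0:
            if year % 400 == 0:
                return True
            return False
        return True
    return False


# Refactor: remove the redundant nesting while the tests stay green.
def is_leap_year(year: int) -> bool:
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


if __name__ == "__main__":
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(LeapYearTest)
    unittest.TextTestRunner(verbosity=2).run(suite)
```

In day-to-day TDD, the refactored function would simply replace the first version. Both are kept here only to show that one unchanged test suite stays green before and after the refactoring step.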