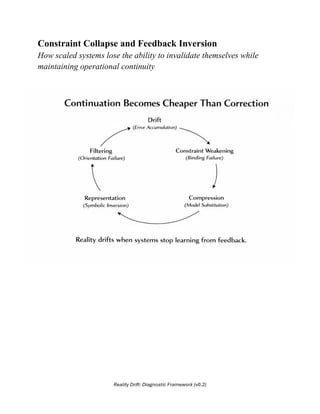

A concise diagnostic framework for identifying self-correction failure in complex systems. It presents operational questions and indicators for detecting constraint collapse, feedback inversion, optimization drift, and governance breakdown, and is intended for use in UX research, AI safety reviews, system post-mortems, risk assessment, and organizational learning.