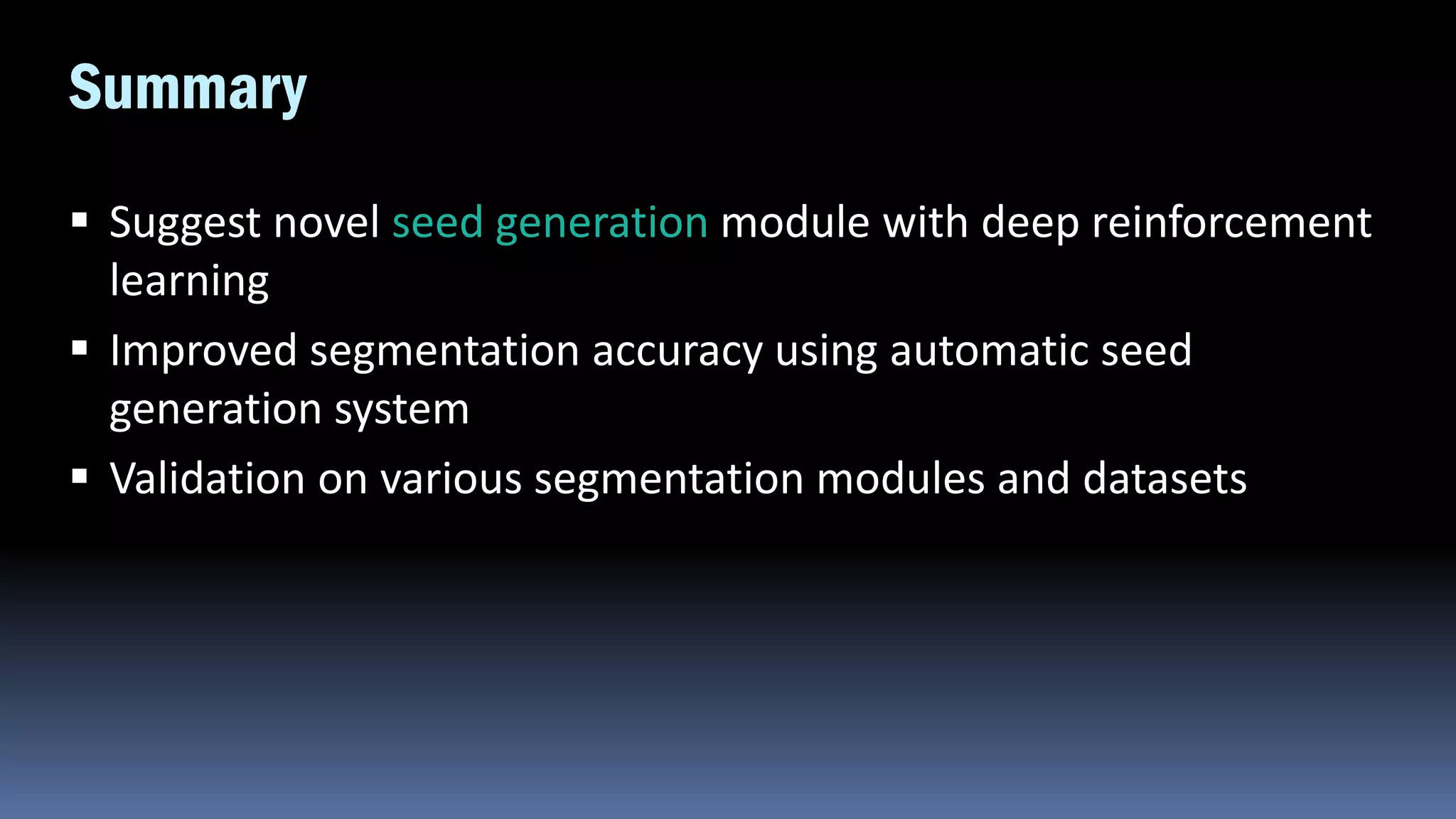

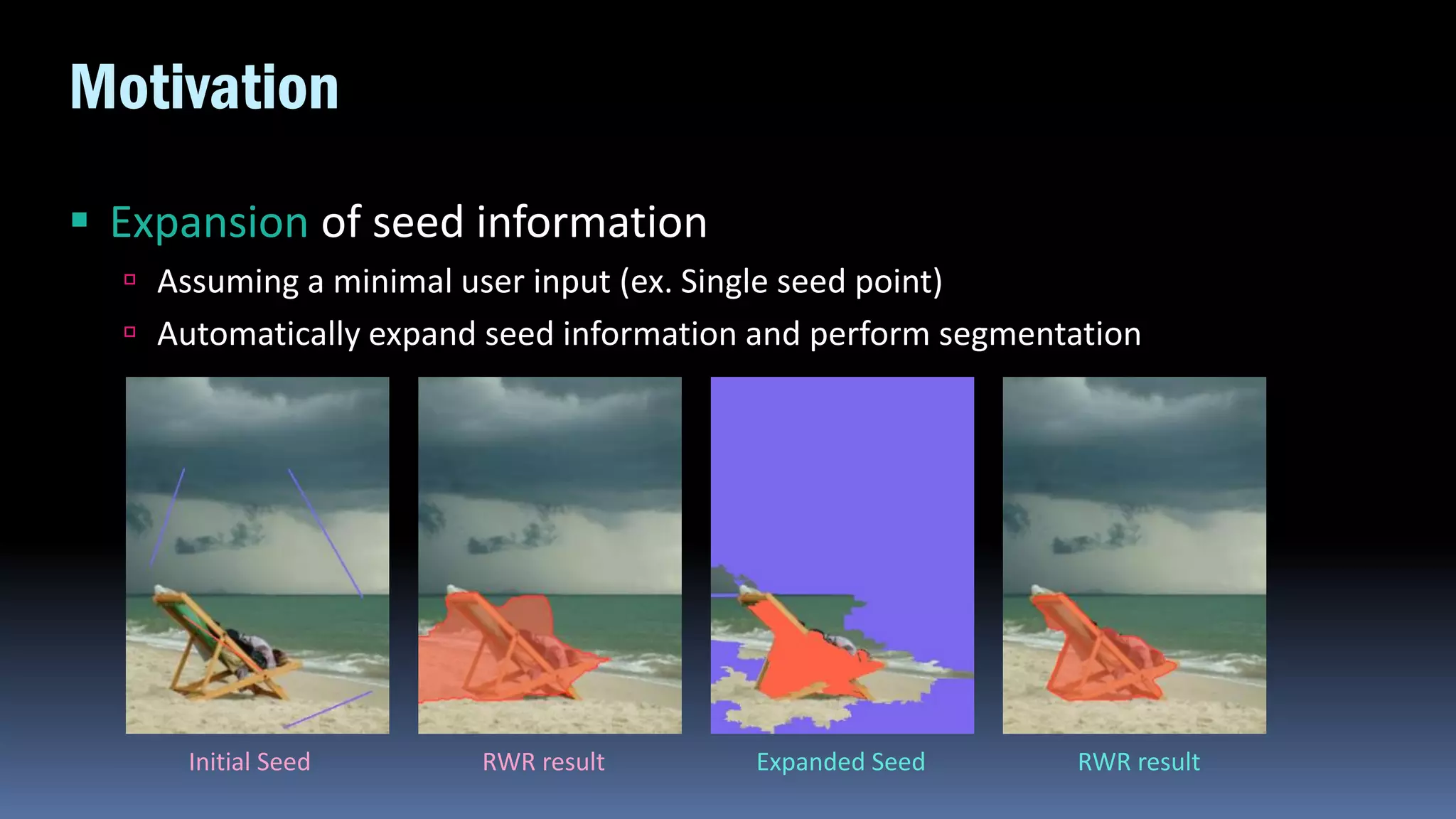

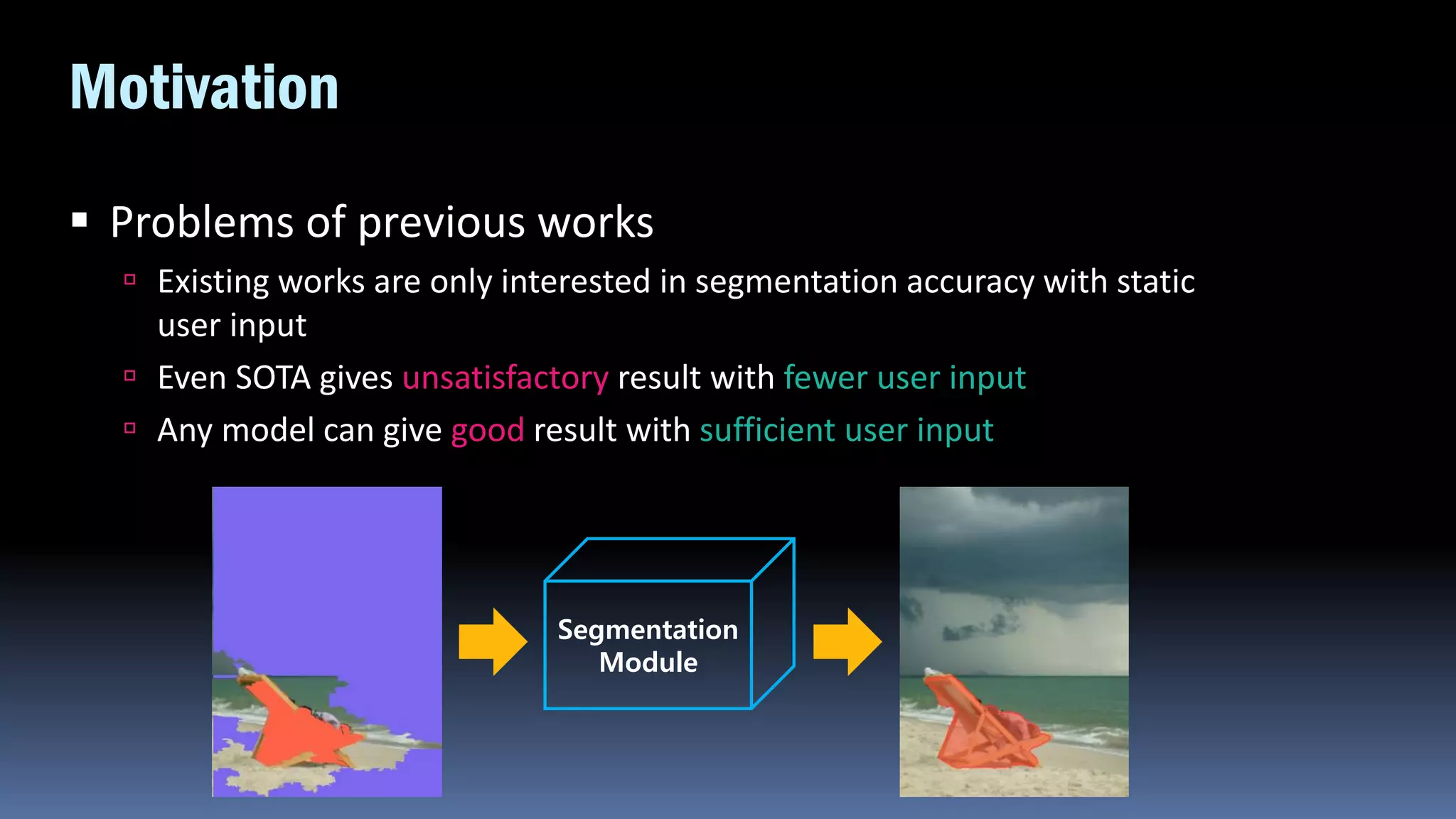

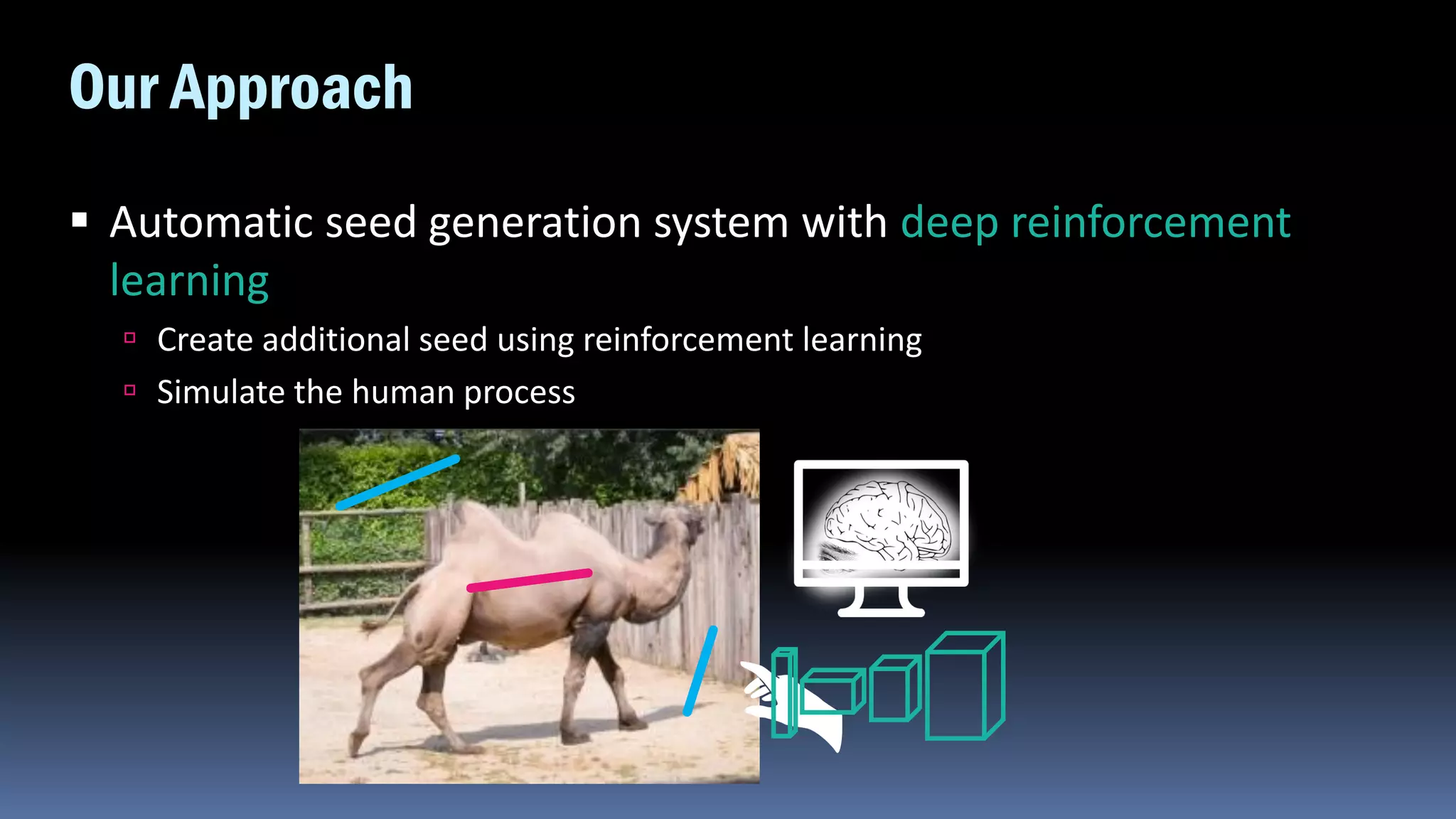

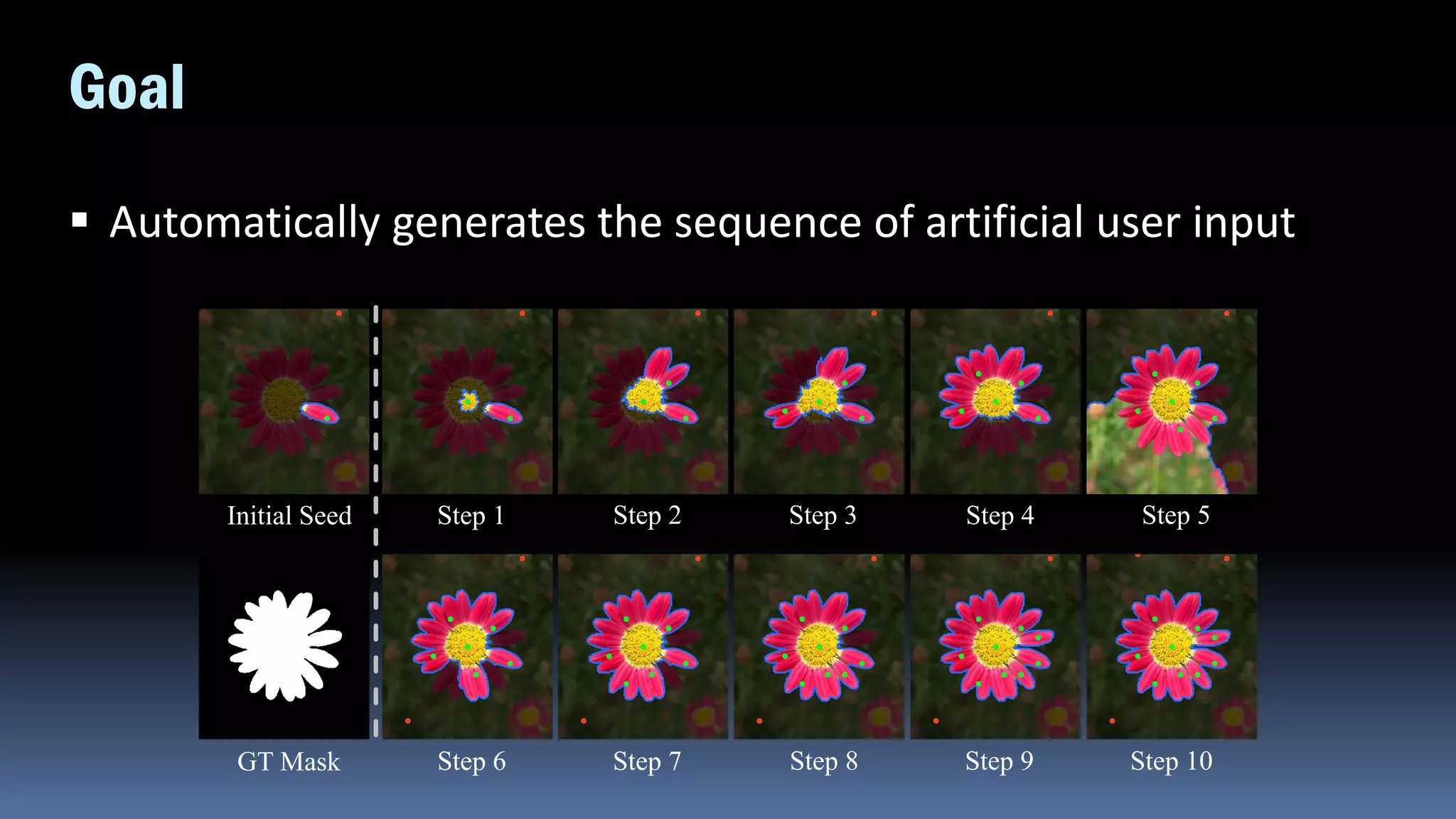

This presentation introduces an automatic seed generation system for interactive image segmentation based on deep reinforcement learning, aimed at improving segmentation accuracy with minimal user input. It notes that existing methods, including deep learning ones, still require many user clicks, and proposes a model that automatically expands the seed information to reduce this burden. On the MSRA10K dataset, the proposed system reaches a mean IoU of 60.97, compared with 39.72 for the Random Walk baseline started from the same initial seeds.

![Previous Approach

Early approaches: Graph Cut, GrabCut, Random Walk, Random Walk with Restart, Geodesic [1,2,3,4,5]

Using MRF optimization to extract the region of interest

Determine the region by comparing the correspondence between labeled and unlabeled pixels

[1] Y. Y. Boykov et al. Interactive graph cuts for optimal boundary & region segmentation of objects in nd images. In ICCV, 2001.

[2] C. Rother et al. Grabcut: Interactive foreground extraction using iterated graph cuts. In ToG. 2004.

[3] L. Grady. Random walks for image segmentation. In PAMI. 2006.

[4] T. H. Kim et al. Generative image segmentation using random walks with restart. In ECCV. 2008.

[5] V. Gulshan et al. Geodesic star convexity for interactive image segmentation. In CVPR. IEEE, 2010.](https://image.slidesharecdn.com/seednetautomaticseedgenerationwithdeepreinforcementlearningforrobustinteractivesegmentation-181119055545/75/Seed-net-automatic-seed-generation-with-deep-reinforcement-learning-for-robust-interactive-segmentation-7-2048.jpg)

![Previous Approach

Recent approaches: Deep Learning [1,2] …

Fine-tuning a semantic segmentation network (FCN [3]) for the interactive segmentation task

End-to-end training by concatenating seed point and image

Significant performance improvements compared to classical techniques

[1] N. Xu et al. Deep interactive object selection. In CVPR. 2016.

[2] J. Hao Liew et al. Regional interactive image segmentation networks. In ICCV. 2017.

[3] J. Long et al. Fully convolutional networks for semantic segmentation. In CVPR. 2015](https://image.slidesharecdn.com/seednetautomaticseedgenerationwithdeepreinforcementlearningforrobustinteractivesegmentation-181119055545/75/Seed-net-automatic-seed-generation-with-deep-reinforcement-learning-for-robust-interactive-segmentation-8-2048.jpg)
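The "concatenating seed point and image" step can be illustrated with a small sketch. The encoding below (one truncated Euclidean distance map per click type, stacked onto the RGB channels) is an assumption chosen for illustration; the slide itself does not specify how the seeds are encoded, and the helper names `distance_map` and `build_network_input` are hypothetical.

```python
import math


def distance_map(height, width, clicks, cap=255.0):
    """Per-pixel Euclidean distance to the nearest click, truncated at `cap`.

    Assumption: with no clicks, every pixel gets the cap value.
    """
    rows = []
    for y in range(height):
        row = []
        for x in range(width):
            nearest = min(
                (math.hypot(y - cy, x - cx) for cy, cx in clicks),
                default=cap,
            )
            row.append(min(nearest, cap))
        rows.append(row)
    return rows


def build_network_input(image, fg_clicks, bg_clicks):
    """Stack 3 image channels with foreground and background seed maps.

    `image` is a list of 3 channels, each a height x width list of lists.
    Returns a list of 5 channels.
    """
    height, width = len(image[0]), len(image[0][0])
    return image + [
        distance_map(height, width, fg_clicks),
        distance_map(height, width, bg_clicks),
    ]
```

The resulting 5-channel input is what a segmentation network would consume in place of the plain 3-channel image.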

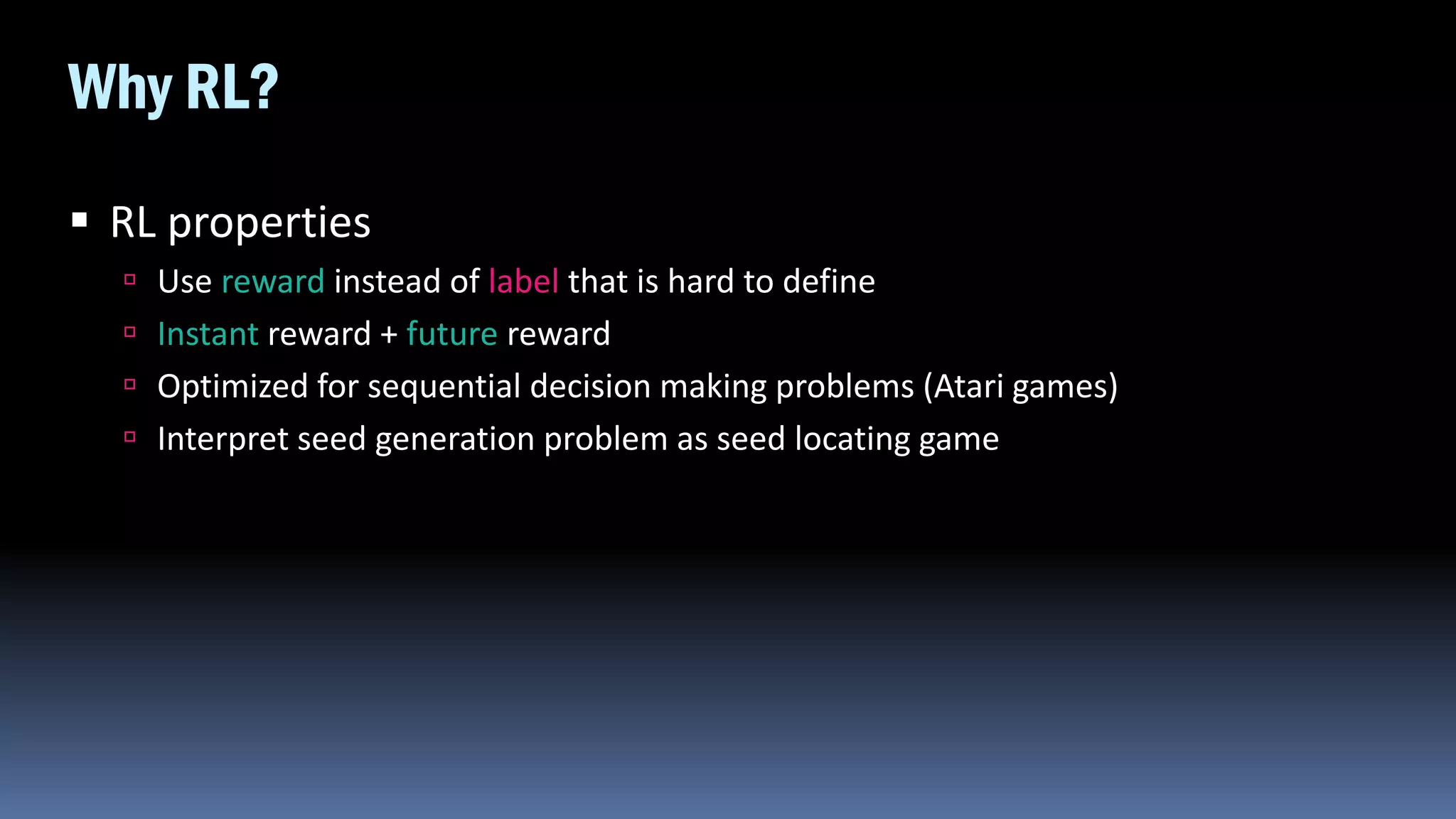

![Challenges

Minimizing user interaction

Main metric of segmentation : Accuracy %, Click #

Reducing the input burden of the user

Deep learning frameworks still require a large number of clicks

| Segmentation | Pascal | Grabcut | Berkeley | MSCOCO (seen) | MSCOCO (unseen) |
| --- | --- | --- | --- | --- | --- |
| Graph Cut [1] | 15.06 | 11.10 | 14.33 | 18.67 | 17.80 |
| Random Walk [2] | 11.37 | 12.30 | 14.02 | 13.91 | 11.53 |
| Geodesic [3] | 11.73 | 8.38 | 12.57 | 14.37 | 12.45 |
| iFCN [4] | 6.88 | 6.04 | 8.65 | 8.31 | 7.82 |
| RIS-Net [5] | 5.12 | 5.00 | 6.03 | 5.98 | 6.44 |

[1] Y. Y. Boykov et al. Interactive graph cuts for optimal boundary & region segmentation of objects in nd images. In ICCV, 2001.

[2] L. Grady. Random walks for image segmentation. In PAMI. 2006.

[3] V. Gulshan et al. Geodesic star convexity for interactive image segmentation. In CVPR. IEEE, 2010.

[4] N. Xu et al. Deep interactive object selection. In CVPR. 2016.

[5] J. Hao Liew et al. Regional interactive image segmentation networks. In ICCV. 2017.](https://image.slidesharecdn.com/seednetautomaticseedgenerationwithdeepreinforcementlearningforrobustinteractivesegmentation-181119055545/75/Seed-net-automatic-seed-generation-with-deep-reinforcement-learning-for-robust-interactive-segmentation-9-2048.jpg)
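As a rough illustration of the "Click #" metric, the sketch below counts how many clicks a method needs before its accuracy reaches a target. The target IoU, the cap of 20 clicks, and the function names are assumptions for illustration; the slide does not state the thresholds used for the table.

```python
def clicks_to_reach(iou_after_each_click, target_iou, max_clicks=20):
    """Number of clicks needed until IoU first reaches `target_iou`.

    `iou_after_each_click[i]` is the IoU after click i + 1.
    If the target is never reached, the count is capped at `max_clicks`.
    """
    for n, iou in enumerate(iou_after_each_click[:max_clicks], start=1):
        if iou >= target_iou:
            return n
    return max_clicks


def mean_clicks(histories, target_iou, max_clicks=20):
    """Average click count over a dataset of per-image IoU histories."""
    counts = [clicks_to_reach(h, target_iou, max_clicks) for h in histories]
    return sum(counts) / len(counts)
```

A lower mean click count means less user effort for the same target accuracy.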

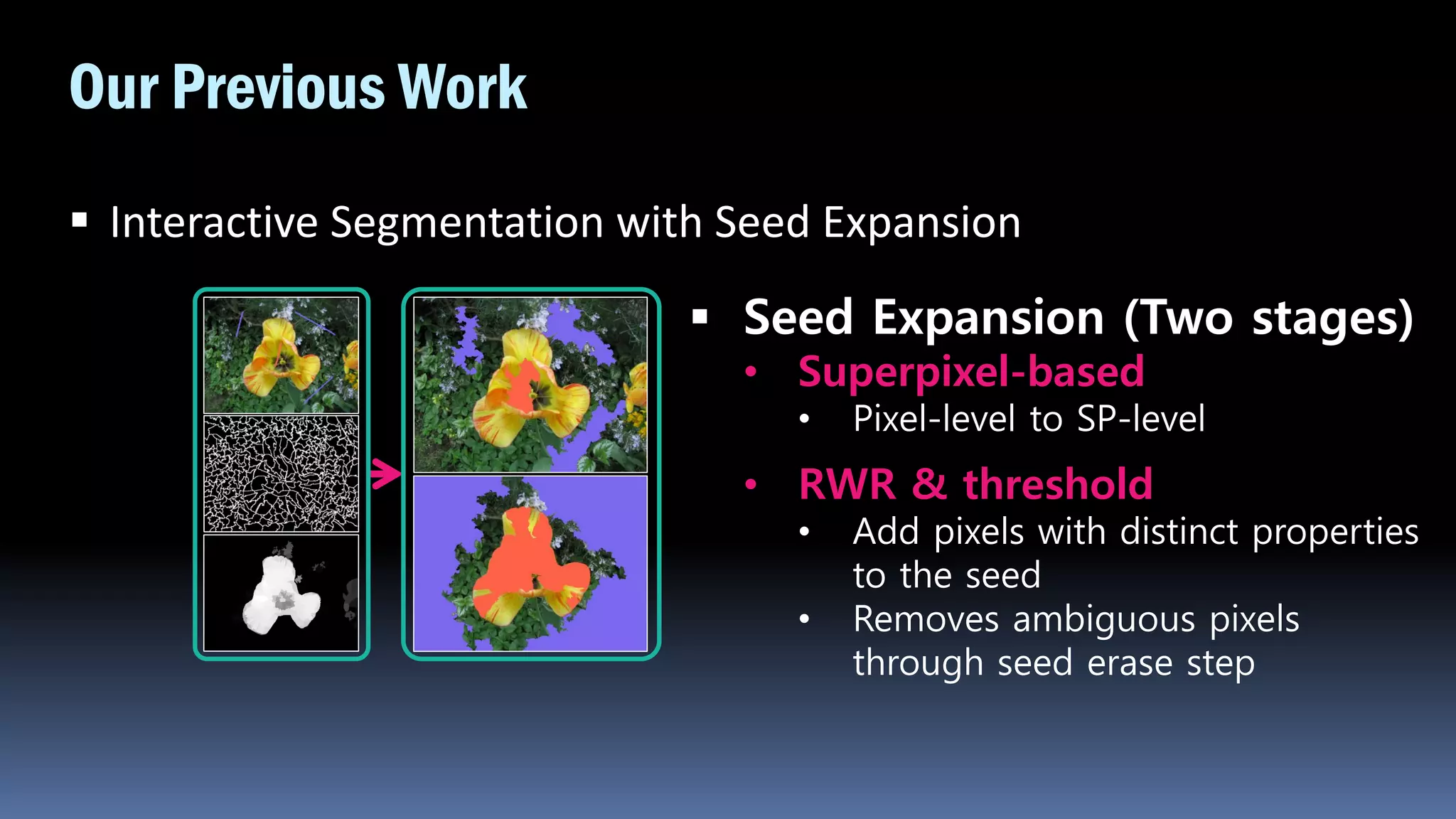

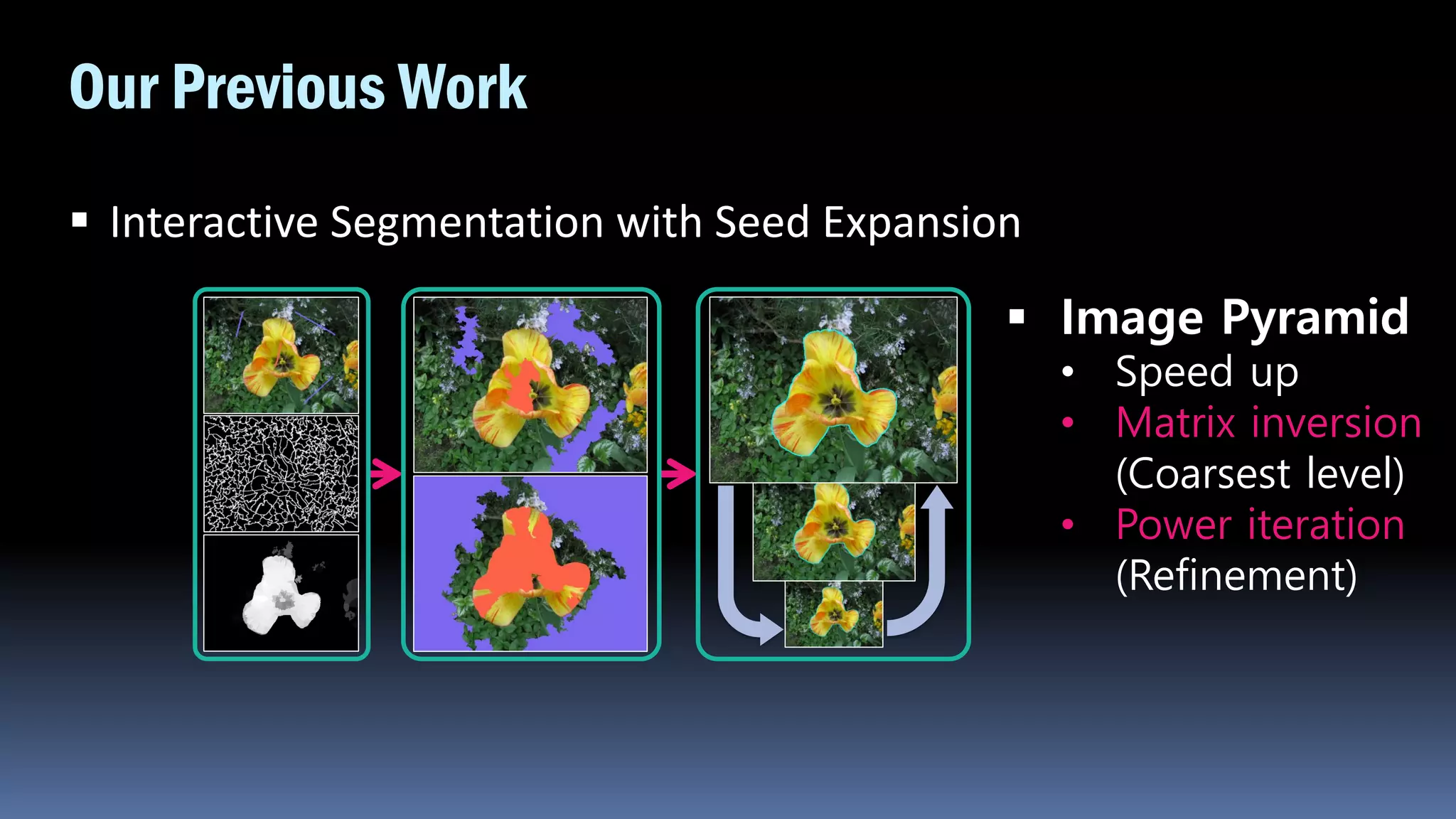

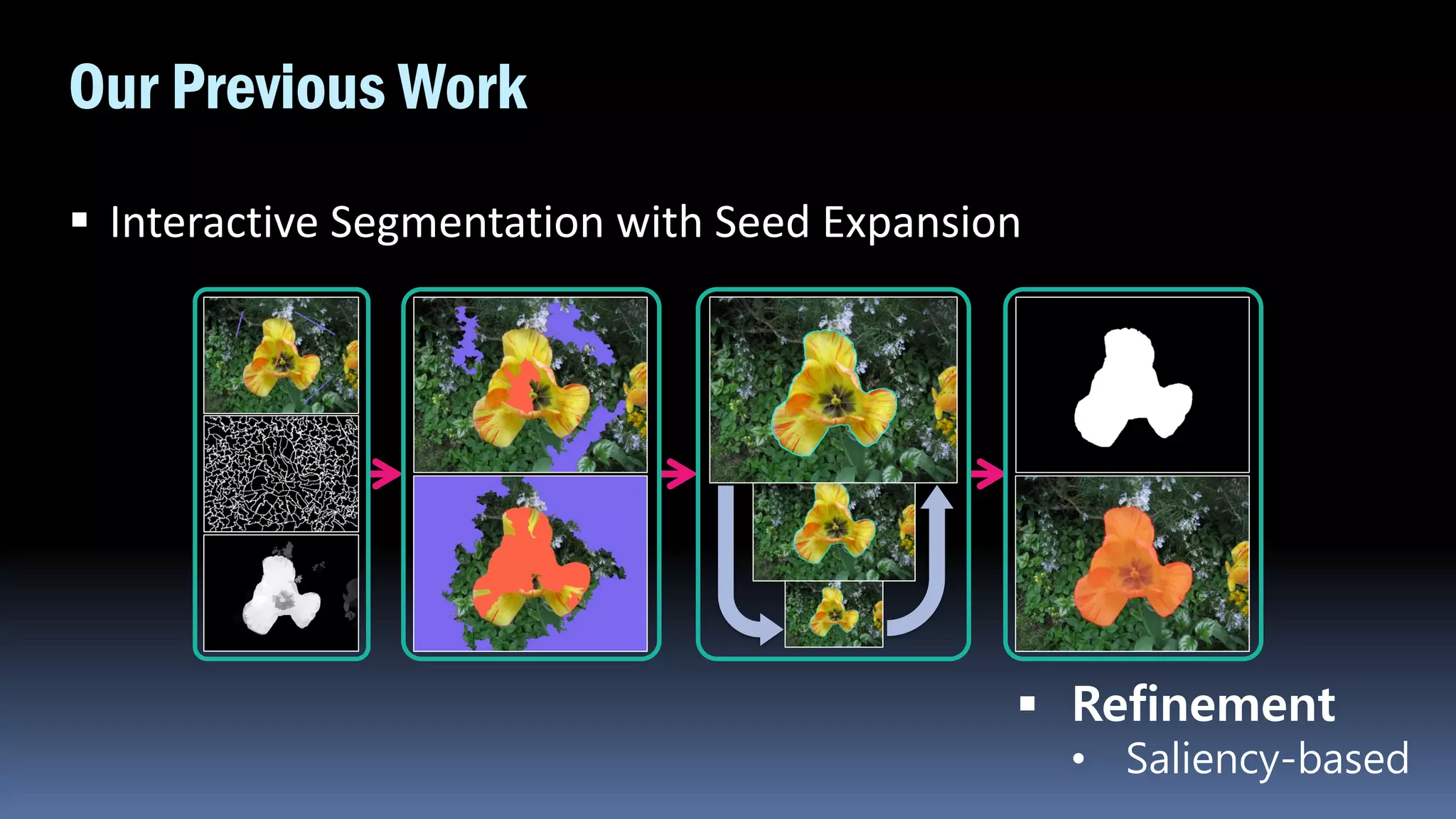

![Our Previous Work

Results

| Method | F-score (%) |
| --- | --- |
| RWR [1] | 74.48 |
| Ours | 80.32 |

Limitation

Heuristic approach

Insufficient accuracy

[1] T. H. Kim et al. Generative image segmentation using random walks with restart. In ECCV. 2008.

Figure panels: Image & Scribble, RWR Results, Expanded Seed, Our Results](https://image.slidesharecdn.com/seednetautomaticseedgenerationwithdeepreinforcementlearningforrobustinteractivesegmentation-181119055545/75/Seed-net-automatic-seed-generation-with-deep-reinforcement-learning-for-robust-interactive-segmentation-18-2048.jpg)
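For reference, an F-score on binary masks can be computed as in the sketch below. It assumes the balanced F1 form (equal weight on precision and recall); the slide does not say which weighting was used, and `f_score` is a hypothetical helper name.

```python
def f_score(pred, gt):
    """Balanced F-score (F1) between two binary masks given as lists of rows."""
    tp = fp = fn = 0
    for pred_row, gt_row in zip(pred, gt):
        for p, g in zip(pred_row, gt_row):
            if p and g:
                tp += 1  # foreground predicted and correct
            elif p and not g:
                fp += 1  # foreground predicted, background in ground truth
            elif g and not p:
                fn += 1  # foreground missed
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)
```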

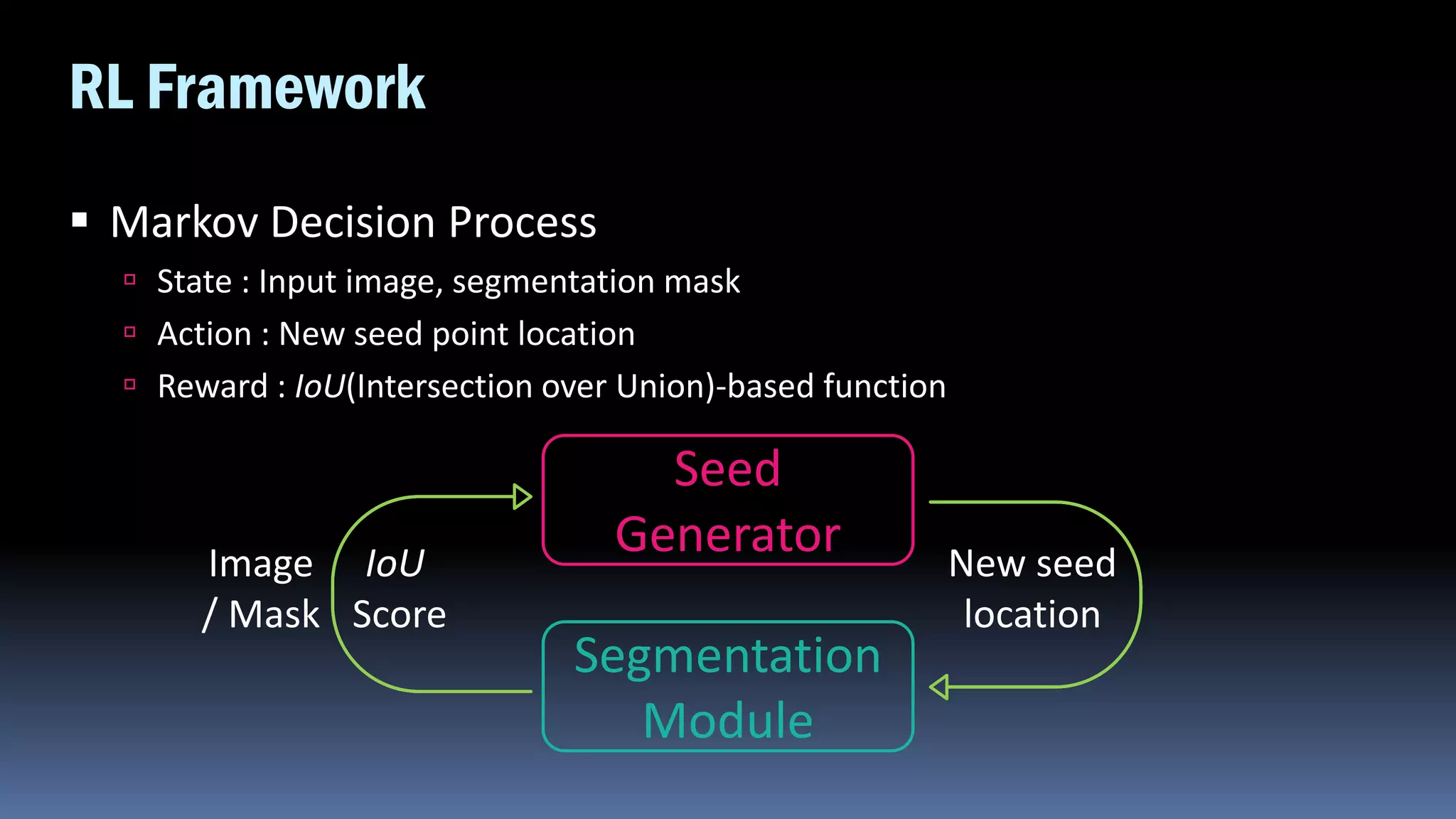

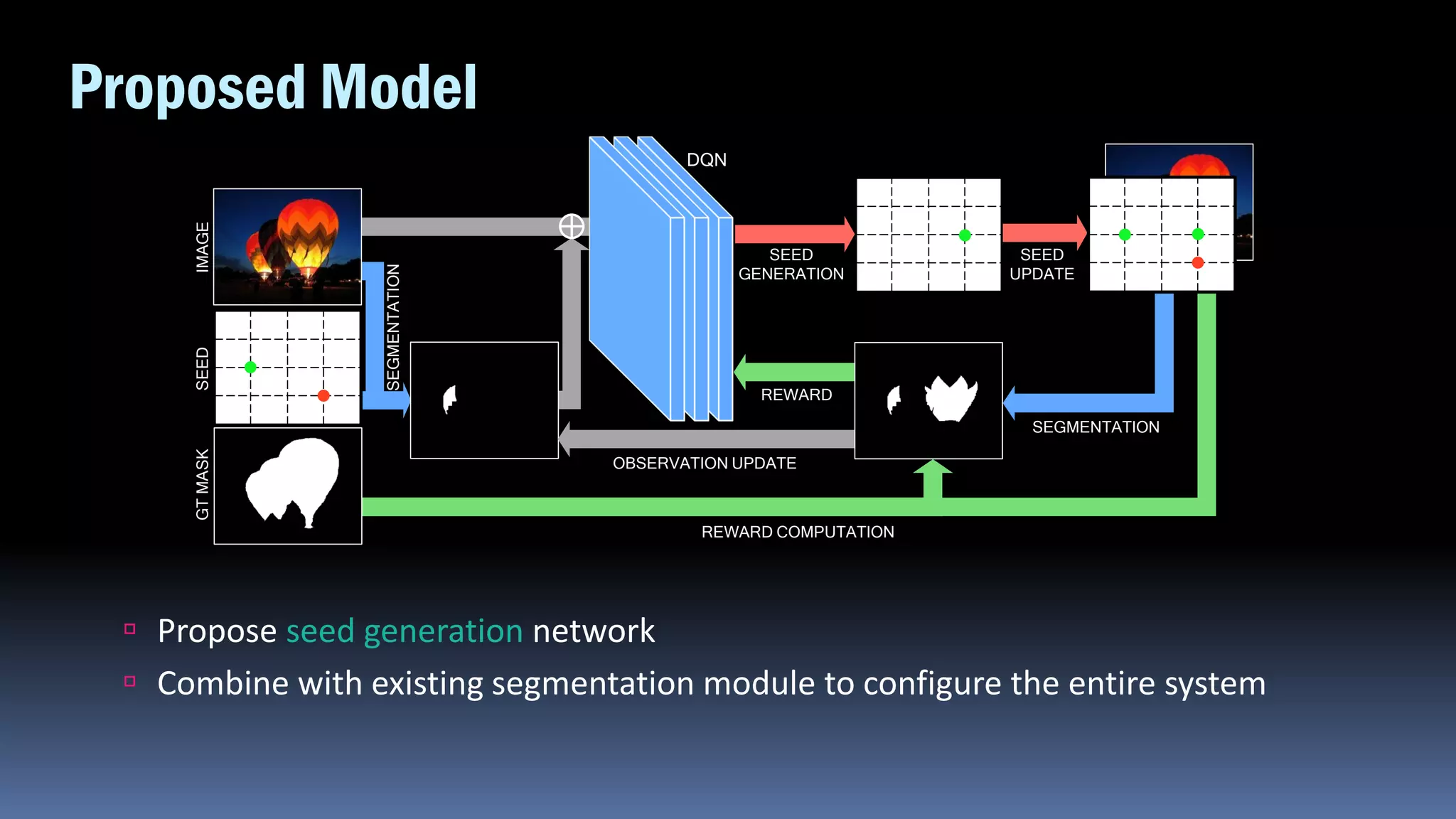

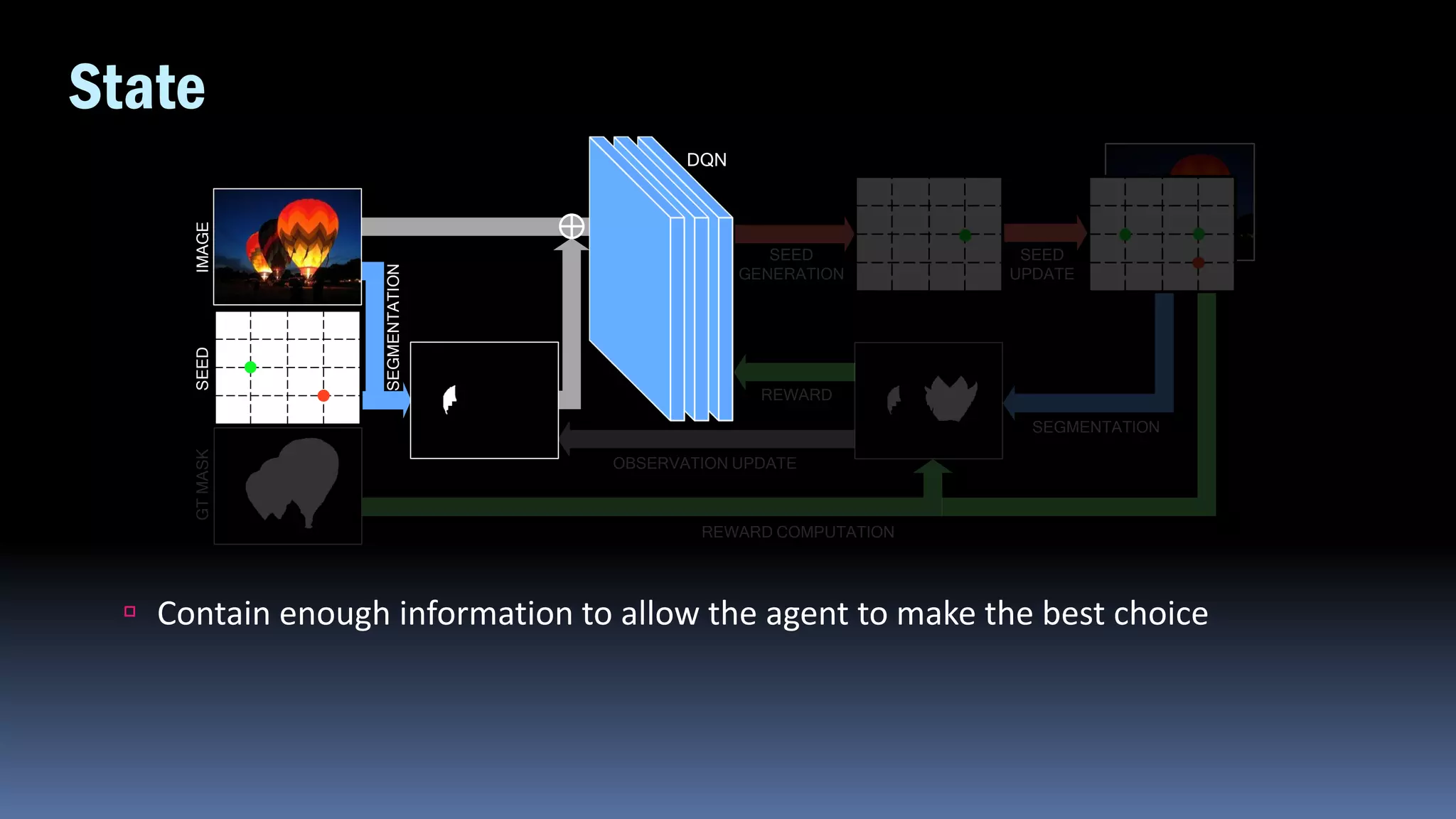

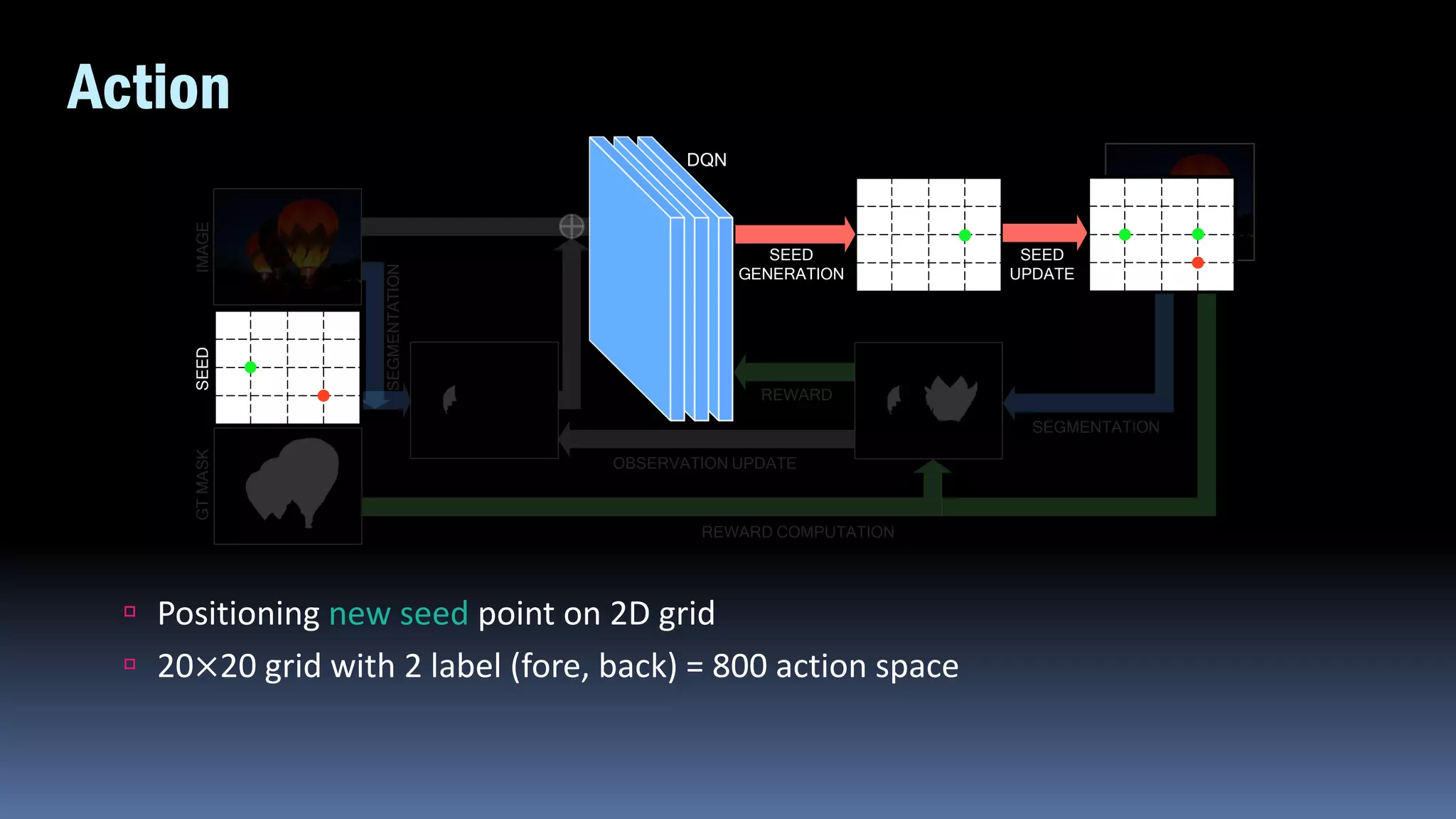

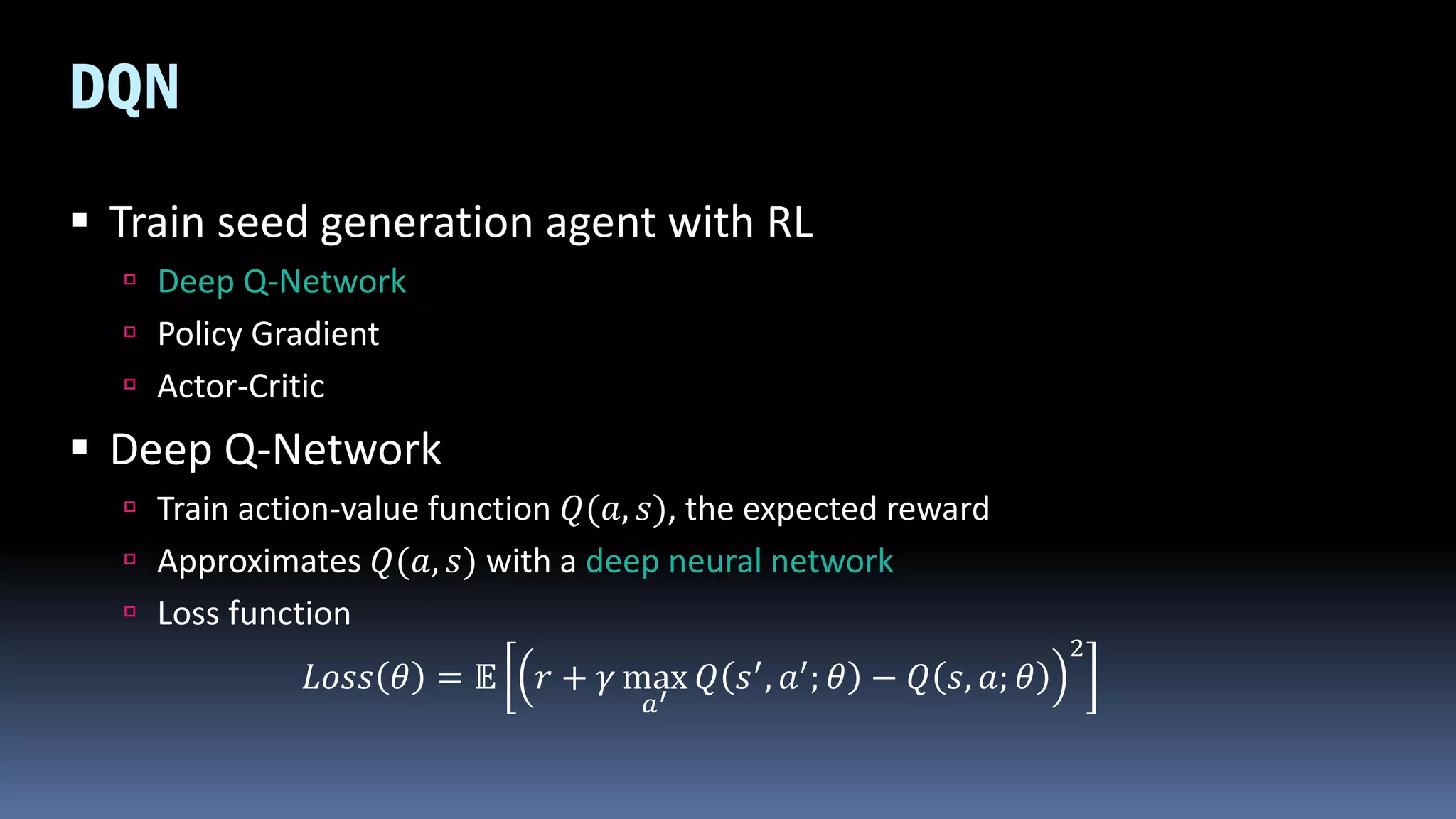

![DQN

DQN architecture

Basic architecture from [1]

Double DQN [2]

Dueling DQN [3]

[1] V. Mnih et al. Human-level control through deep reinforcement learning. In Nature. 2015.

[2] H. Van Hasselt et al. Deep reinforcement learning with double q-learning. In AAAI, 2016.

[3] Z. Wang et al. Dueling network architectures for deep reinforcement learning. In ICML, 2016.](https://image.slidesharecdn.com/seednetautomaticseedgenerationwithdeepreinforcementlearningforrobustinteractivesegmentation-181119055545/75/Seed-net-automatic-seed-generation-with-deep-reinforcement-learning-for-robust-interactive-segmentation-37-2048.jpg)
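The two DQN extensions named on this slide can be summarized in a few lines. This is a minimal sketch of the standard Double DQN target and the dueling aggregation from the cited papers, using plain Python lists in place of network outputs; the function names are illustrative, and nothing here reflects the specific network used in the presentation.

```python
def double_dqn_target(reward, gamma, q_online_next, q_target_next, done):
    """Double DQN target: the online network selects the next action,
    the target network evaluates it."""
    if done:
        return reward
    best_action = max(range(len(q_online_next)), key=q_online_next.__getitem__)
    return reward + gamma * q_target_next[best_action]


def dueling_q_values(state_value, advantages):
    """Dueling aggregation: Q(s, a) = V(s) + A(s, a) - mean over a of A(s, a)."""
    mean_advantage = sum(advantages) / len(advantages)
    return [state_value + a - mean_advantage for a in advantages]
```

Decoupling action selection from evaluation reduces the overestimation of Q-values, while the dueling split lets the network learn the state value separately from per-action advantages.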

![Experiments

MSRA10K dataset

Saliency task

10,000 images (9,000 train, 1,000 test)

Initial seed generated from GT mask

1 foreground, 1 background seed point

Our model

Segmentation module : Random Walk [1]

Stop after generating 10 seeds

[1] L. Grady. Random walks for image segmentation. In PAMI. 2006.](https://image.slidesharecdn.com/seednetautomaticseedgenerationwithdeepreinforcementlearningforrobustinteractivesegmentation-181119055545/75/Seed-net-automatic-seed-generation-with-deep-reinforcement-learning-for-robust-interactive-segmentation-38-2048.jpg)
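The initial seed setup (one foreground and one background point taken from the GT mask) might look like the sketch below. Uniform random sampling is an assumption; the slide only says the seeds are generated from the GT mask. `sample_initial_seeds` is a hypothetical helper.

```python
import random


def sample_initial_seeds(gt_mask, rng):
    """Pick one foreground and one background pixel from a binary GT mask.

    `gt_mask` is a list of rows of 0/1 values; `rng` is a random.Random.
    Returns ((fg_y, fg_x), (bg_y, bg_x)).
    """
    fg = [(y, x) for y, row in enumerate(gt_mask) for x, v in enumerate(row) if v]
    bg = [(y, x) for y, row in enumerate(gt_mask) for x, v in enumerate(row) if not v]
    if not fg or not bg:
        raise ValueError("mask must contain both foreground and background")
    return rng.choice(fg), rng.choice(bg)
```

Passing a seeded `random.Random` makes each seed set reproducible, which is convenient when comparing methods on several fixed seed sets.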

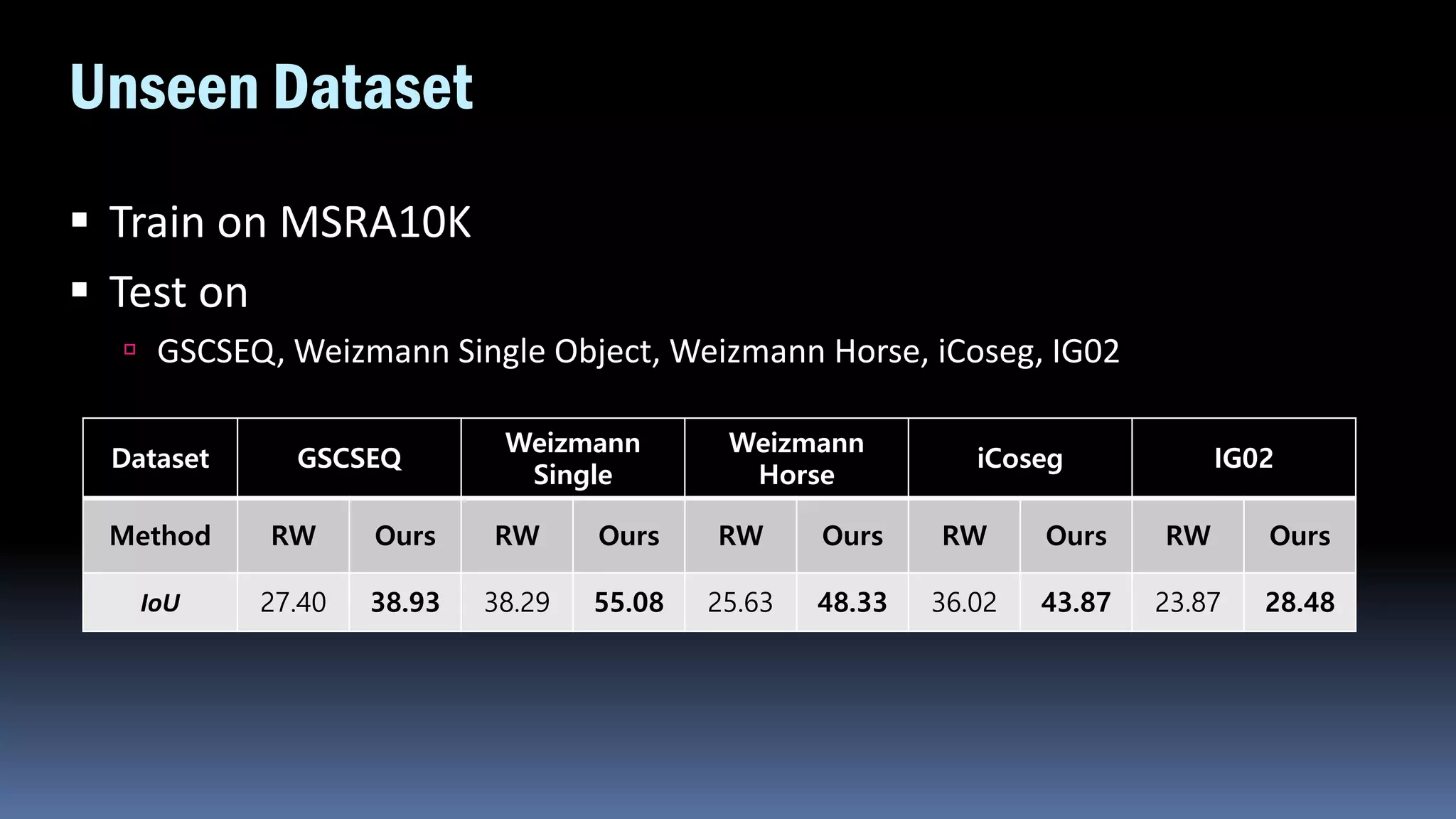

![MSRA10K

Quantitative Results

5 random seed sets

Intersection-over-Union score comparison

| Method | Set 1 | Set 2 | Set 3 | Set 4 | Set 5 | Mean |
| --- | --- | --- | --- | --- | --- | --- |
| RW [1] | 39.59 | 39.65 | 39.71 | 39.77 | 39.89 | 39.72 |
| Ours | 60.70 | 60.12 | 61.28 | 61.87 | 60.90 | 60.97 |

[1] L. Grady. Random walks for image segmentation. In PAMI. 2006.](https://image.slidesharecdn.com/seednetautomaticseedgenerationwithdeepreinforcementlearningforrobustinteractivesegmentation-181119055545/75/Seed-net-automatic-seed-generation-with-deep-reinforcement-learning-for-robust-interactive-segmentation-39-2048.jpg)
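The Intersection-over-Union score and the reported means can be reproduced with a short sketch. The `iou` helper is a generic definition on binary masks, not the authors' evaluation code; the per-set numbers are copied from the slide.

```python
def iou(pred, gt):
    """Intersection-over-Union between two binary masks (lists of rows)."""
    intersection = union = 0
    for pred_row, gt_row in zip(pred, gt):
        for p, g in zip(pred_row, gt_row):
            if p and g:
                intersection += 1
            if p or g:
                union += 1
    # Convention assumed here: two empty masks count as a perfect match.
    return intersection / union if union else 1.0


# Per-set IoU scores (%) as reported on the slide.
rw_sets = [39.59, 39.65, 39.71, 39.77, 39.89]
ours_sets = [60.70, 60.12, 61.28, 61.87, 60.90]

rw_mean = sum(rw_sets) / len(rw_sets)
ours_mean = sum(ours_sets) / len(ours_sets)
print(f"RW mean: {rw_mean:.2f}, Ours mean: {ours_mean:.2f}")
```

The per-set values average to the reported means of 39.72 and 60.97, and the small spread across the five seed sets suggests the gap does not depend on a particular choice of initial seeds.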

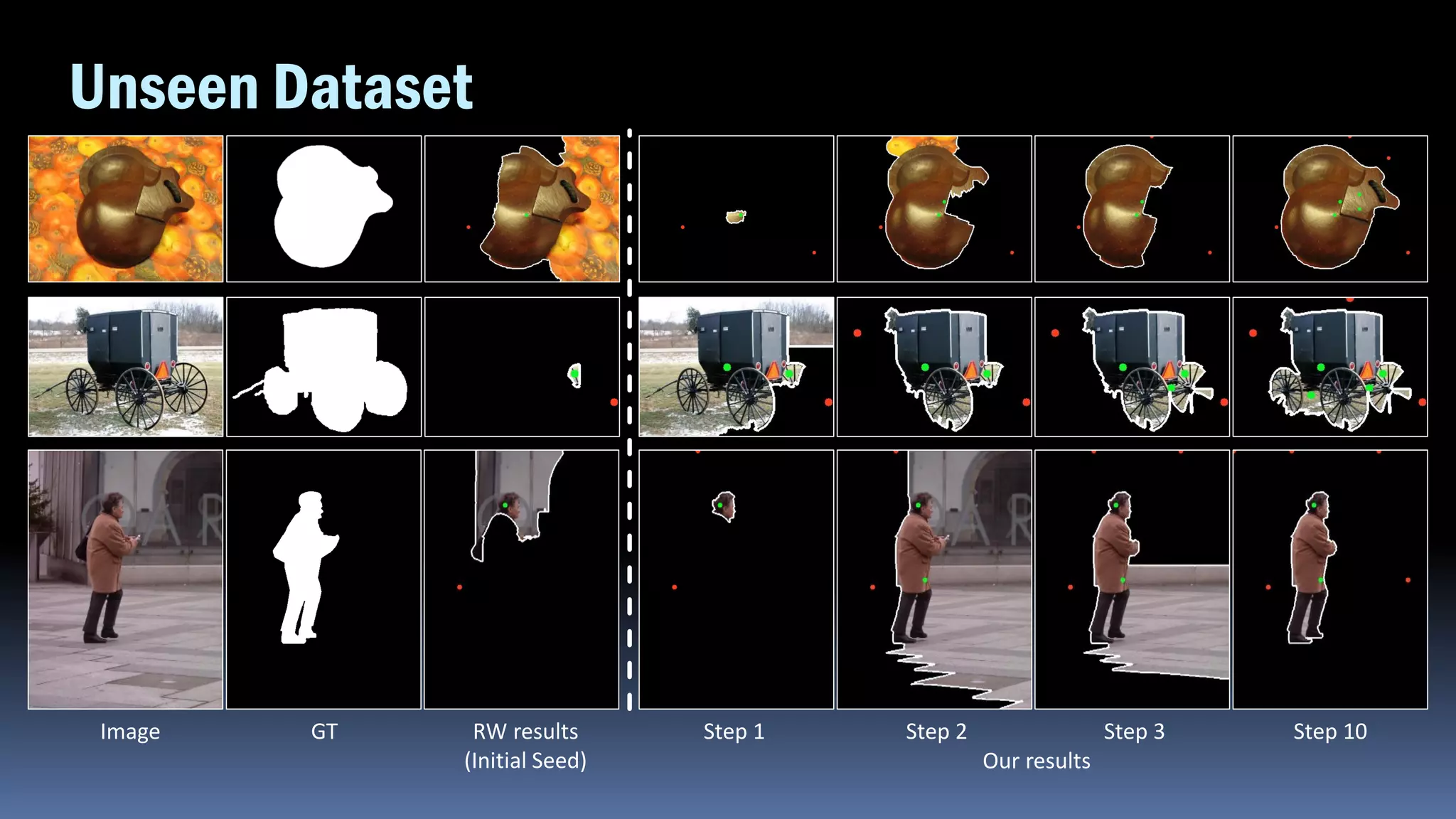

![MSRA10K

Figure columns: Image, GT, RW results [1] (Initial Seed), and our results at Step 1, Step 2, Step 3, Step 10

[1] L. Grady. Random walks for image segmentation. In PAMI. 2006.](https://image.slidesharecdn.com/seednetautomaticseedgenerationwithdeepreinforcementlearningforrobustinteractivesegmentation-181119055545/75/Seed-net-automatic-seed-generation-with-deep-reinforcement-learning-for-robust-interactive-segmentation-40-2048.jpg)

![MSRA10K

Comparison with supervised methods

Same conditions (network configuration, trained from scratch)

Directly output segmentation mask

Input type : image only (FCN), with seed (iFCN)

| Method | FCN [1] | iFCN [2] | Ours |
| --- | --- | --- | --- |
| IoU | 37.20 | 44.60 | 60.97 |

[1] J. Long et al. Fully convolutional networks for semantic segmentation. In CVPR. 2015

[2] N. Xu et al. Deep interactive object selection. In CVPR. 2016.](https://image.slidesharecdn.com/seednetautomaticseedgenerationwithdeepreinforcementlearningforrobustinteractivesegmentation-181119055545/75/Seed-net-automatic-seed-generation-with-deep-reinforcement-learning-for-robust-interactive-segmentation-41-2048.jpg)

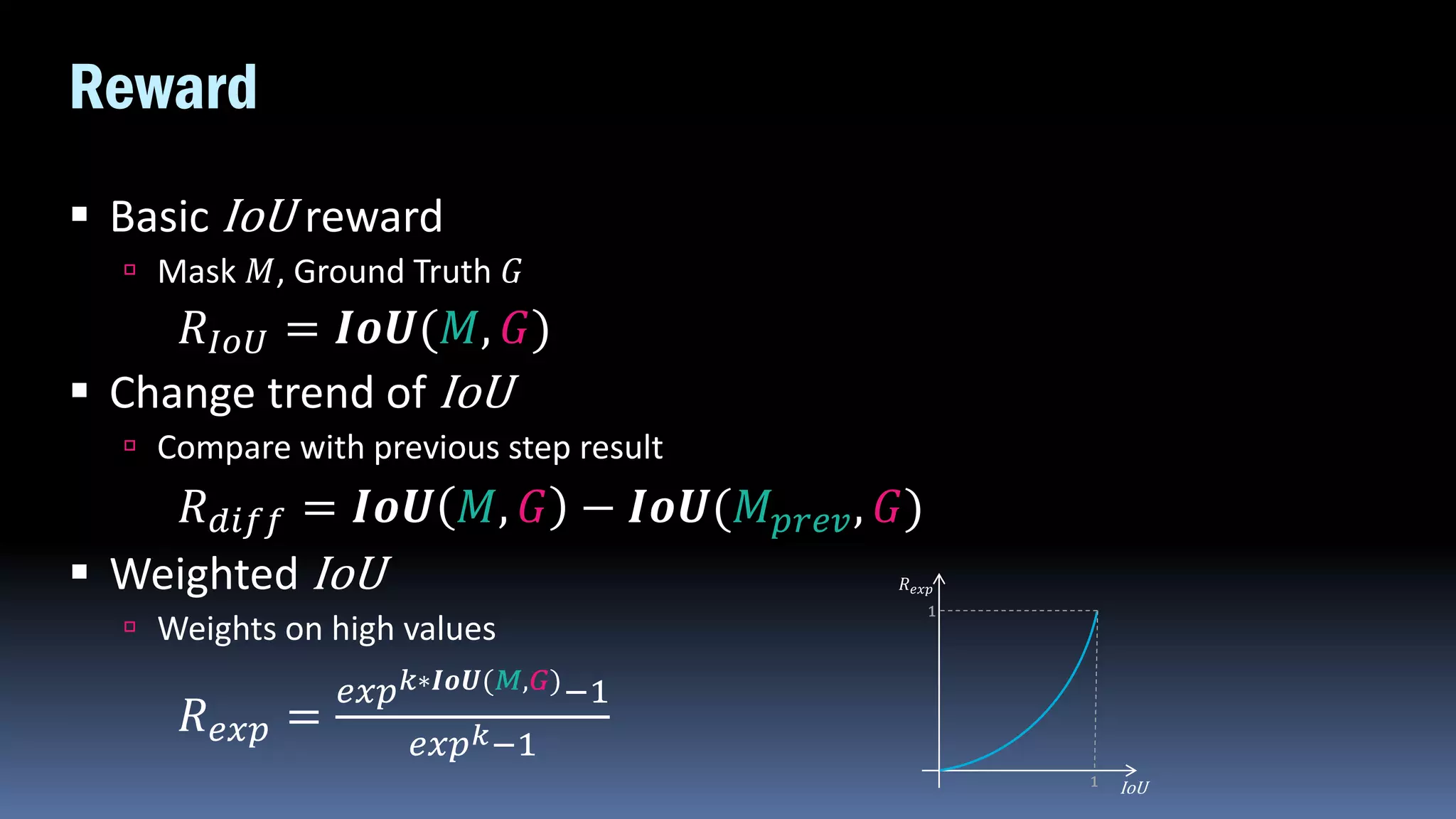

![Ablation Study

Reward function

| Method | RW [1] | $R_{IoU}$ | $R_{diff}$ | $R_{si}$ |
| --- | --- | --- | --- | --- |
| IoU | 39.72 | 42.55 | 44.45 | 60.97 |

Figure panels: $R_{IoU}$, $R_{diff}$, $R_{si}$

[1] L. Grady. Random walks for image segmentation. In PAMI. 2006.](https://image.slidesharecdn.com/seednetautomaticseedgenerationwithdeepreinforcementlearningforrobustinteractivesegmentation-181119055545/75/Seed-net-automatic-seed-generation-with-deep-reinforcement-learning-for-robust-interactive-segmentation-42-2048.jpg)
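The reward definitions themselves are on the slide image and cannot be recovered from this transcript. As a rough sketch only, the code below assumes that the first variant rewards the current IoU directly and that the second rewards the sign of the IoU change between consecutive steps. Both readings are assumptions based on the reward names, and the third variant is not sketched because nothing in the text describes it.

```python
def reward_iou(iou_now):
    """Assumed IoU-based reward: the reward is the current IoU itself."""
    return iou_now


def reward_diff(iou_now, iou_prev):
    """Assumed difference-based reward: +1 if the IoU improved over the
    previous step, -1 otherwise (including no change)."""
    return 1.0 if iou_now > iou_prev else -1.0
```

Under these assumptions, the first reward is always non-negative, while the second explicitly penalizes a seed that fails to improve the mask.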

![Ablation Study

Other segmentation module

| Method | GC [1] | Ours (GC) | GSC [2] | Ours (GSC) | RWR [3] | Ours (RWR) |
| --- | --- | --- | --- | --- | --- | --- |
| IoU | 38.44 | 52.10 | 58.34 | 63.48 | 35.71 | 53.04 |

Figure labels: Image, GT, Initial, Ours; GC, GSC, RWR

[1] C. Rother et al. Grabcut: Interactive foreground extraction using iterated graph cuts. In ToG. 2004.

[2] V. Gulshan et al. Geodesic star convexity for interactive image segmentation. In CVPR. IEEE, 2010.

[3] T. H. Kim et al. Generative image segmentation using random walks with restart. In ECCV. 2008.](https://image.slidesharecdn.com/seednetautomaticseedgenerationwithdeepreinforcementlearningforrobustinteractivesegmentation-181119055545/75/Seed-net-automatic-seed-generation-with-deep-reinforcement-learning-for-robust-interactive-segmentation-43-2048.jpg)
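Swapping the segmentation module, as in this ablation, suggests a loop in which the segmenter is a pluggable function. The sketch below is a hypothetical outline: `run_episode`, `segment`, and `choose_seed` are illustrative names, the 10-seed limit is taken from the experiment setup, and `nearest_seed_segment` is a toy stand-in, not GC, GSC, RWR, or Random Walk.

```python
def run_episode(image, fg_seeds, bg_seeds, segment, choose_seed, max_new_seeds=10):
    """Add seeds one at a time, re-running the segmenter after each new seed.

    `segment(image, fg, bg)` returns a binary mask.
    `choose_seed(image, mask, fg, bg)` returns ((y, x), is_foreground).
    """
    fg, bg = list(fg_seeds), list(bg_seeds)
    mask = segment(image, fg, bg)
    for _ in range(max_new_seeds):
        point, is_foreground = choose_seed(image, mask, fg, bg)
        (fg if is_foreground else bg).append(point)
        mask = segment(image, fg, bg)
    return mask, fg, bg


def nearest_seed_segment(image, fg, bg):
    """Toy stand-in segmenter: label each pixel by its nearest seed."""
    height, width = len(image), len(image[0])

    def sq_dist(points, y, x):
        return min((y - py) ** 2 + (x - px) ** 2 for py, px in points)

    return [
        [1 if sq_dist(fg, y, x) <= sq_dist(bg, y, x) else 0 for x in range(width)]
        for y in range(height)
    ]
```

Because the loop only depends on the `segment` signature, any of the classical methods could be dropped in without changing the seed generation logic.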