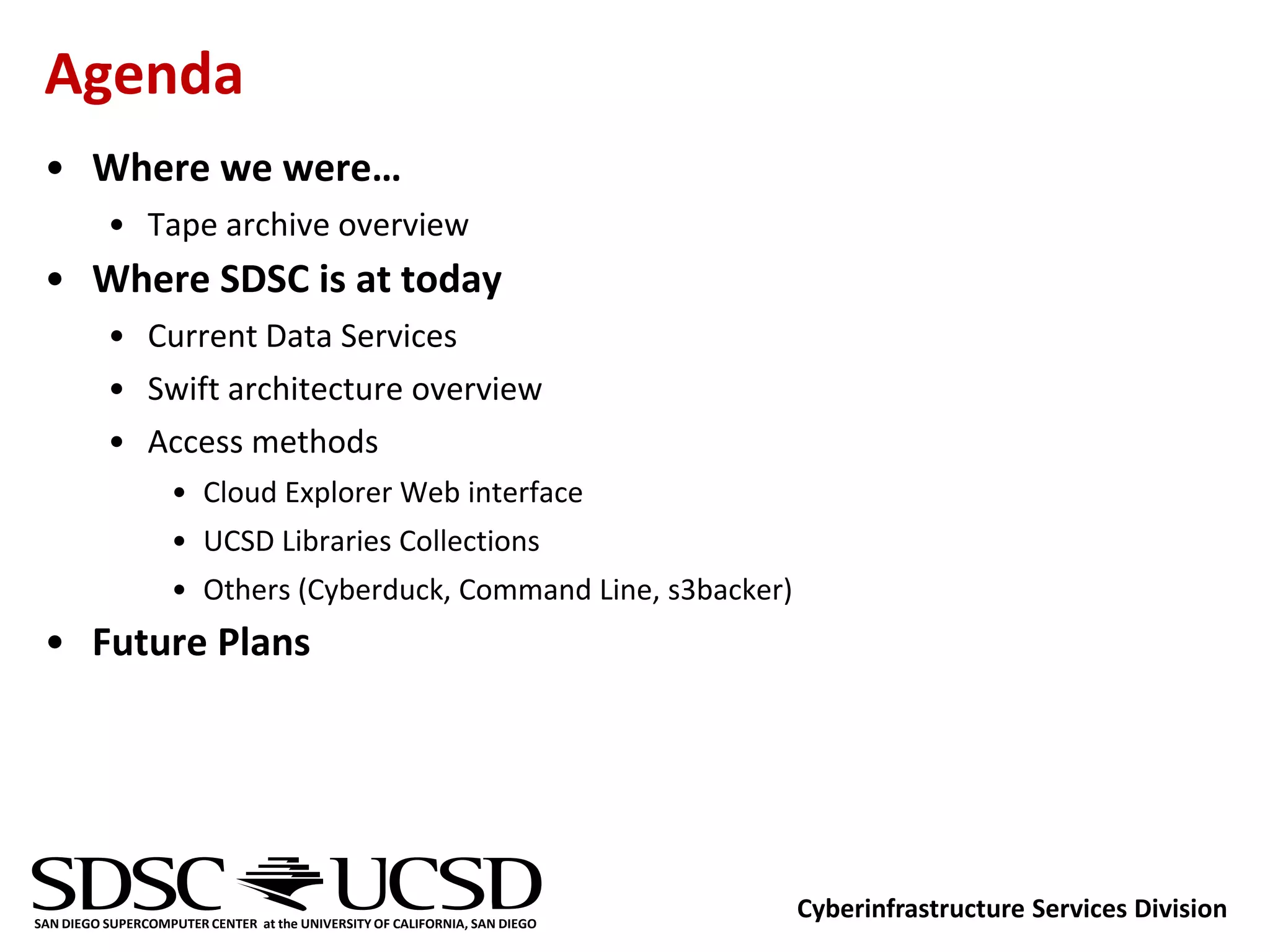

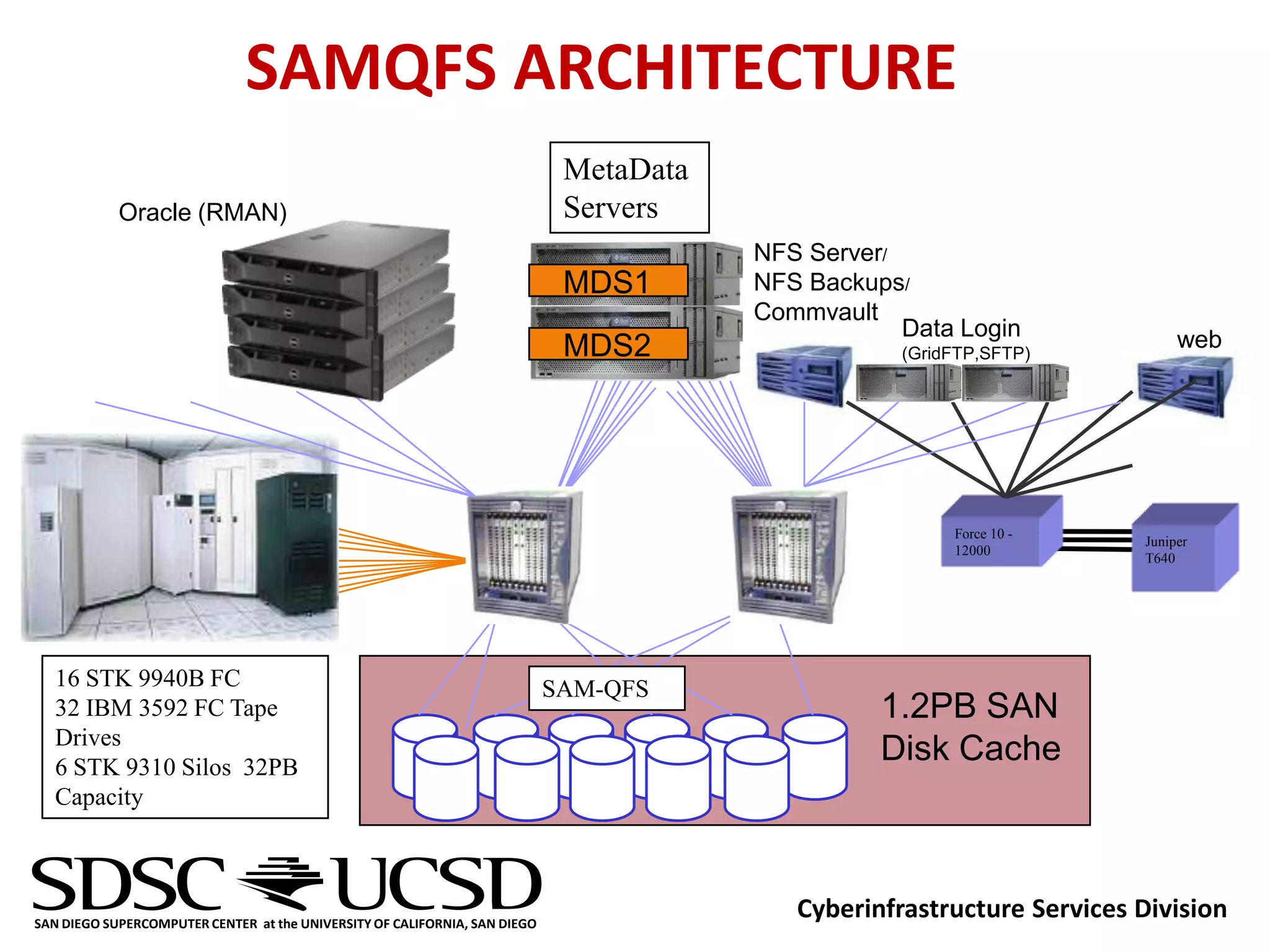

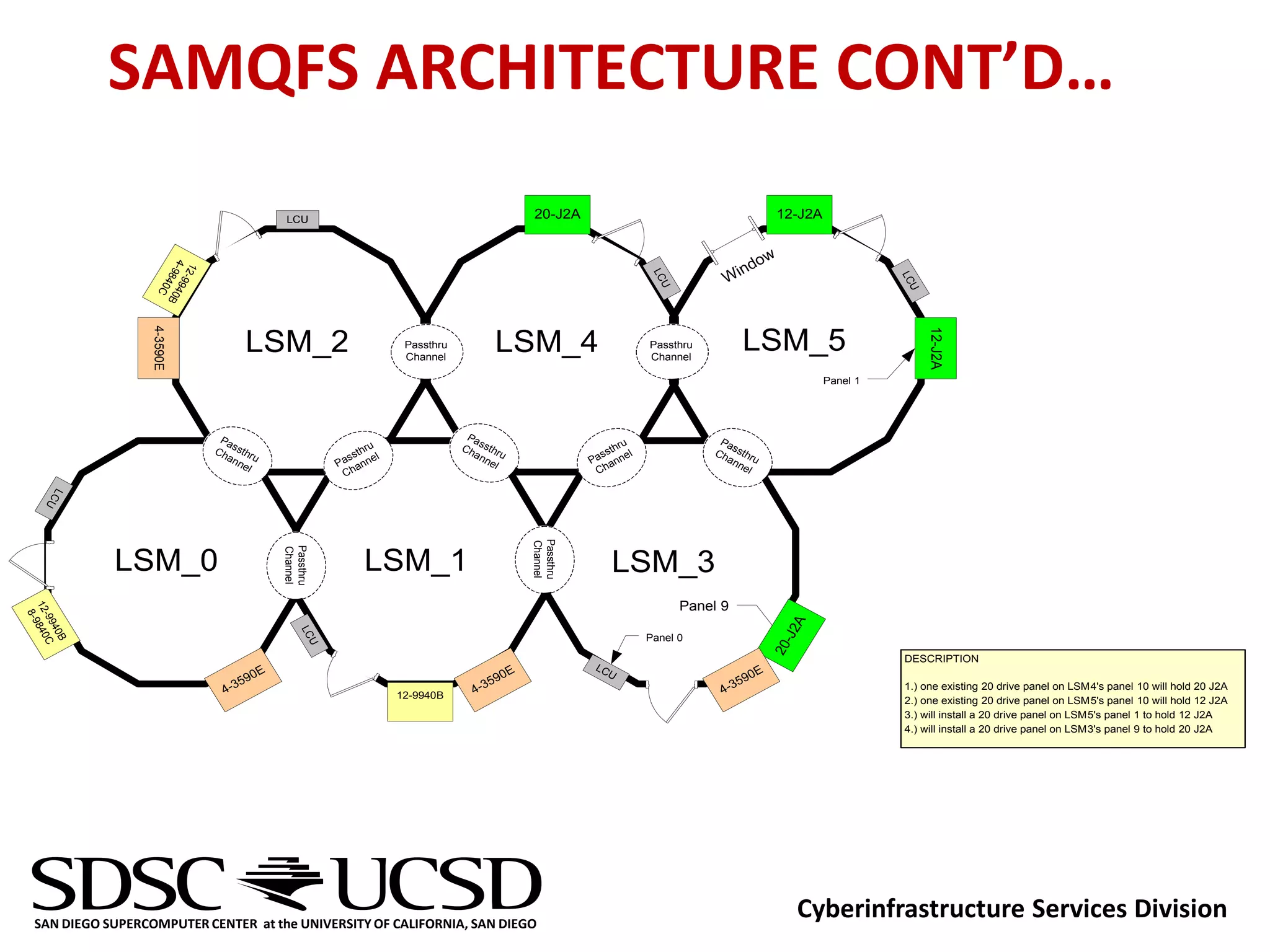

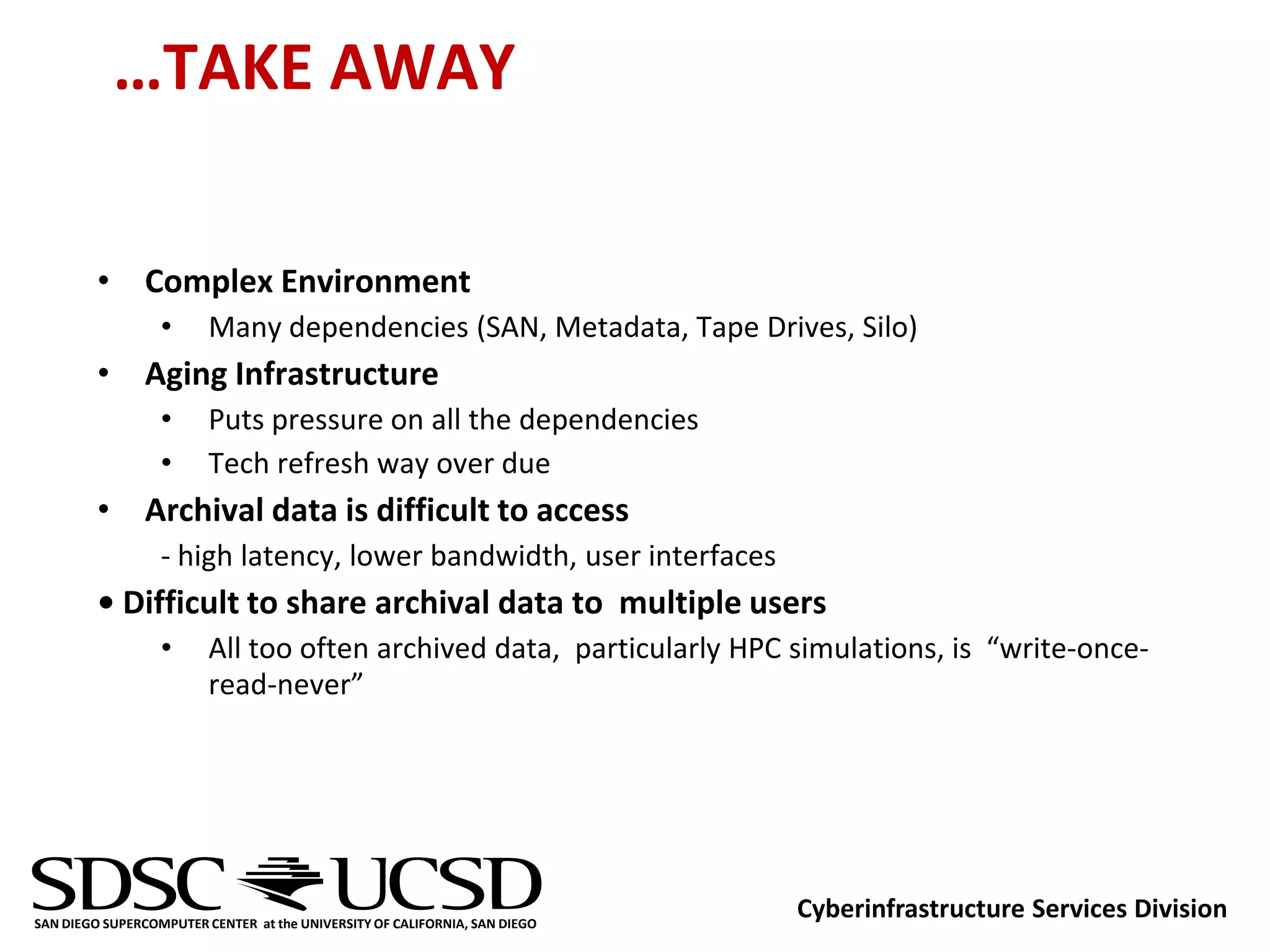

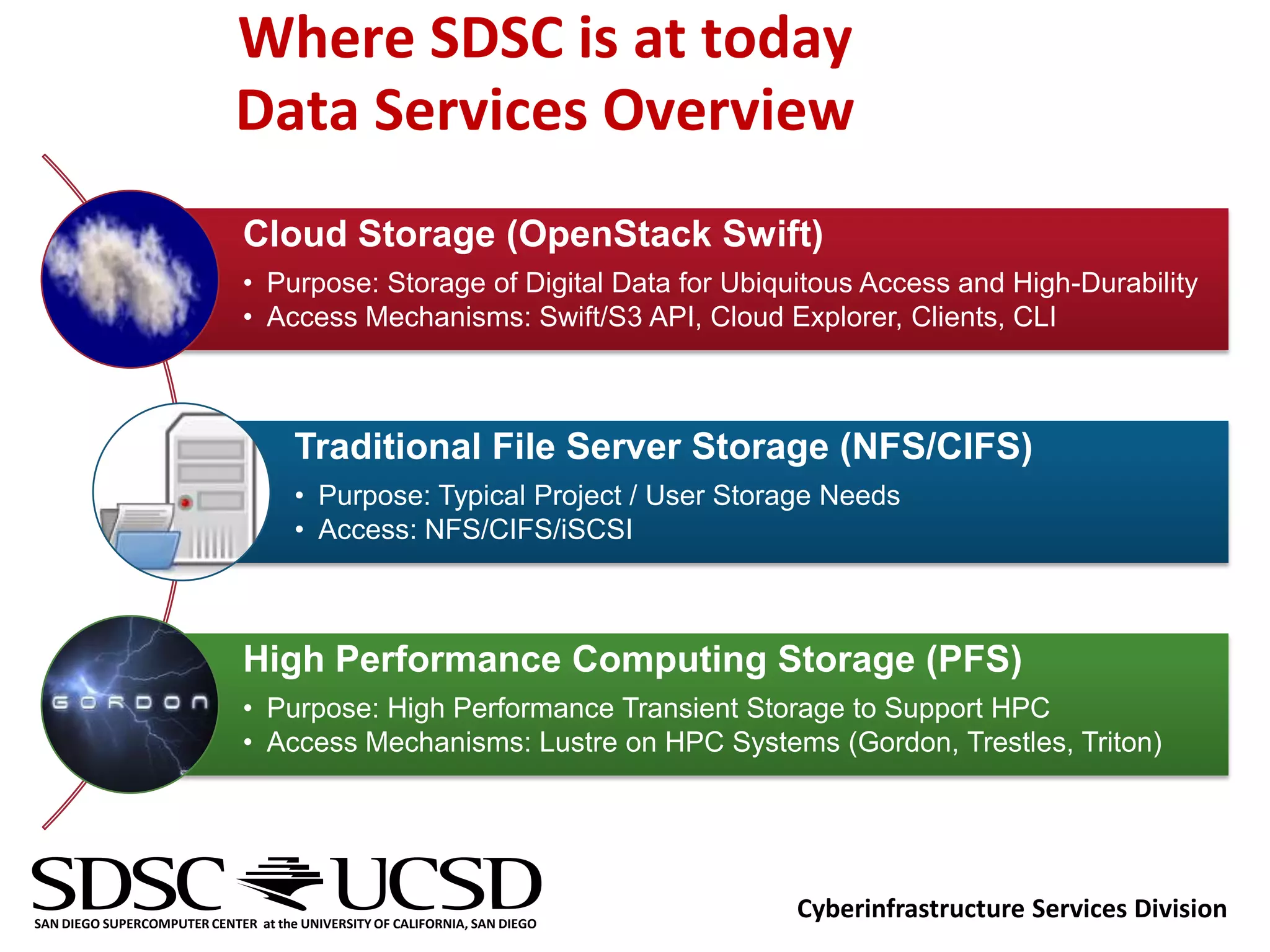

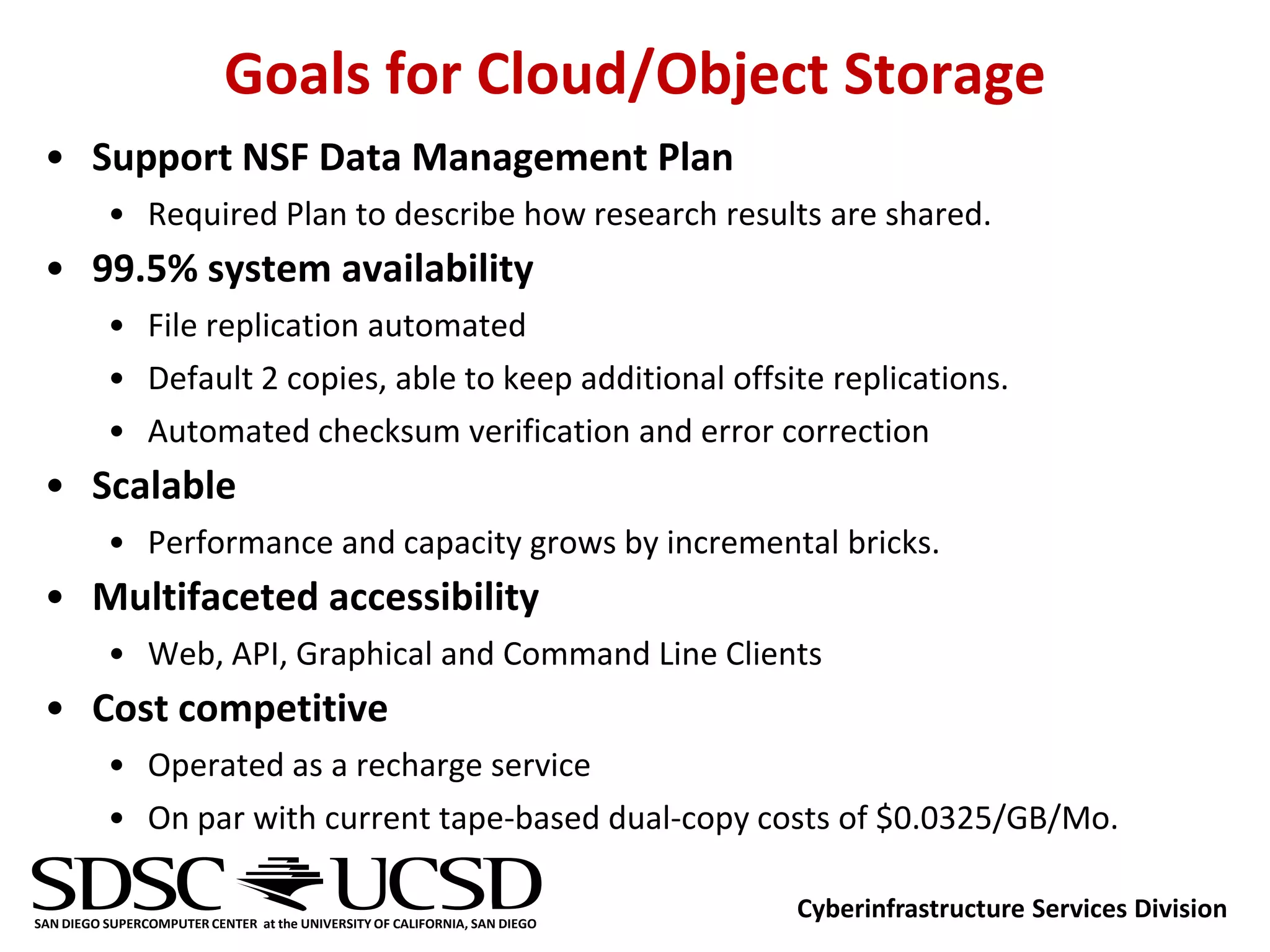

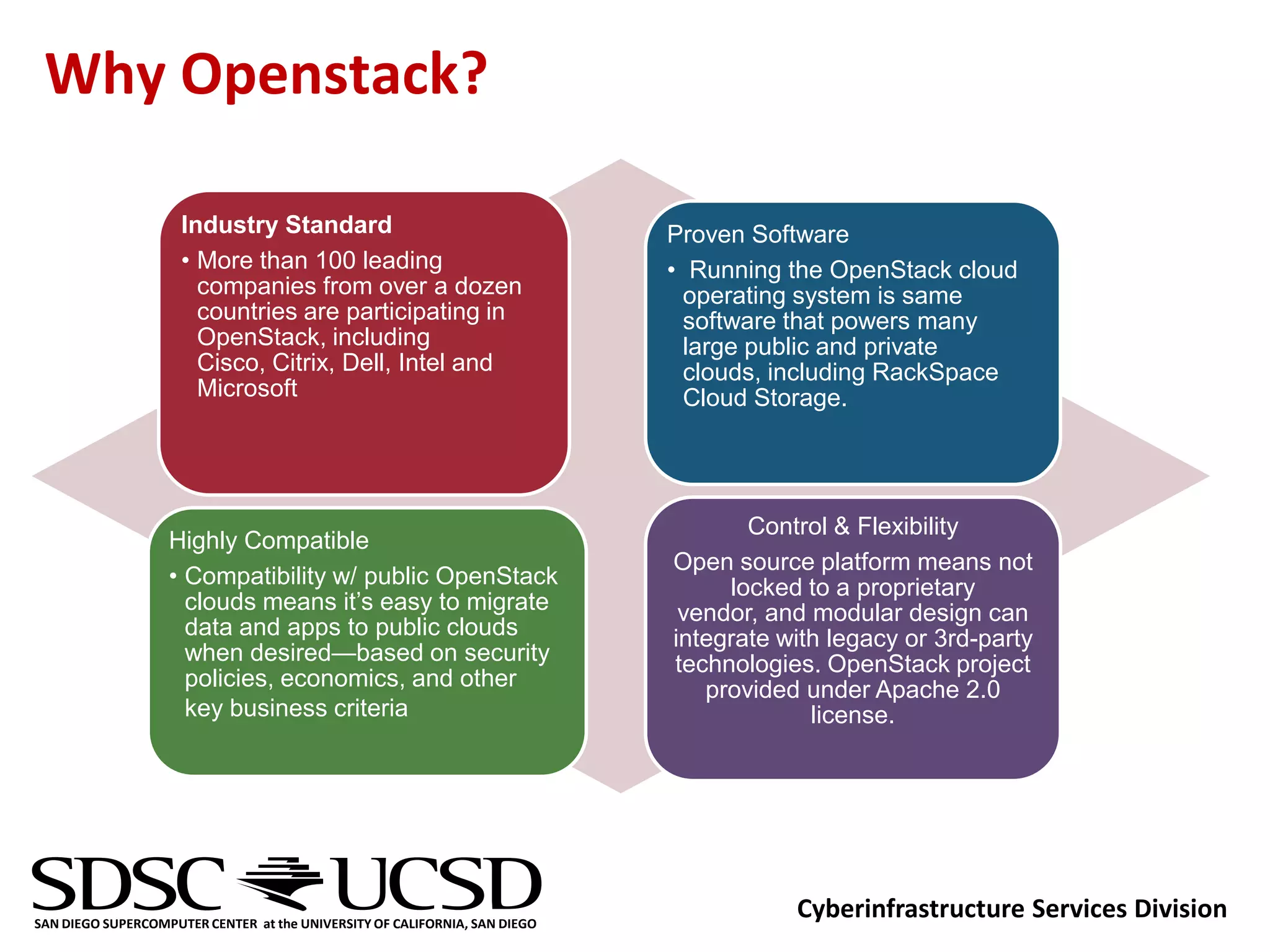

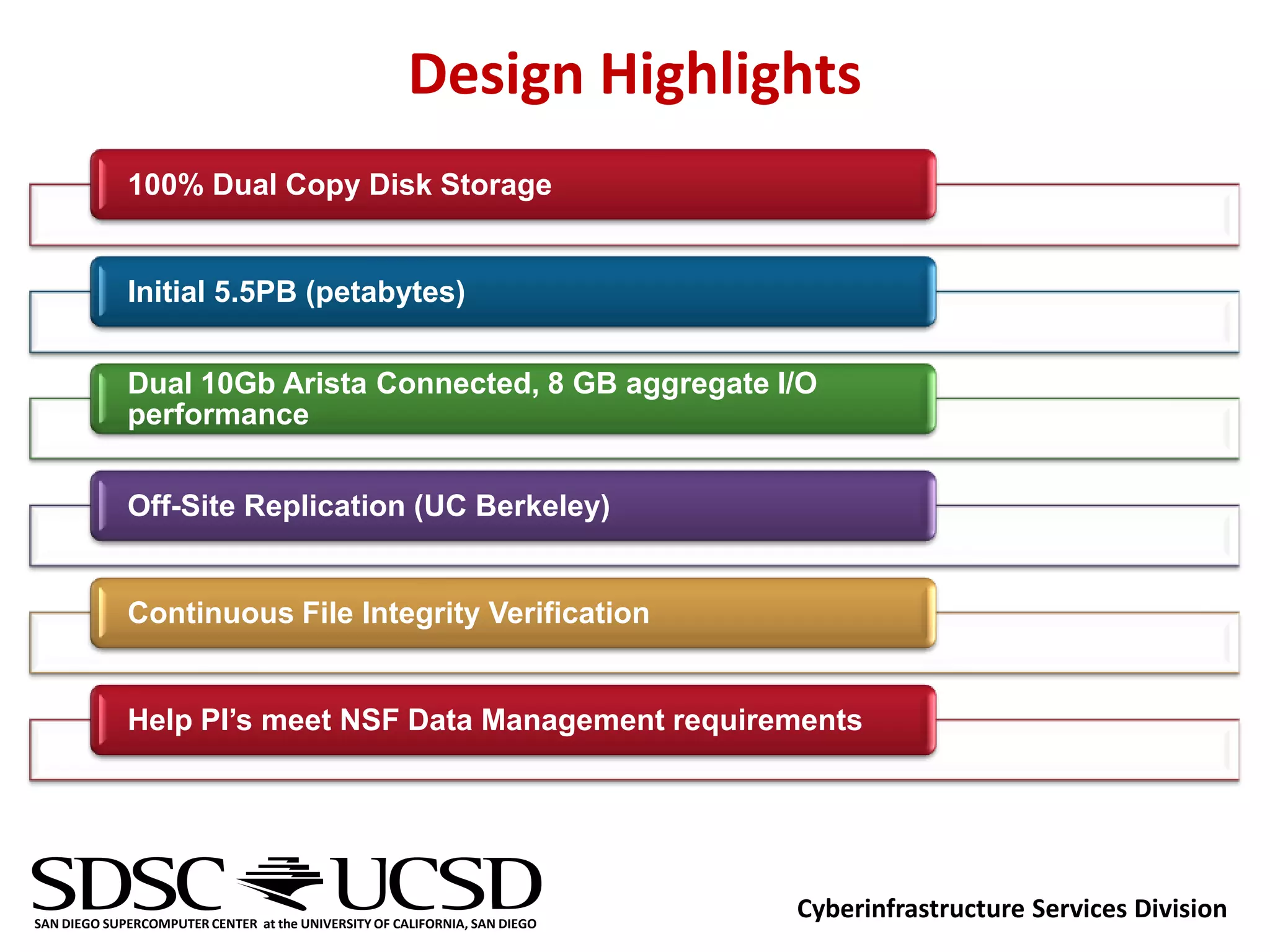

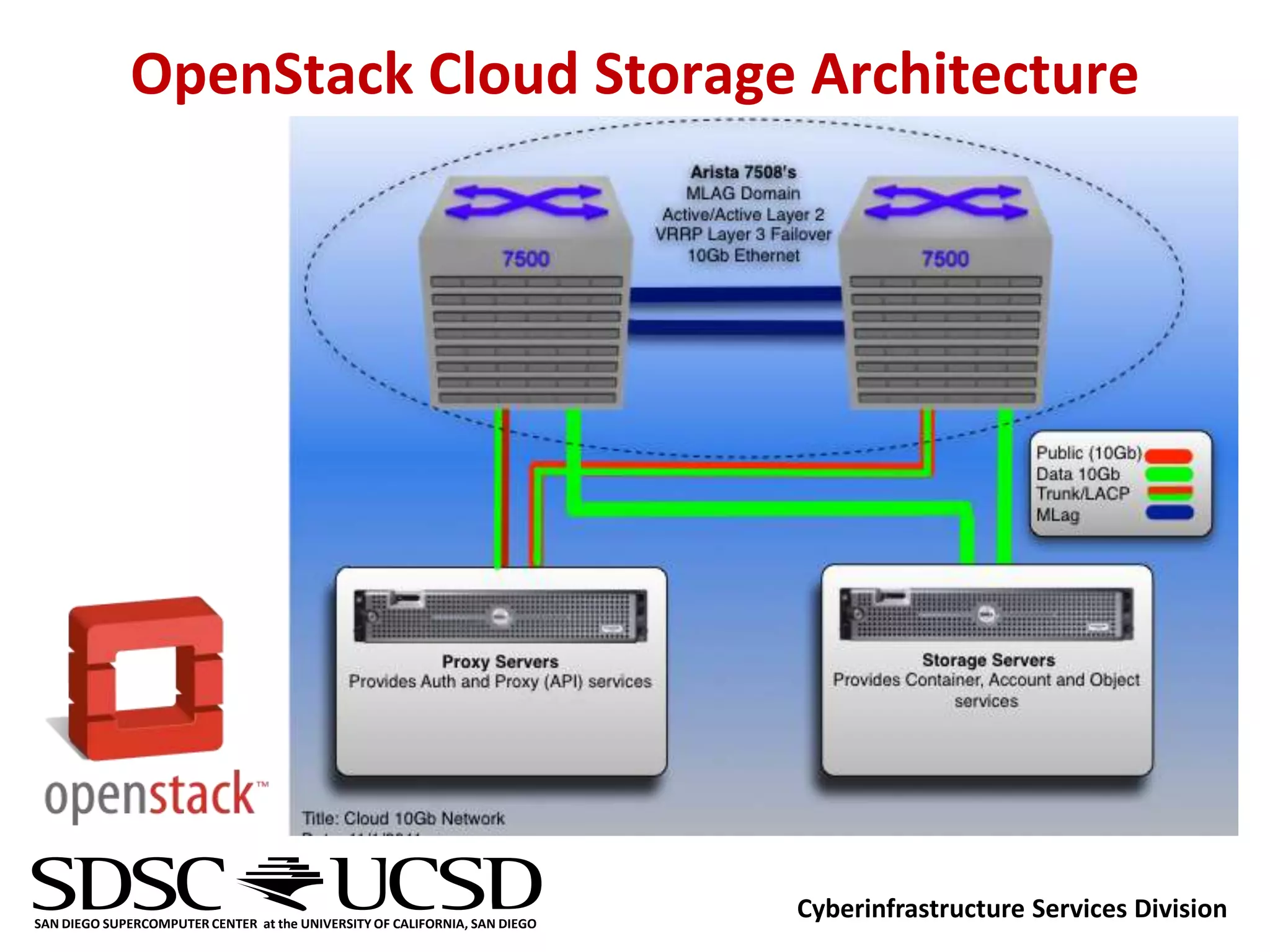

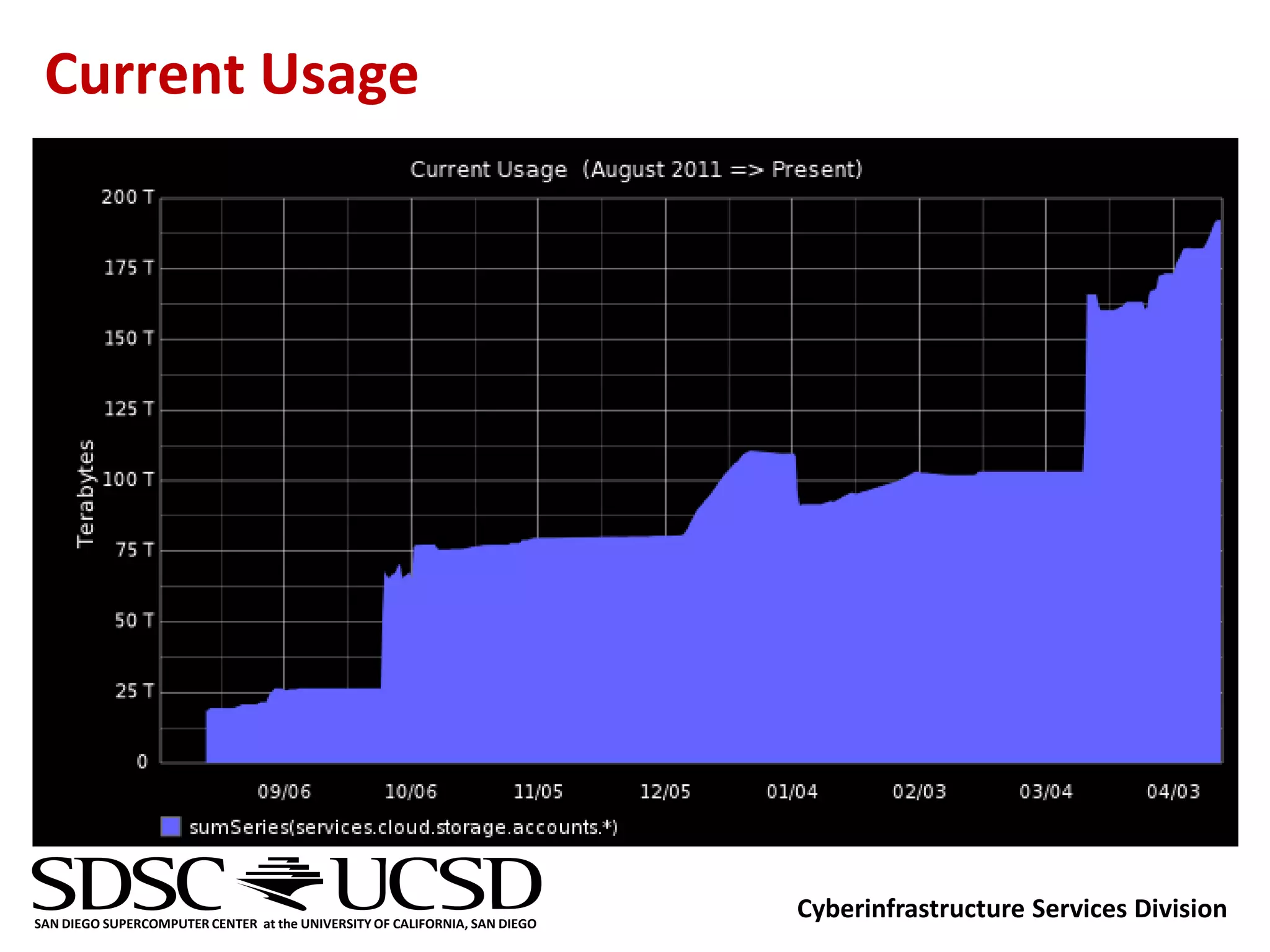

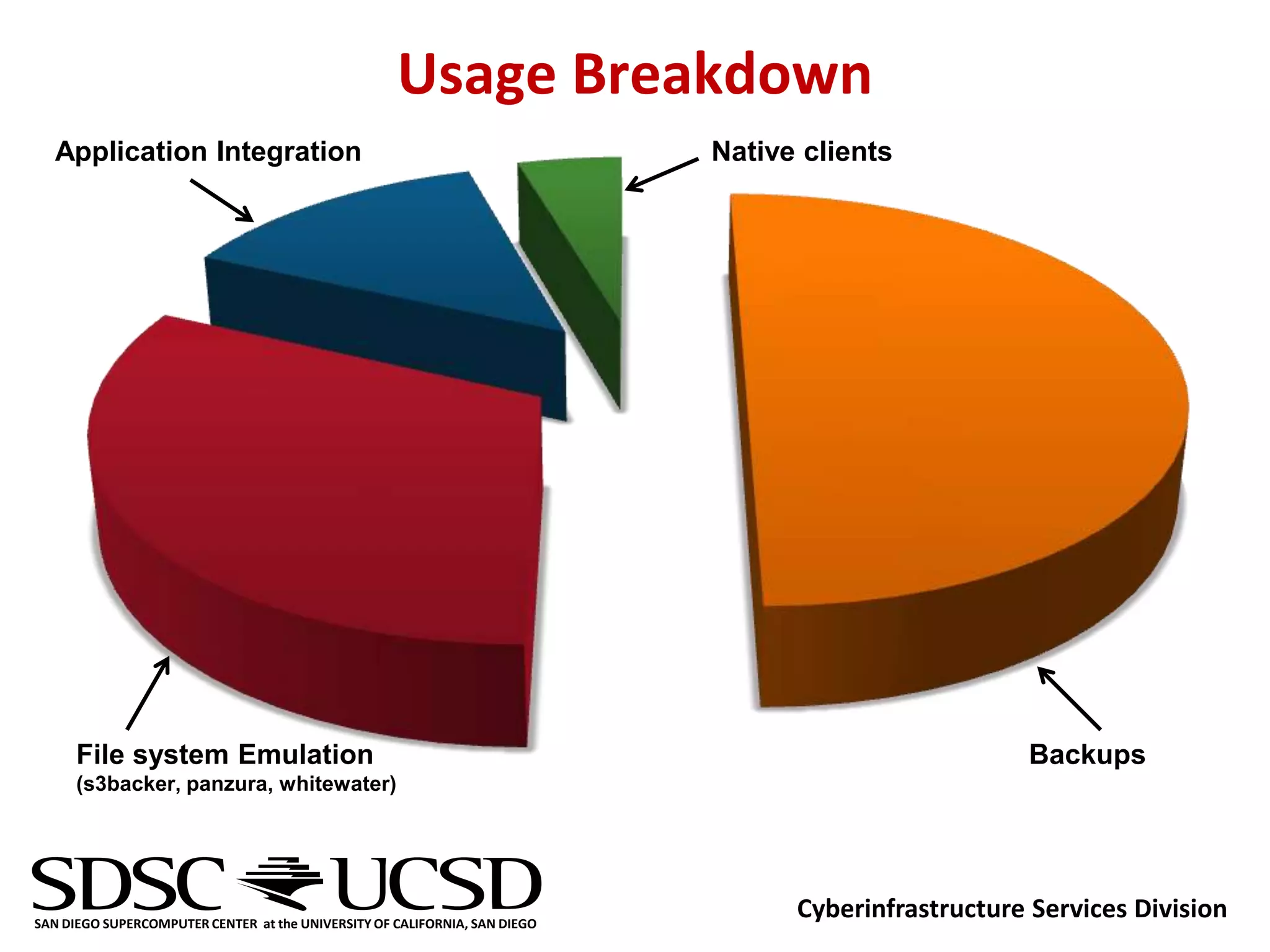

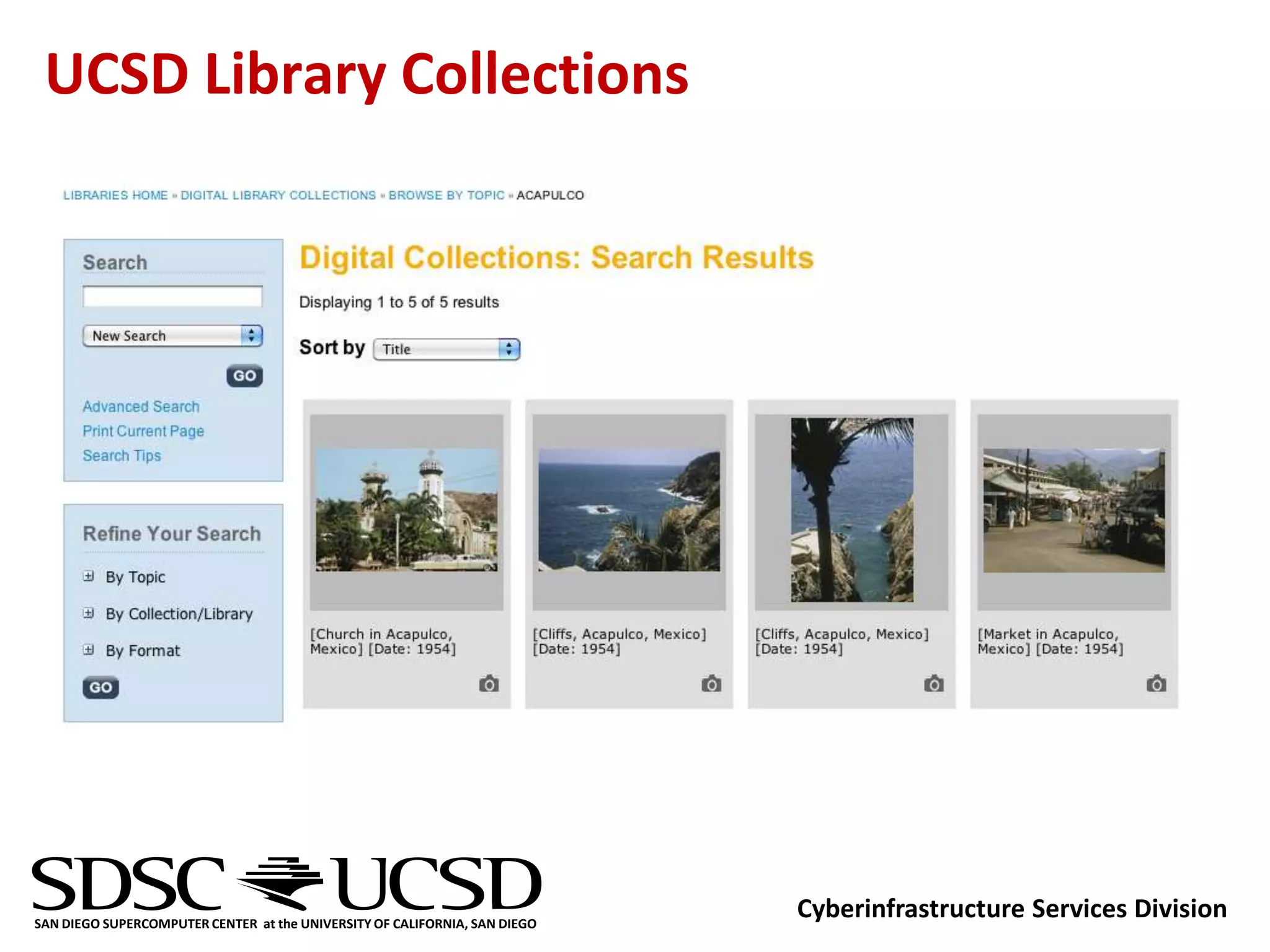

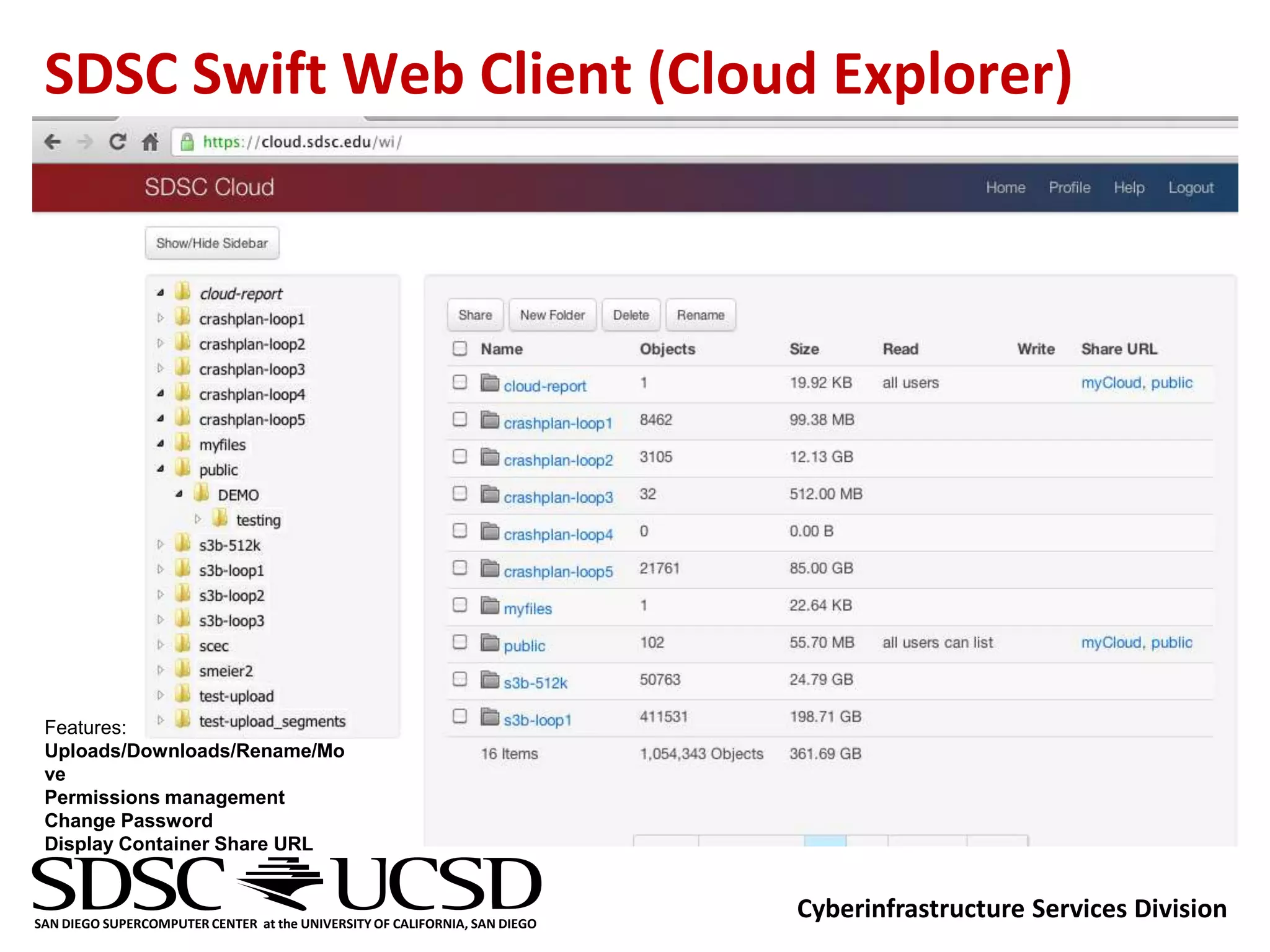

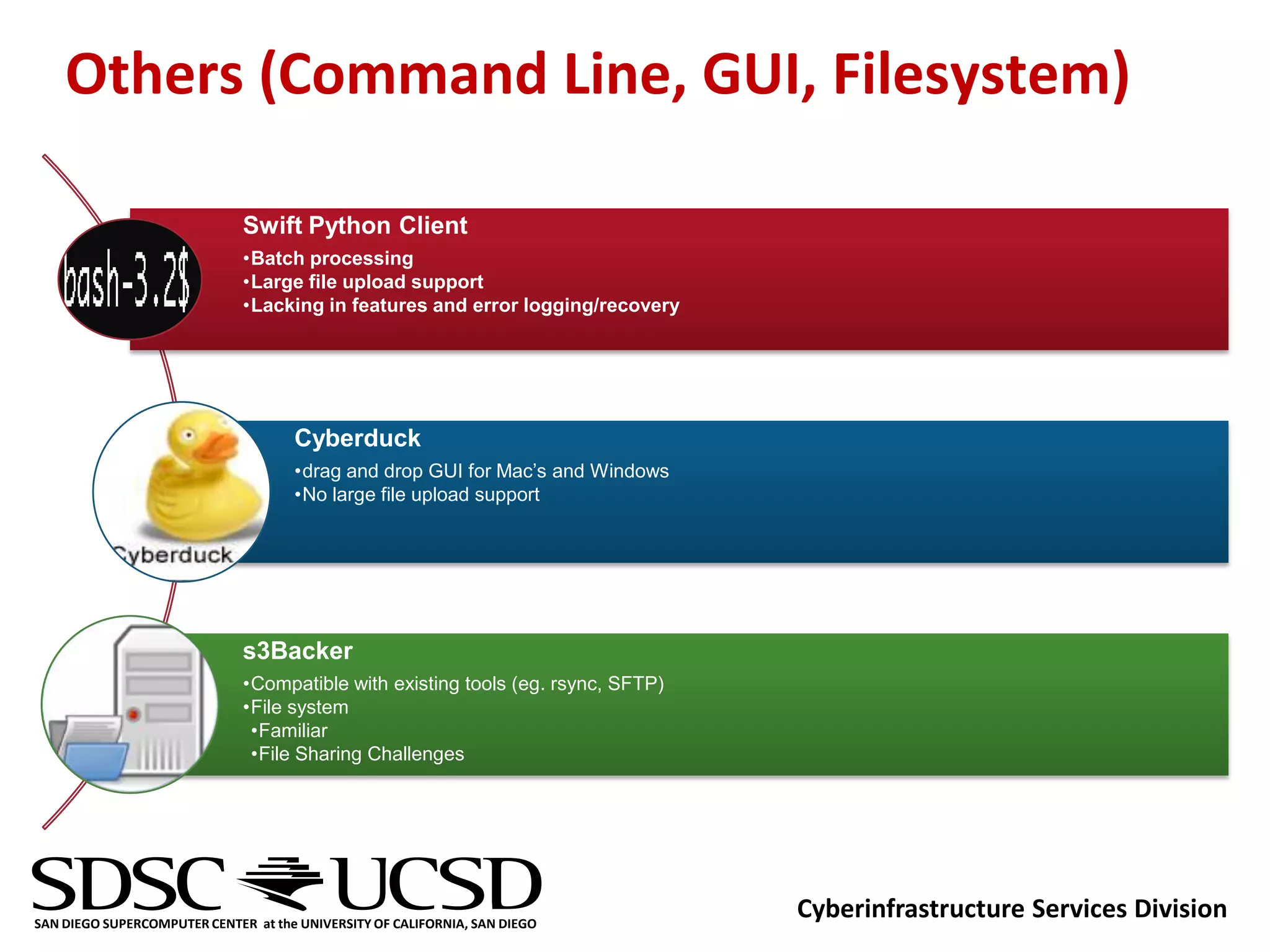

The document describes the San Diego Supercomputer Center's (SDSC) transition from tape storage to cloud storage. It gives an overview of SDSC's former tape archive system and of its current OpenStack Swift cloud storage system. Swift provides scalable, durable storage with 99.5% availability and supports access through web interfaces, command-line tools, and library collections. Future plans include integrating Active Directory authentication and improving support for uploading large files.
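Large-file uploads to OpenStack Swift are usually handled by splitting a file into segments and tying them together with a manifest, because a single Swift object has a default size limit of about 5 GiB. The slides do not say which mechanism SDSC uses or plans to use. The following is only a minimal sketch, assuming Swift's Static Large Object (SLO) approach. It plans the segments and builds the JSON manifest body in memory, without any network calls. The function name `build_slo_manifest`, the container name, and the zero-padded segment naming scheme are hypothetical and chosen for illustration.

```python
import hashlib
import json


def build_slo_manifest(data: bytes, container: str, name: str, segment_size: int):
    """Split data into fixed-size segments and build an SLO-style manifest.

    Hypothetical helper for illustration only. Returns (segments, manifest_json):
      segments      -- list of (segment_path, chunk) pairs; each chunk would be
                       uploaded as its own Swift object at that path.
      manifest_json -- JSON body for the manifest object, which a client would
                       PUT with the query string ?multipart-manifest=put.
    """
    if segment_size <= 0:
        raise ValueError("segment_size must be positive")
    if not data:
        # A large-object manifest needs at least one segment.
        raise ValueError("data must not be empty")

    segments = []
    manifest = []
    for index, start in enumerate(range(0, len(data), segment_size)):
        chunk = data[start:start + segment_size]
        # Assumed naming scheme: zero-padded index so segments sort in order.
        path = f"/{container}/{name}/{index:08d}"
        segments.append((path, chunk))
        # Each manifest entry records where the segment lives, its MD5 etag,
        # and its size, so the cluster can verify the uploaded segments.
        manifest.append({
            "path": path,
            "etag": hashlib.md5(chunk).hexdigest(),
            "size_bytes": len(chunk),
        })
    return segments, json.dumps(manifest)


if __name__ == "__main__":
    # Tiny segment size purely for demonstration; real segments are far larger.
    segs, body = build_slo_manifest(b"hello world", "archive_segments", "big.dat", 4)
    for path, chunk in segs:
        print(path, chunk)
    print(body)
```

Computing each segment's etag on the client lets the manifest be checked against what was actually stored. Because every segment is uploaded independently, this design also allows a failed segment to be retried or several segments to be sent in parallel.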