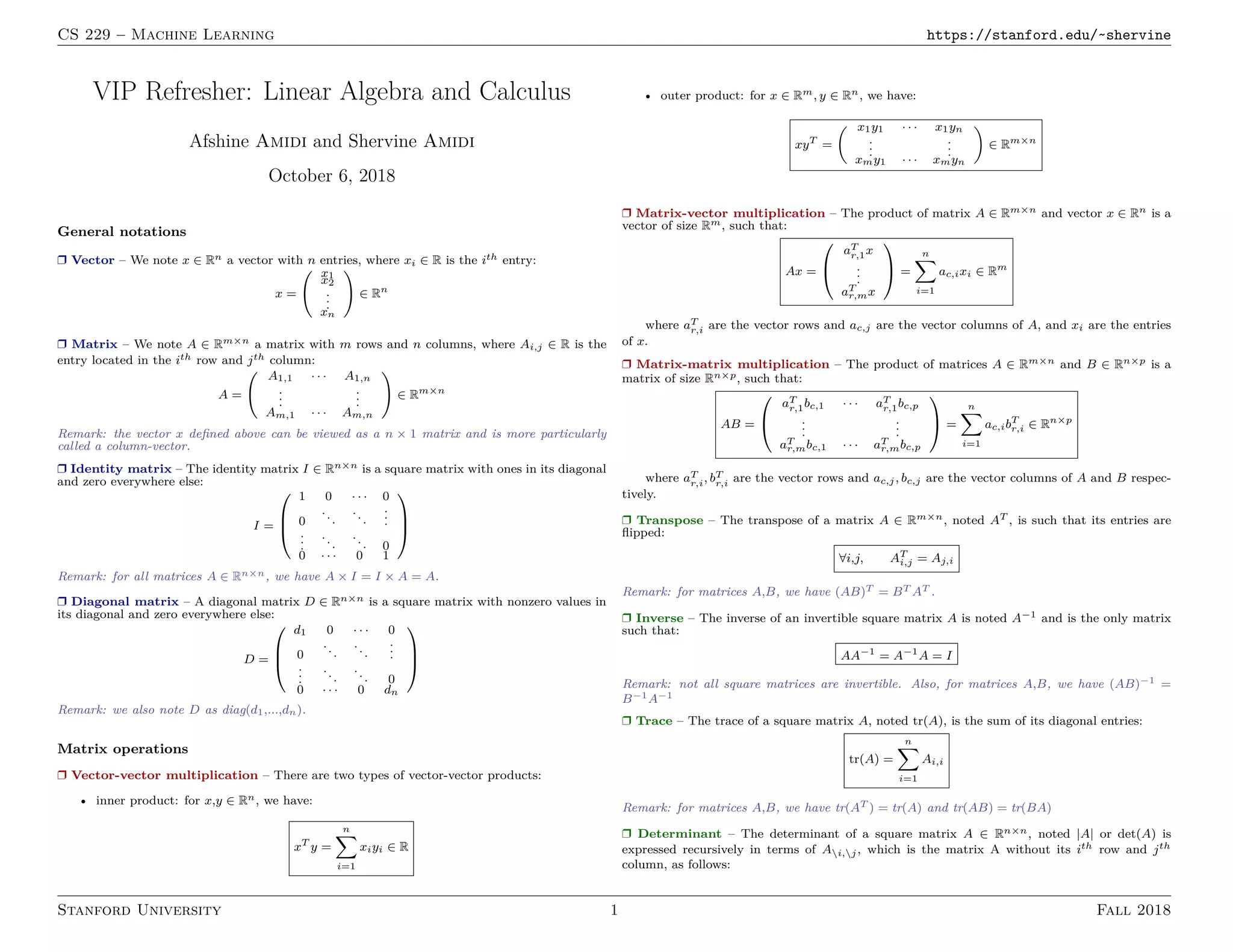

This document provides an overview of key linear algebra and calculus concepts for machine learning, including:

1) Notations for vectors, matrices, and operations like matrix multiplication and transposition.

2) Common matrix properties such as symmetry, positive semi-definiteness, eigenvalues, and singular value decomposition.

3) Derivatives used in calculus on matrices, including the gradient and Hessian of functions with respect to vectors and matrices.