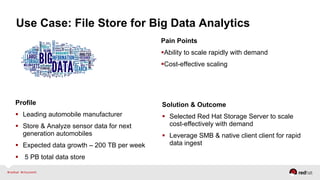

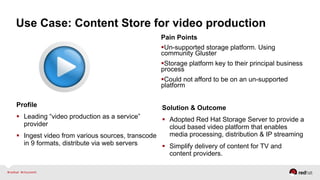

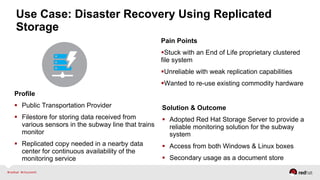

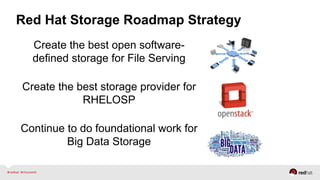

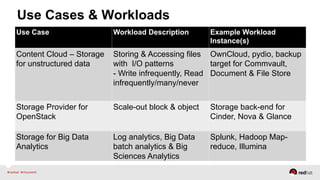

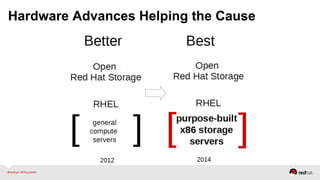

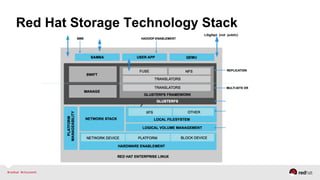

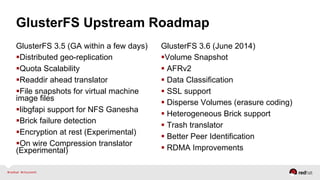

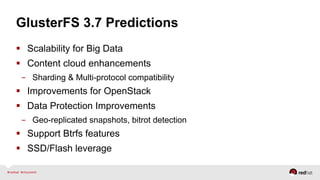

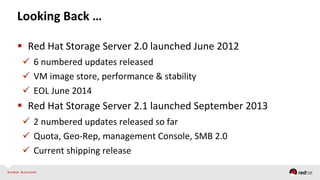

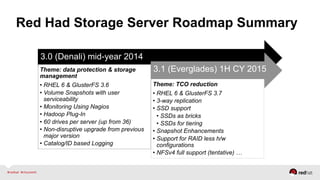

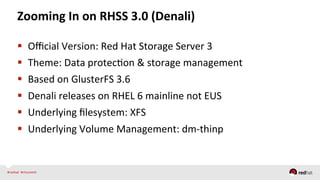

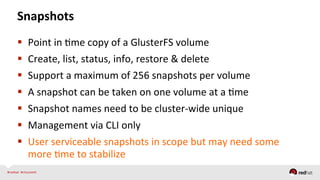

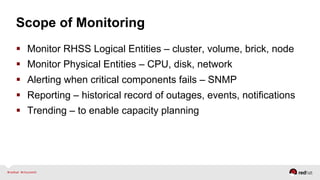

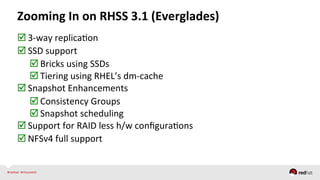

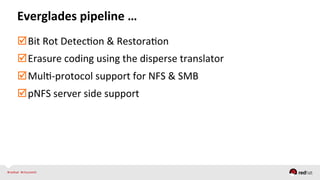

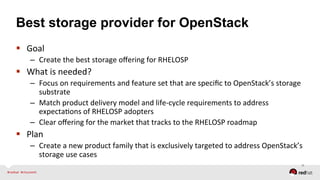

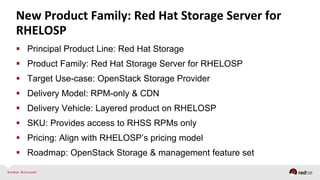

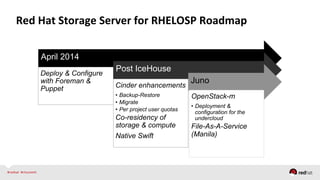

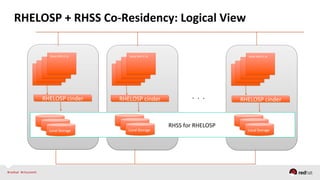

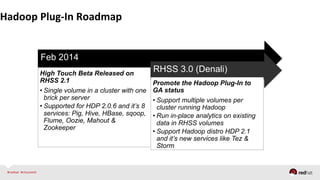

The document covers the Red Hat Storage Server roadmap and its integration with OpenStack, highlighting use cases such as big data analytics, video content production, and disaster recovery. It describes customer pain points and how Red Hat addressed them, including deployments of Red Hat Storage Server for different applications. The roadmap outlines planned developments in data protection, storage management, and compatibility with OpenStack and big data workloads.