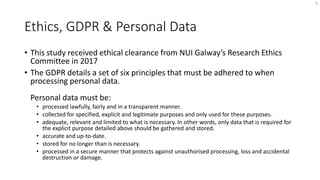

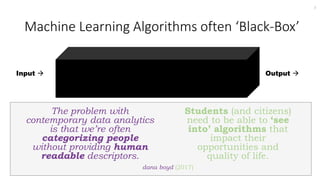

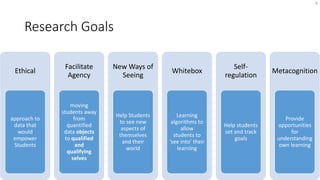

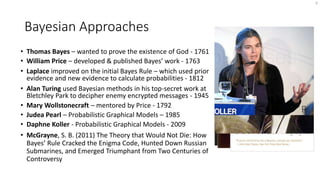

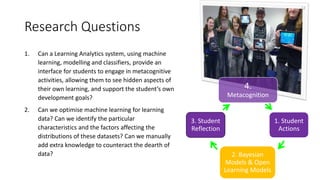

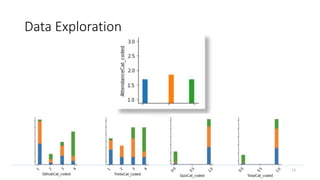

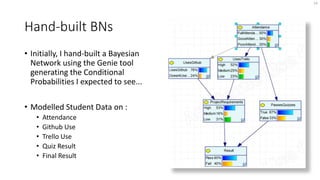

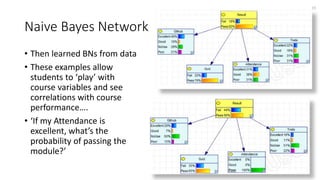

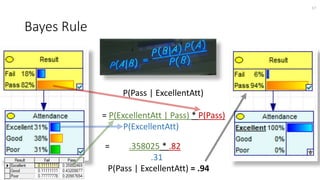

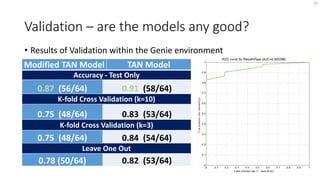

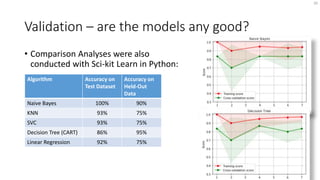

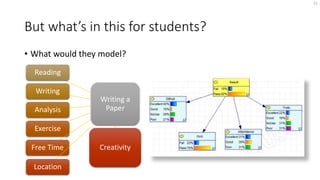

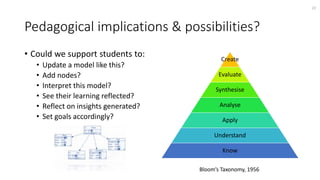

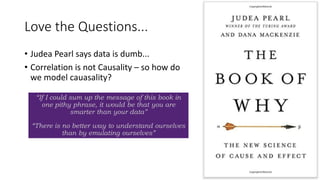

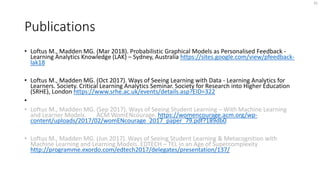

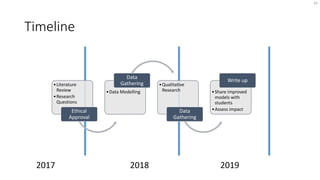

This document summarizes Mary Loftus' PhD research, which aims to use probabilistic graphical models and machine learning to give students personalized feedback and to support their metacognition. The research goals are to empower students, increase their agency over their own data, help them see new aspects of themselves and their learning, and make learning algorithms more transparent. The document discusses using Bayesian networks to model the relationships between student data and academic performance. Initial models were built both by hand and from data, and were validated using various methods. The research questions ask whether these techniques can support student metacognition and goal-setting. Potential pedagogical implications are also discussed, such as having students build their own models or reflect on the insights the models reveal. Overall, the research aims to bring quantitative
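The summary does not specify the network structure, variables, or tools used in the research. As a minimal sketch of the general idea only, assuming three hypothetical binary variables (`engaged`, `labs_done`, `passed`) with made-up illustrative probabilities, a small Bayesian network can be specified by hand, queried by brute-force enumeration, or have its conditional probability tables (CPTs) estimated from student records by counting. The names `PARENTS`, `CPT`, `joint_probability`, `query`, and `fit_cpts` are hypothetical helpers written for this illustration.

```python
from itertools import product

# Hypothetical binary variables (illustrative only, not from the research):
#   engaged:   student engages regularly with course material
#   labs_done: student completes most lab assignments
#   passed:    student passes the end-of-module exam
# Parent sets define the DAG: engaged -> labs_done; (engaged, labs_done) -> passed.
PARENTS = {
    "engaged": (),
    "labs_done": ("engaged",),
    "passed": ("engaged", "labs_done"),
}
VARIABLES = list(PARENTS)  # listed in topological order

# Hand-built CPTs with made-up numbers: each maps a tuple of parent values
# to P(variable = True | parents).
CPT = {
    "engaged": {(): 0.6},
    "labs_done": {(True,): 0.8, (False,): 0.3},
    "passed": {
        (True, True): 0.9,
        (True, False): 0.6,
        (False, True): 0.7,
        (False, False): 0.2,
    },
}


def joint_probability(assignment, cpts=CPT):
    """Joint probability of a full assignment: product of each variable's CPT entry."""
    prob = 1.0
    for var in VARIABLES:
        parent_values = tuple(assignment[p] for p in PARENTS[var])
        p_true = cpts[var][parent_values]
        prob *= p_true if assignment[var] else 1.0 - p_true
    return prob


def query(target, evidence, cpts=CPT):
    """P(target = True | evidence), summing the joint over all unobserved variables."""
    hidden = [v for v in VARIABLES if v not in evidence]
    numerator = denominator = 0.0
    for values in product([True, False], repeat=len(hidden)):
        assignment = {**evidence, **dict(zip(hidden, values))}
        p = joint_probability(assignment, cpts)
        denominator += p
        if assignment[target]:
            numerator += p
    return numerator / denominator


def fit_cpts(records, alpha=1.0):
    """Estimate CPTs from records (dicts of bools) by counting, with Laplace smoothing alpha."""
    cpts = {}
    for var in VARIABLES:
        table = {}
        for parent_values in product([True, False], repeat=len(PARENTS[var])):
            matching = [r for r in records
                        if tuple(r[p] for p in PARENTS[var]) == parent_values]
            n_true = sum(1 for r in matching if r[var])
            table[parent_values] = (n_true + alpha) / (len(matching) + 2 * alpha)
        cpts[var] = table
    return cpts


if __name__ == "__main__":
    print(f"P(passed | labs_done)     = {query('passed', {'labs_done': True}):.2f}")   # 0.86
    print(f"P(passed | not labs_done) = {query('passed', {'labs_done': False}):.2f}")  # 0.32
```

With these illustrative numbers, observing that a student completed the labs raises the estimated chance of passing from 0.32 to 0.86. The same `query` function also runs "diagnostic" questions in the other direction, such as `query("engaged", {"passed": True})`. Smoothing in `fit_cpts` keeps estimates defined for parent combinations that never appear in a small dataset.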