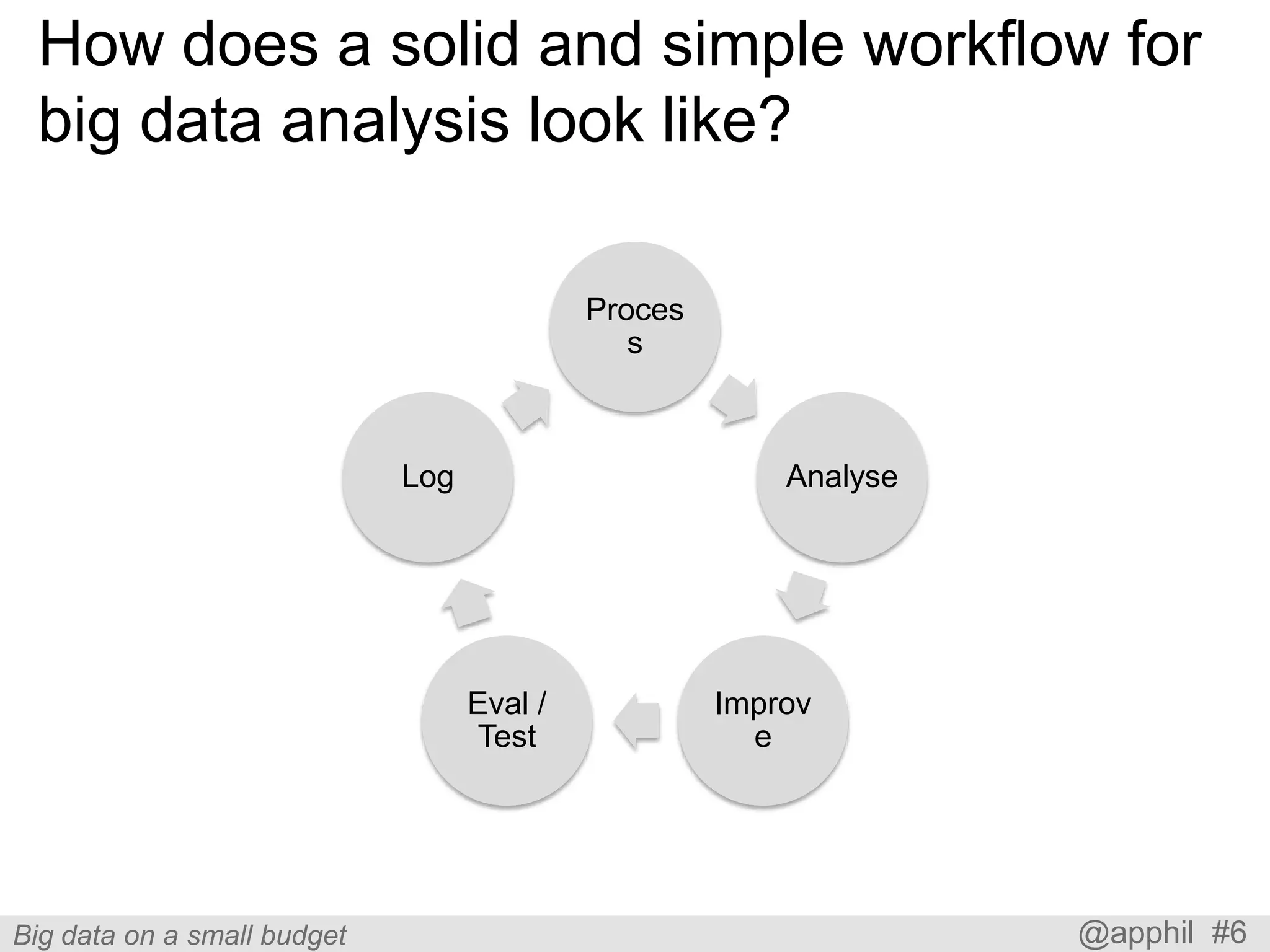

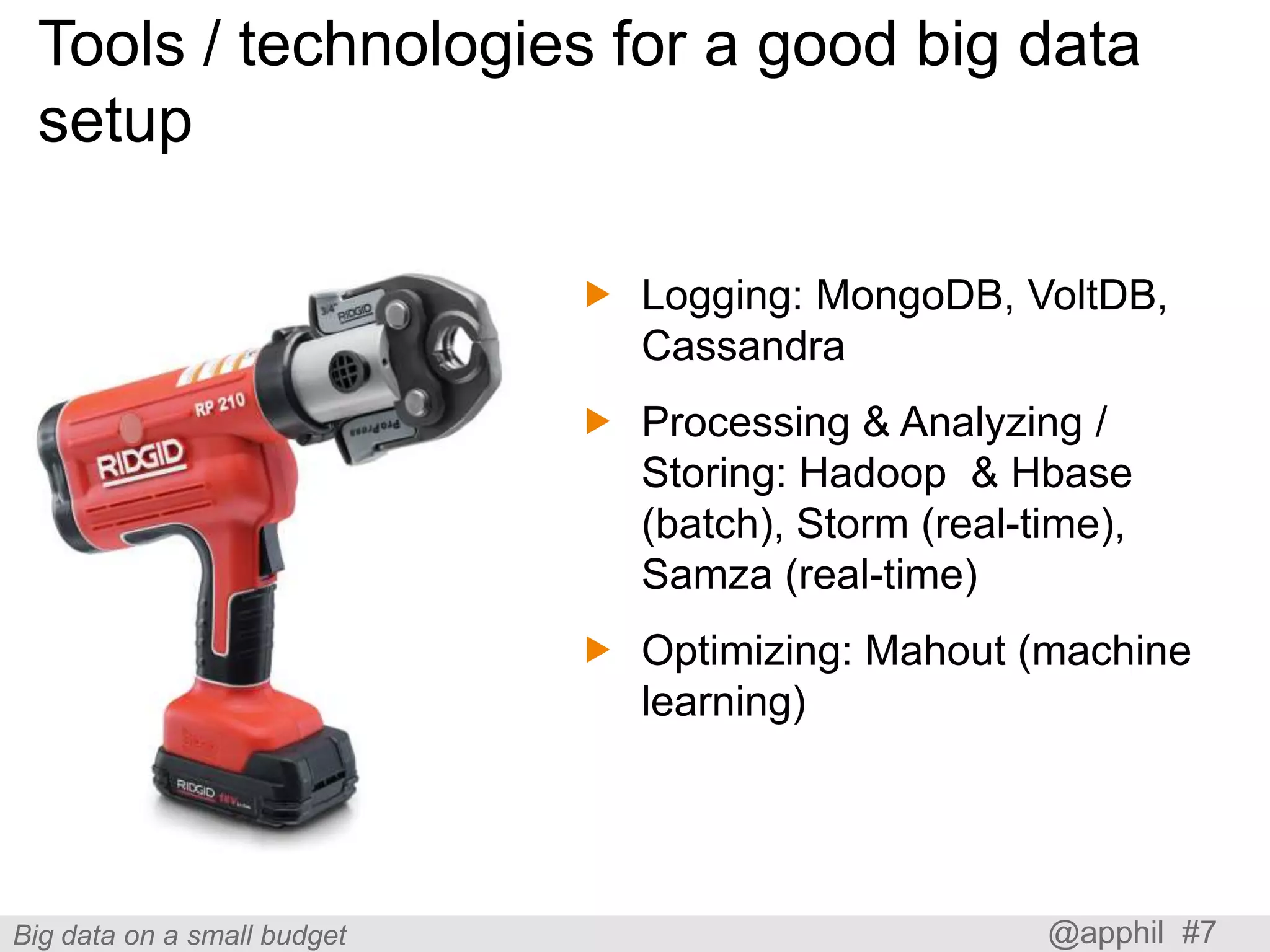

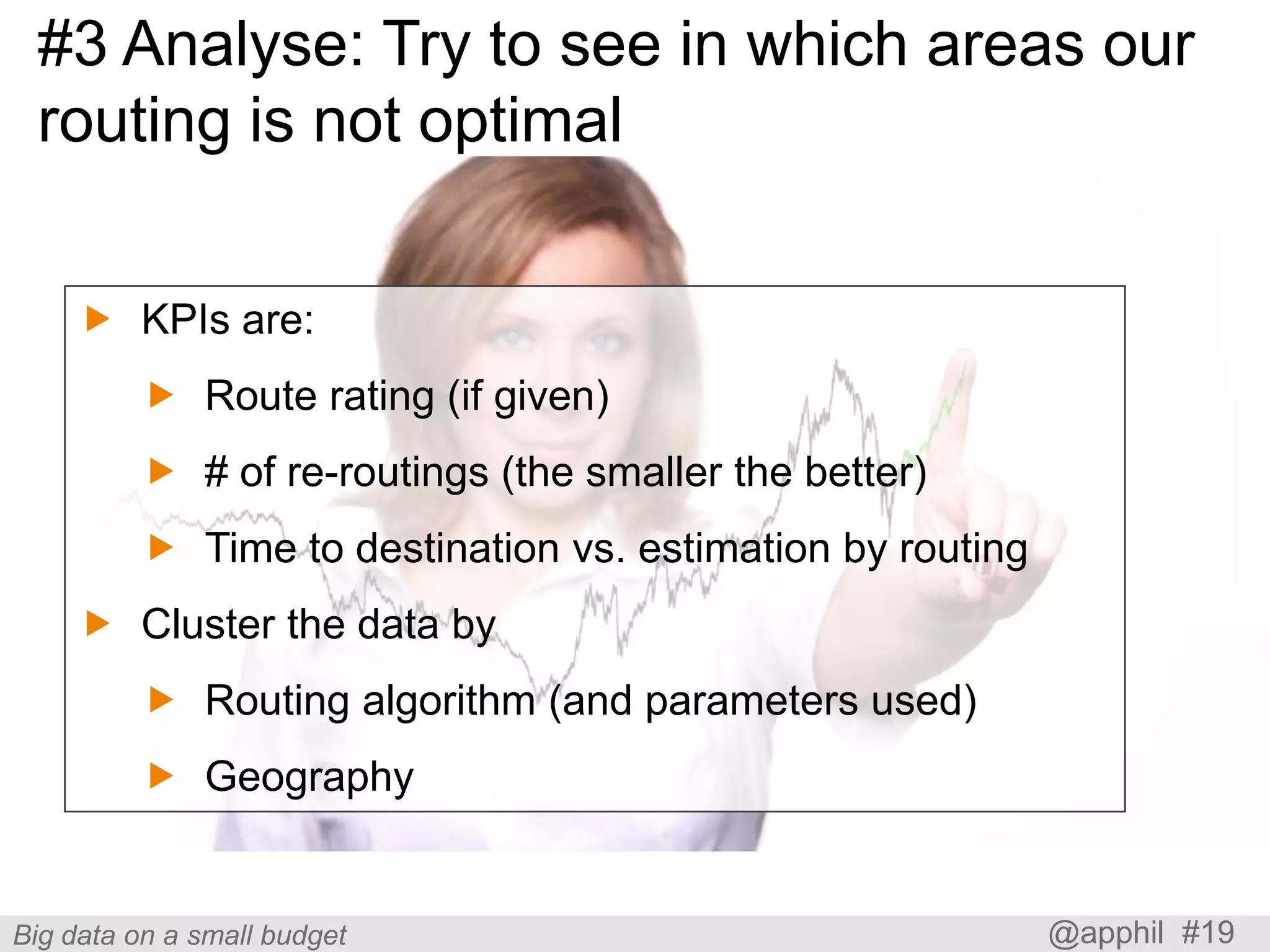

The document discusses how to leverage big data on a budget, highlighting its value for improving business intelligence and user experience through analysis and optimization techniques. It outlines workflows for data logging, processing, analysis, and evaluation, and emphasizes cost-effective tools and technologies. Key strategies include recognizing when not to rely on big data and using real-time or batch processing as project needs dictate.