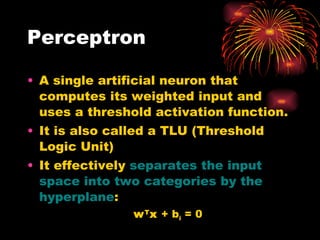

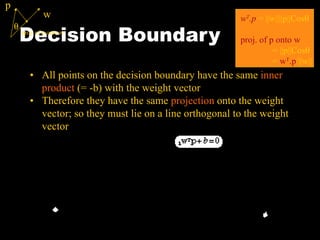

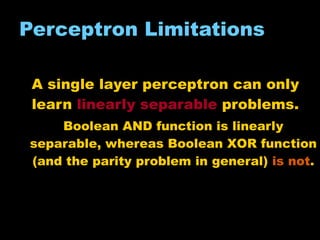

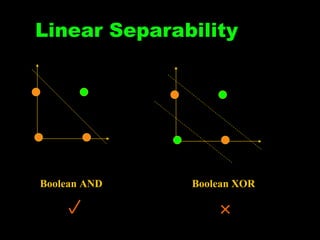

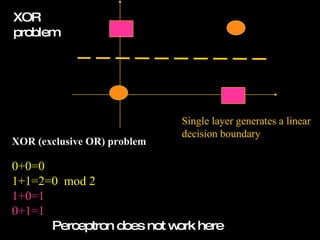

1. A perceptron is a basic artificial neural network that can learn linearly separable patterns. It computes a weighted sum of its inputs, applies an activation function (classically a threshold), and outputs a single binary value.
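As a rough illustration of the computation described above, here is a minimal sketch of a single perceptron. The function names and the hand-picked weights for logical AND are assumptions chosen for the example, not values from the slides.

```python
def step(z):
    """Threshold activation: 1 if the weighted sum is non-negative, else 0."""
    return 1 if z >= 0 else 0


def perceptron_output(weights, bias, inputs):
    """Weighted sum of the inputs plus a bias, passed through the threshold."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return step(z)


# Hypothetical hand-picked parameters that make the unit behave like logical AND.
AND_WEIGHTS = [1.0, 1.0]
AND_BIAS = -1.5

# Evaluate the unit on all four binary input pairs: (0,0), (0,1), (1,0), (1,1).
and_outputs = [
    perceptron_output(AND_WEIGHTS, AND_BIAS, [a, b])
    for a in (0, 1)
    for b in (0, 1)
]
print(and_outputs)  # [0, 0, 0, 1]
```

AND is linearly separable, so a single line (here `x1 + x2 = 1.5`) is enough to split the inputs that should output 1 from those that should output 0.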

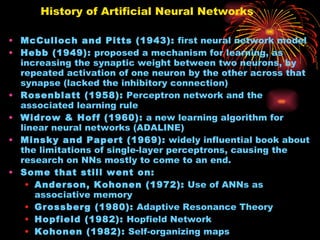

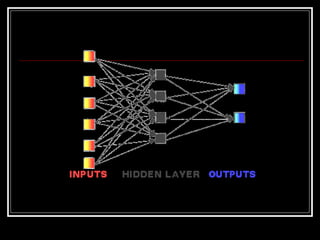

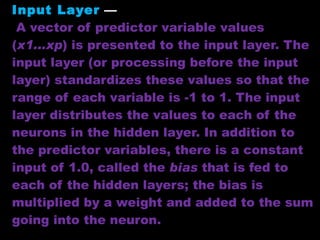

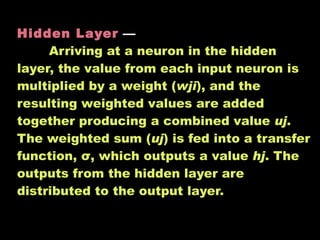

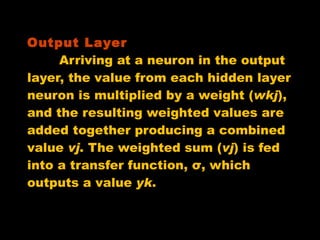

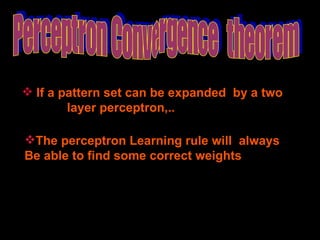

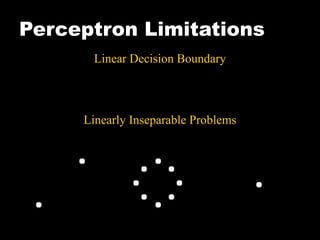

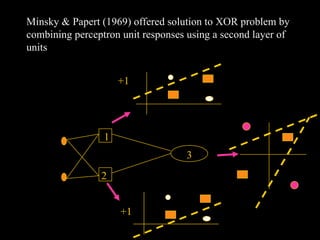

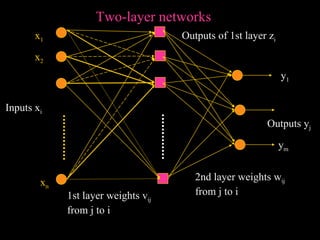

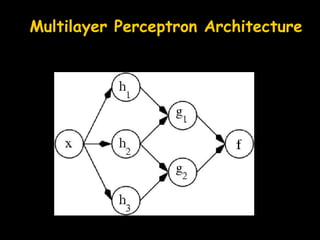

2. Multilayer perceptrons can learn non-linear patterns by stacking multiple layers of perceptrons with weighted connections between them. They were developed to overcome the limitations of single-layer perceptrons, which cannot represent patterns that are not linearly separable, such as XOR.
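To make the point about non-linear patterns concrete, the sketch below wires three threshold units into a two-layer network that computes XOR, a function no single perceptron can represent. The weights are set by hand purely for illustration (they are an assumption, not learned and not taken from the slides).

```python
def step(z):
    """Threshold activation: 1 if the weighted sum is non-negative, else 0."""
    return 1 if z >= 0 else 0


def unit(weights, bias, inputs):
    """A single threshold unit: weighted sum plus bias, then the step function."""
    return step(sum(w * x for w, x in zip(weights, inputs)) + bias)


def xor_network(a, b):
    """Two hidden units feed one output unit (hand-set, hypothetical weights)."""
    h_or = unit([1.0, 1.0], -0.5, [a, b])       # fires if at least one input is 1
    h_nand = unit([-1.0, -1.0], 1.5, [a, b])    # fires unless both inputs are 1
    return unit([1.0, 1.0], -1.5, [h_or, h_nand])  # AND of the two hidden units


xor_outputs = [xor_network(a, b) for a in (0, 1) for b in (0, 1)]
print(xor_outputs)  # [0, 1, 1, 0]
```

Each hidden unit draws one line in the input plane; the output unit combines the two half-planes, which is what lets the network separate a pattern that one line alone cannot.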

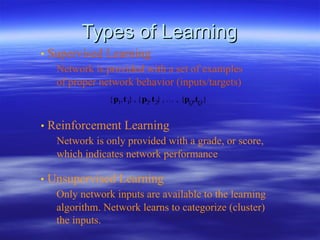

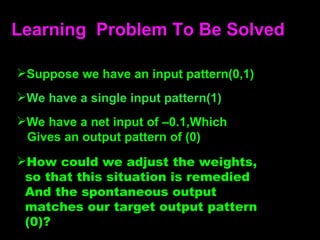

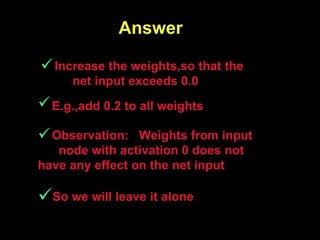

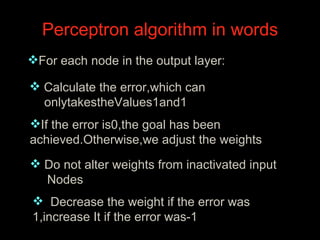

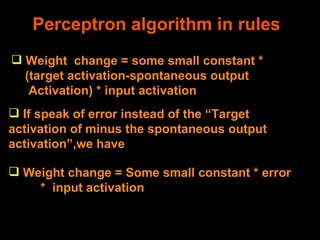

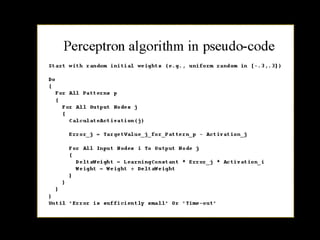

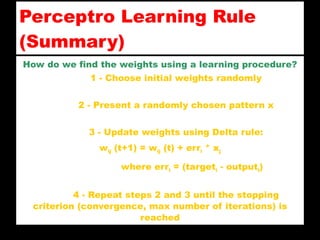

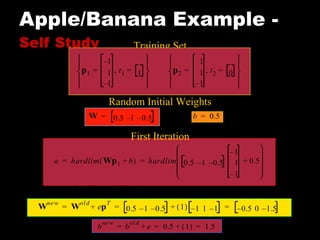

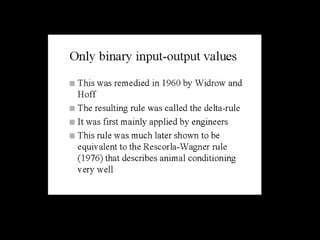

3. Perceptrons are trained using an error-correction learning rule, closely related to the delta rule (also known as the least mean squares, or LMS, algorithm). After each training example, the weights are adjusted to reduce the error between the actual and target outputs.
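The weight adjustment can be sketched as follows. This example uses the classic perceptron error-correction update, `w += lr * (target - output) * x`, applied to the thresholded output; the delta (LMS) rule has the same form but measures the error on the linear output before thresholding. The function names, the learning rate, the zero initialization, and the AND training set are all assumptions made for the illustration.

```python
def step(z):
    """Threshold activation: 1 if the weighted sum is non-negative, else 0."""
    return 1 if z >= 0 else 0


def predict(weights, bias, inputs):
    return step(sum(w * x for w, x in zip(weights, inputs)) + bias)


def train_perceptron(samples, lr=1.0, max_epochs=100):
    """Error-correction training: nudge weights by lr * error * input."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            if error != 0:
                mistakes += 1
                weights = [w + lr * error * x for w, x in zip(weights, inputs)]
                bias += lr * error
        if mistakes == 0:  # every sample classified correctly: stop early
            break
    return weights, bias


# Hypothetical training set: logical AND, which is linearly separable.
and_samples = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
weights, bias = train_perceptron(and_samples)
predictions = [predict(weights, bias, inputs) for inputs, _ in and_samples]
print(predictions)  # [0, 0, 0, 1]
```

Because AND is linearly separable, the updates stop after a finite number of passes; on a non-separable set such as XOR the same loop would simply run until `max_epochs`.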

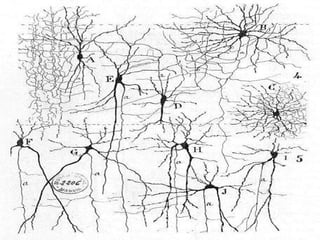

![Cybernetics and brain simulation Main articles: Cybernetics and Computational neuroscience There is no consensus on how closely the brain should be simulated. In the 1940s and 1950s, a number of researchers explored the connection between neurology, information theory, and cybernetics. Some of them built machines that used electronic networks to exhibit rudimentary intelligence, such as W. Grey Walter's turtles and the Johns Hopkins Beast. Many of these researchers gathered for meetings of the Teleological Society at Princeton University and the Ratio Club in England. [24] By 1960, this approach was largely abandoned, although elements of it would be revived in the 1980s.](https://image.slidesharecdn.com/perceptron-100509120859-phpapp01/85/Perceptron-43-320.jpg)

![General intelligence Main articles: Strong AI and AI-complete Most researchers hope that their work will eventually be incorporated into a machine with general intelligence (known as strong AI), combining all the skills above and exceeding human abilities at most or all of them. [12] A few believe that anthropomorphic features like artificial consciousness or an artificial brain may be required for such a project. [74] Many of the problems above are considered AI-complete: to solve one problem, you must solve them all. For example, even a straightforward, specific task like machine translation requires that the machine follow the author's argument (reason), know what is being talked about (knowledge), and faithfully reproduce the author's intention (social intelligence). Machine translation, therefore, is believed to be AI-complete: it may require strong AI to be done as well as humans can do it. [75]](https://image.slidesharecdn.com/perceptron-100509120859-phpapp01/85/Perceptron-45-320.jpg)