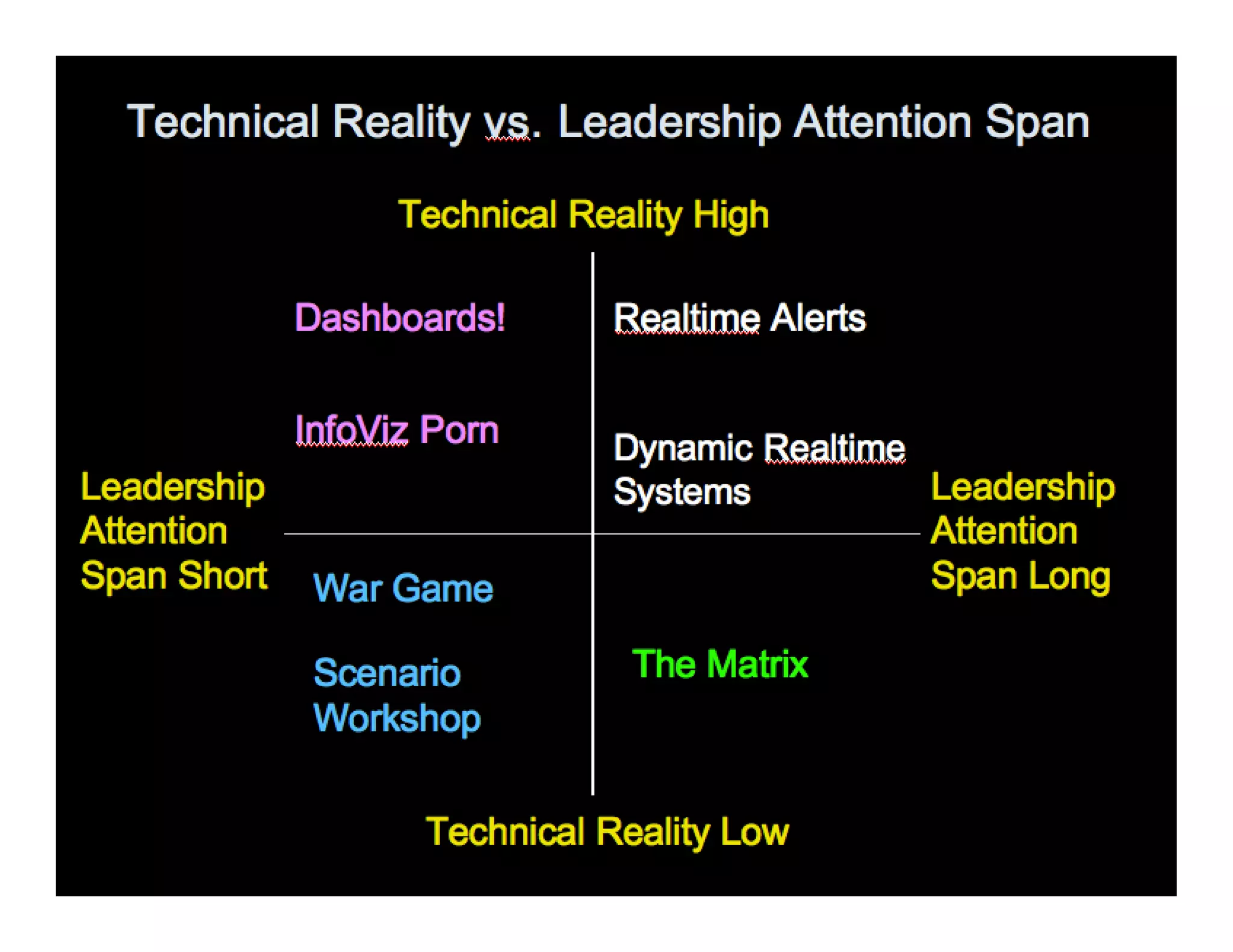

This document discusses common issues ("pathologies") that organizations face when adopting analytics and data-driven approaches. The pathologies are summarized below in three sentences or fewer:

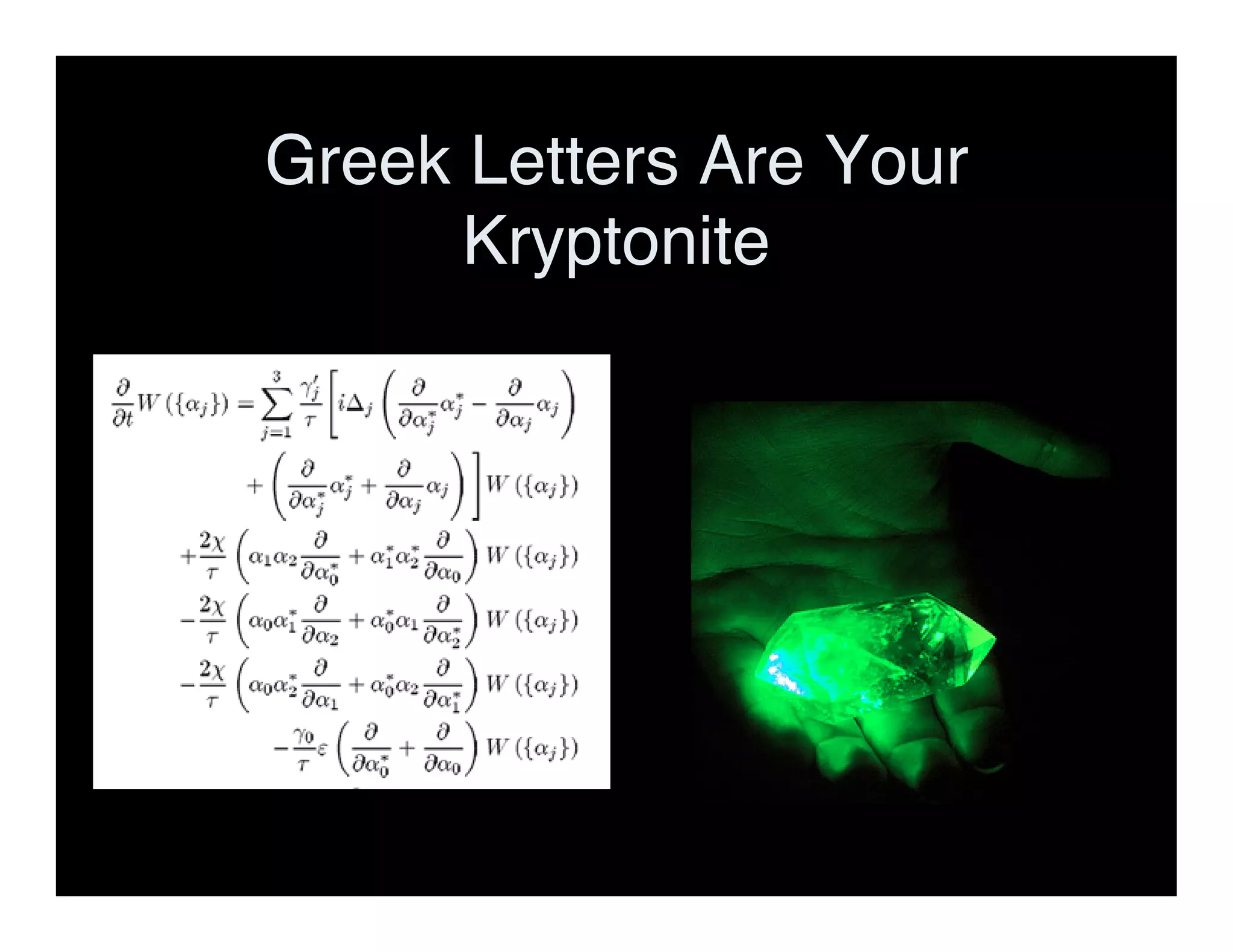

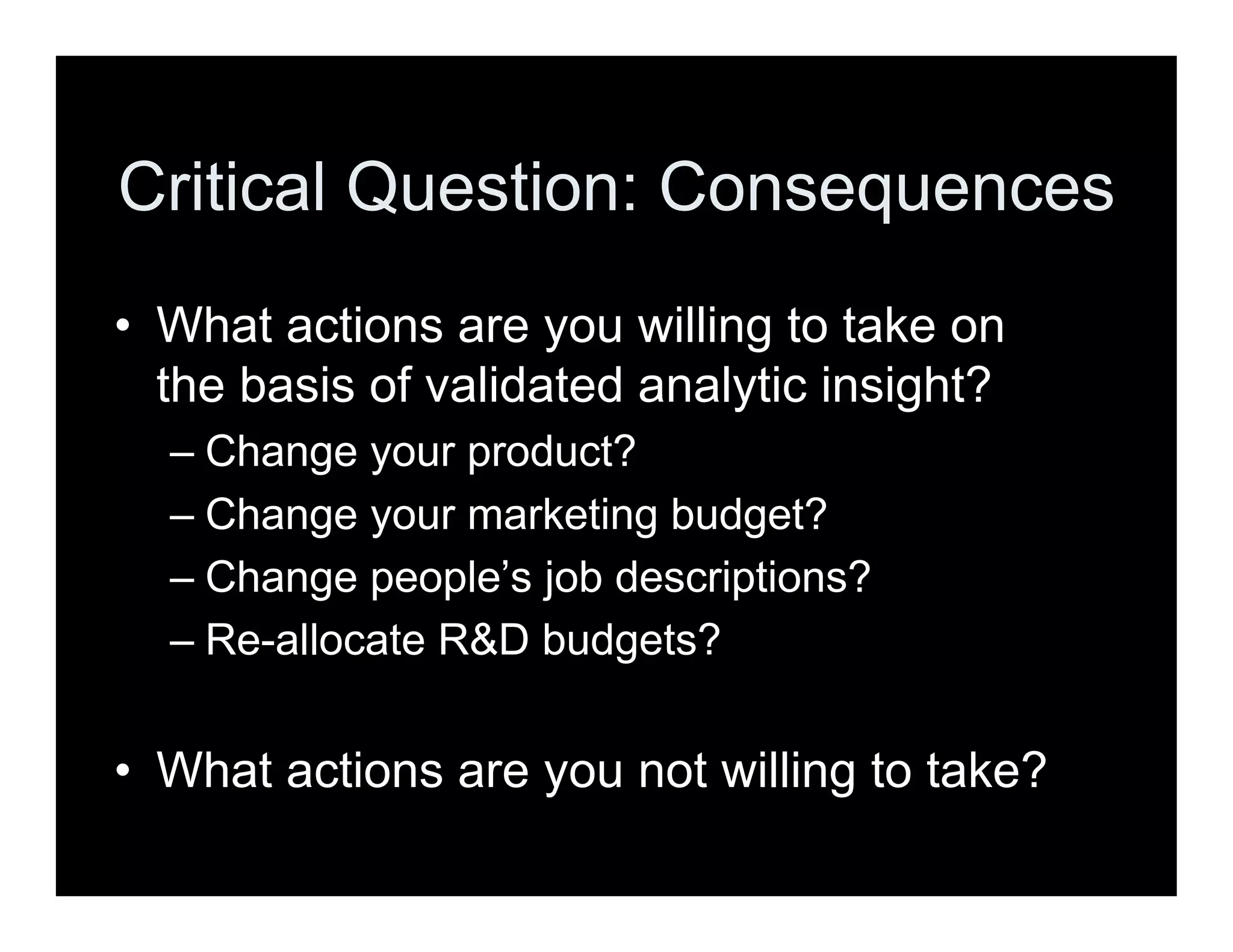

Organizations often treat analytics as a "black box" and do not understand how it works, both because analytics is technical and because its algorithms and methods lack transparency. Many projects fail because organizations jump into analytics without properly preparing their data, validating results, or planning how insights will be implemented and how they will drive business change. To adopt analytics successfully, organizations must ask critical questions about data quality, intended use cases, and the consequences of results, so that they focus their efforts and avoid wasting resources on initiatives that provide no value.