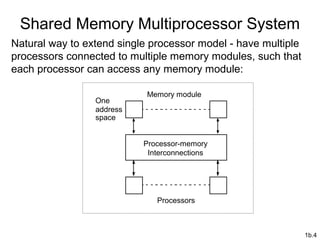

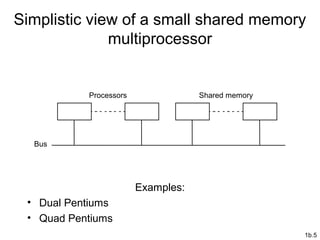

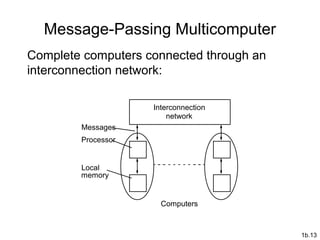

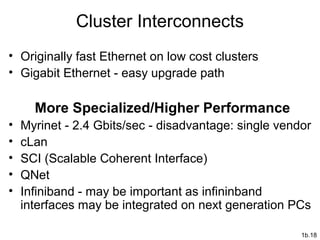

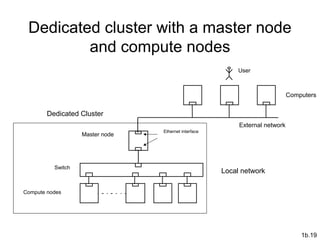

There are two main types of parallel computers: shared memory multiprocessors and distributed memory multicomputers. In a shared memory multiprocessor, multiple processors access a single shared address space. A distributed memory multicomputer consists of separate computers, each with its own memory, connected by an interconnection network and communicating by message passing. Beowulf clusters are a type of distributed memory multicomputer built from interconnected commodity computers, providing high-performance computing at low cost. Programming distributed memory systems typically requires message-passing libraries, with which the programmer explicitly specifies the communication between processes on different computers.
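The message-passing style described above can be illustrated with a minimal sketch. It is not a real cluster program: threads from the Python standard library stand in for the separate computers, and `queue.Queue` objects stand in for the interconnect. The names `worker`, `parallel_sum`, `inbox`, and `outbox` are hypothetical, chosen for illustration only. The point it tries to show is that each worker operates only on data explicitly sent to it and returns its result by sending a message, rather than reading a shared variable.

```python
import queue
import threading


def worker(rank, inbox, outbox):
    """Simulated node: it only sees data that arrives as a message."""
    chunk = inbox.get()               # blocking receive from the master
    outbox.put((rank, sum(chunk)))    # send the partial result back


def parallel_sum(data, num_workers=4):
    """Sum `data` by scattering chunks to workers and gathering partial sums.

    Assumption: threads and queues imitate separate computers and their
    network; a real distributed memory program would use a message-passing
    library instead.
    """
    inboxes = [queue.Queue() for _ in range(num_workers)]
    results = queue.Queue()

    threads = [
        threading.Thread(target=worker, args=(rank, inboxes[rank], results))
        for rank in range(num_workers)
    ]
    for t in threads:
        t.start()

    # Scatter: each worker gets its own copy of a strided slice of the data.
    for rank in range(num_workers):
        inboxes[rank].put(data[rank::num_workers])

    # Gather: receive exactly one message per worker, in arrival order.
    total = 0
    for _ in range(num_workers):
        _rank, partial = results.get()
        total += partial

    for t in threads:
        t.join()
    return total


if __name__ == "__main__":
    print(parallel_sum(list(range(1, 101))))  # prints 5050
```

On a shared memory multiprocessor the workers could instead read `data` directly. Here every transfer is an explicit send or receive, which is the extra programming burden the paragraph attributes to distributed memory systems.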