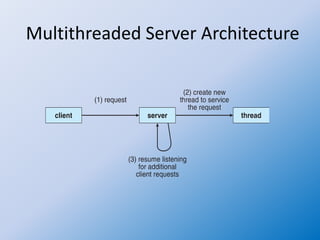

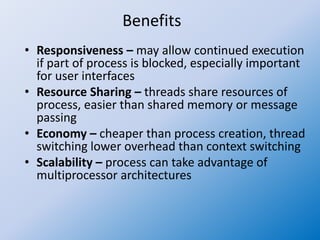

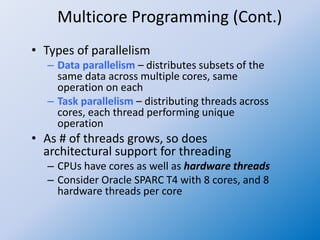

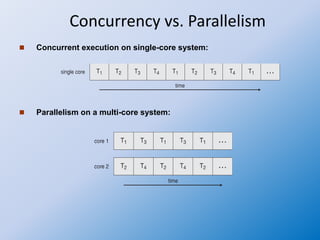

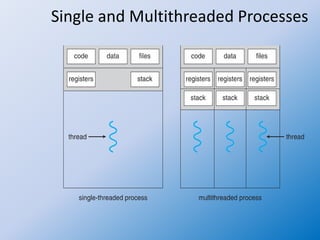

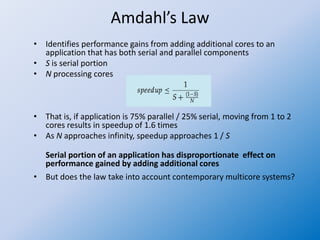

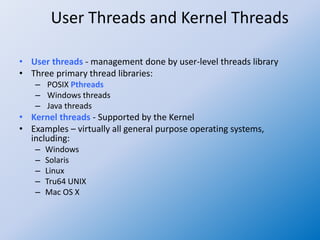

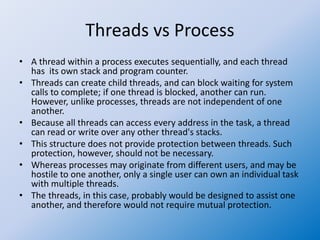

The document discusses multithreaded server architectures and the benefits of threads: better responsiveness, resource sharing within a process, and lower overhead than creating and switching between separate processes. It covers the challenges of multicore programming, including dividing activities into parallel tasks and handling data dependencies between them, and explains the difference between user threads and kernel threads. It also emphasizes the advantages of threads over processes, such as more efficient use of resources and cooperation among the threads within a task.
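As a rough illustration of the resource sharing and data dependency points above, the sketch below runs several worker threads inside one process, all updating the same in-memory record. It is a minimal example under stated assumptions, not code from the slides: the names `handle_requests` and `run_server` and the shape of the `shared` dictionary are hypothetical, and "handling a request" is reduced to incrementing counters.

```python
import threading


def handle_requests(shared, lock, worker_id, n_requests):
    """Hypothetical worker: 'handles' n_requests by updating shared state."""
    for _ in range(n_requests):
        # The counters are shared by every thread in the process, so the
        # read-modify-write below is a data dependency that needs a lock.
        with lock:
            shared["handled"] += 1
            per_worker = shared["by_worker"]
            per_worker[worker_id] = per_worker.get(worker_id, 0) + 1


def run_server(n_workers=4, n_requests=1000):
    """Start n_workers threads that all share one dictionary and one lock."""
    shared = {"handled": 0, "by_worker": {}}
    lock = threading.Lock()
    threads = [
        threading.Thread(
            target=handle_requests, args=(shared, lock, worker_id, n_requests)
        )
        for worker_id in range(n_workers)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # wait for every worker before reading the totals
    return shared


if __name__ == "__main__":
    result = run_server()
    print(result["handled"], "requests handled by", len(result["by_worker"]), "threads")
```

The threads need no explicit message passing because they live in the same address space. Separate processes would each get their own copy of `shared` and would need an inter-process mechanism to combine the counts.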