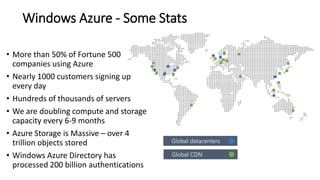

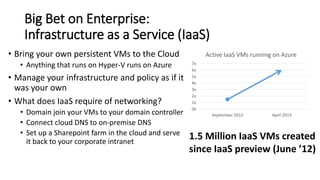

This document discusses Microsoft's use of software-defined networking (SDN) in Windows Azure. Key points:

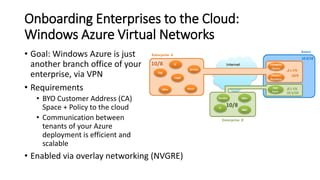

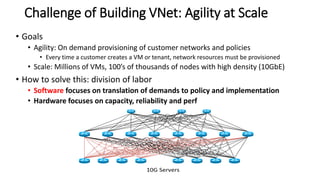

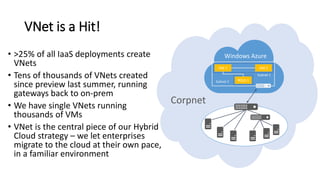

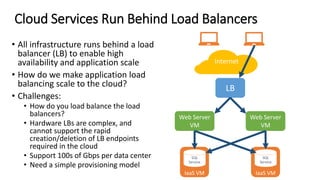

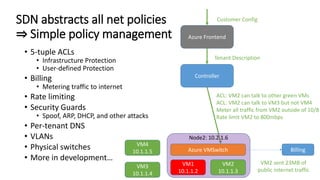

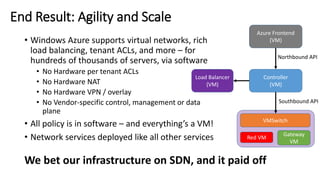

- Microsoft uses SDN to provide infrastructure services like virtual networks (VNETs), load balancing, and tenant access control lists at scale in Windows Azure.

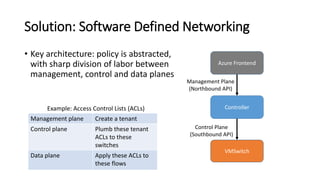

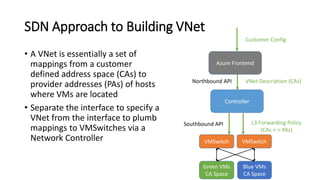

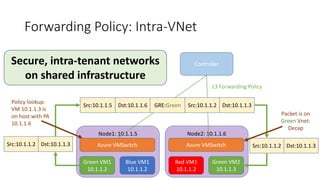

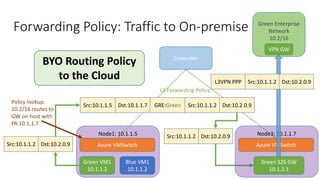

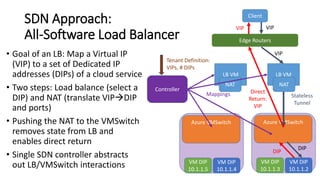

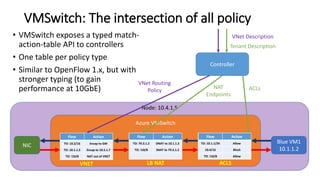

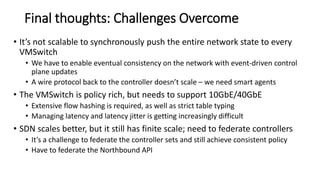

- The SDN approach separates the management, control, and data planes. A centralized controller programs network policies into virtual switches to enable services like VNETs and load balancing across hundreds of thousands of servers.

- This design lets Windows Azure securely connect enterprise infrastructure to the cloud and provide scalable networking services to tenants without relying on dedicated hardware appliances. Because policy is programmed in software, SDN gives Windows Azure agility at massive scale.
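
The separation of control and data planes described above can be illustrated with a minimal sketch. It is not Microsoft's implementation: the names `Controller`, `VirtualSwitch`, and `AclRule`, the first-match rule semantics, and the default-deny behavior are all assumptions chosen for illustration. It only shows the general pattern of a central controller holding tenant policy and programming it into each host's virtual switch.

```python
from dataclasses import dataclass, field
from ipaddress import ip_address, ip_network


@dataclass(frozen=True)
class AclRule:
    """Hypothetical tenant ACL entry: allow or deny a source prefix on a port."""
    src_prefix: str
    dst_port: int
    allow: bool


@dataclass
class VirtualSwitch:
    """Data plane: enforces whatever rules the controller has programmed."""
    host: str
    rules: list = field(default_factory=list)

    def program(self, rules):
        # Replace the local rule table with the controller's current policy.
        self.rules = list(rules)

    def permits(self, src_ip, dst_port):
        # Assumed semantics: first matching rule wins, unmatched traffic is dropped.
        for rule in self.rules:
            in_prefix = ip_address(src_ip) in ip_network(rule.src_prefix)
            if rule.dst_port == dst_port and in_prefix:
                return rule.allow
        return False


class Controller:
    """Control plane: holds tenant policy and pushes it to every known switch."""

    def __init__(self):
        self.switches = []
        self.policy = []

    def register(self, switch):
        # A newly registered switch immediately receives the current policy.
        self.switches.append(switch)
        switch.program(self.policy)

    def set_policy(self, rules):
        # The management plane would call this with the tenant's desired state.
        self.policy = list(rules)
        for switch in self.switches:
            switch.program(self.policy)


# Usage: three hosts, one policy change, applied everywhere by the controller.
controller = Controller()
switches = [VirtualSwitch(f"host-{i}") for i in range(3)]
for s in switches:
    controller.register(s)
controller.set_policy([AclRule("10.0.0.0/8", 443, True)])
```

In this sketch the tenant changes policy in one place and every virtual switch converges on it, with no per-host configuration and no hardware appliance in the path. A production system would also need incremental updates, failure handling, and per-tenant isolation, which are omitted here.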