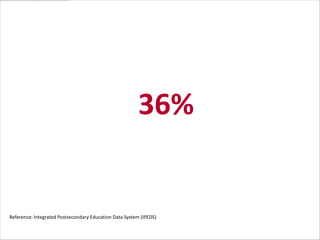

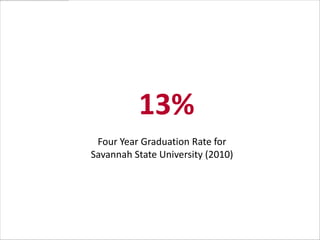

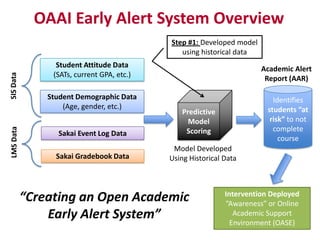

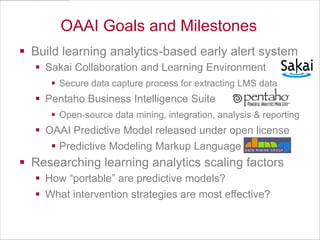

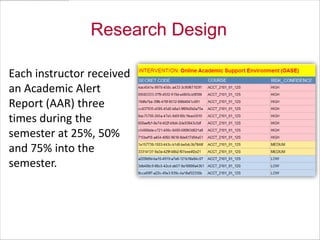

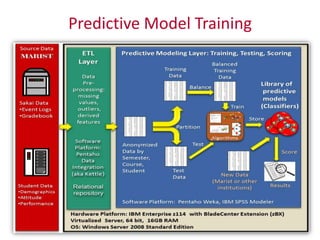

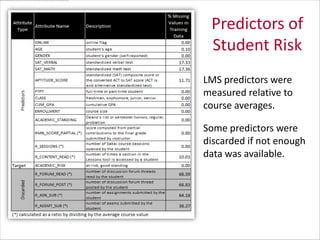

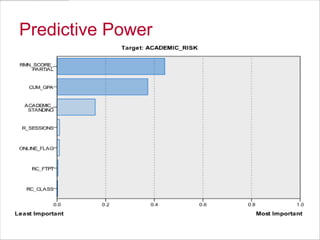

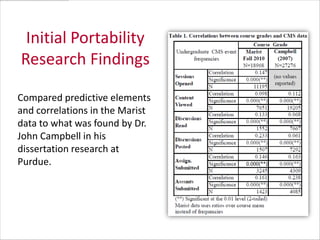

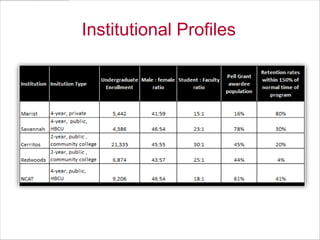

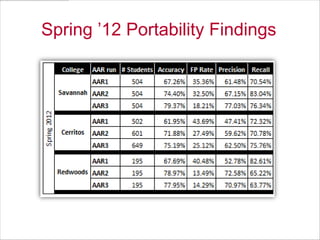

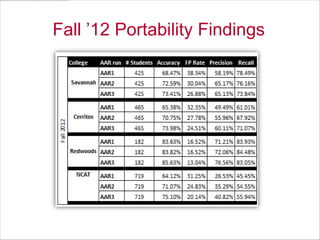

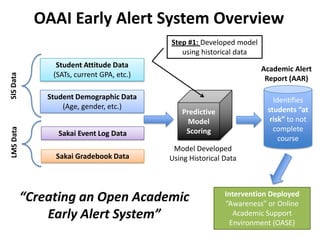

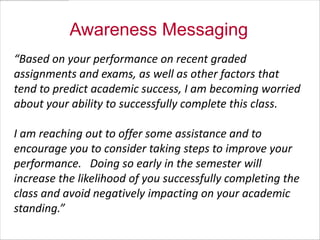

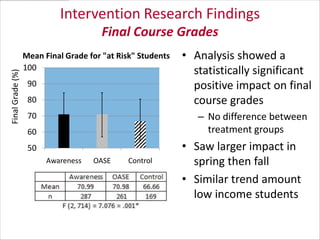

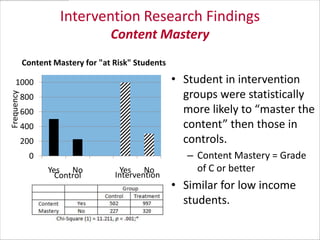

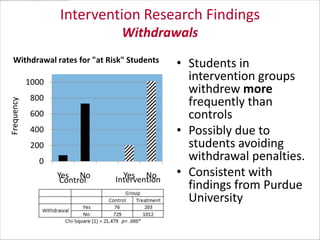

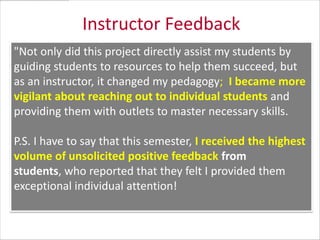

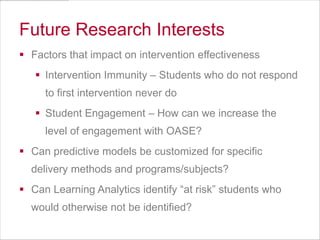

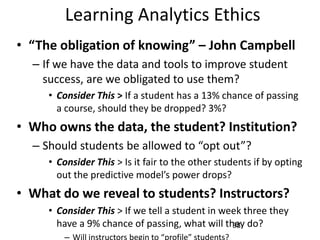

The Open Academic Analytics Initiative (OAAI) aims to develop an open-source academic early alert system that uses learning analytics to identify at-risk students. Funded by the Bill & Melinda Gates Foundation, the project deploys a predictive model across various institutions and sends academic alerts to instructors, with the goal of improving student success. Research findings indicate that the intervention helped improve final course grades and content mastery, but it also led to higher withdrawal rates among at-risk students.