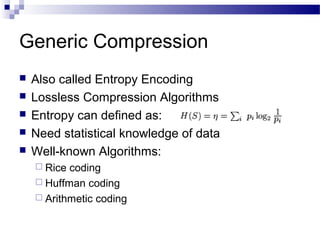

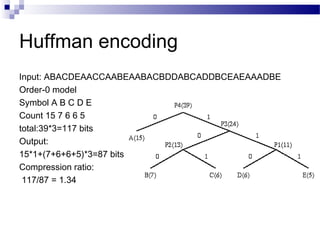

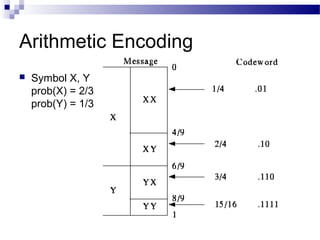

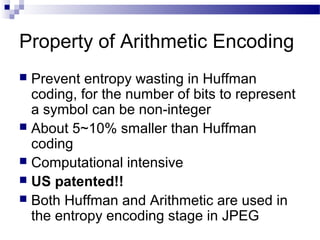

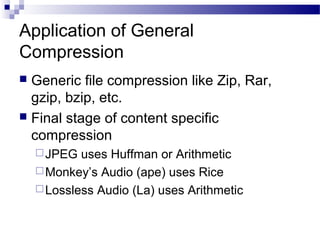

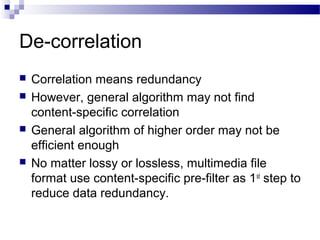

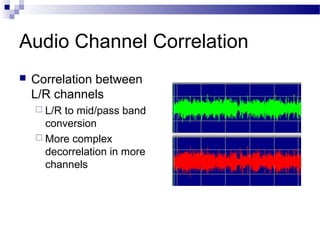

This document discusses multimedia compression. It begins by explaining why compression is needed: raw audio and video data are very large. It then gives an overview of generic compression algorithms and content-specific compression techniques. It discusses lossy compression and introduces common lossless compression algorithms such as Huffman coding and arithmetic coding. Finally, it explains how content-specific compression reduces redundancy further by decorrelating audio, image, and video data, exploiting temporal, channel, color-space, and spatial correlations.
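The summary names Huffman coding as one of the common lossless algorithms. The sketch below illustrates the general technique and is not taken from the slides. It counts symbol frequencies, repeatedly merges the two lightest subtrees, and assigns shorter bit strings to more frequent symbols, which gives a prefix-free code. The function names `huffman_code`, `encode`, and `decode` are hypothetical helpers chosen for illustration. Bits are represented as a Python string of `"0"`/`"1"` characters for readability rather than packed into bytes.

```python
import heapq
from collections import Counter


def huffman_code(data: str) -> dict[str, str]:
    """Build a prefix-free Huffman code table for the symbols in `data` (illustrative sketch)."""
    freq = Counter(data)
    if not freq:
        return {}
    if len(freq) == 1:
        # A single distinct symbol still needs a non-empty (1-bit) code.
        (sym,) = freq
        return {sym: "0"}
    # Heap entries: (weight, tie_breaker, {symbol: code_suffix}).
    # The unique tie_breaker prevents Python from ever comparing the dicts.
    heap = [(w, i, {s: ""}) for i, (s, w) in enumerate(freq.items())]
    heapq.heapify(heap)
    next_id = len(heap)
    while len(heap) > 1:
        # Merge the two lightest subtrees; prefix their codes with 0 and 1.
        w1, _, left = heapq.heappop(heap)
        w2, _, right = heapq.heappop(heap)
        merged = {s: "0" + c for s, c in left.items()}
        merged.update({s: "1" + c for s, c in right.items()})
        heapq.heappush(heap, (w1 + w2, next_id, merged))
        next_id += 1
    return heap[0][2]


def encode(data: str, table: dict[str, str]) -> str:
    """Concatenate the code for each symbol."""
    return "".join(table[s] for s in data)


def decode(bits: str, table: dict[str, str]) -> str:
    """Read bits until they match a code; prefix-freeness makes this unambiguous."""
    reverse = {c: s for s, c in table.items()}
    out, buf = [], ""
    for b in bits:
        buf += b
        if buf in reverse:
            out.append(reverse[buf])
            buf = ""
    return "".join(out)


if __name__ == "__main__":
    text = "abracadabra"
    table = huffman_code(text)
    bits = encode(text, table)
    print(table, len(bits), "bits vs", 8 * len(text), "bits as 8-bit characters")
    assert decode(bits, table) == text
```

For `"abracadabra"` the frequent symbol `a` receives a 1-bit code and the rarer symbols receive 3-bit codes. This is why the encoded form is much shorter than storing one byte per character. Arithmetic coding, the other algorithm the summary mentions, avoids Huffman's whole-bit-per-symbol granularity and can therefore get closer to the entropy bound.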