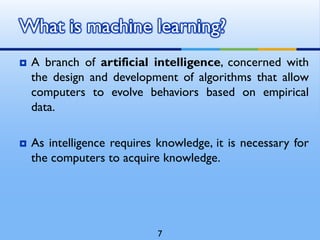

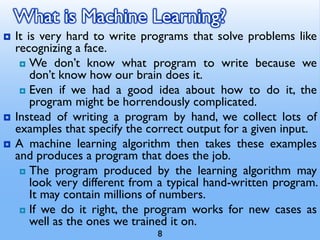

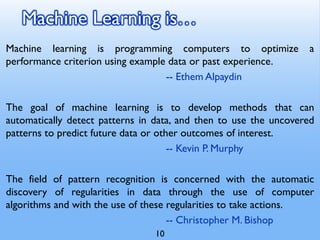

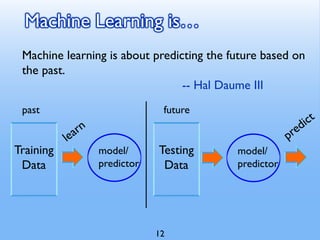

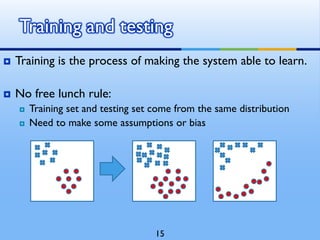

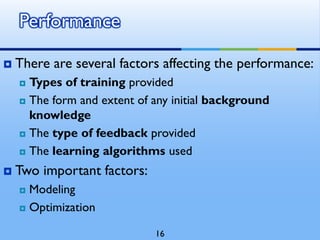

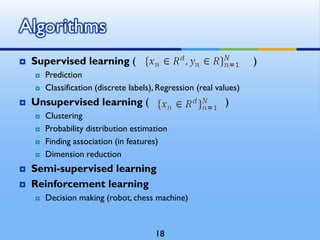

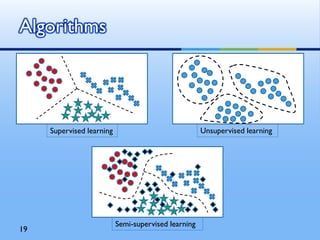

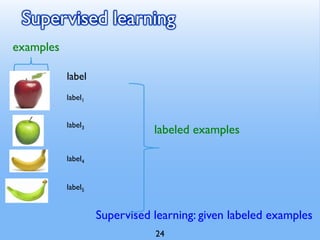

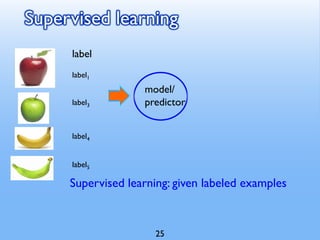

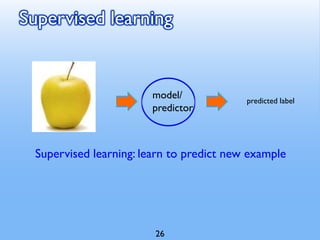

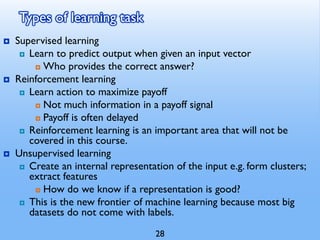

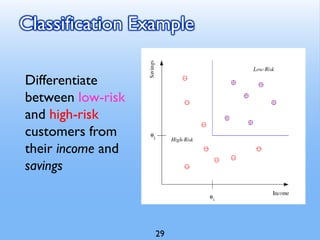

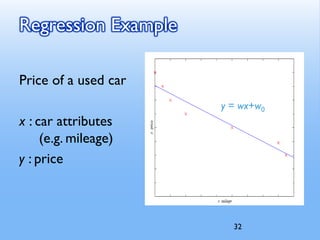

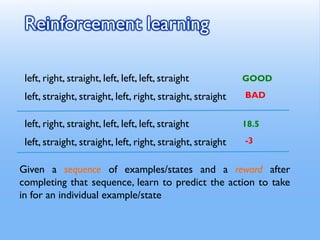

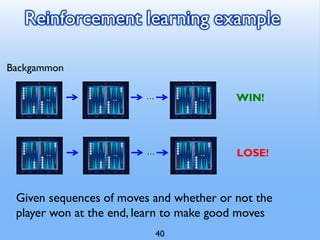

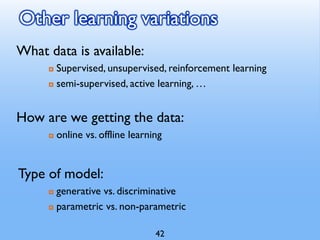

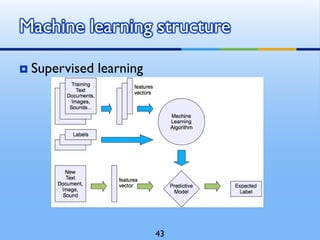

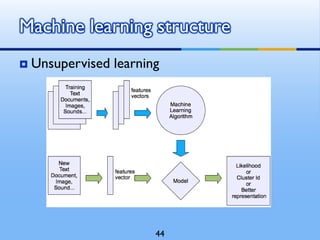

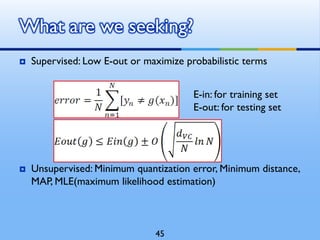

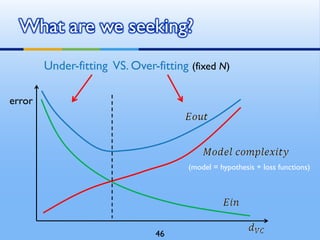

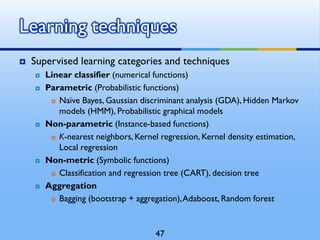

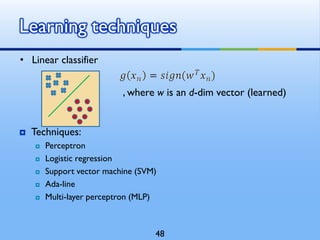

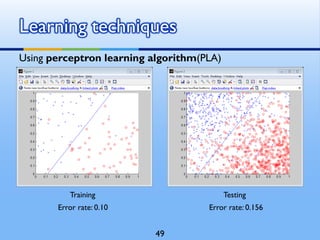

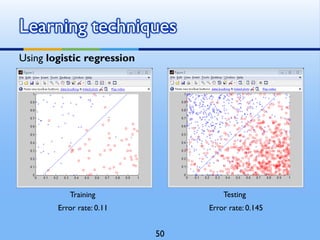

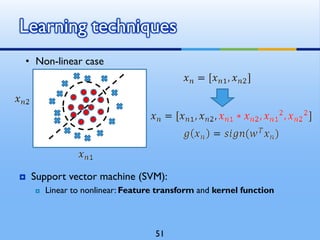

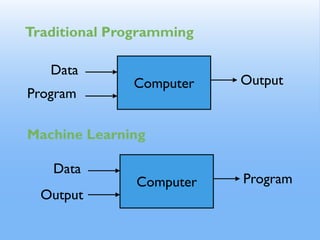

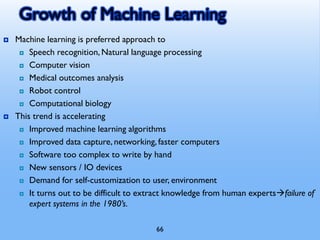

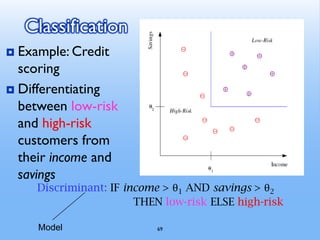

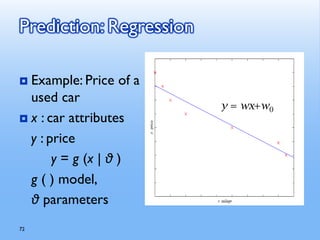

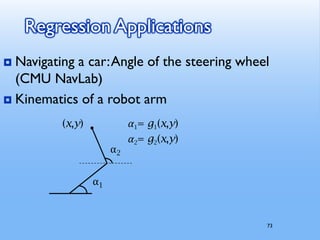

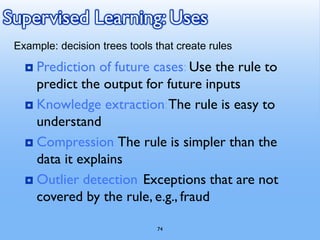

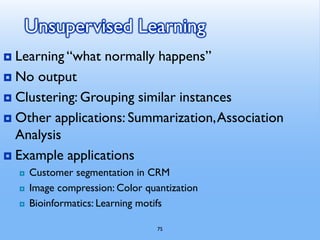

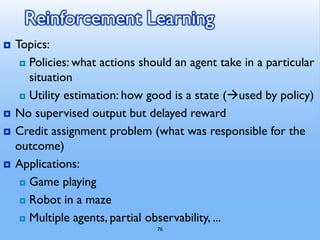

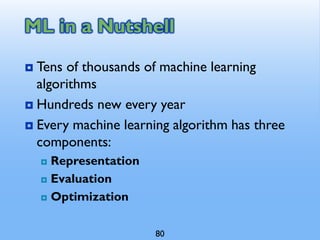

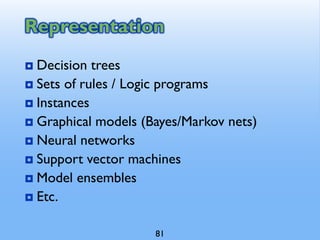

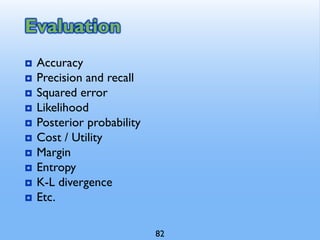

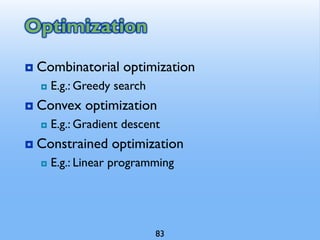

This document provides an overview of machine learning and artificial intelligence concepts. It explains what machine learning is: how machines can learn from examples to improve their performance on a task without being explicitly programmed. It covers a range of machine learning algorithms and applications. These include supervised learning techniques such as classification and regression, as well as unsupervised learning and reinforcement learning. The goal of machine learning is to build models that make accurate predictions on new data, based on patterns discovered in training data.
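The sketch below is a minimal illustration of the supervised setting described above. It is not taken from the slides. A model is fitted to labeled training examples and then used to predict outputs for inputs it has never seen. It shows one regression model (a straight line fitted by ordinary least squares) and one classification model (a 1-nearest-neighbor rule). The helper names `fit_line`, `predict_line` and `nearest_neighbor` are hypothetical, and the toy data is made up for illustration only.

```python
"""Minimal supervised-learning sketch (illustrative only): learn from labeled
examples, then predict on inputs that were not in the training data."""

from math import dist


def fit_line(xs, ys):
    """Regression (hypothetical helper): fit y = w*x + b by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x); intercept passes through the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    w = cov / var
    b = mean_y - w * mean_x
    return w, b


def predict_line(model, x):
    """Apply a fitted (w, b) line to a new input x."""
    w, b = model
    return w * x + b


def nearest_neighbor(train_points, train_labels, query):
    """Classification (hypothetical helper): return the label of the closest training point."""
    best = min(range(len(train_points)), key=lambda i: dist(train_points[i], query))
    return train_labels[best]


if __name__ == "__main__":
    # Toy regression data generated from y = 2x + 1 (no noise).
    model = fit_line([0, 1, 2, 3], [1, 3, 5, 7])
    print(predict_line(model, 10))  # 21.0

    # Toy classification data: two clusters labeled "A" and "B".
    points = [(0, 0), (0, 1), (5, 5), (6, 5)]
    labels = ["A", "A", "B", "B"]
    print(nearest_neighbor(points, labels, (5.8, 4.5)))  # B
```

In both cases the "learning" is just a summary of the training data. For regression this is the slope and intercept. For 1-NN it is the stored examples themselves. That summary is then applied to new inputs, which is the pattern the paragraph above describes.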

![Sample Applications: Web search, Computational biology, Finance, E-commerce, Space exploration, Robotics, Information extraction, Social networks, Debugging, [Your favorite area]](https://image.slidesharecdn.com/dr-191204061356/85/Machine-Learning-an-Research-Overview-65-320.jpg)

![Reference: [1] W. L. Chao, J. J. Ding, "Integrated Machine Learning Algorithms for Human Age Estimation", NTU, 2011.](https://image.slidesharecdn.com/dr-191204061356/85/Machine-Learning-an-Research-Overview-85-320.jpg)