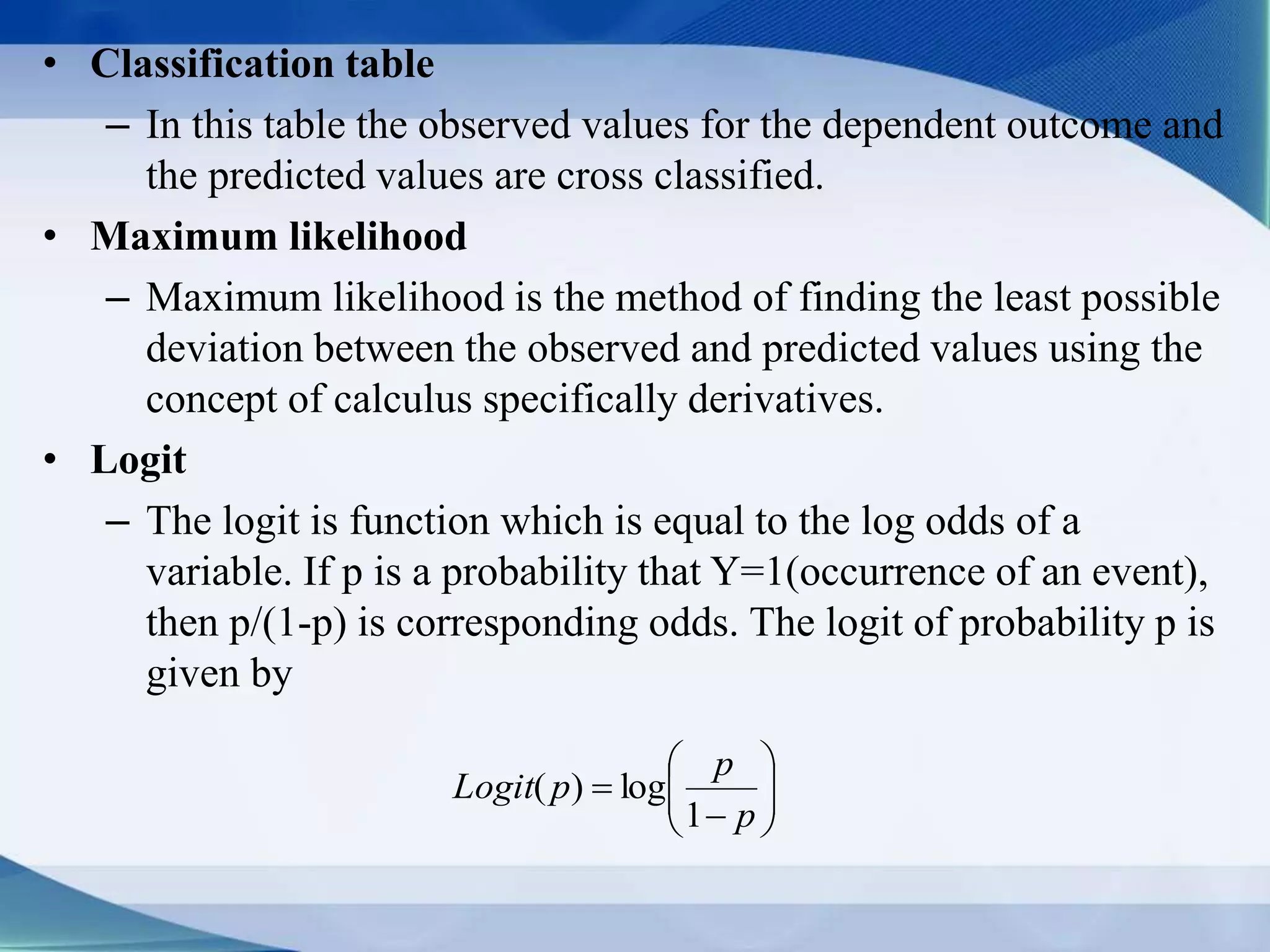

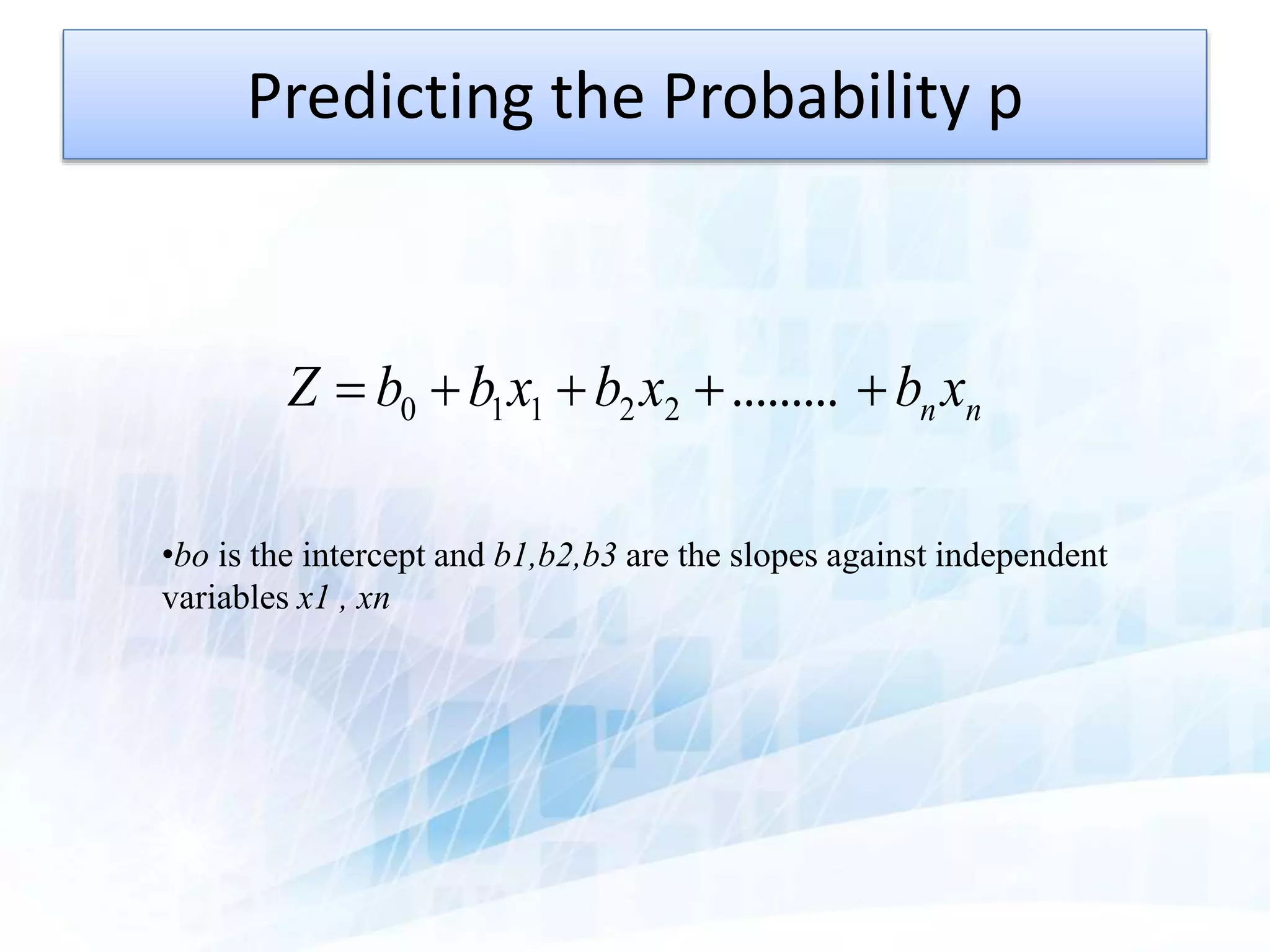

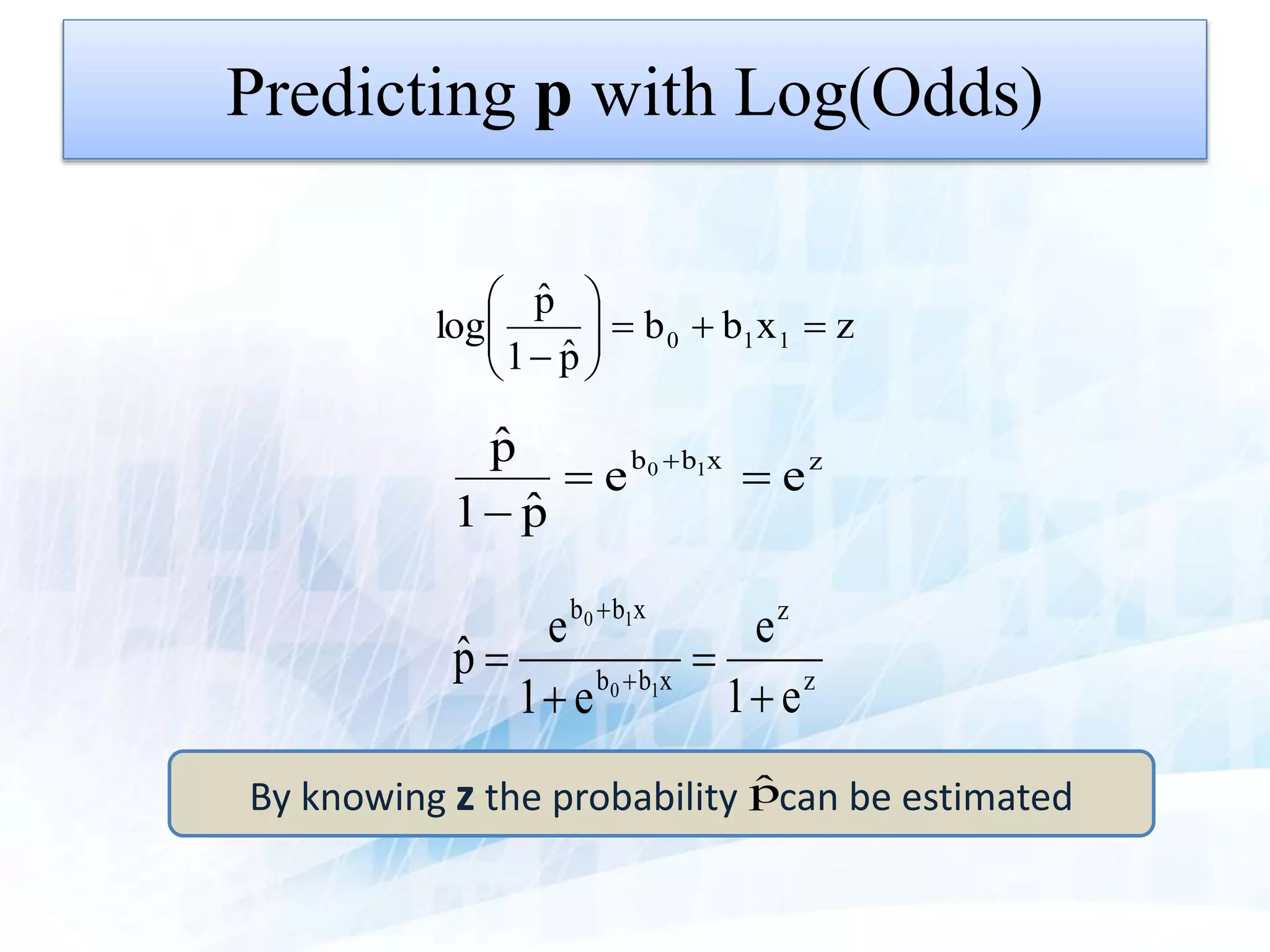

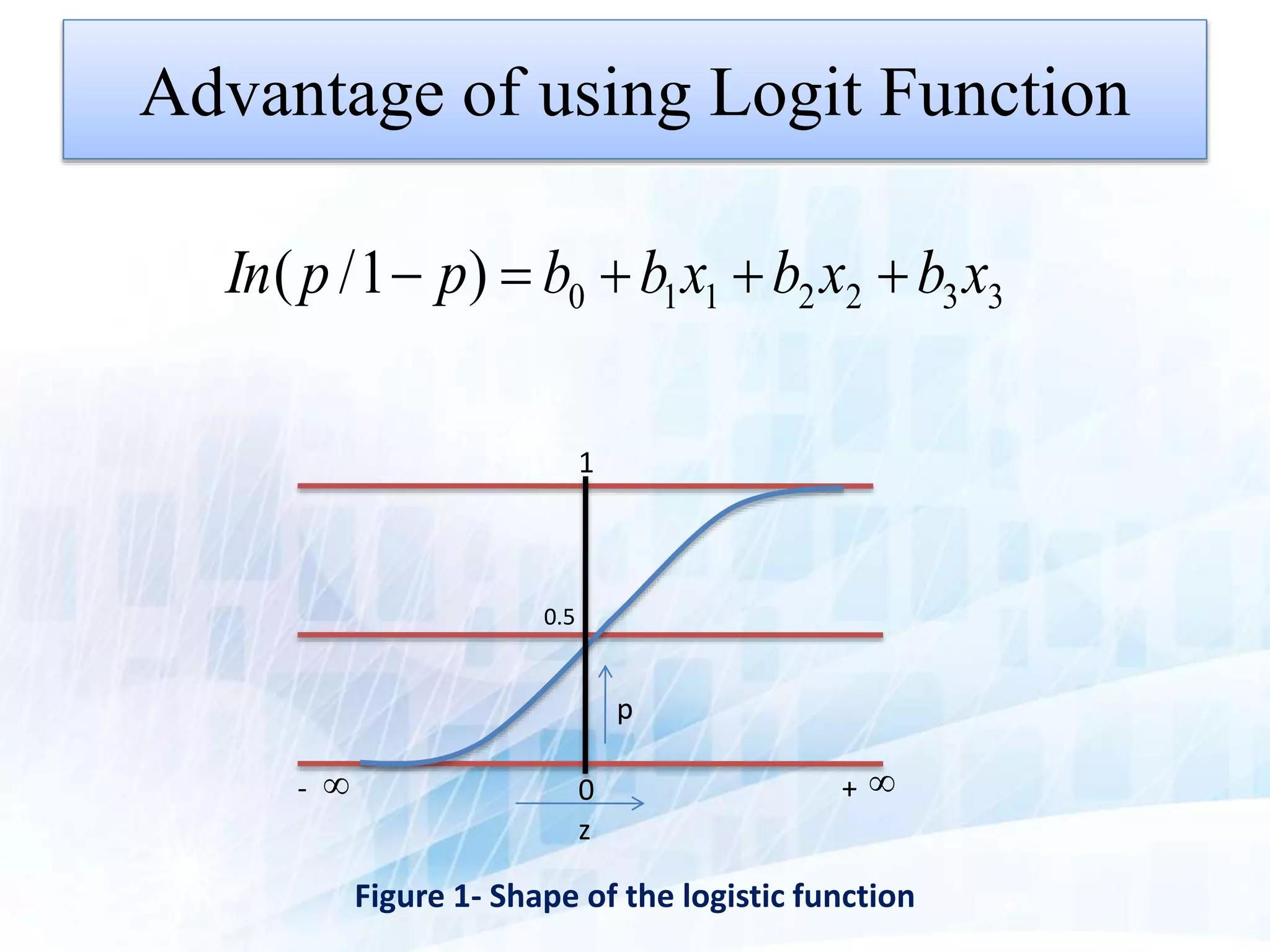

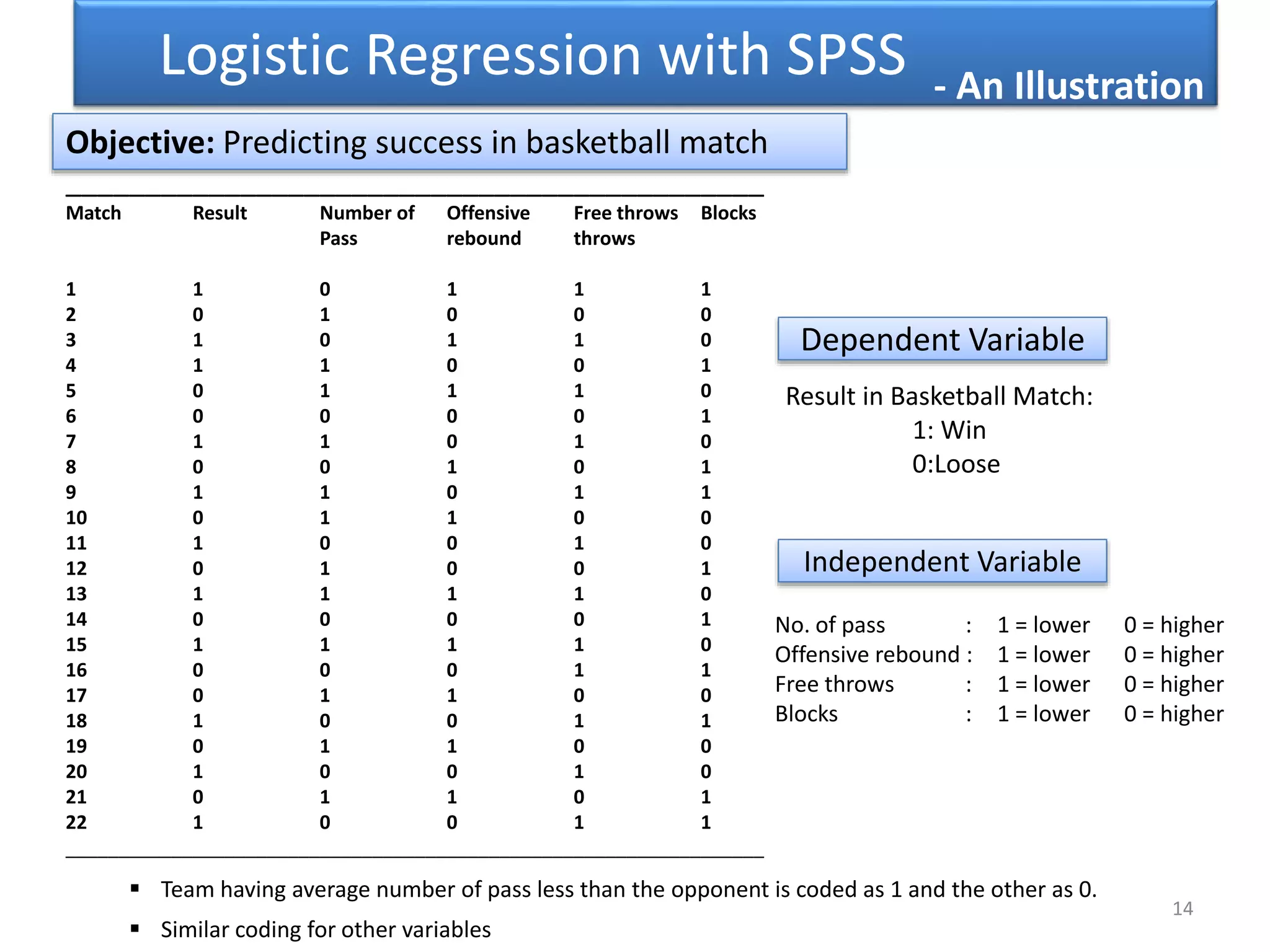

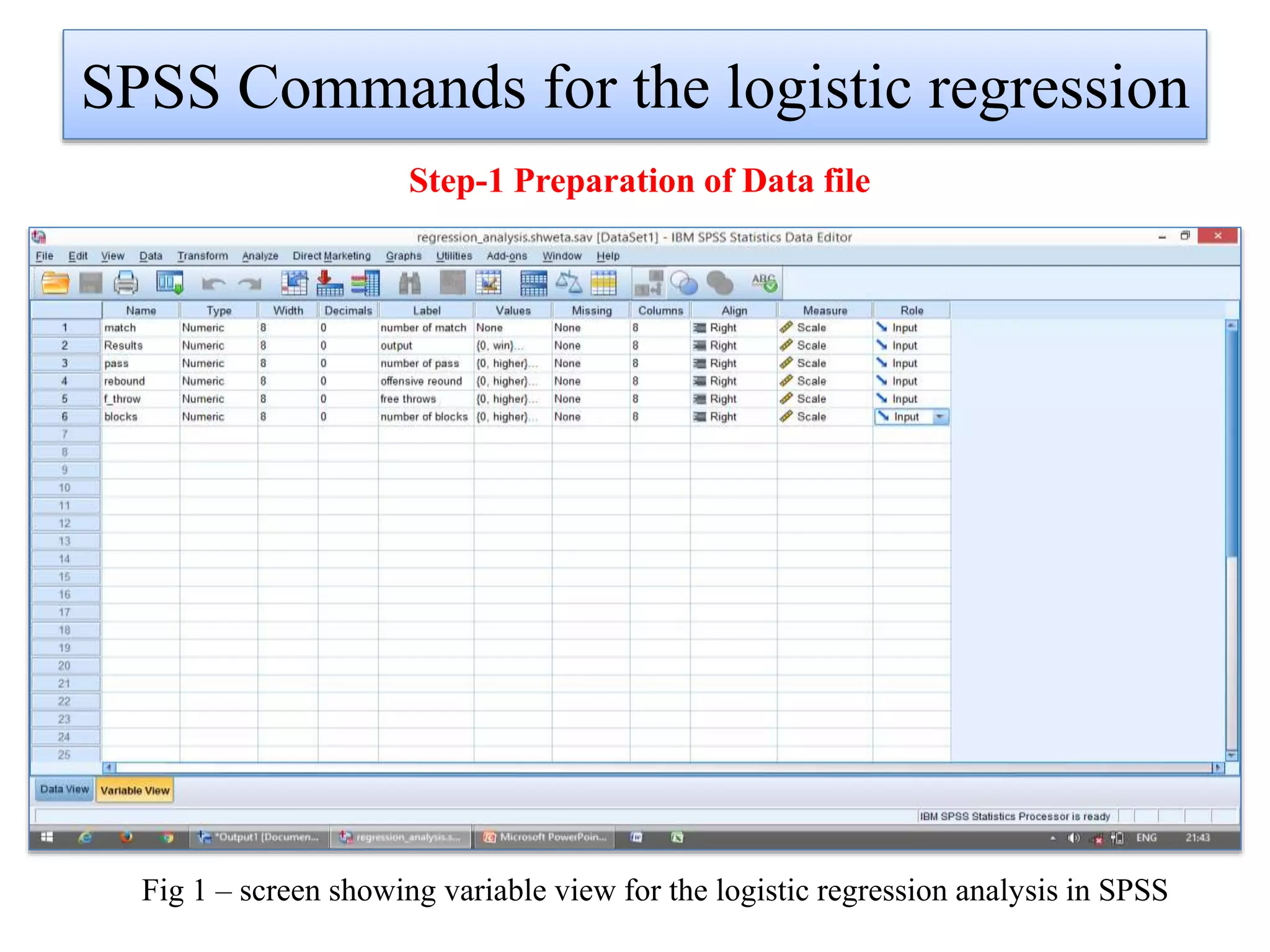

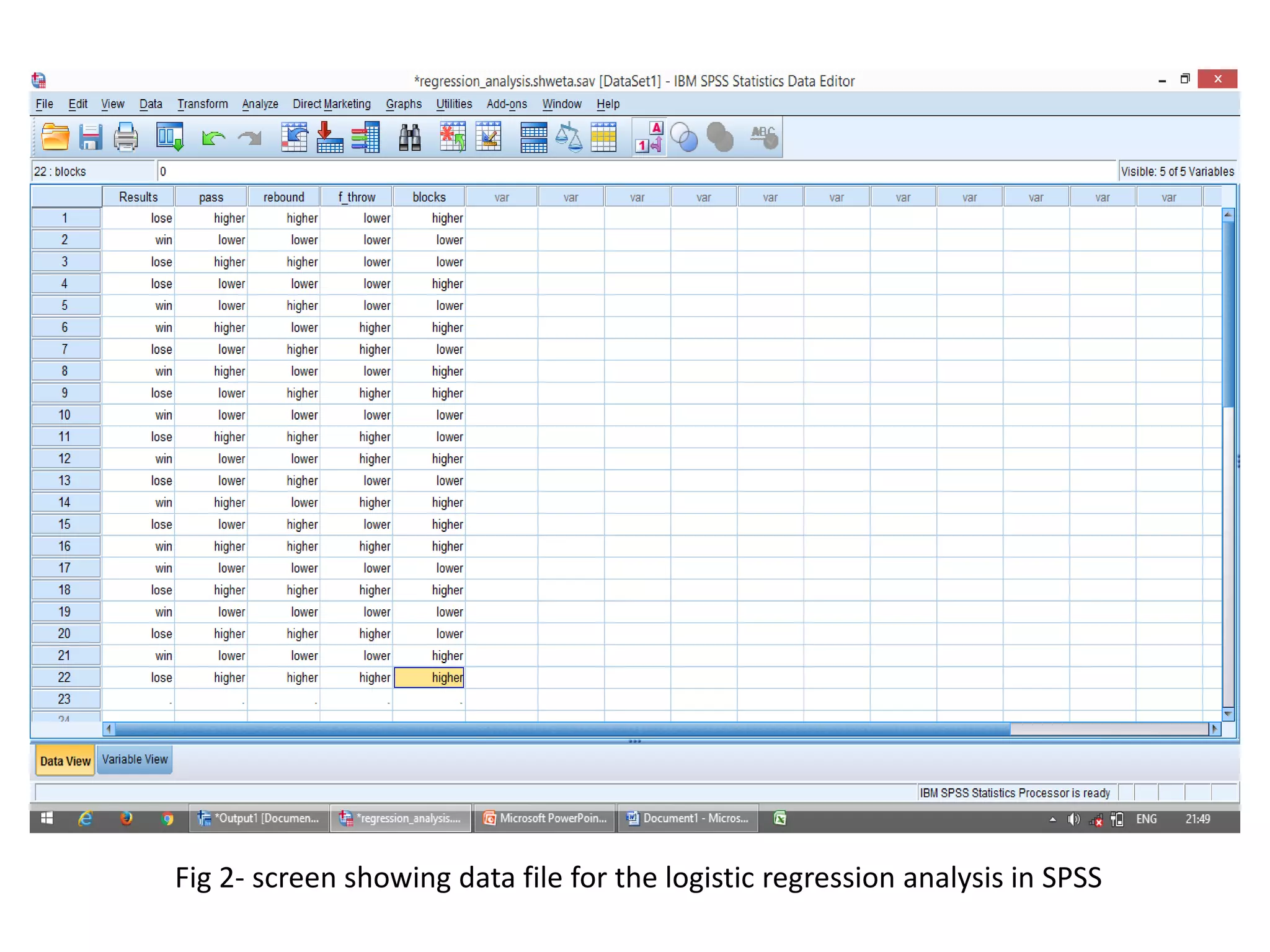

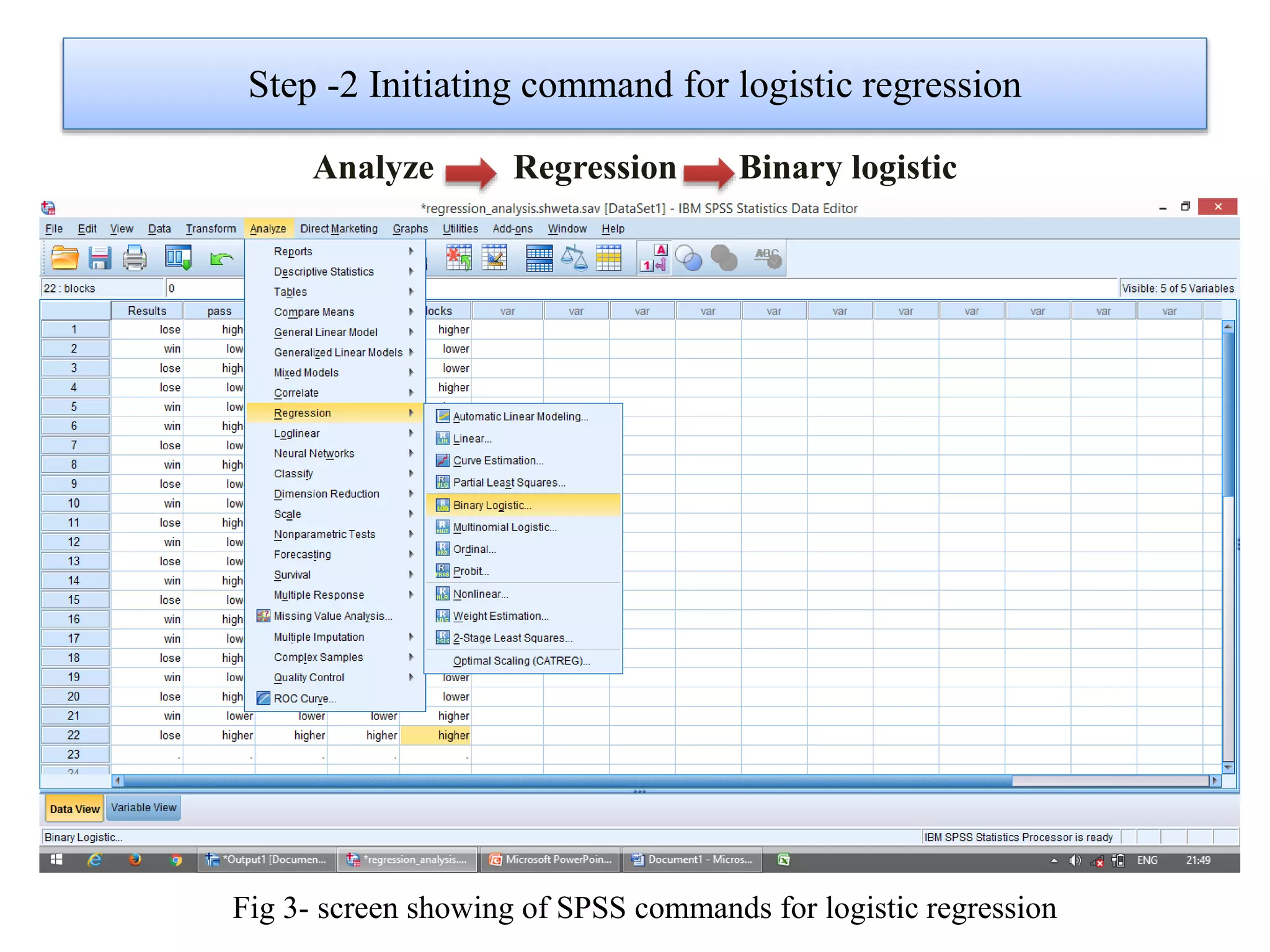

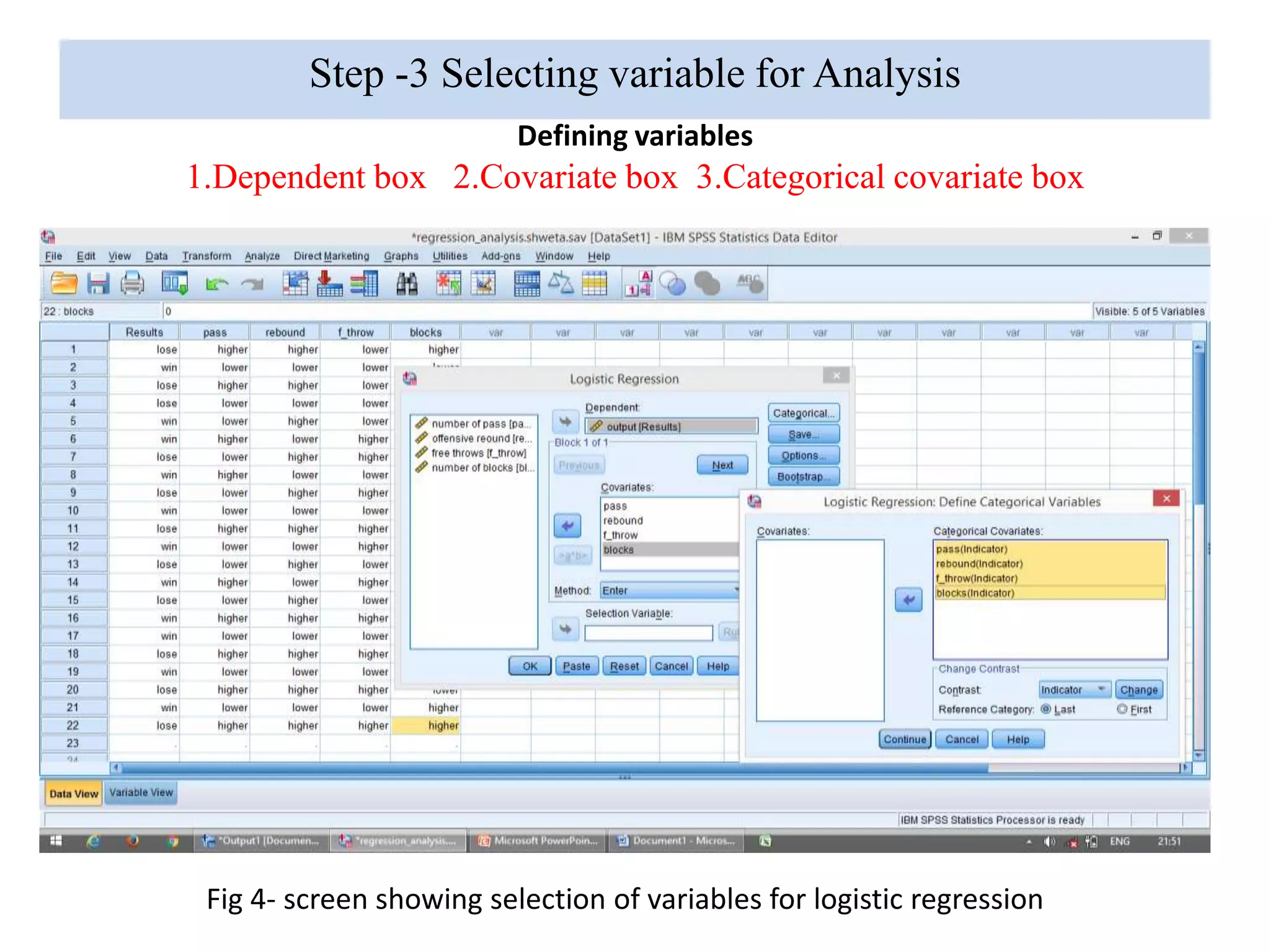

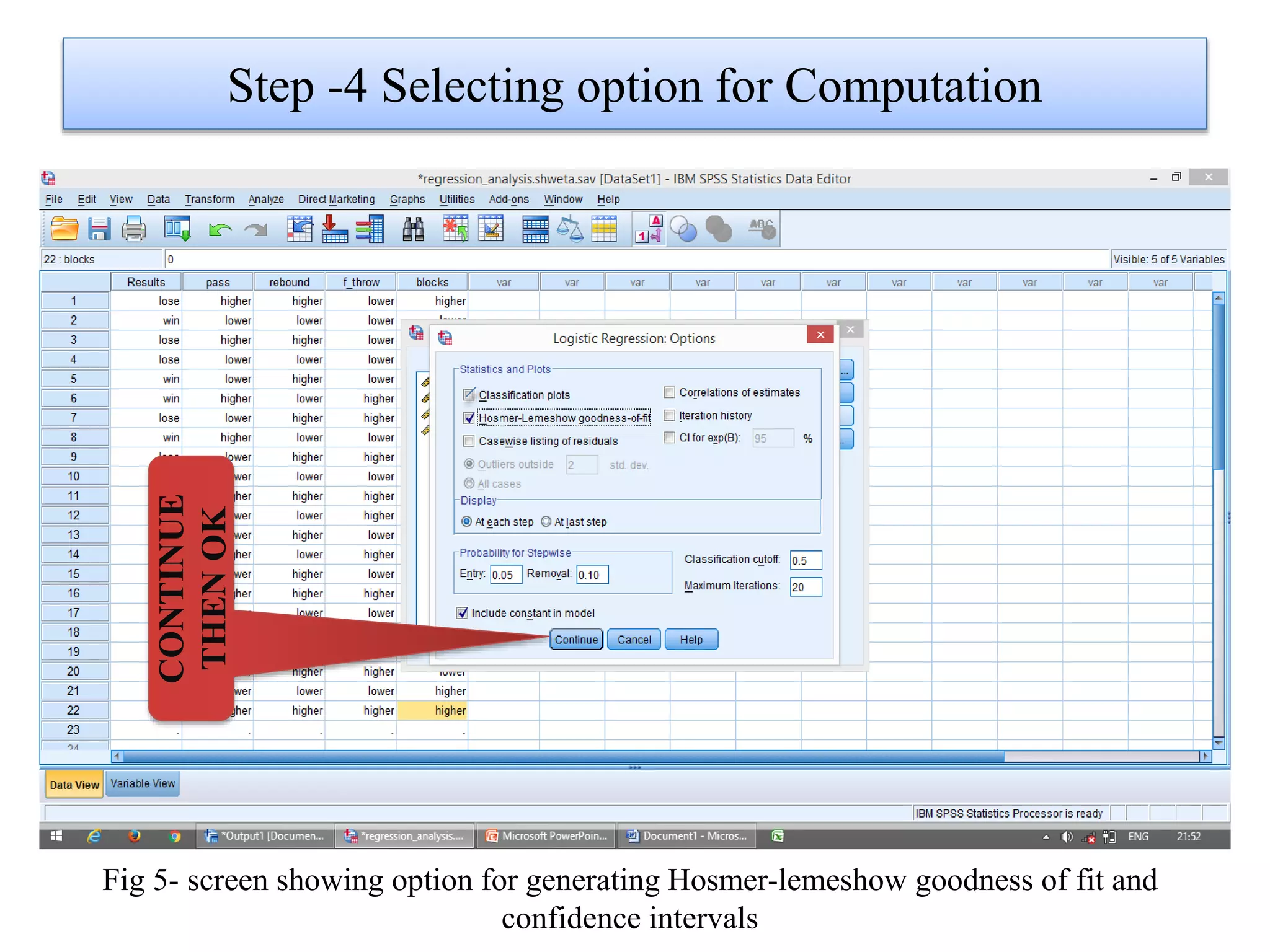

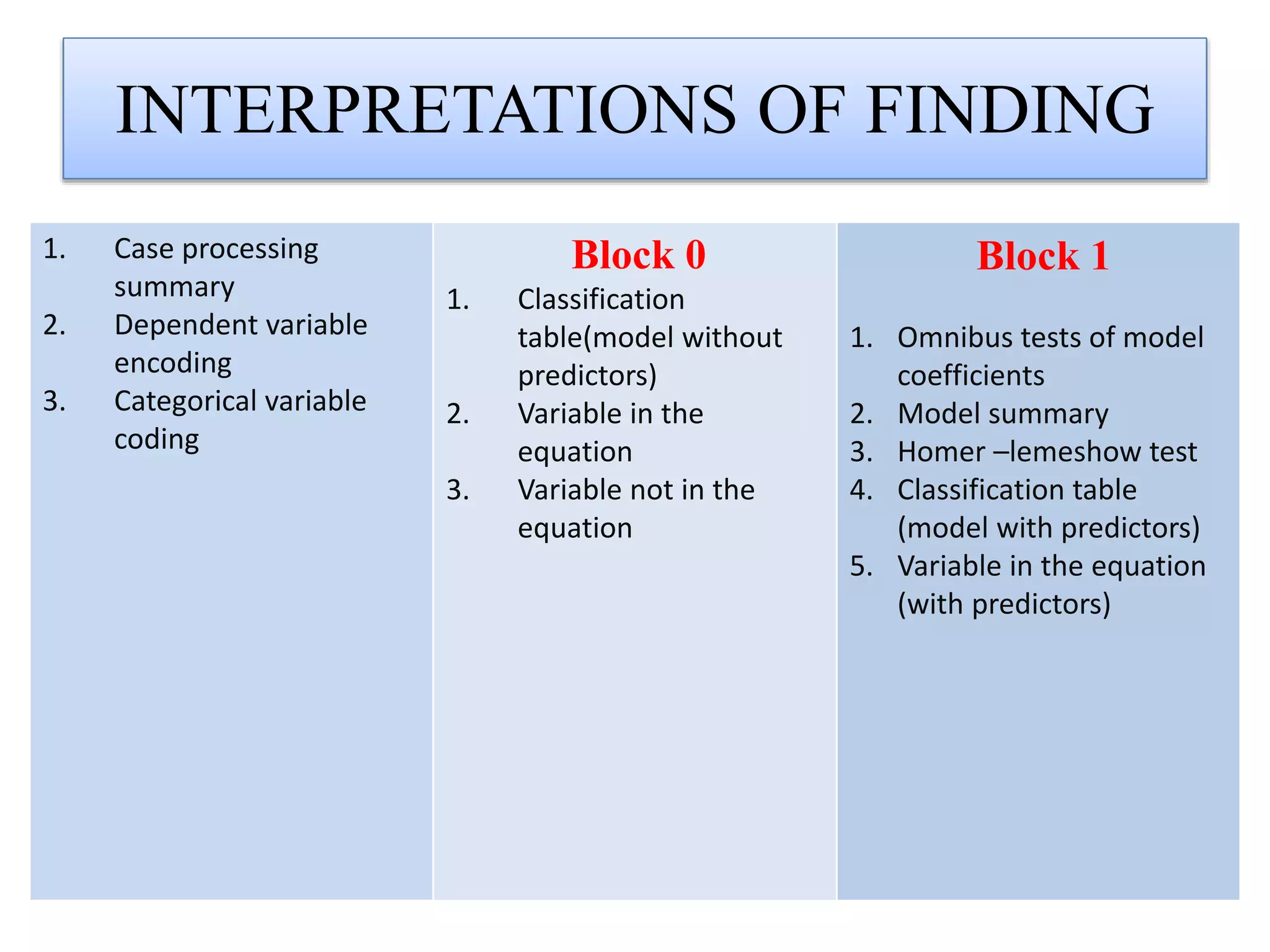

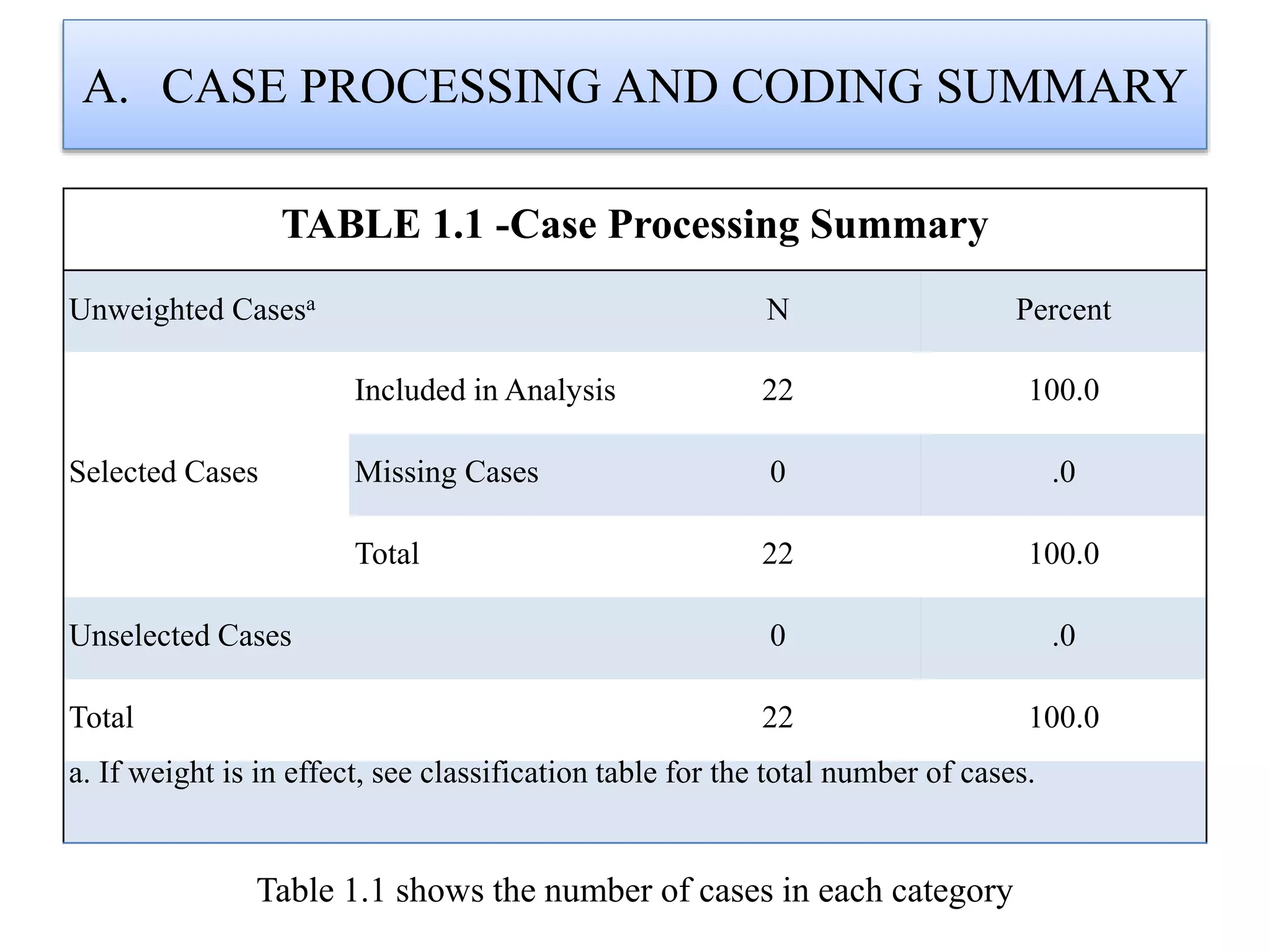

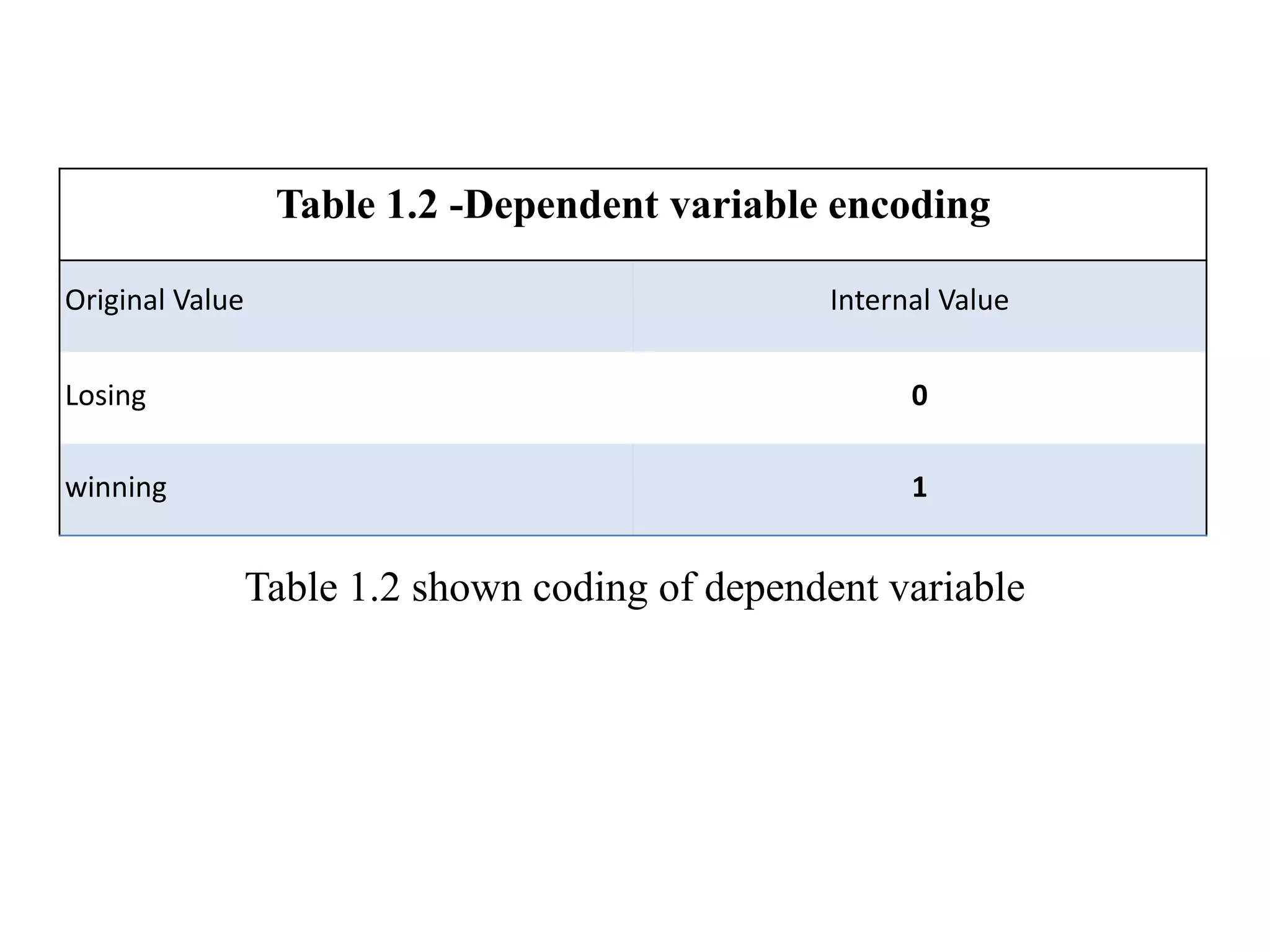

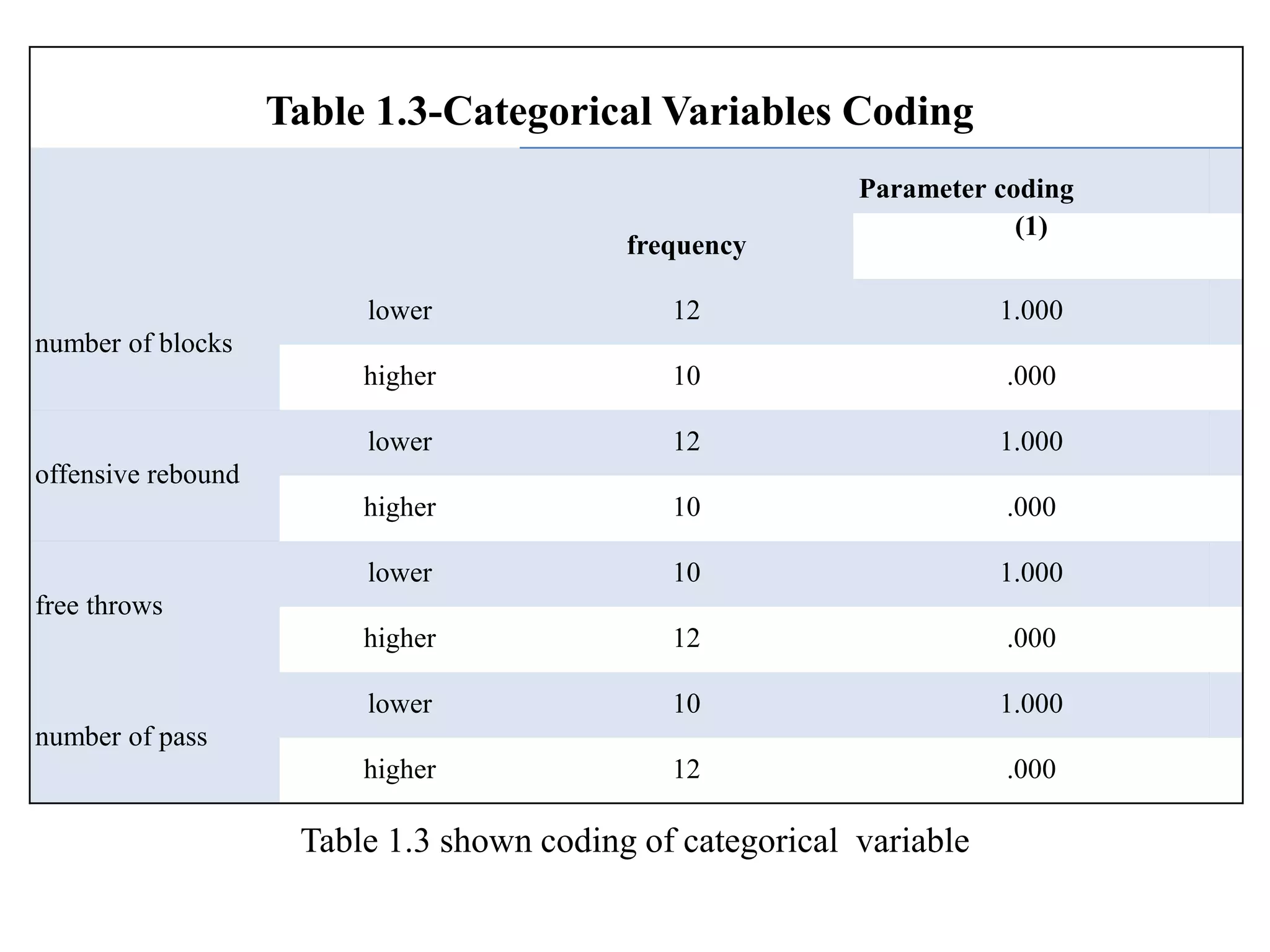

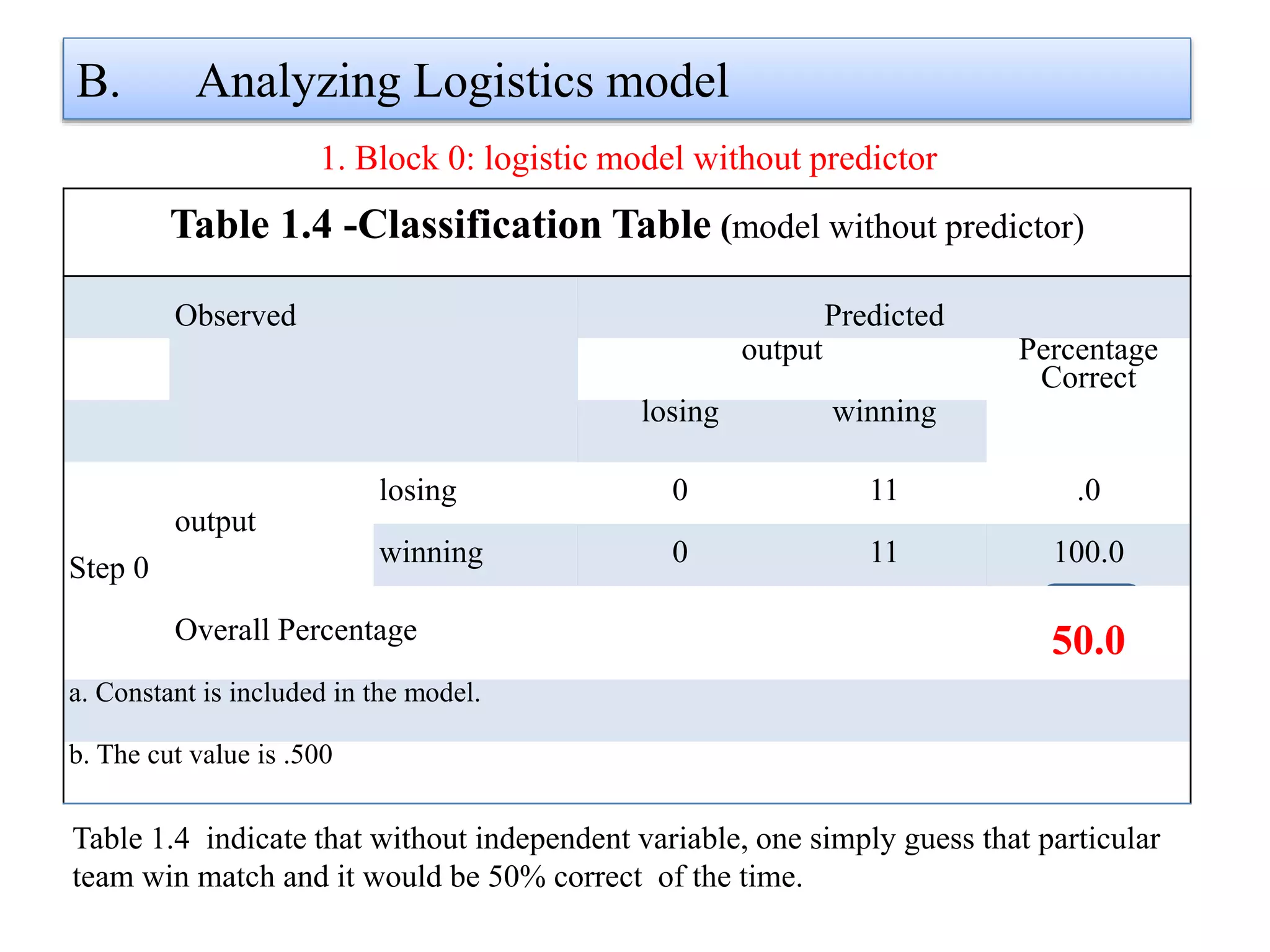

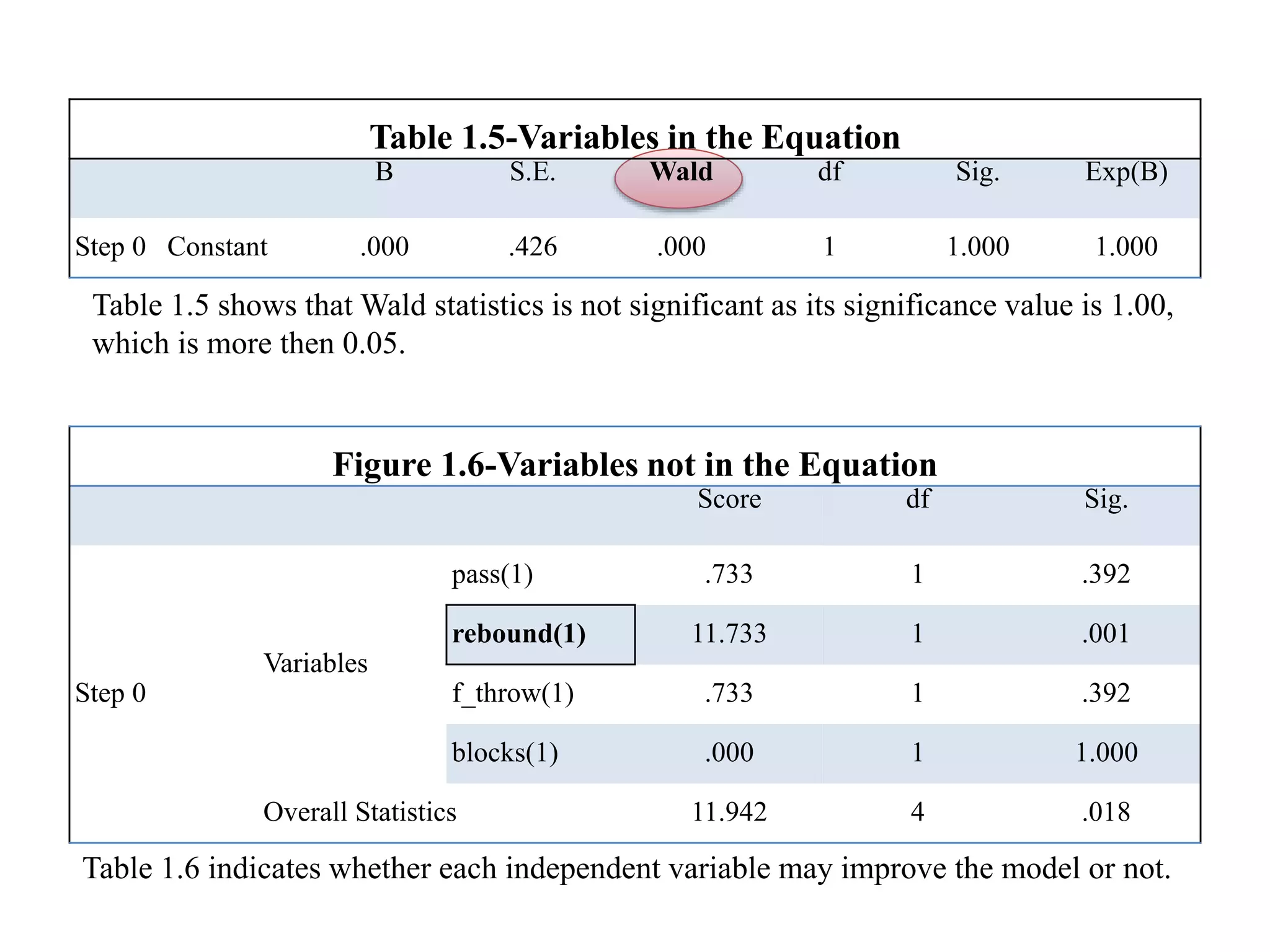

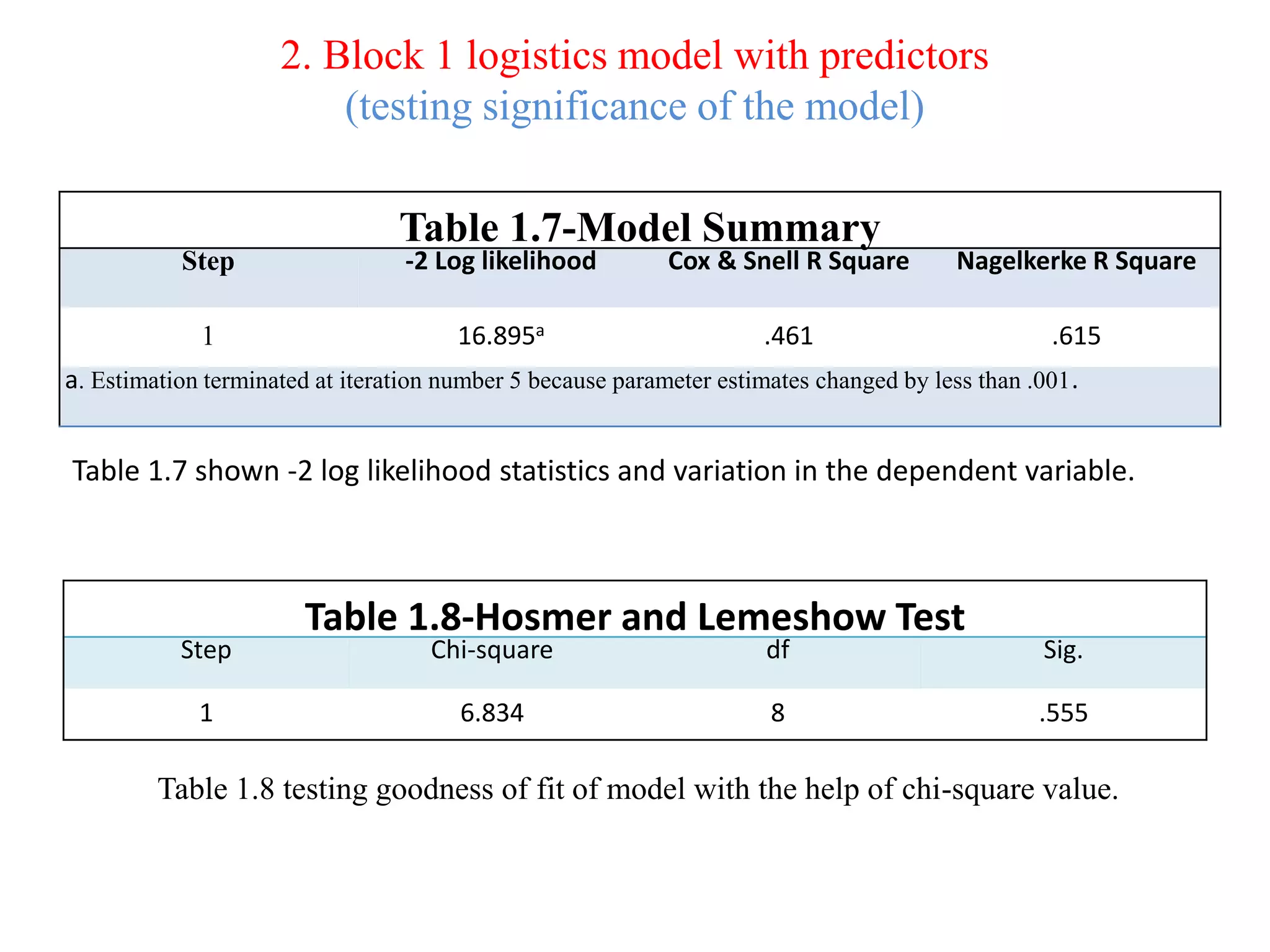

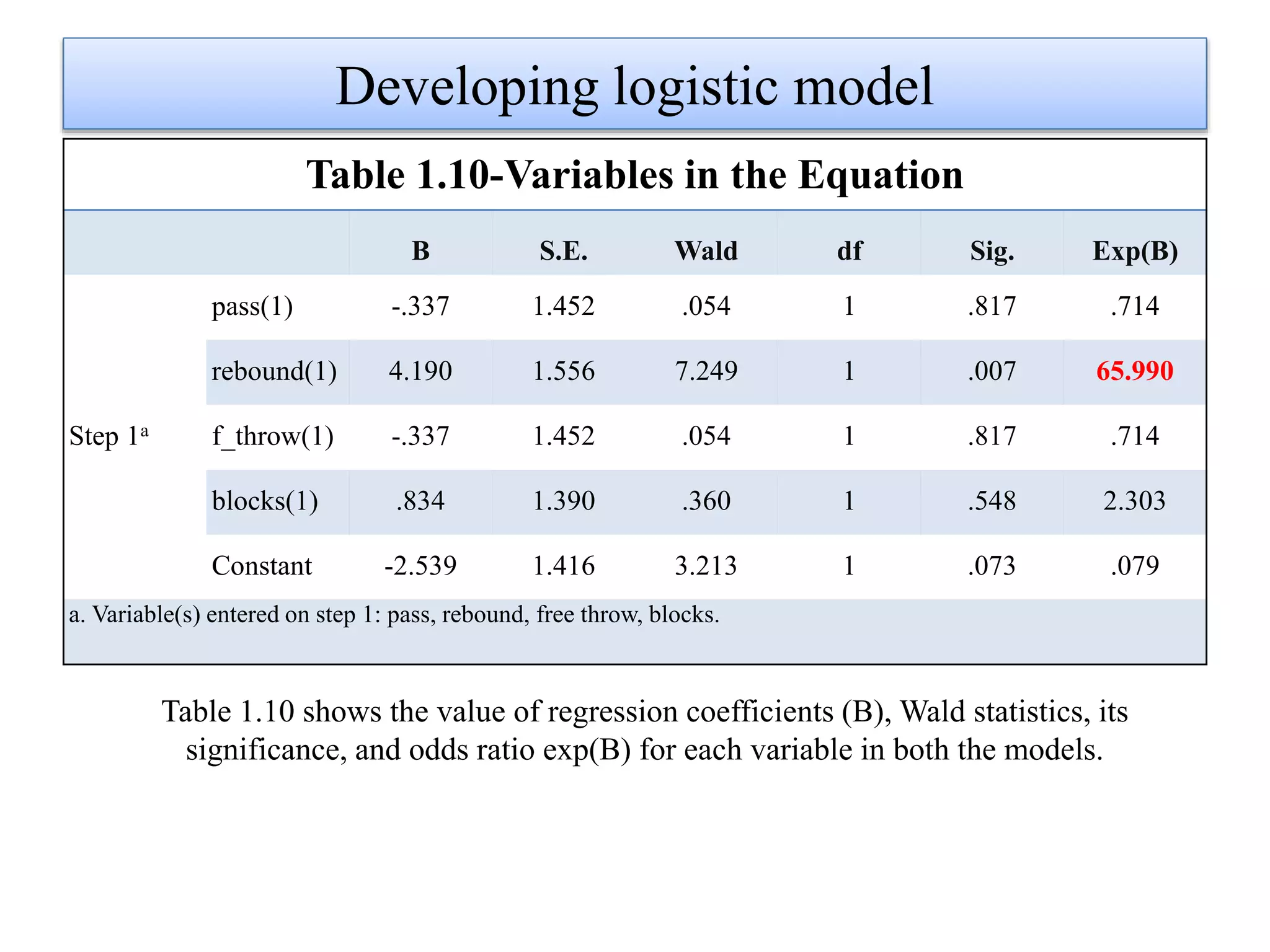

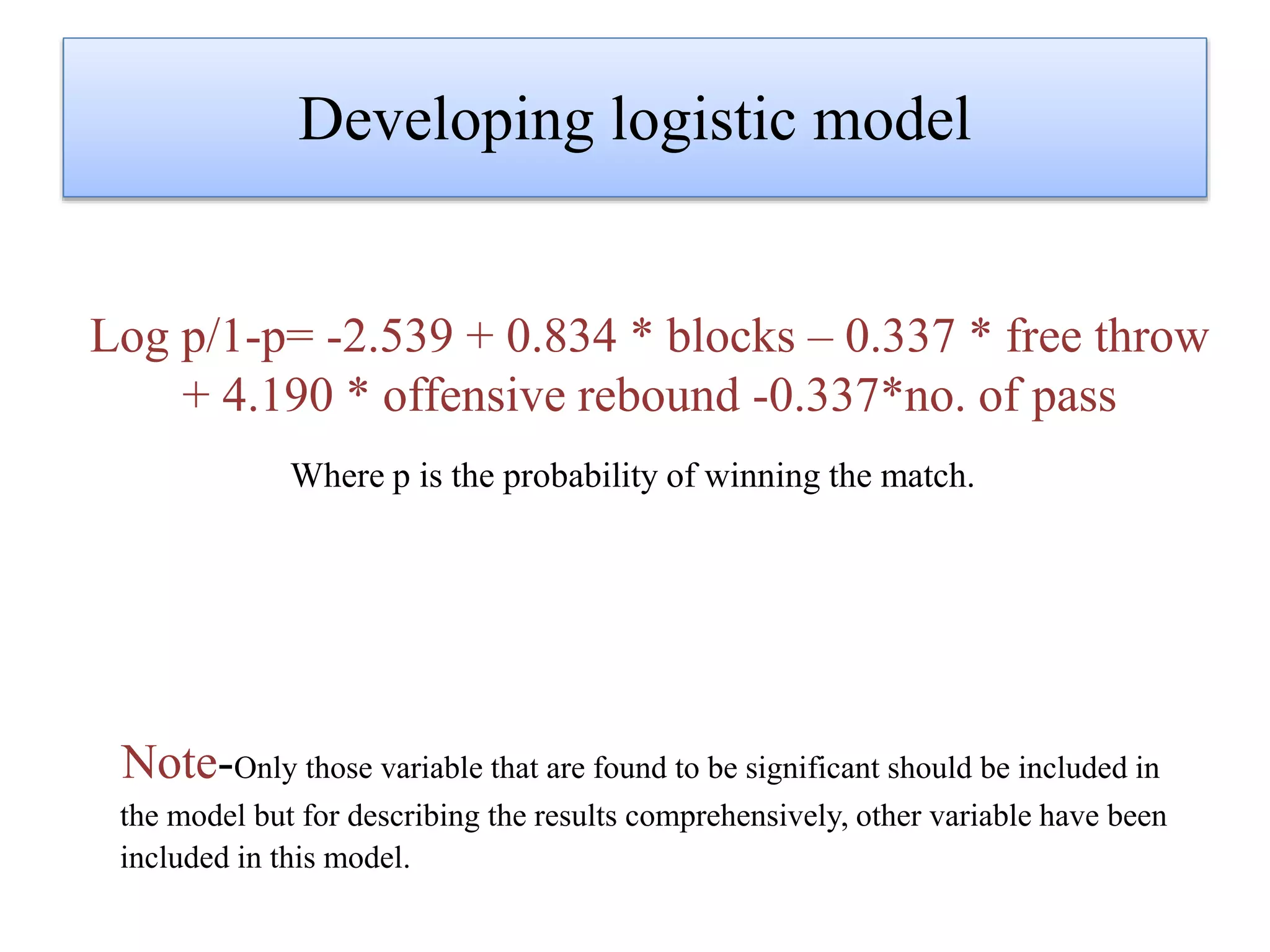

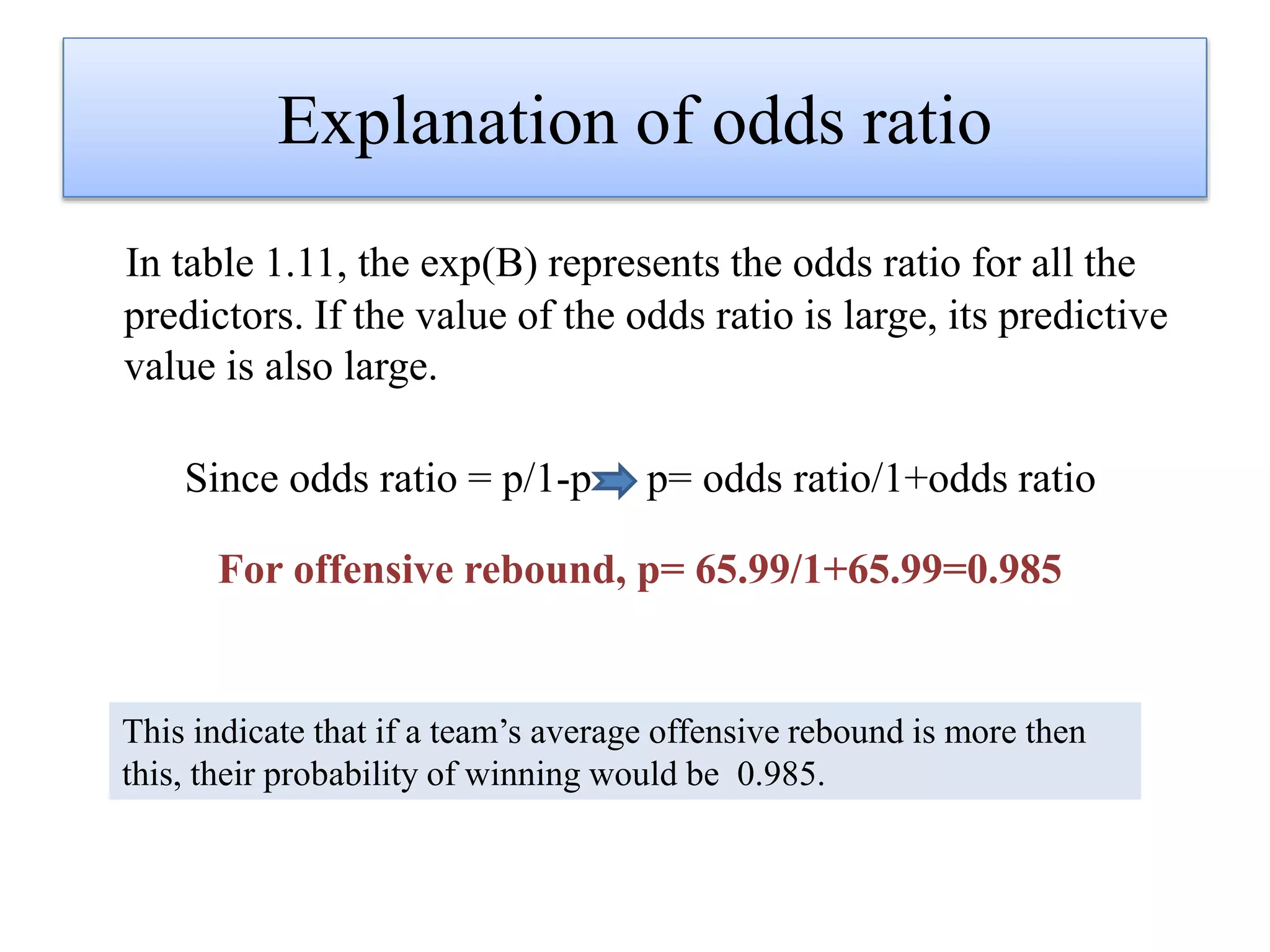

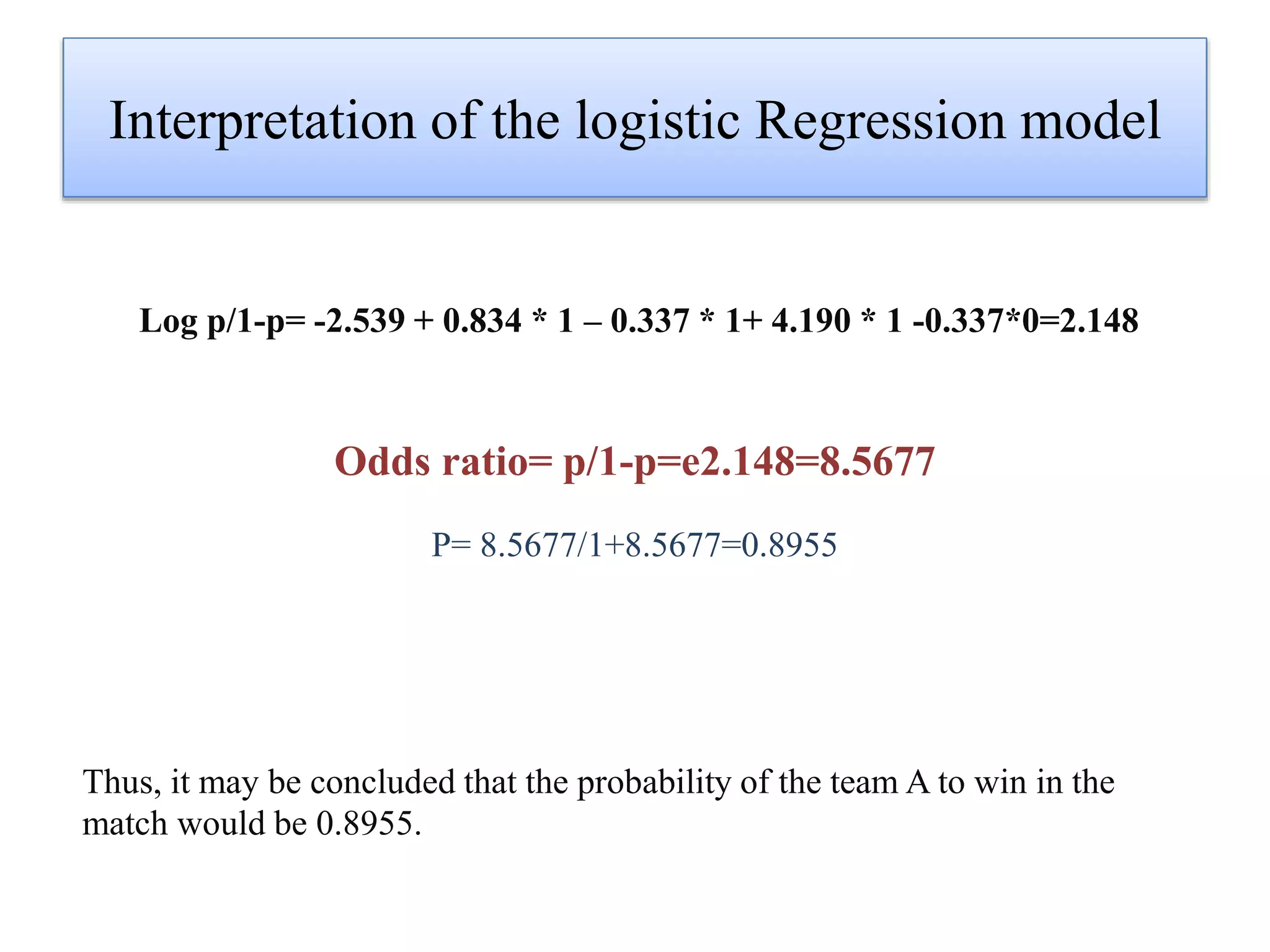

Logistic regression is used to predict a categorical outcome, most commonly a binary one such as win/loss, from one or more independent variables. This document covers the objectives and assumptions of logistic regression, its key terms, and an example application to predicting the outcome of basketball matches. Instead of ordinary least squares, logistic regression uses maximum likelihood estimation: it models the log-odds of the binary dependent variable as a linear function of the independent variables and chooses the coefficients that make the observed outcomes most probable. The illustrated example walks through running a logistic regression in SPSS to predict match results from variables such as passes, rebounds, free throws, and blocks.
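To make "maximum likelihood estimation" concrete, here is a minimal sketch that fits a one-predictor logistic regression by plain gradient ascent on the Bernoulli log-likelihood. It is an illustration under stated assumptions, not a reproduction of what SPSS computes internally: the data are a small made-up toy set (not the basketball data analysed in the document), the predictor is a hypothetical standardized "rebound difference", and the learning rate and iteration count are arbitrary choices that happen to converge for this data.

```python
import math


def sigmoid(z):
    """Logistic function 1 / (1 + e^-z), written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def add_intercept(X):
    """Prepend a constant 1 to each row so beta[0] acts as the intercept."""
    return [[1.0] + list(x) for x in X]


def linear_predictor(beta, row):
    """Log-odds b0 + b1*x1 + ... for one row that already includes the intercept 1."""
    return sum(b * v for b, v in zip(beta, row))


def log_likelihood(beta, X, y):
    """Bernoulli log-likelihood: sum of y*log(p) + (1 - y)*log(1 - p)."""
    total = 0.0
    for row, yi in zip(add_intercept(X), y):
        p = sigmoid(linear_predictor(beta, row))
        total += yi * math.log(p) + (1 - yi) * math.log(1 - p)
    return total


def score(beta, X, y):
    """Gradient of the log-likelihood: sum over observations of (y - p) * x."""
    grad = [0.0] * len(beta)
    for row, yi in zip(add_intercept(X), y):
        p = sigmoid(linear_predictor(beta, row))
        for j, v in enumerate(row):
            grad[j] += (yi - p) * v
    return grad


def fit_logistic(X, y, lr=0.5, n_iter=5000):
    """Maximize the log-likelihood by gradient ascent (a simple stand-in for Newton-type solvers)."""
    beta = [0.0] * (len(X[0]) + 1)
    n = len(y)
    for _ in range(n_iter):
        grad = score(beta, X, y)
        beta = [b + lr * g / n for b, g in zip(beta, grad)]
    return beta


# Hypothetical toy data: one predictor (a made-up standardized rebound difference)
# and a 0/1 outcome (1 = win). The classes overlap, so a finite MLE exists.
X = [[-2.0], [-1.5], [-1.0], [-0.5], [0.0], [0.5], [1.0], [1.5], [2.0], [0.0]]
y = [0, 0, 1, 0, 1, 0, 1, 1, 1, 0]

beta = fit_logistic(X, y)
print("intercept, slope:", beta)
print("odds ratio per unit increase in x:", math.exp(beta[1]))
print("P(win | x = 1.0):", sigmoid(linear_predictor(beta, [1.0, 1.0])))
```

At the fitted coefficients the score (gradient) is essentially zero, which is the defining condition of the maximum likelihood estimate. Exponentiating a slope gives the odds ratio, i.e. the multiplicative change in the odds of the outcome per one-unit increase in that predictor. Gradient ascent was chosen only because it is short and transparent; production tools typically use faster Newton-type iterations.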