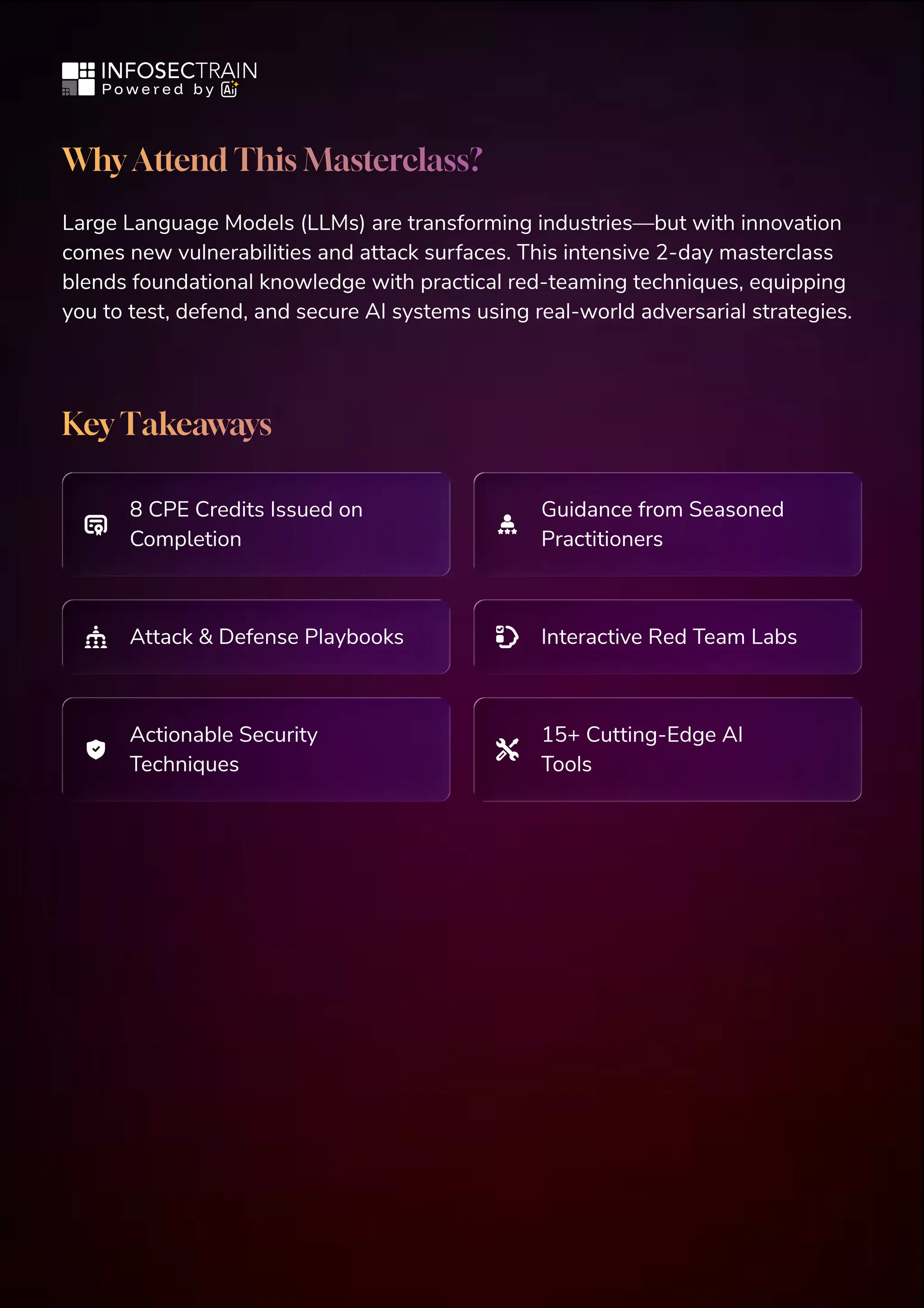

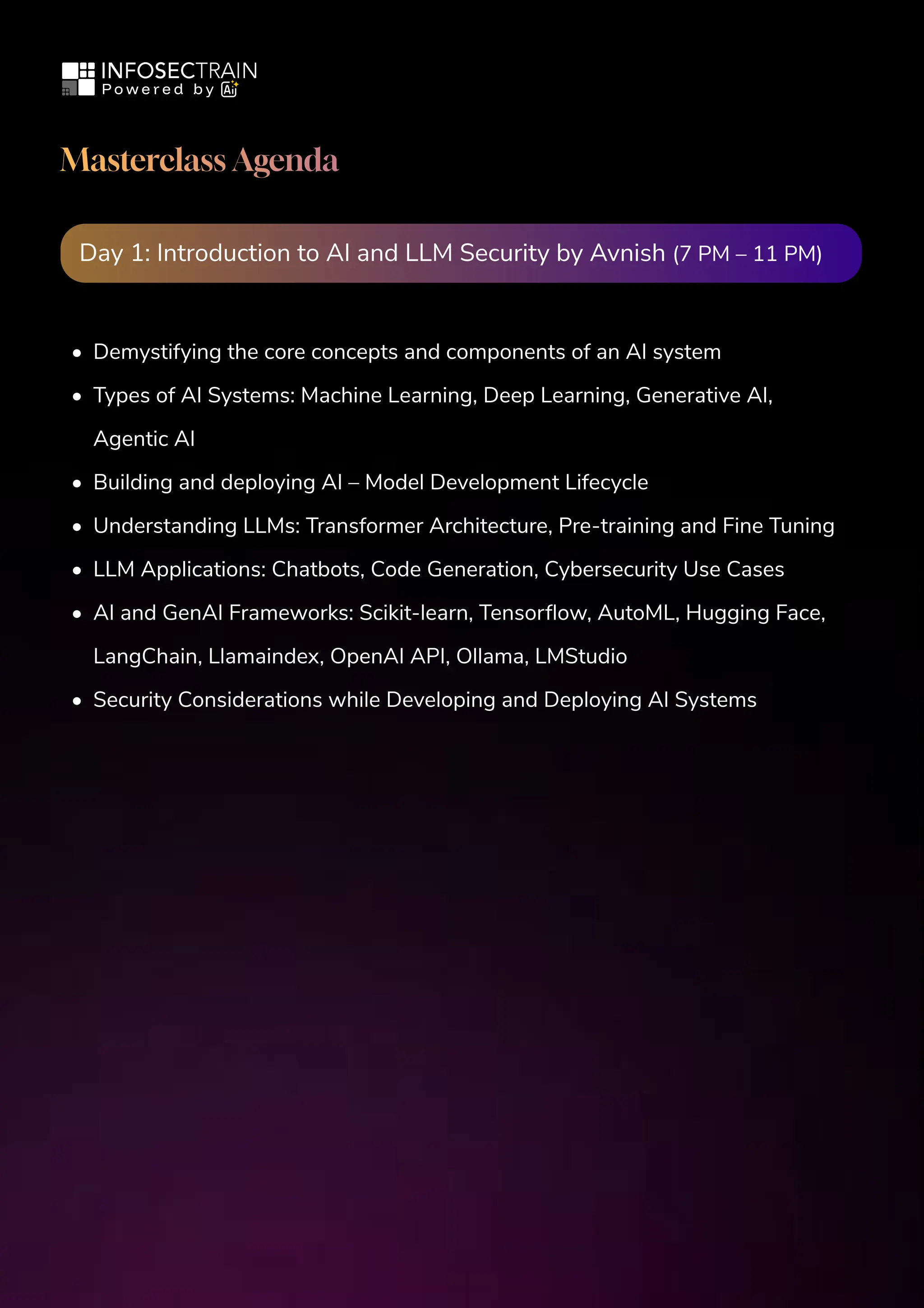

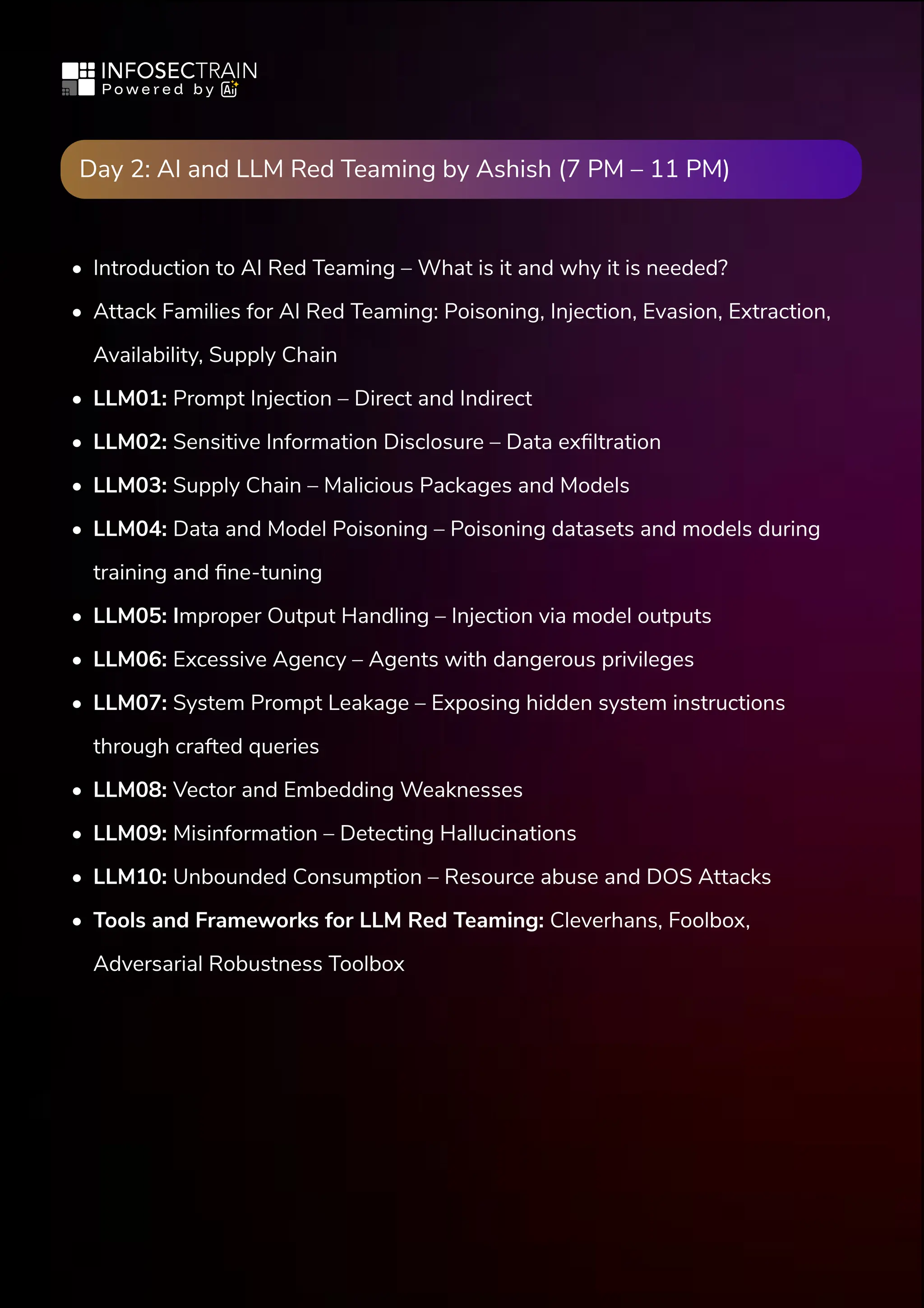

🔥 Ready to go hands-on with **LLM Red Teaming**? Join the **LLM Security & Red Teaming Masterclass**: the ultimate 2-day deep dive into **attacking & defending AI systems**.

🗓️ Nov 1–2, 2025 | 🕖 7–11 PM IST | 💻 Online

💥 Early Bird: ₹999 + GST / $75 USD

Get trained by industry experts Avnish Naithani & Ashish Dhyani and master 15+ AI security tools. Earn **6 CPE credits** and take home **LLM attack & defense playbooks** that actually work.

Limited seats, so don’t miss out!

📩 For Queries: sales@infosectrain.com