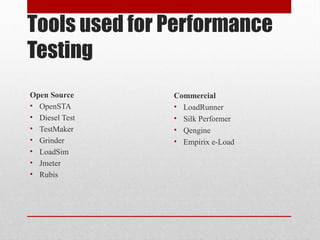

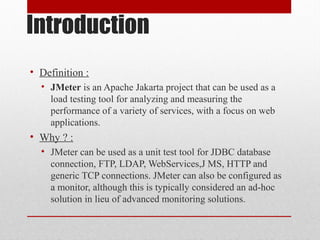

- JMeter is an open-source load testing tool for web applications and other services. It simulates real user load on a system by running virtual users, each implemented as a thread.

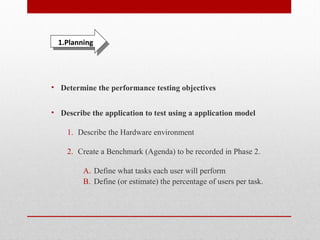

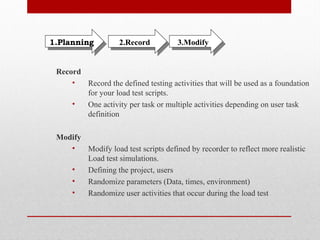

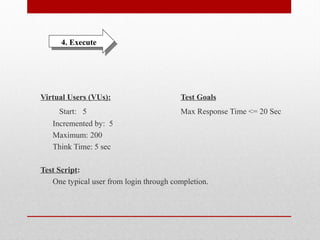

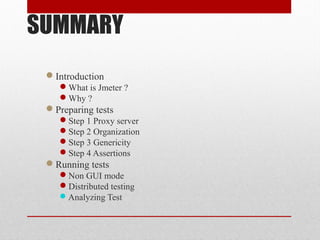

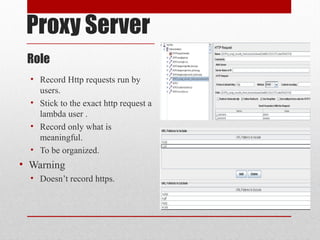

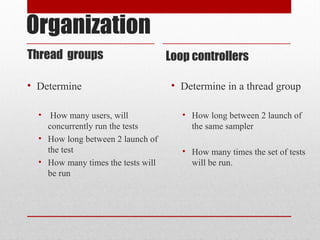

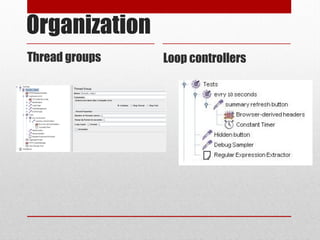

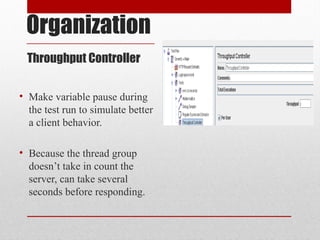

- JMeter tests can be prepared by recording HTTP requests through JMeter's proxy server. The recorded requests are organized into thread groups, with loop counts controlling how often each user repeats them, to simulate different user behaviors and load levels.
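The thread-group-and-loop model can be illustrated outside JMeter. The following is a minimal Python sketch, not JMeter code: `run_thread_group` and `fake_request` are hypothetical names, and `fake_request` stands in for one recorded HTTP request. It assumes the ramp-up period is spread evenly across thread starts, so the total sample count is threads × loops.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


def run_thread_group(sampler, num_threads, loop_count, ramp_up_seconds=0.0):
    """Run `sampler` loop_count times in each of num_threads virtual users.

    Assumes num_threads >= 1. Returns one tuple per sample:
    (user_index, iteration, ok, elapsed_ms).
    """
    results = []
    lock = threading.Lock()
    # Spread the thread starts evenly over the ramp-up period.
    start_gap = ramp_up_seconds / num_threads

    def virtual_user(user_index):
        time.sleep(user_index * start_gap)  # staggered start
        for iteration in range(loop_count):
            started = time.perf_counter()
            ok = sampler(user_index, iteration)
            elapsed_ms = (time.perf_counter() - started) * 1000.0
            with lock:  # the results list is shared between threads
                results.append((user_index, iteration, ok, elapsed_ms))

    with ThreadPoolExecutor(max_workers=num_threads) as pool:
        # Consuming the map waits for every virtual user to finish.
        list(pool.map(virtual_user, range(num_threads)))
    return results


def fake_request(user_index, iteration):
    """Hypothetical stand-in for one recorded HTTP request."""
    time.sleep(0.001)  # pretend the server took about 1 ms
    return True


samples = run_thread_group(
    fake_request, num_threads=5, loop_count=3, ramp_up_seconds=0.05
)
print(len(samples))  # 5 users x 3 loops = 15 samples
```

Raising `num_threads` increases concurrency, while raising `loop_count` lengthens each user's session; the two settings vary the load in different ways.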

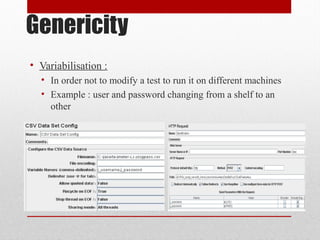

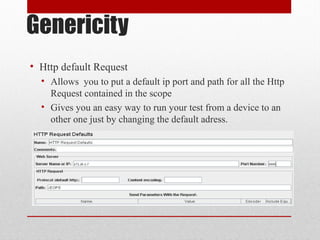

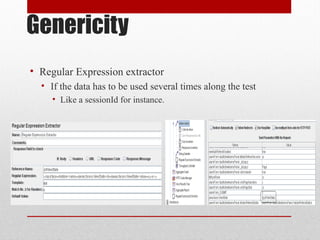

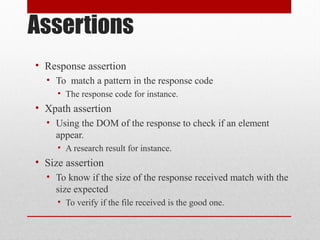

- Tests can be made generic by using variables and default values, so the same test plan can run against different environments without modification. Assertions are added to validate the responses.
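The idea of defaults plus per-environment overrides, followed by a response check, can be sketched in a few lines of Python. This is only a conceptual mirror of what JMeter does with variables and assertions: the names `DEFAULTS`, `resolve_settings`, `build_url`, and `check_response`, as well as the host names, are assumptions made for illustration.

```python
# Hypothetical default values; an environment overrides only what differs.
DEFAULTS = {"protocol": "http", "host": "localhost", "port": 8080}


def resolve_settings(defaults, overrides=None):
    """Return the defaults with any per-environment overrides applied."""
    merged = dict(defaults)
    merged.update(overrides or {})
    return merged


def build_url(settings, path):
    """Build a request URL from variables instead of hard-coded values."""
    return f"{settings['protocol']}://{settings['host']}:{settings['port']}{path}"


def check_response(status_code, body, expected_status=200, must_contain=None):
    """Return a list of assertion failures (an empty list means success)."""
    failures = []
    if status_code != expected_status:
        failures.append(f"expected status {expected_status}, got {status_code}")
    if must_contain is not None and must_contain not in body:
        failures.append(f"response body does not contain {must_contain!r}")
    return failures


# The same "test" pointed at two environments.
local = resolve_settings(DEFAULTS)
staging = resolve_settings(DEFAULTS, {"host": "staging.example.com"})
print(build_url(local, "/login"))
print(build_url(staging, "/login"))
print(check_response(200, "Welcome back", must_contain="Welcome"))
```

Keeping environment details out of the individual requests means that switching targets only requires changing the overrides, not the requests themselves.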

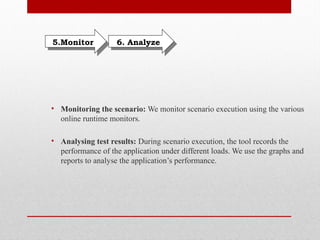

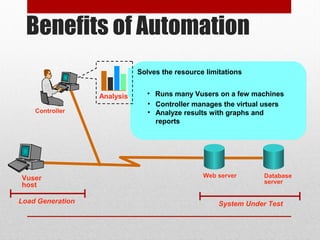

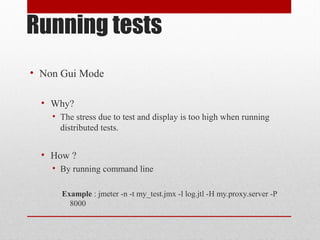

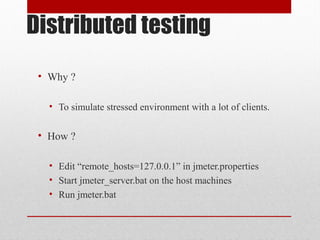

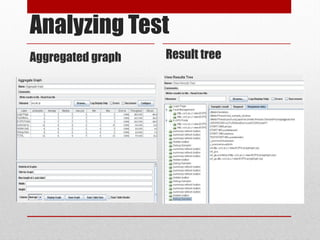

- For load testing, tests are run in non-GUI mode, and they can be distributed across multiple machines to generate high user loads. Test results are analyzed with listeners such as the Aggregate Graph and the View Results Tree.
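As a rough sketch of this workflow, the Python below assembles a non-GUI command line and then aggregates a CSV results file per request label. The `-n`, `-t`, `-l`, and `-R` options are JMeter's non-GUI, test plan, results log, and remote hosts flags. The helper names, file names, host names, and sample rows are invented for illustration, and the results are assumed to be saved as CSV with `label`, `elapsed`, and `success` columns.

```python
import csv
import io
from collections import defaultdict
from statistics import mean, median


def build_jmeter_command(test_plan, results_file, remote_hosts=None):
    """Assemble a non-GUI JMeter command line (it is not executed here)."""
    cmd = ["jmeter", "-n", "-t", test_plan, "-l", results_file]
    if remote_hosts:
        # Distributed run: -R takes a comma-separated list of remote hosts.
        cmd += ["-R", ",".join(remote_hosts)]
    return cmd


def aggregate(csv_text):
    """Summarize CSV results per label, similar in spirit to an aggregate report."""
    by_label = defaultdict(list)
    for row in csv.DictReader(io.StringIO(csv_text)):
        by_label[row["label"]].append(
            (int(row["elapsed"]), row["success"] == "true")
        )
    report = {}
    for label, samples in by_label.items():
        times = [elapsed for elapsed, _ in samples]
        errors = sum(1 for _, ok in samples if not ok)
        report[label] = {
            "samples": len(samples),
            "average_ms": mean(times),
            "median_ms": median(times),
            "max_ms": max(times),
            "error_pct": 100.0 * errors / len(samples),
        }
    return report


# Invented sample rows, not real measurements.
SAMPLE_RESULTS = """timeStamp,elapsed,label,responseCode,success
1700000000000,120,Login,200,true
1700000000100,180,Login,200,true
1700000000200,300,Login,500,false
1700000000300,90,Home,200,true
"""

command = build_jmeter_command(
    "plan.jmx", "results.jtl", remote_hosts=["load1", "load2"]
)
print(" ".join(command))
report = aggregate(SAMPLE_RESULTS)
for label, stats in report.items():
    print(label, stats)
```

Aggregating from the results file rather than from GUI listeners fits the non-GUI workflow, since the file is the only output a headless run produces.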