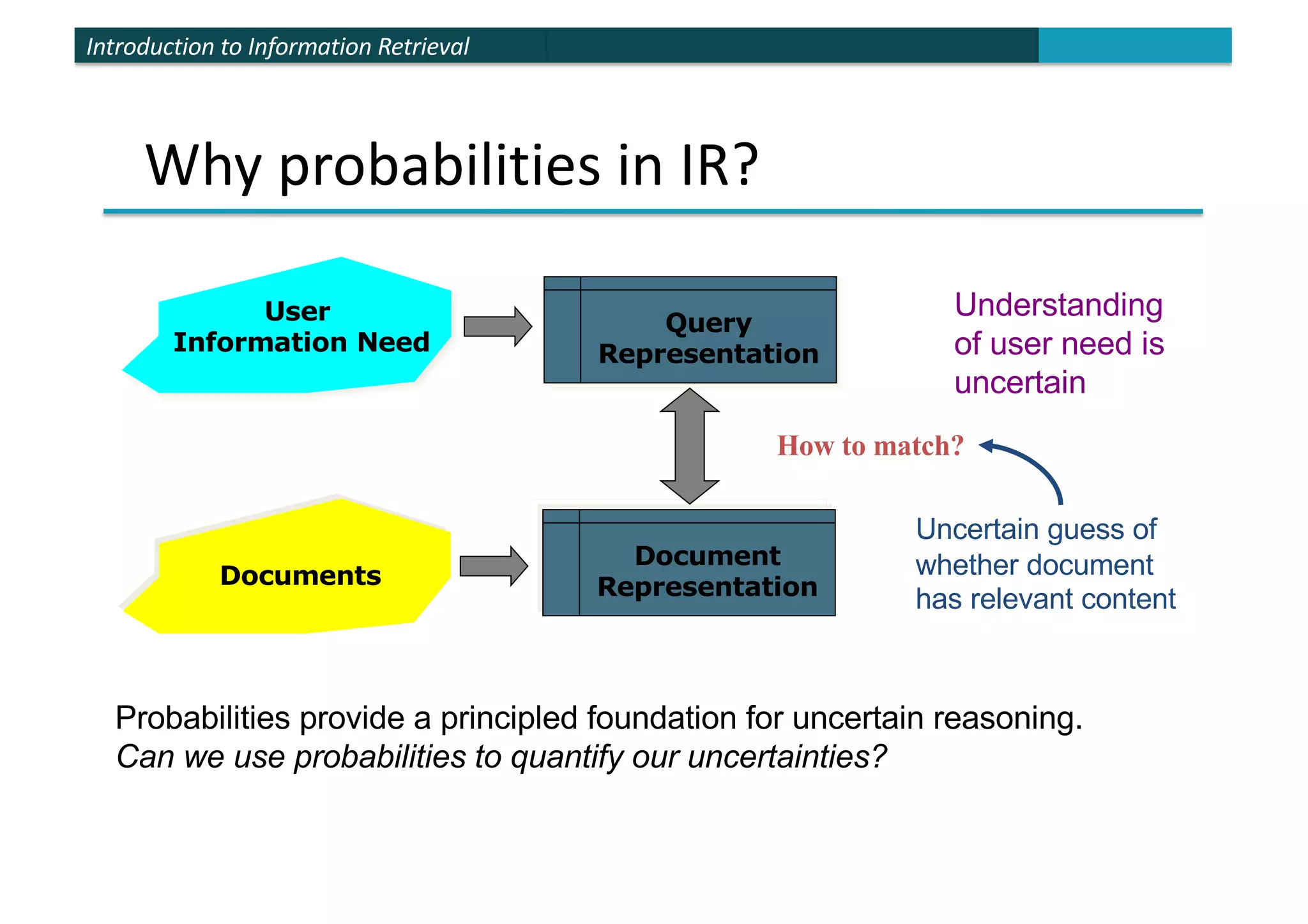

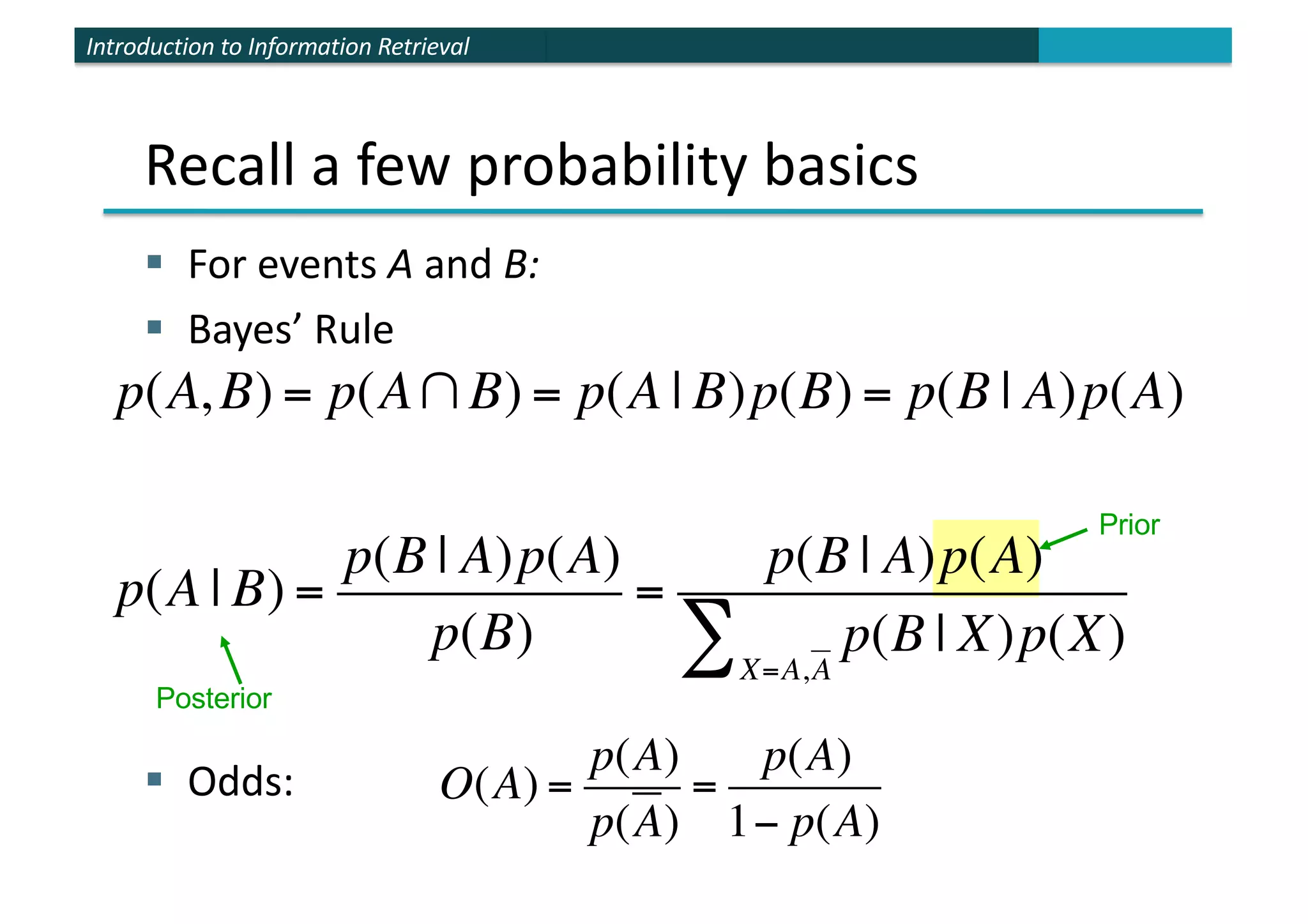

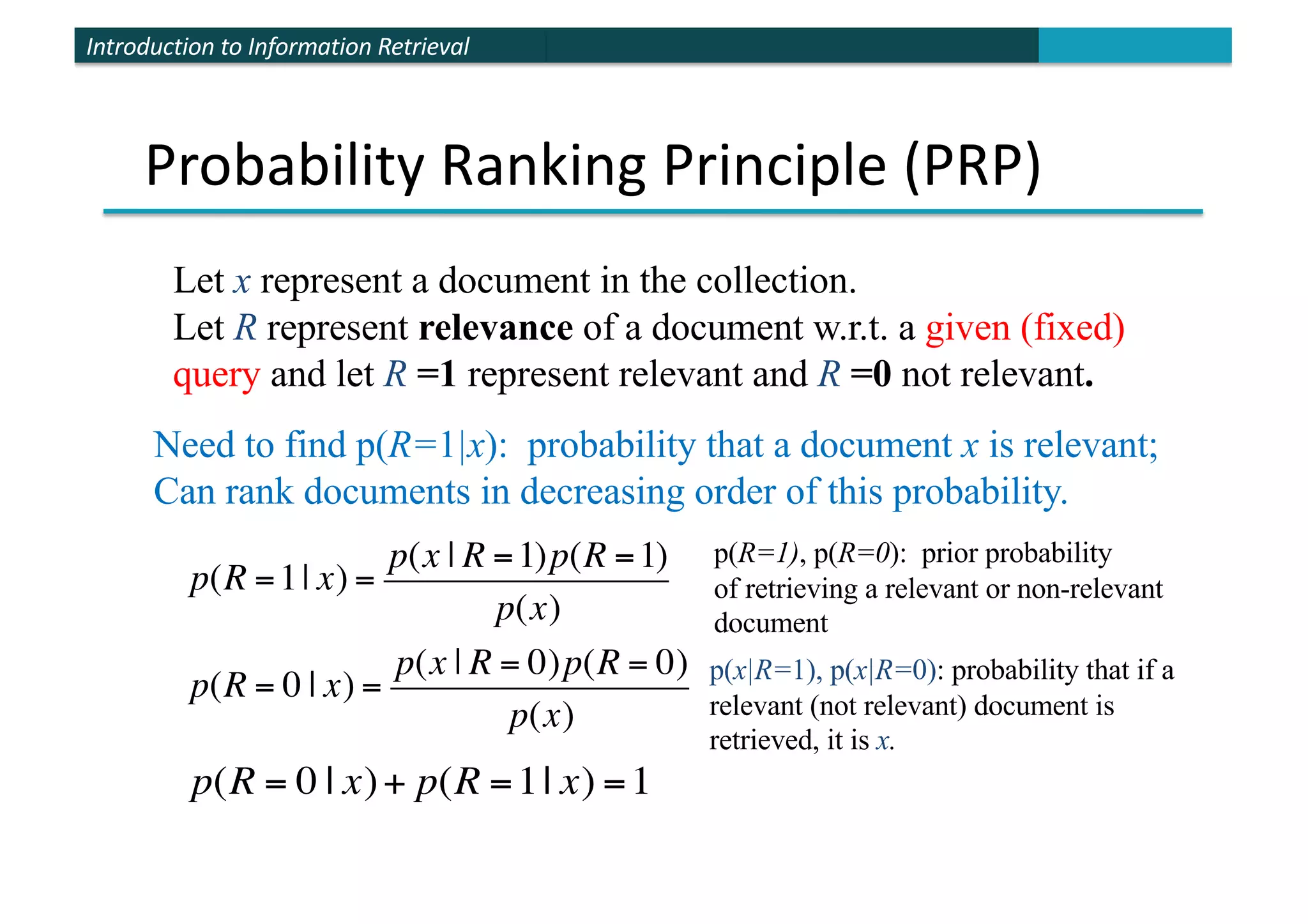

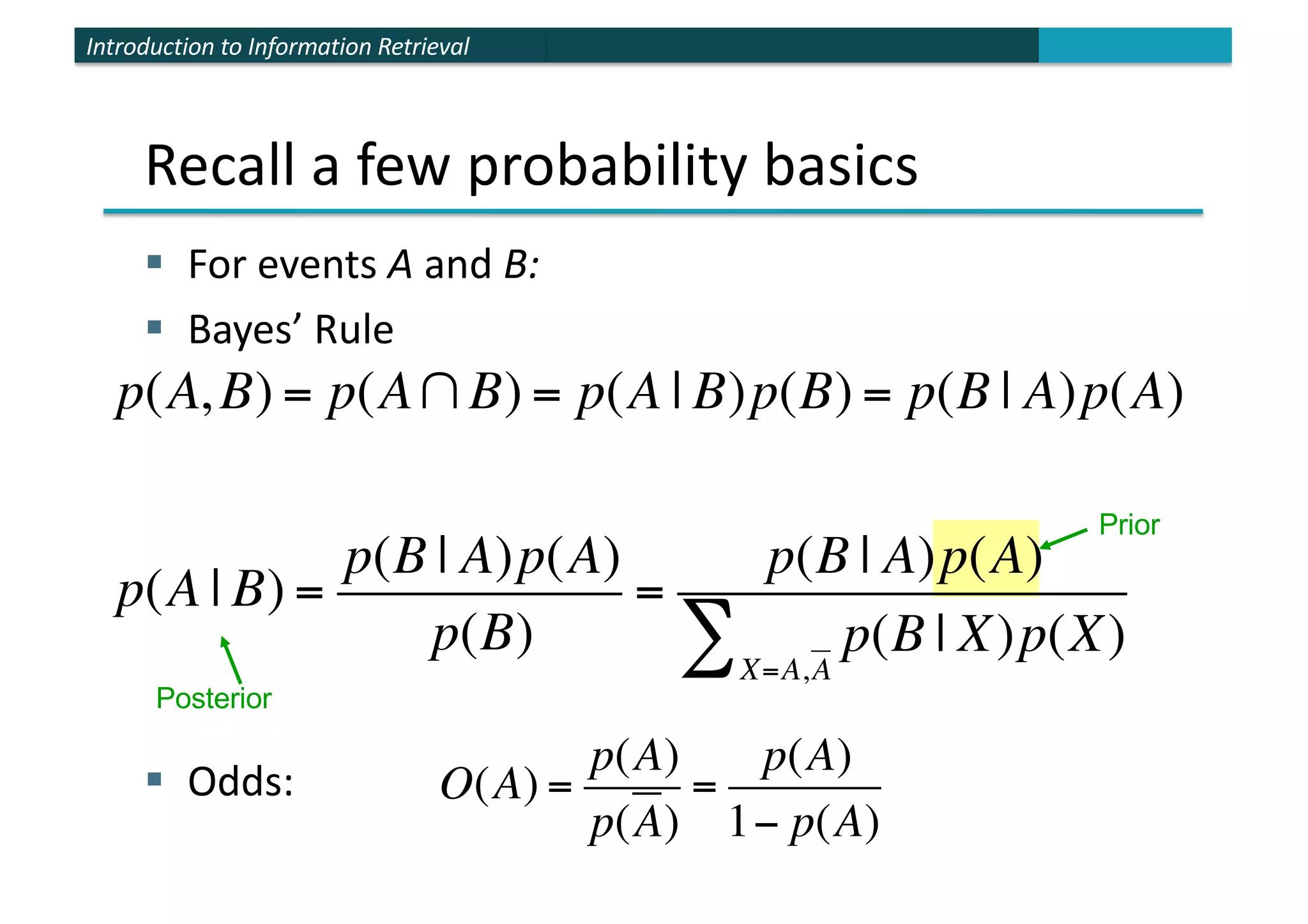

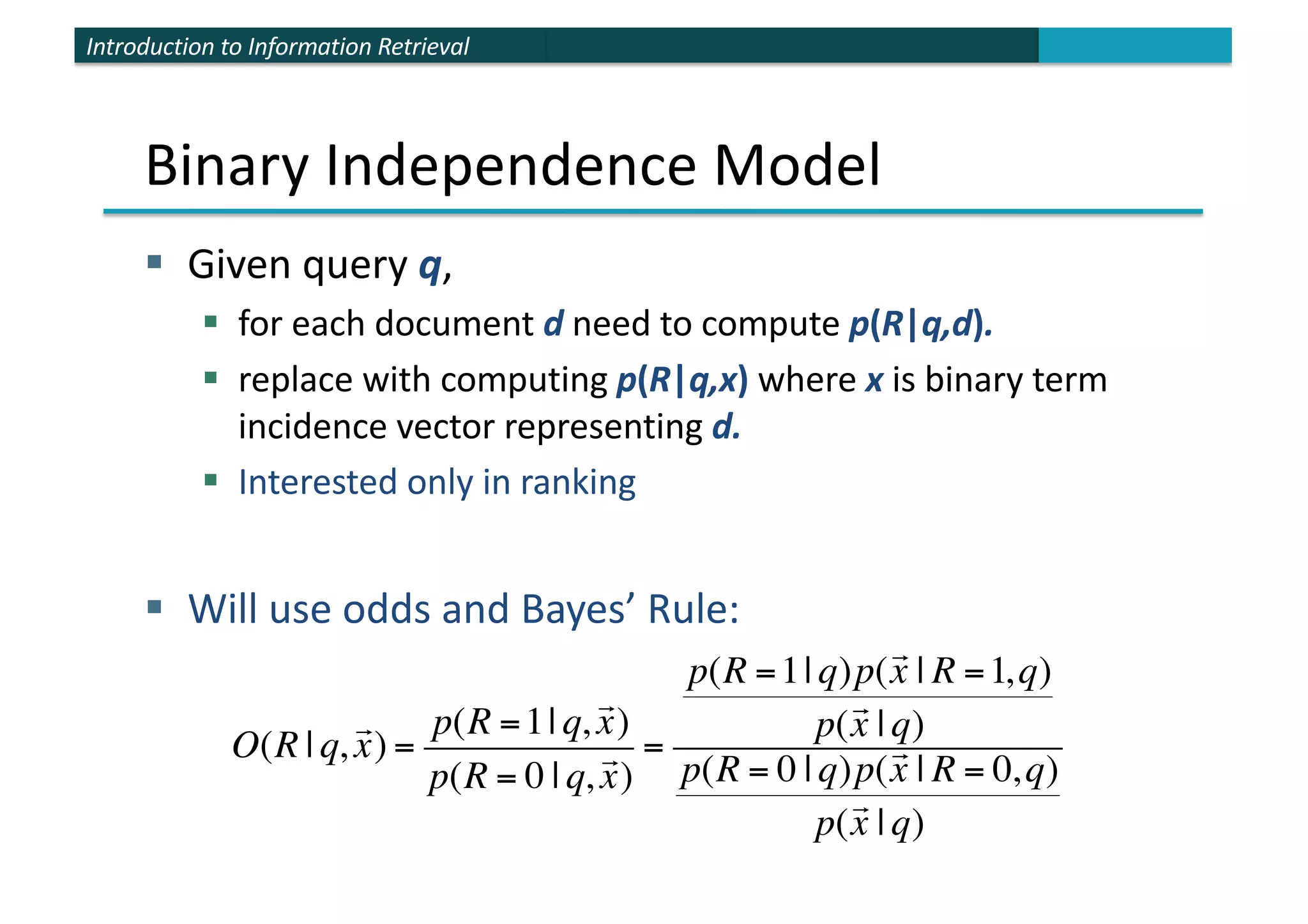

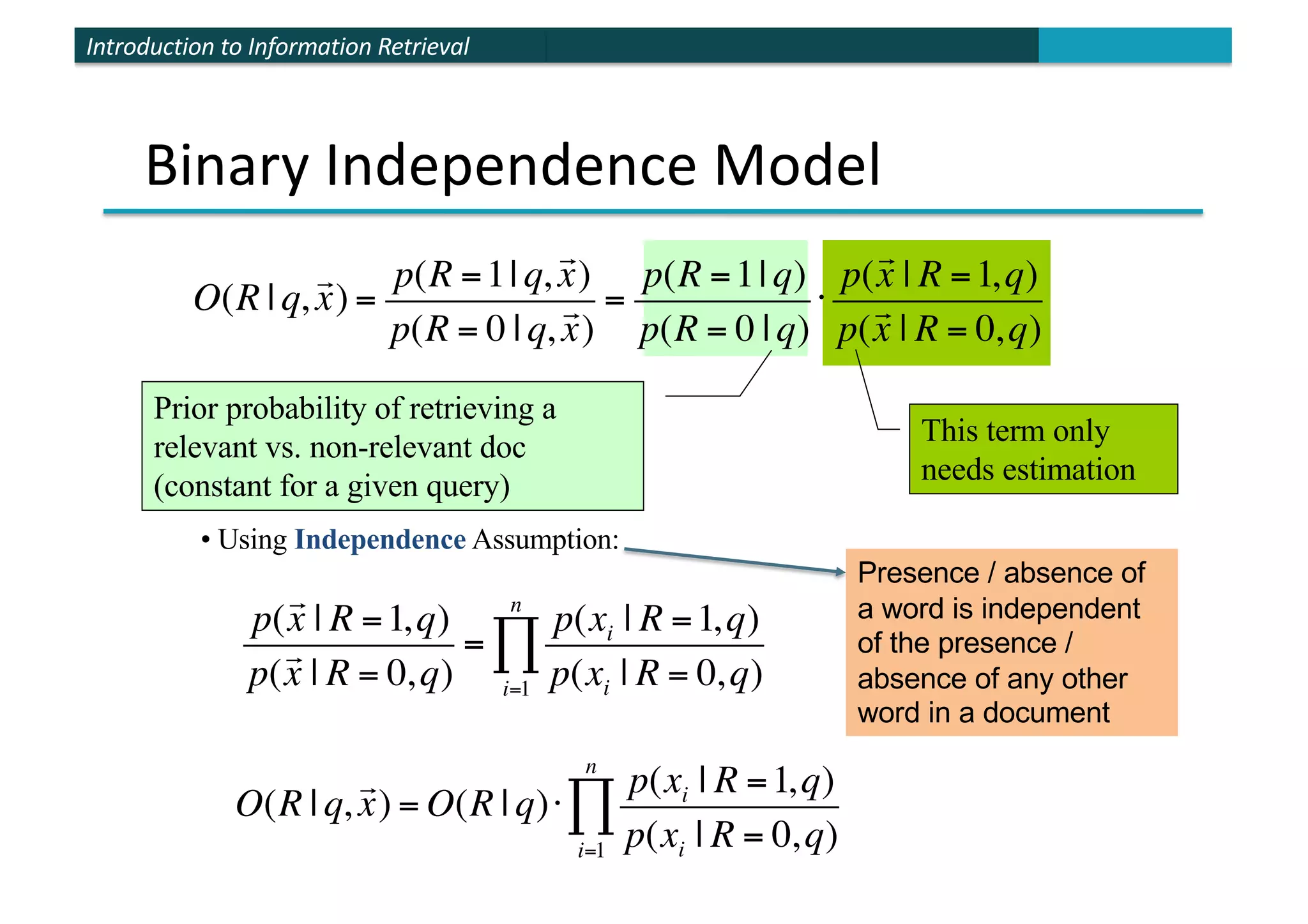

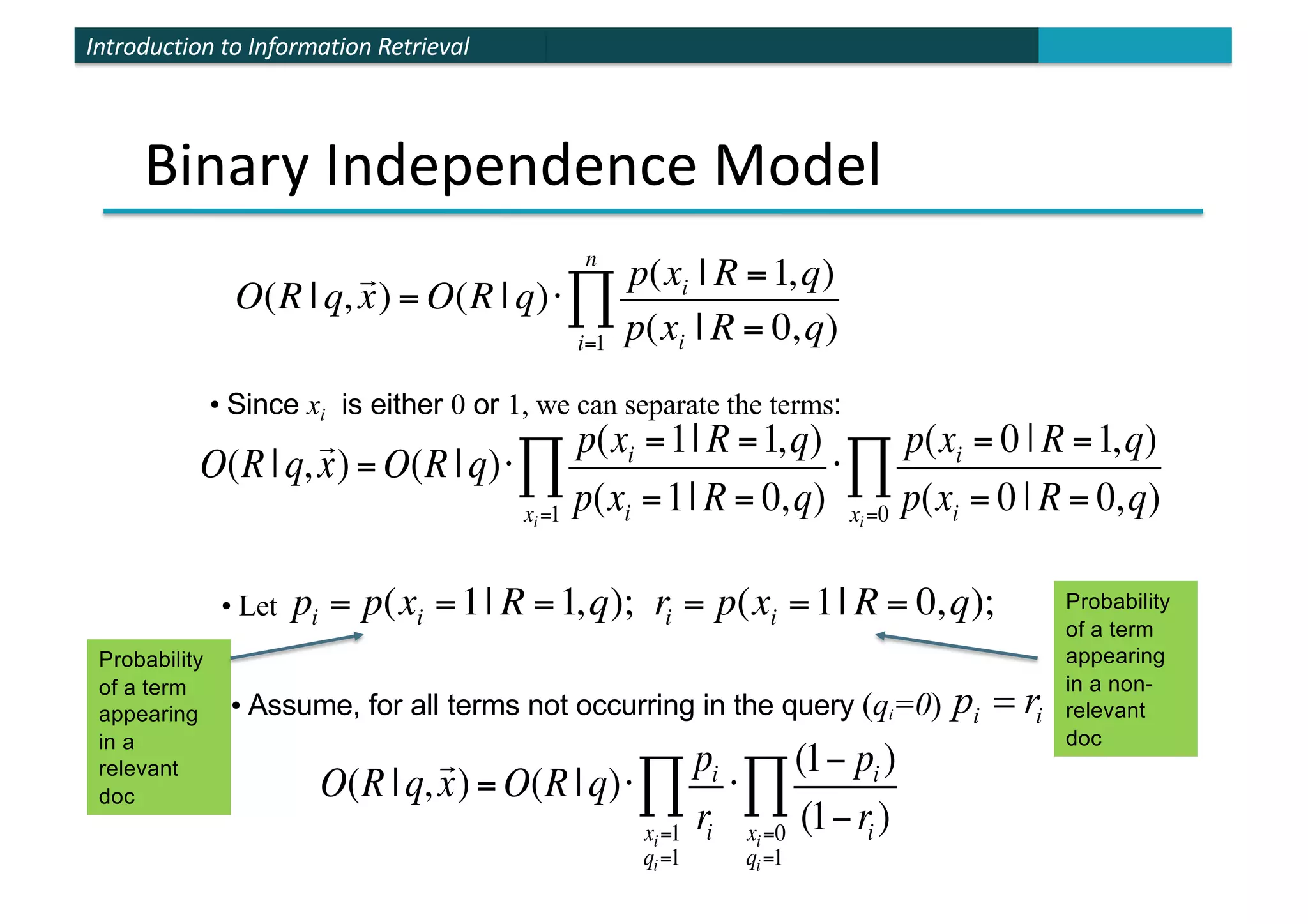

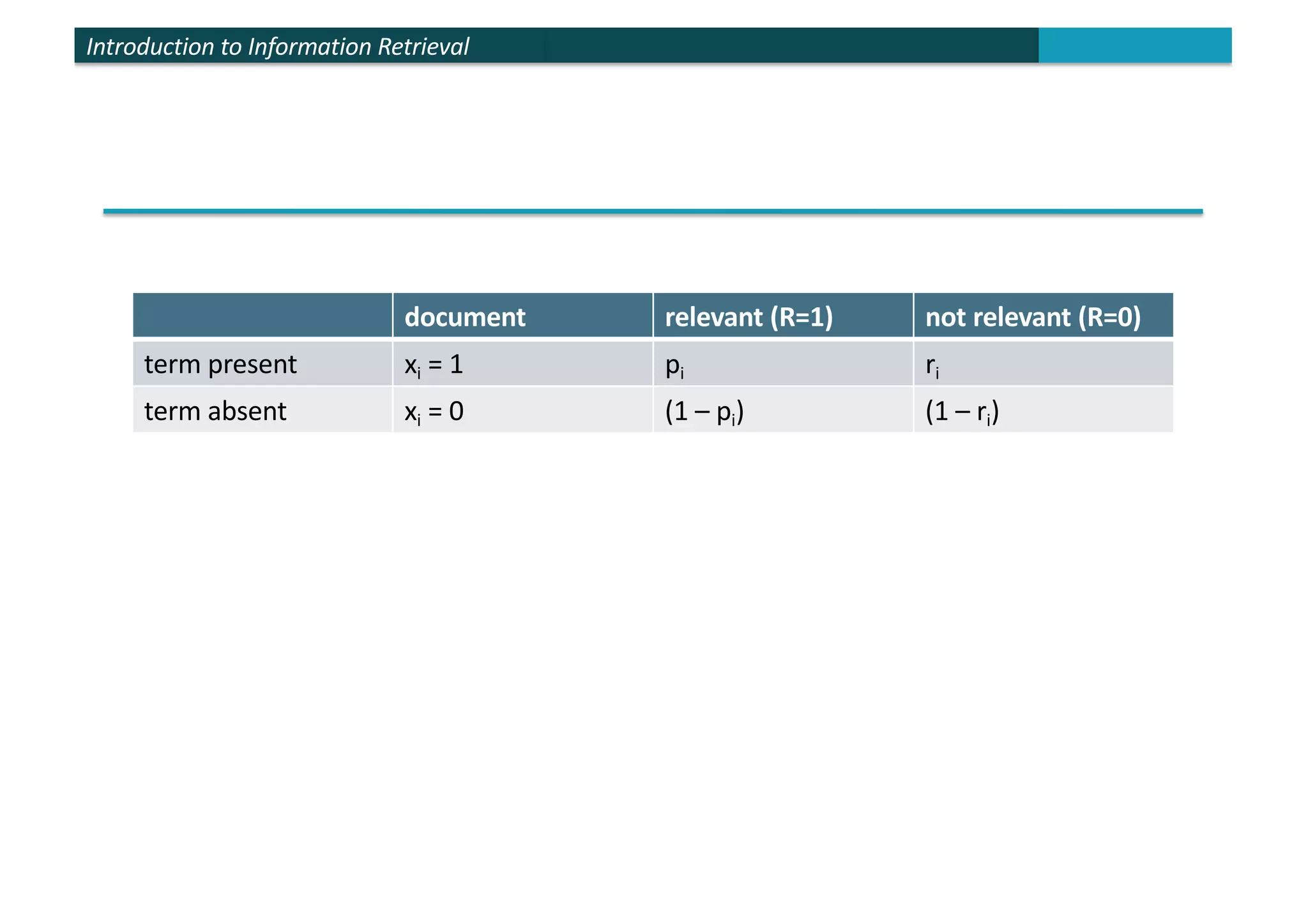

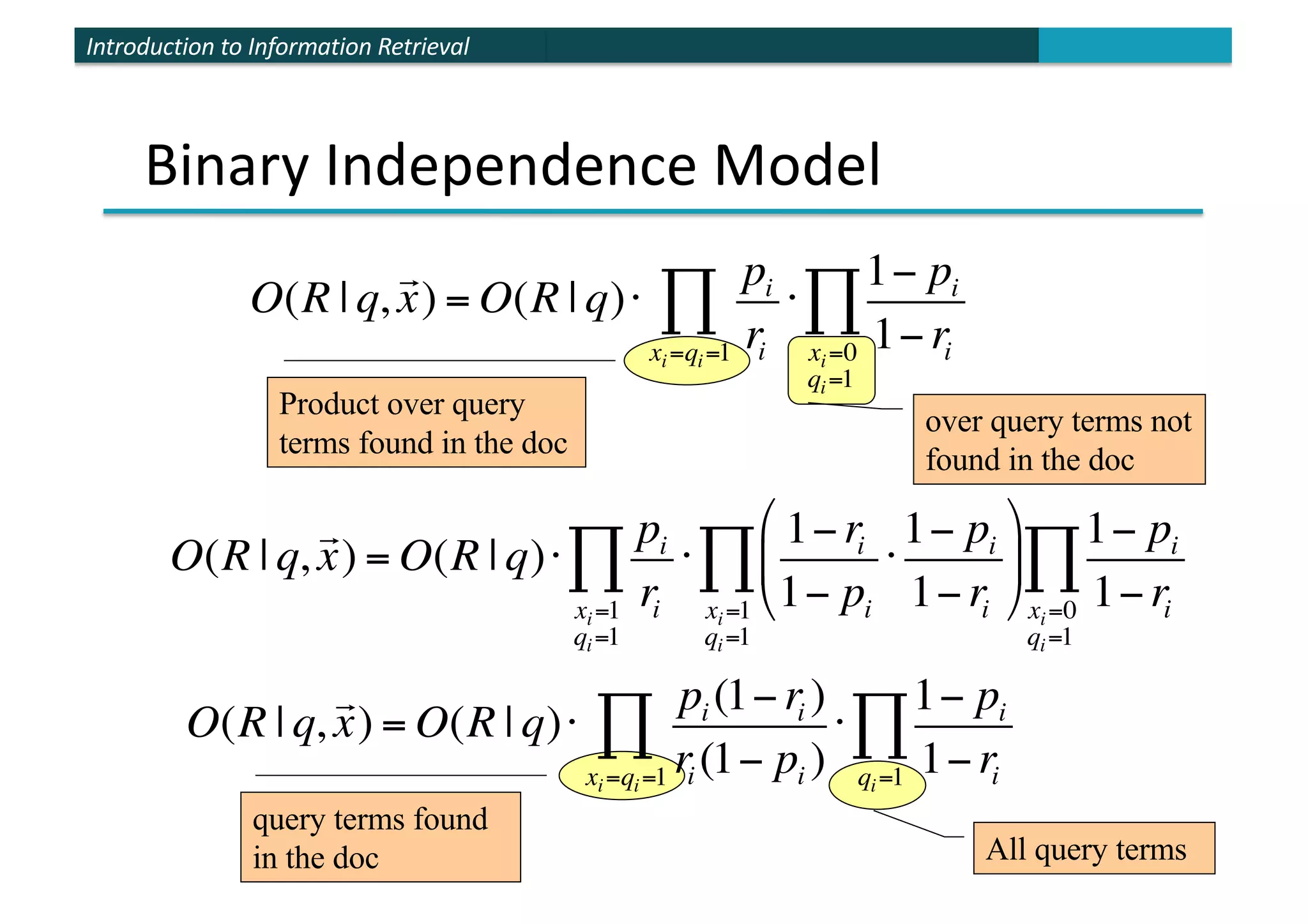

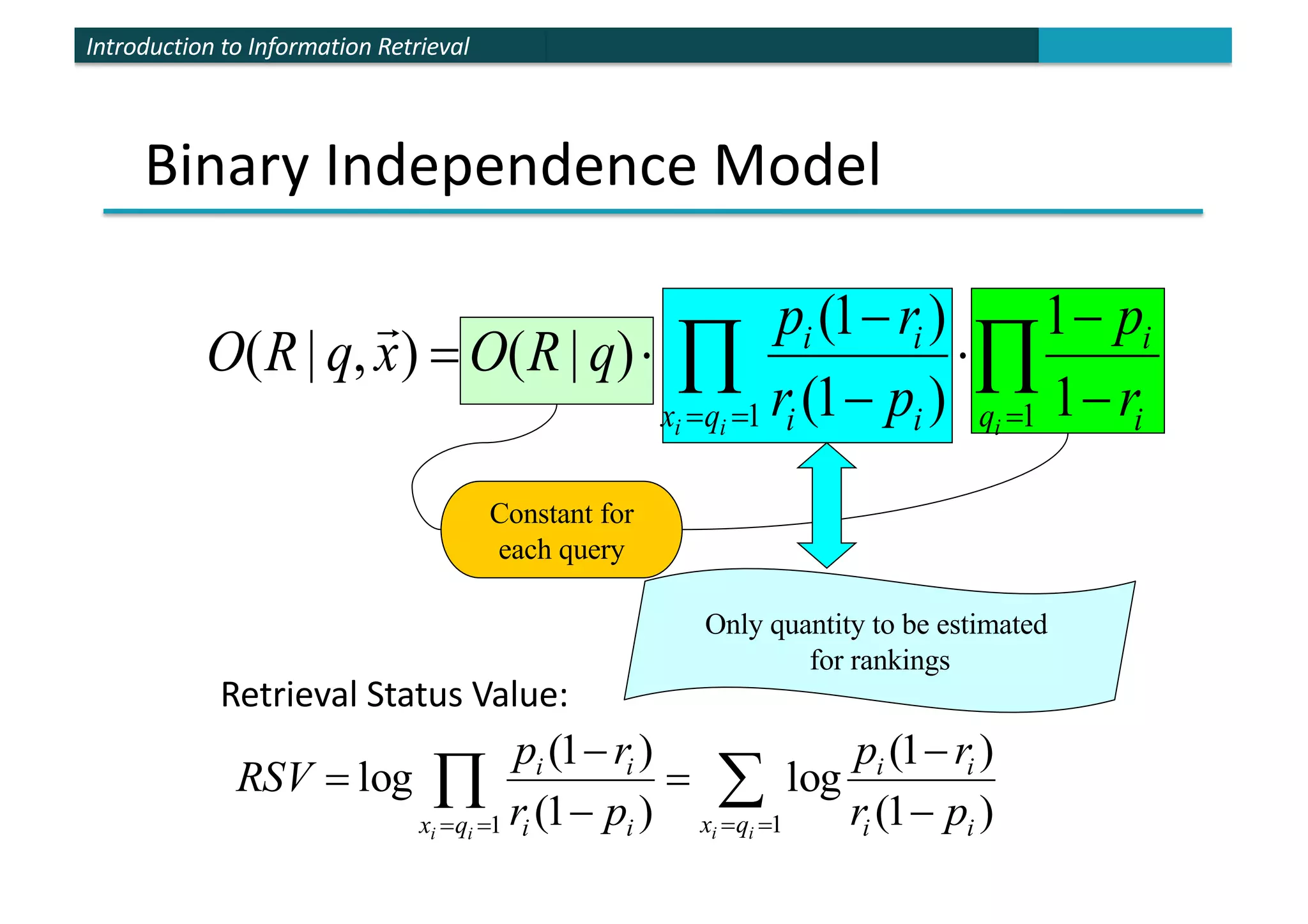

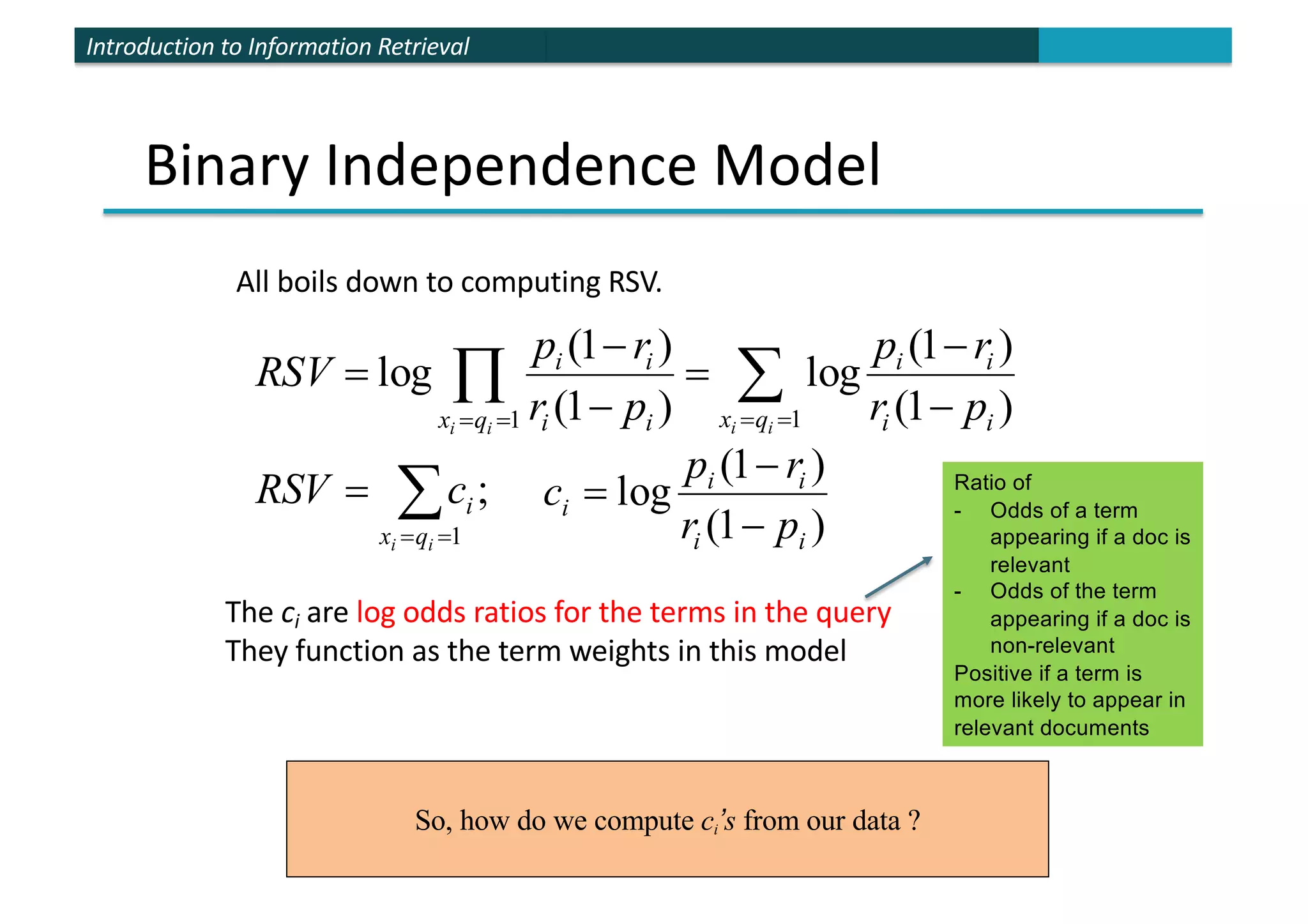

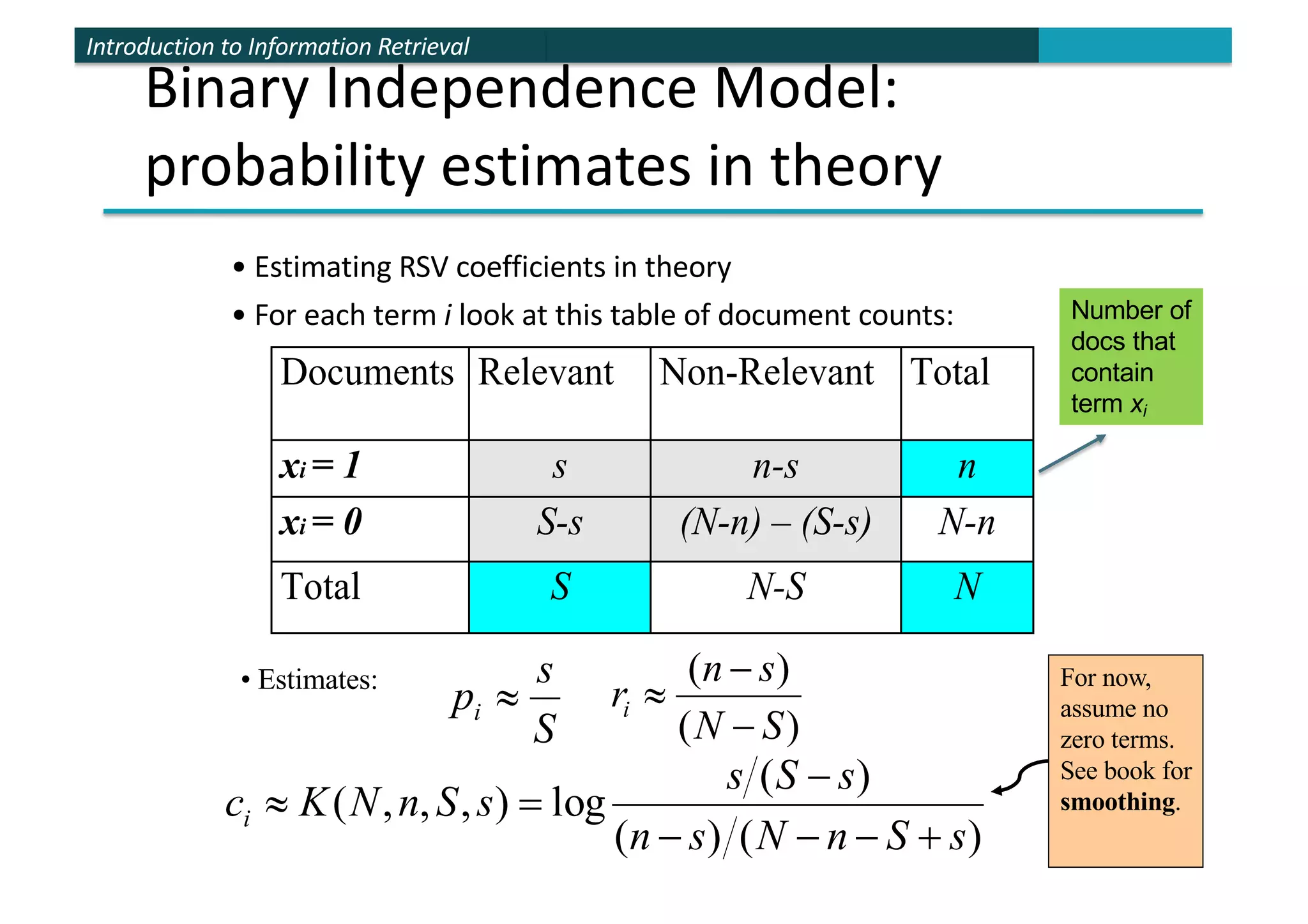

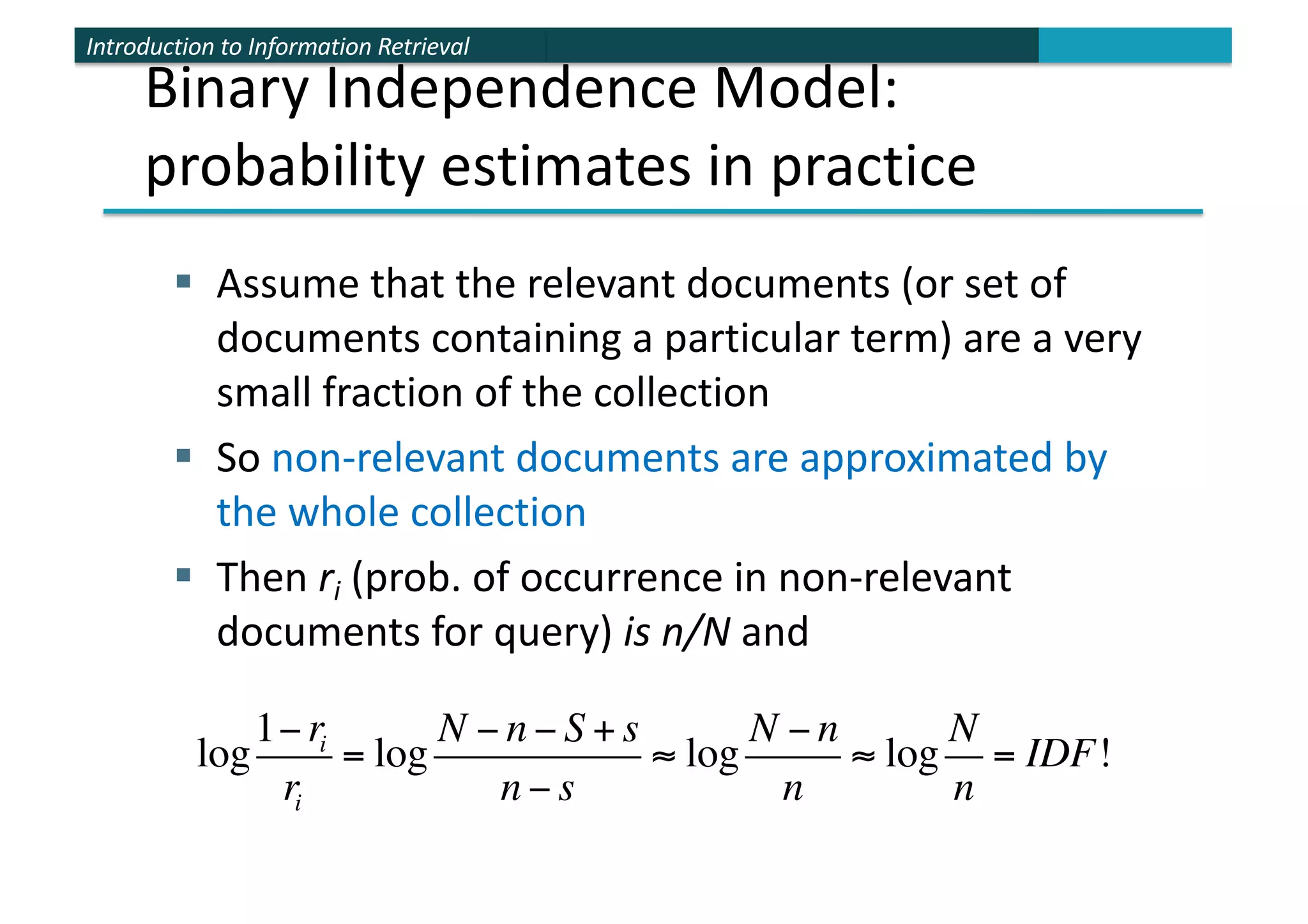

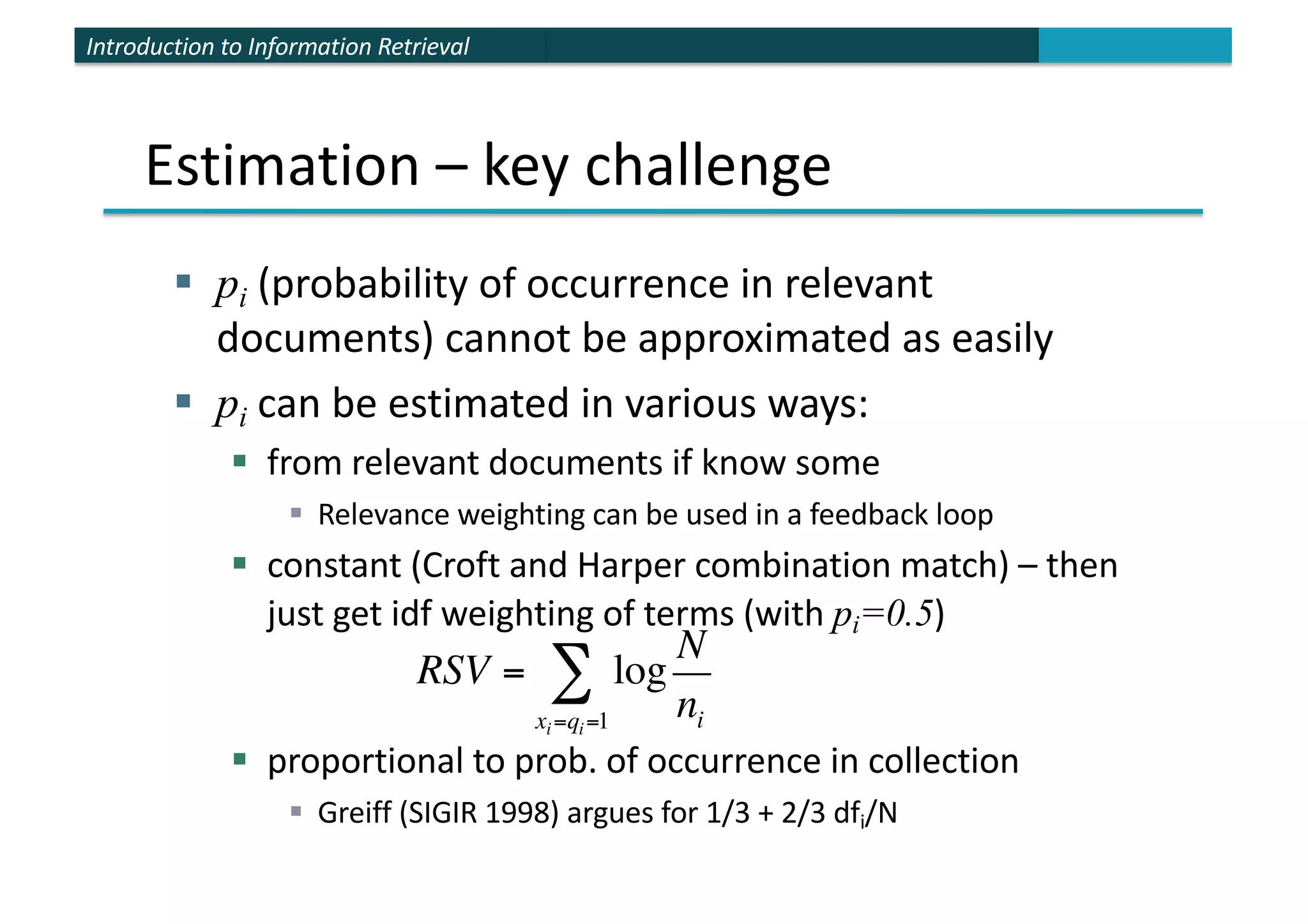

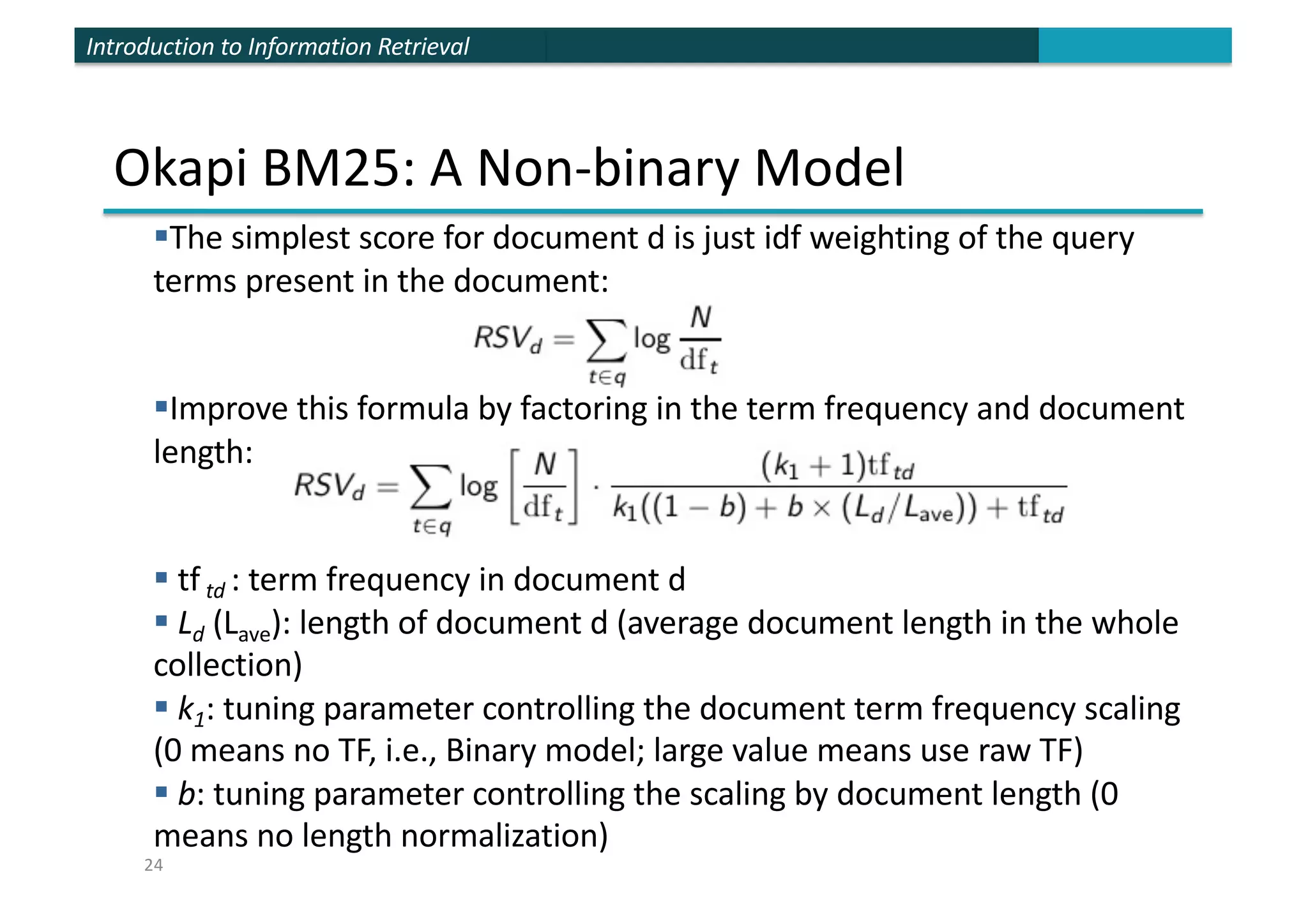

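This document introduces probabilistic information retrieval and the binary independence model (BIM). It discusses how probabilities can quantify the uncertainty inherent in information retrieval, such as whether a given document is relevant to a query. The binary independence model represents each document by which terms it contains, ignoring term frequency, and estimates the probability that the document is relevant from the terms it shares with the query. It assumes that terms occur independently of one another, which lets it estimate, for each term, the probability of appearing in relevant and in non-relevant documents. These per-term estimates are combined into a retrieval status value (RSV) for each document, and documents are ranked by that value.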
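
The scoring described in the summary can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the slides' own implementation: the function names (`estimate_u`, `term_weight`, `rank_documents`) are hypothetical, documents are plain sets of terms, the probability `p_t` of a term appearing in a relevant document is fixed at 0.5 because no relevance judgments are available, and the probability `u_t` of a term appearing in a non-relevant document is approximated from the whole collection with add-0.5 smoothing.

```python
import math


def estimate_u(docs, smoothing=0.5):
    """Estimate u_t = P(term present | non-relevant) from document frequencies.

    Assumption: almost all documents are non-relevant, so the whole
    collection stands in for the non-relevant set.
    """
    n = len(docs)
    vocab = set().union(*docs)
    return {
        t: (sum(t in d for d in docs) + smoothing) / (n + 2 * smoothing)
        for t in vocab
    }


def term_weight(p_t, u_t):
    """Log odds ratio contributed by one term shared by query and document."""
    return math.log(p_t * (1 - u_t) / (u_t * (1 - p_t)))


def rank_documents(query_terms, docs, p_t=0.5):
    """Return (rsv, doc_index) pairs sorted from highest to lowest RSV."""
    u = estimate_u(docs)
    scored = []
    for i, doc in enumerate(docs):
        shared = set(query_terms) & doc  # only matching terms contribute
        rsv = sum(term_weight(p_t, u[t]) for t in shared)
        scored.append((rsv, i))
    return sorted(scored, reverse=True)


# Toy collection: each document is the set of terms it contains.
docs = [
    {"probabilistic", "retrieval", "model"},
    {"binary", "independence", "model"},
    {"vector", "space", "model"},
    {"boolean", "retrieval"},
]
for rsv, i in rank_documents({"binary", "independence", "retrieval"}, docs):
    print(f"doc {i}: RSV = {rsv:.3f}")
```

Because of the independence assumption, the RSV is a plain sum of per-term weights, so rare query terms that a document contains raise its score the most. With relevance feedback, `p_t` could be re-estimated per term instead of being held at 0.5.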